Rose Yu

Demystifying Mergeability: Interpretable Properties to Predict Model Merging Success

Jan 29, 2026

Abstract:Model merging combines knowledge from separately fine-tuned models, yet success factors remain poorly understood. While recent work treats mergeability as an intrinsic property, we show with an architecture-agnostic framework that it fundamentally depends on both the merging method and the partner tasks. Using linear optimization over a set of interpretable pairwise metrics (e.g., gradient L2 distance), we uncover properties correlating with post-merge performance across four merging methods. We find substantial variation in success drivers (46.7% metric overlap; 55.3% sign agreement), revealing method-specific "fingerprints". Crucially, however, subspace overlap and gradient alignment metrics consistently emerge as foundational, method-agnostic prerequisites for compatibility. These findings provide a diagnostic foundation for understanding mergeability and motivate future fine-tuning strategies that explicitly encourage these properties.
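As a rough illustration of what a pairwise metric between two fine-tuned models could look like, the sketch below computes an L2 distance and a cosine similarity between two gradient vectors. The function names and toy vectors are hypothetical, and treating cosine similarity as a "gradient alignment" measure is an assumption made here; the abstract does not specify how the paper defines its metrics.

```python
import math

def l2_distance(g1, g2):
    """Euclidean distance between two flattened gradient vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(g1, g2)))

def cosine_similarity(g1, g2):
    """Cosine of the angle between two gradient vectors (assumed alignment proxy)."""
    dot = sum(a * b for a, b in zip(g1, g2))
    norm1 = math.sqrt(sum(a * a for a in g1))
    norm2 = math.sqrt(sum(b * b for b in g2))
    return dot / (norm1 * norm2)

# Toy gradients standing in for two separately fine-tuned models (hypothetical values).
grad_a = [3.0, 4.0]
grad_b = [4.0, 3.0]

distance = l2_distance(grad_a, grad_b)
alignment = cosine_similarity(grad_a, grad_b)
print(distance, alignment)
```

A small distance and an alignment close to 1 would indicate that the two models' gradients point in similar directions on this toy example.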

Guardian-regularized Safe Offline Reinforcement Learning for Smart Weaning of Mechanical Circulatory Devices

Nov 08, 2025

Abstract:We study the sequential decision-making problem for automated weaning of mechanical circulatory support (MCS) devices in cardiogenic shock patients. MCS devices are percutaneous micro-axial flow pumps that provide left ventricular unloading and forward blood flow, but current weaning strategies vary significantly across care teams and lack data-driven approaches. Offline reinforcement learning (RL) has proven to be successful in sequential decision-making tasks, but our setting presents challenges for training and evaluating traditional offline RL methods: prohibition of online patient interaction, highly uncertain circulatory dynamics due to concurrent treatments, and limited data availability. We developed an end-to-end machine learning framework with two key contributions: (1) Clinically-aware OOD-regularized Model-based Policy Optimization (CORMPO), a density-regularized offline RL algorithm for out-of-distribution suppression that also incorporates clinically-informed reward shaping, and (2) a Transformer-based probabilistic digital twin that models MCS circulatory dynamics for policy evaluation with rich physiological and clinical metrics. We prove that CORMPO achieves theoretical performance guarantees under mild assumptions. CORMPO attains 28% higher reward than the offline RL baselines and 82.6% higher scores in clinical metrics on real and synthetic datasets. Our approach offers a principled framework for safe offline policy learning in high-stakes medical applications where domain expertise and safety constraints are essential.

Symmetry in Neural Network Parameter Spaces

Jun 16, 2025

Abstract:Modern deep learning models are highly overparameterized, resulting in large sets of parameter configurations that yield the same outputs. A significant portion of this redundancy is explained by symmetries in the parameter space--transformations that leave the network function unchanged. These symmetries shape the loss landscape and constrain learning dynamics, offering a new lens for understanding optimization, generalization, and model complexity that complements existing theory of deep learning. This survey provides an overview of parameter space symmetry. We summarize existing literature, uncover connections between symmetry and learning theory, and identify gaps and opportunities in this emerging field.
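One well-known instance of such a symmetry is the positive rescaling of ReLU units: multiplying a hidden unit's incoming weights by a > 0 and dividing its outgoing weight by a leaves the network function unchanged. The sketch below checks this on a tiny two-layer network; the weights, input, and helper names are illustrative choices made here and are not taken from the survey.

```python
import math

def relu(z):
    return max(0.0, z)

def forward(W1, w2, x):
    """Two-layer ReLU network: f(x) = sum_j w2[j] * relu(W1[j] . x)."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    return sum(v * h for v, h in zip(w2, hidden))

def rescale(W1, w2, scales):
    """Scale each hidden unit's incoming weights by a > 0 and its outgoing weight by 1/a."""
    new_W1 = [[a * w for w in row] for row, a in zip(W1, scales)]
    new_w2 = [v / a for v, a in zip(w2, scales)]
    return new_W1, new_w2

# Illustrative parameters and input (hypothetical values).
W1 = [[0.5, -1.0], [2.0, 0.25], [-0.75, 1.5]]
w2 = [1.0, -2.0, 0.5]
x = [0.3, -0.8]

# Different parameters, same function value: relu(a * z) == a * relu(z) for a > 0.
W1_s, w2_s = rescale(W1, w2, scales=[2.0, 0.5, 10.0])
same_output = math.isclose(forward(W1, w2, x), forward(W1_s, w2_s, x),
                           rel_tol=1e-9, abs_tol=1e-12)
print(same_output)
```

The rescaled parameters differ from the originals in every layer, yet the output agrees up to floating-point error, which is the kind of redundancy the abstract describes.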

Understanding Mode Connectivity via Parameter Space Symmetry

May 29, 2025

Abstract:Neural network minima are often connected by curves along which train and test loss remain nearly constant, a phenomenon known as mode connectivity. While this property has enabled applications such as model merging and fine-tuning, its theoretical explanation remains unclear. We propose a new approach to exploring the connectedness of minima using parameter space symmetry. By linking the topology of symmetry groups to that of the minima, we derive the number of connected components of the minima of linear networks and show that skip connections reduce this number. We then examine when mode connectivity and linear mode connectivity hold or fail, using parameter symmetries which account for a significant part of the minimum. Finally, we provide explicit expressions for connecting curves in the minima induced by symmetry. Using the curvature of these curves, we derive conditions under which linear mode connectivity approximately holds. Our findings highlight the role of continuous symmetries in understanding the neural network loss landscape.

Discovering Symbolic Differential Equations with Symmetry Invariants

May 17, 2025

Abstract:Discovering symbolic differential equations from data uncovers fundamental dynamical laws underlying complex systems. However, existing methods often struggle with the vast search space of equations and may produce equations that violate known physical laws. In this work, we address these problems by introducing the concept of symmetry invariants in equation discovery. We leverage the fact that differential equations admitting a symmetry group can be expressed in terms of differential invariants of symmetry transformations. Thus, we propose to use these invariants as atomic entities in equation discovery, ensuring the discovered equations satisfy the specified symmetry. Our approach integrates seamlessly with existing equation discovery methods such as sparse regression and genetic programming, improving their accuracy and efficiency. We validate the proposed method through applications to various physical systems, such as fluid and reaction-diffusion systems, demonstrating its ability to recover parsimonious and interpretable equations that respect the laws of physics.

Improving Learning to Optimize Using Parameter Symmetries

Apr 21, 2025

Abstract:We analyze a learning-to-optimize (L2O) algorithm that exploits parameter space symmetry to enhance optimization efficiency. Prior work has shown that jointly learning symmetry transformations and local updates improves meta-optimizer performance. Supporting this, our theoretical analysis demonstrates that even without identifying the optimal group element, the method locally resembles Newton's method. We further provide an example where the algorithm provably learns the correct symmetry transformation during training. To empirically evaluate L2O with teleportation, we introduce a benchmark, analyze its success and failure cases, and show that enhancements like momentum further improve performance. Our results highlight the potential of leveraging neural network parameter space symmetry to advance meta-optimization.

AtlasD: Automatic Local Symmetry Discovery

Apr 15, 2025

Abstract:Existing symmetry discovery methods predominantly focus on global transformations across the entire system or space, but they fail to consider the symmetries in local neighborhoods. This may result in the reported symmetry group being a misrepresentation of the true symmetry. In this paper, we formalize the notion of local symmetry as atlas equivariance. Our proposed pipeline, automatic local symmetry discovery (AtlasD), recovers the local symmetries of a function by training local predictor networks and then learning a Lie group basis to which the predictors are equivariant. We demonstrate AtlasD is capable of discovering local symmetry groups with multiple connected components in top-quark tagging and partial differential equation experiments. The discovered local symmetry is shown to be a useful inductive bias that improves the performance of downstream tasks in climate segmentation and vision tasks.

Can LLM feedback enhance review quality? A randomized study of 20K reviews at ICLR 2025

Apr 13, 2025

Abstract:Peer review at AI conferences is stressed by rapidly rising submission volumes, leading to deteriorating review quality and increased author dissatisfaction. To address these issues, we developed Review Feedback Agent, a system leveraging multiple large language models (LLMs) to improve review clarity and actionability by providing automated feedback on vague comments, content misunderstandings, and unprofessional remarks to reviewers. Implemented at ICLR 2025 as a large randomized control study, our system provided optional feedback to more than 20,000 randomly selected reviews. To ensure high-quality feedback for reviewers at this scale, we also developed a suite of automated reliability tests powered by LLMs that acted as guardrails to ensure feedback quality, with feedback only being sent to reviewers if it passed all the tests. The results show that 27% of reviewers who received feedback updated their reviews, and over 12,000 feedback suggestions from the agent were incorporated by those reviewers. This suggests that many reviewers found the AI-generated feedback sufficiently helpful to merit updating their reviews. Incorporating AI feedback led to significantly longer reviews (an average increase of 80 words among those who updated after receiving feedback) and more informative reviews, as evaluated by blinded researchers. Moreover, reviewers who were selected to receive AI feedback were also more engaged during paper rebuttals, as seen in longer author-reviewer discussions. This work demonstrates that carefully designed LLM-generated review feedback can enhance peer review quality by making reviews more specific and actionable while increasing engagement between reviewers and authors. The Review Feedback Agent is publicly available at https://github.com/zou-group/review_feedback_agent.

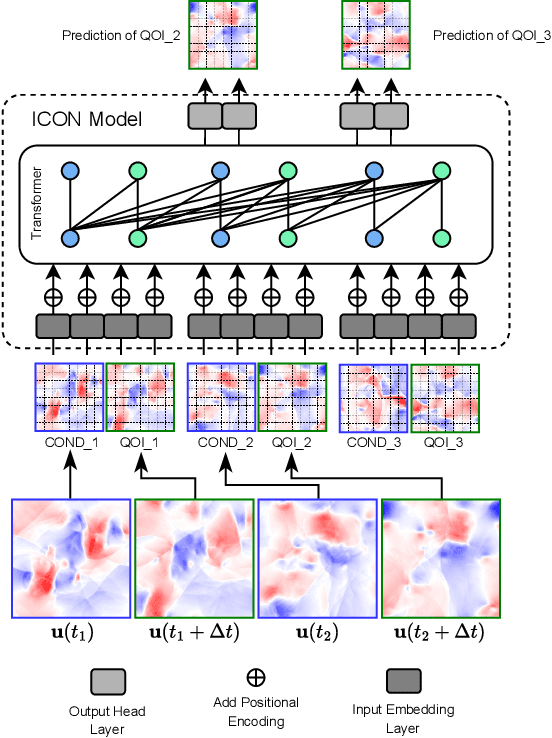

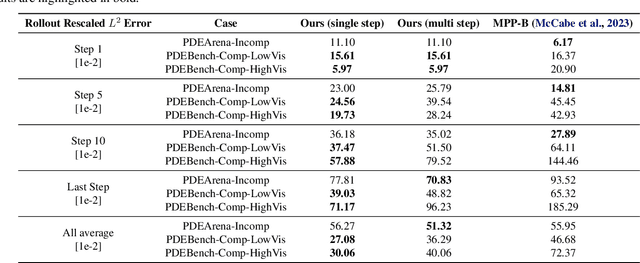

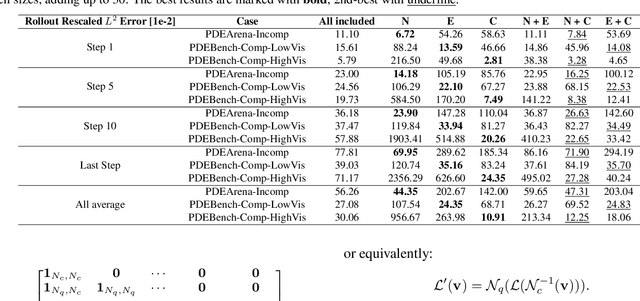

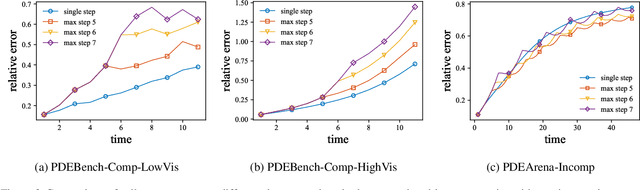

VICON: Vision In-Context Operator Networks for Multi-Physics Fluid Dynamics Prediction

Nov 25, 2024

Abstract:In-Context Operator Networks (ICONs) are models that learn operators across different types of PDEs using a few-shot, in-context approach. Although they show successful generalization to various PDEs, existing methods treat each data point as a single token, and suffer from computational inefficiency when processing dense data, limiting their application in higher spatial dimensions. In this work, we propose Vision In-Context Operator Networks (VICON), incorporating a vision transformer architecture that efficiently processes 2D functions through patch-wise operations. We evaluated our method on three fluid dynamics datasets, demonstrating both superior performance (reducing scaled $L^2$ error by $40\%$ and $61.6\%$ for two benchmark datasets for compressible flows, respectively) and computational efficiency (requiring only one-third of the inference time per frame) in long-term rollout predictions compared to the current state-of-the-art sequence-to-sequence model with fixed timestep prediction: Multiple Physics Pretraining (MPP). Compared to MPP, our method preserves the benefits of in-context operator learning, enabling flexible context formation when dealing with insufficient frame counts or varying timestep values.

Multi-Modal Forecaster: Jointly Predicting Time Series and Textual Data

Nov 21, 2024

Abstract:Current forecasting approaches are largely unimodal and ignore the rich textual data that often accompany the time series, owing to the lack of well-curated multimodal benchmark datasets. In this work, we develop TimeText Corpus (TTC), a carefully curated, time-aligned text and time series dataset for multimodal forecasting. Our dataset is composed of sequences of numbers and text aligned to timestamps, and includes data from two different domains: climate science and healthcare. Our data is a significant contribution to the rare selection of available multimodal datasets. We also propose the Hybrid Multi-Modal Forecaster (Hybrid-MMF), a multimodal LLM that jointly forecasts both text and time series data using shared embeddings. However, contrary to our expectations, our Hybrid-MMF model does not outperform existing baselines in our experiments. This negative result highlights the challenges inherent in multimodal forecasting. Our code and data are available at https://github.com/Rose-STL-Lab/Multimodal_Forecasting.
