Kai Kim

Multi-Modal Forecaster: Jointly Predicting Time Series and Textual Data

Nov 21, 2024Abstract:Current forecasting approaches are largely unimodal and ignore the rich textual data that often accompany the time series due to lack of well-curated multimodal benchmark dataset. In this work, we develop TimeText Corpus (TTC), a carefully curated, time-aligned text and time dataset for multimodal forecasting. Our dataset is composed of sequences of numbers and text aligned to timestamps, and includes data from two different domains: climate science and healthcare. Our data is a significant contribution to the rare selection of available multimodal datasets. We also propose the Hybrid Multi-Modal Forecaster (Hybrid-MMF), a multimodal LLM that jointly forecasts both text and time series data using shared embeddings. However, contrary to our expectations, our Hybrid-MMF model does not outperform existing baselines in our experiments. This negative result highlights the challenges inherent in multimodal forecasting. Our code and data are available at https://github.com/Rose-STL-Lab/Multimodal_ Forecasting.

Multi-Fidelity Residual Neural Processes for Scalable Surrogate Modeling

Feb 29, 2024

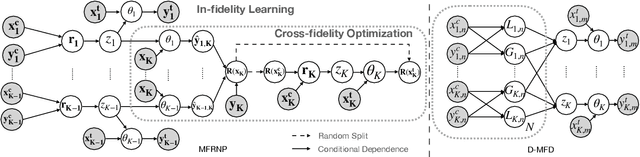

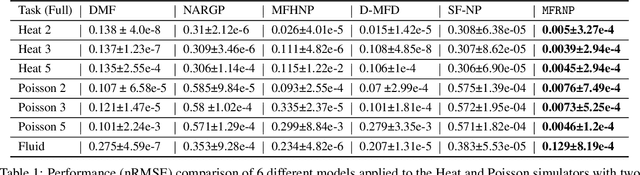

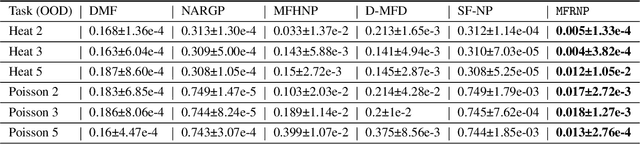

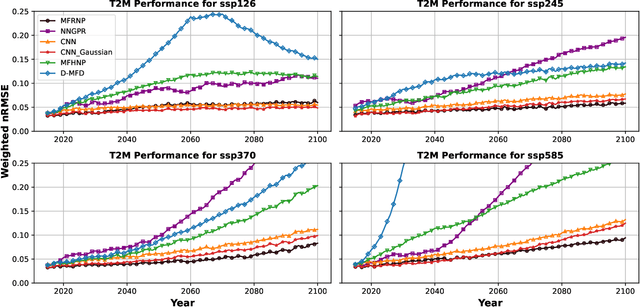

Abstract:Multi-fidelity surrogate modeling aims to learn an accurate surrogate at the highest fidelity level by combining data from multiple sources. Traditional methods relying on Gaussian processes can hardly scale to high-dimensional data. Deep learning approaches utilize neural network based encoders and decoders to improve scalability. These approaches share encoded representations across fidelities without including corresponding decoder parameters. At the highest fidelity, the representations are decoded with different parameters, making the shared information inherently inaccurate. This hinders inference performance, especially in out-of-distribution scenarios when the highest fidelity data has limited domain coverage. To address these limitations, we propose Multi-fidelity Residual Neural Processes (MFRNP), a novel multi-fidelity surrogate modeling framework. MFRNP optimizes lower fidelity decoders for accurate information sharing by aggregating lower fidelity surrogate outputs and models residual between the aggregation and ground truth on the highest fidelity. We show that MFRNP significantly outperforms current state-of-the-art in learning partial differential equations and a real-world climate modeling task.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge