James P. Crutchfield

Santa Fe Institute

Way More Than the Sum of Their Parts: From Statistical to Structural Mixtures

Jul 10, 2025

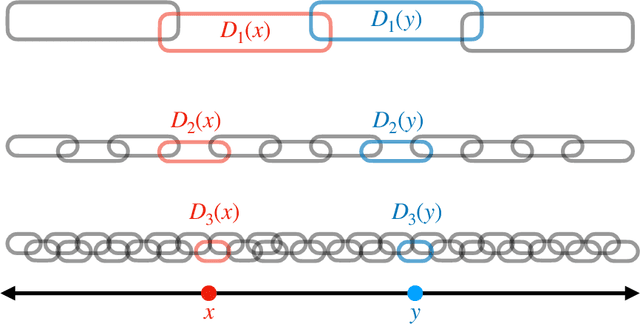

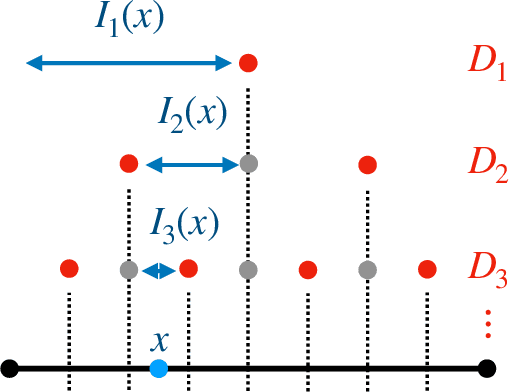

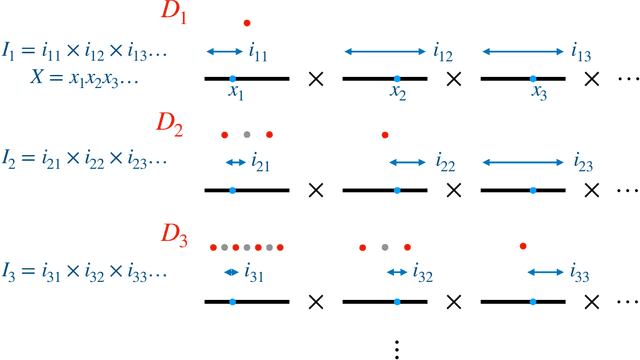

Abstract: We show that mixtures composed of multicomponent systems typically are much more structurally complex than the sum of their parts; sometimes, infinitely more complex. We contrast this with the more familiar notion of statistical mixtures, demonstrating how statistical mixtures miss key aspects of emergent hierarchical organization. This leads us to identify a new kind of structural complexity inherent in multicomponent systems and to draw out broad consequences for system ergodicity.

Learning Stochastic Thermodynamics Directly from Correlation and Trajectory-Fluctuation Currents

Apr 26, 2025

Abstract: Markedly increased computational power and data acquisition have led to growing interest in data-driven inverse dynamics problems. These seek to answer a fundamental question: What can we learn from time series measurements of a complex dynamical system? For small systems interacting with external environments, the effective dynamics are inherently stochastic, making it crucial to properly manage noise in data. Here, we explore this for systems obeying Langevin dynamics and, using currents, we construct a learning framework for stochastic modeling. Currents have recently gained increased attention for their role in bounding entropy production (EP) from thermodynamic uncertainty relations (TURs). We introduce a fundamental relationship between the cumulant currents there and standard machine-learning loss functions. Using this, we derive loss functions for several key thermodynamic functions directly from the system dynamics without the (common) intermediate step of deriving a TUR. These loss functions reproduce results derived both from TURs and other methods. More significantly, they open a path to discover new loss functions for previously inaccessible quantities. Notably, this includes access to per-trajectory entropy production, even if the observed system is driven far from its steady-state. We also consider higher order estimation. Our method is straightforward and unifies dynamic inference with recent approaches to entropy production estimation. Taken together, this reveals a deep connection between diffusion models in machine learning and entropy production estimation in stochastic thermodynamics.

On Principles of Emergent Organization

Nov 23, 2023

Abstract: After more than a century of concerted effort, physics still lacks basic principles of spontaneous self-organization. To appreciate why, we first state the problem, outline historical approaches, and survey the present state of the physics of self-organization. This frames the particular challenges arising from mathematical intractability and the resulting need for computational approaches, as well as those arising from a chronic failure to define structure. Then, an overview of two modern mathematical formulations of organization -- intrinsic computation and evolution operators -- lays out a way to overcome these challenges. Together, the vantage point they afford shows how to account for the emergence of structured states via a statistical mechanics of systems arbitrarily far from equilibrium. The result is a constructive path forward to principles of organization that builds on mathematical identification of structure.

Physics-Informed Representation Learning for Emergent Organization in Complex Dynamical Systems

Apr 25, 2023

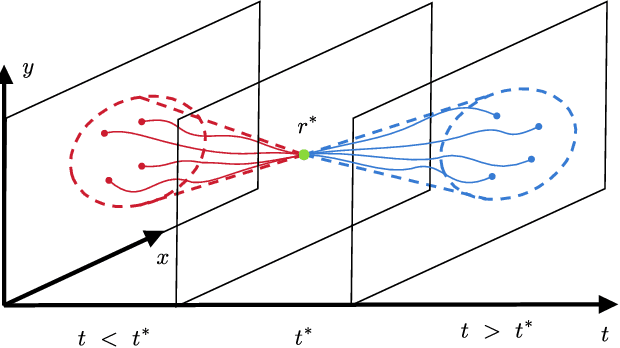

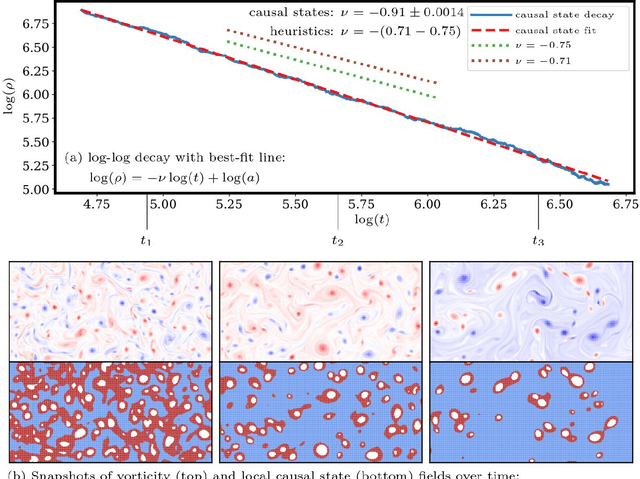

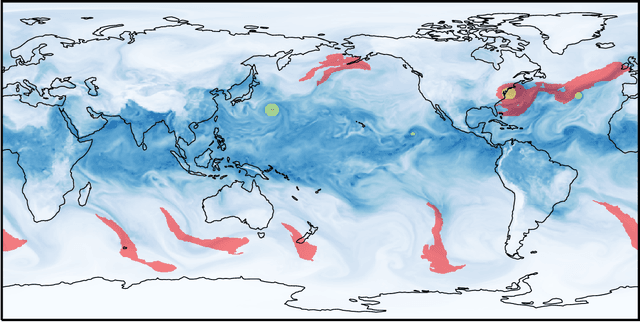

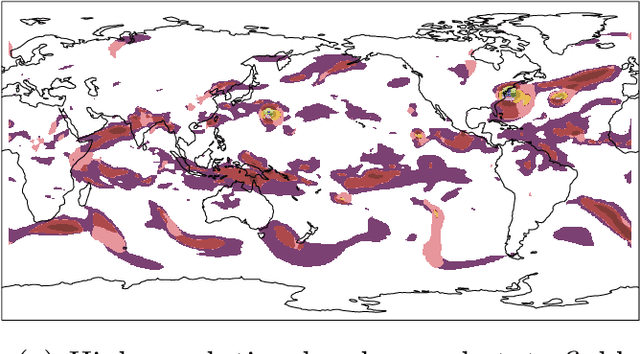

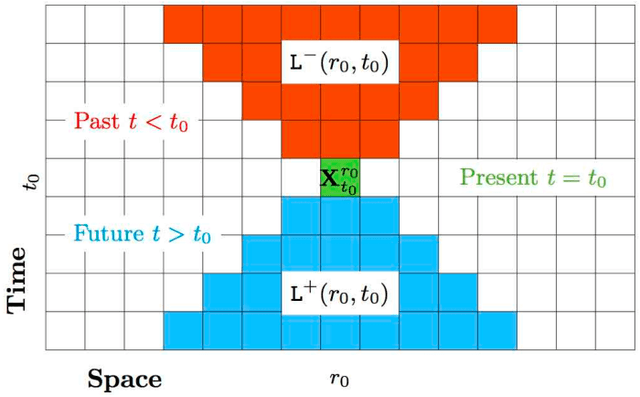

Abstract: Nonlinearly interacting system components often introduce instabilities that generate phenomena with new properties and at different space-time scales than the components. This is known as spontaneous self-organization and is ubiquitous in systems far from thermodynamic equilibrium. We introduce a theoretically-grounded framework for emergent organization that, via data-driven algorithms, is constructive in practice. Its building blocks are spacetime lightcones that capture how information propagates across a system through local interactions. We show that predictive equivalence classes of lightcones, local causal states, capture organized behaviors and coherent structures in complex spatiotemporal systems. Using our unsupervised physics-informed machine learning algorithm and a high-performance computing implementation, we demonstrate the applicability of the local causal states for real-world domain science problems. We show that the local causal states capture vortices and their power-law decay behavior in two-dimensional turbulence. We then show that known (hurricanes and atmospheric rivers) and novel extreme weather events can be identified on a pixel-level basis and tracked through time in high-resolution climate data.

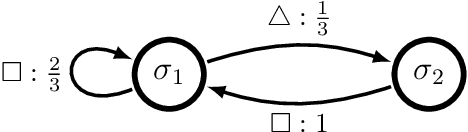

Complexity-calibrated Benchmarks for Machine Learning Reveal When Next-Generation Reservoir Computer Predictions Succeed and Mislead

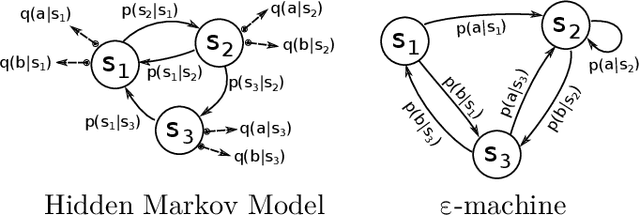

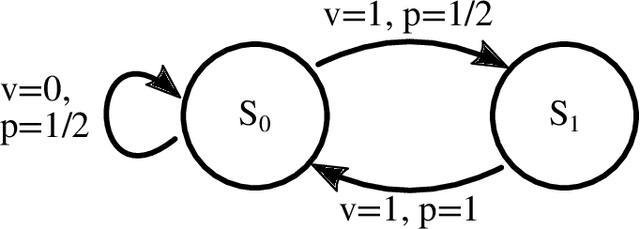

Mar 25, 2023

Abstract: Recurrent neural networks are used to forecast time series in finance, climate, language, and many other domains. Reservoir computers are a particularly easily trainable form of recurrent neural network. Recently, a "next-generation" reservoir computer was introduced in which the memory trace involves only a finite number of previous symbols. We explore the inherent limitations of finite-past memory traces in this intriguing proposal. A lower bound from Fano's inequality shows that, on highly non-Markovian processes generated by large probabilistic state machines, next-generation reservoir computers with reasonably long memory traces have an error probability that is at least ~60% higher than the minimal attainable error probability in predicting the next observation. More generally, it appears that popular recurrent neural networks fall far short of optimally predicting such complex processes. These results highlight the need for a new generation of optimized recurrent neural network architectures. Alongside this finding, we present concentration-of-measure results for randomly-generated but complex processes. One conclusion is that large probabilistic state machines -- specifically, large $\epsilon$-machines -- are key to generating challenging and structurally-unbiased stimuli for ground-truthing recurrent neural network architectures.
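To give a feel for the kind of bound the abstract mentions, here is a minimal sketch of Fano's inequality in the simplest (binary-alphabet) case, where it reduces to $H(X|Y) \le H_b(P_e)$. The function names `binary_entropy` and `fano_error_lower_bound` are illustrative, and the paper's actual bound for large alphabets and finite memory traces is not reproduced here.

```python
import math

def binary_entropy(p):
    """Binary entropy H_b(p) in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)

def fano_error_lower_bound(cond_entropy_bits, tol=1e-12):
    """Smallest error probability P_e in [0, 1/2] with H_b(P_e) >= H(X|Y).

    Binary-alphabet Fano: H(X|Y) <= H_b(P_e), so P_e >= H_b^{-1}(H(X|Y)).
    H_b is increasing on [0, 1/2], so bisection finds the inverse.
    """
    if not 0.0 <= cond_entropy_bits <= 1.0:
        raise ValueError("conditional entropy must lie in [0, 1] bits")
    lo, hi = 0.0, 0.5
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if binary_entropy(mid) < cond_entropy_bits:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    # Hypothetical residual uncertainty of 0.5 bits about the next symbol.
    print(fano_error_lower_bound(0.5))
```

The bound says that any predictor left with a given conditional entropy about the next symbol must make errors at least this often, whatever its architecture.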

Exploring Predictive States via Cantor Embeddings and Wasserstein Distance

Jun 09, 2022

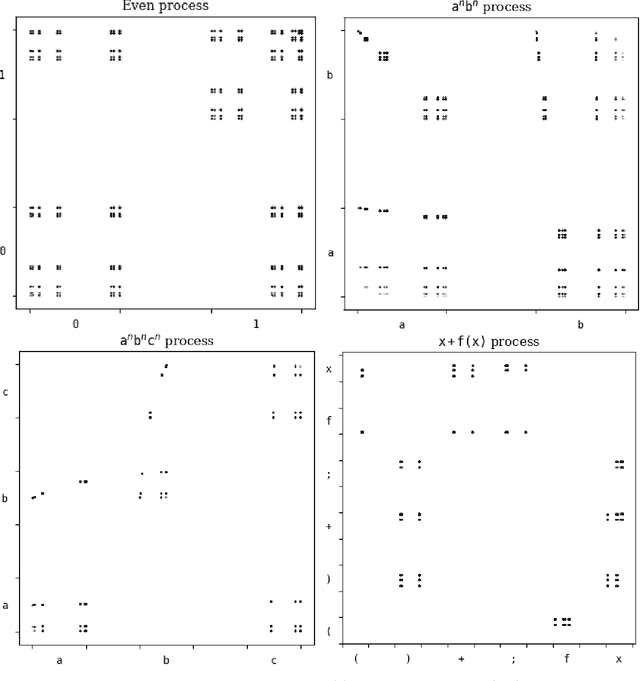

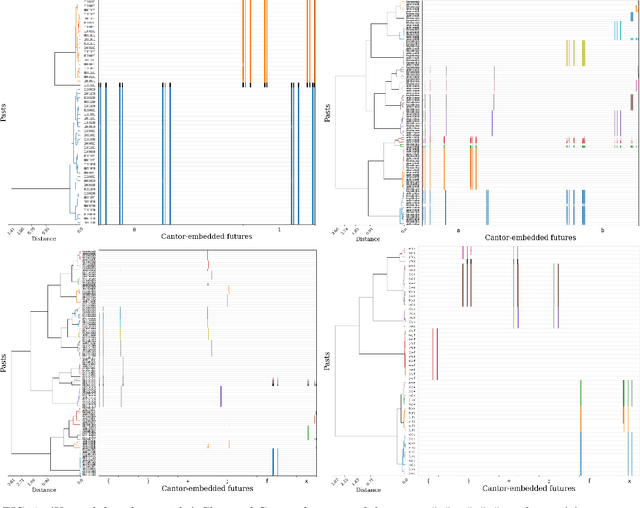

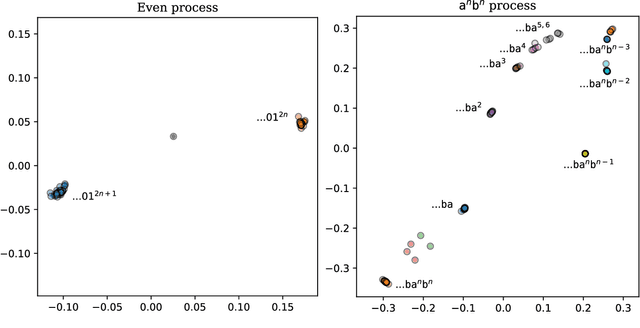

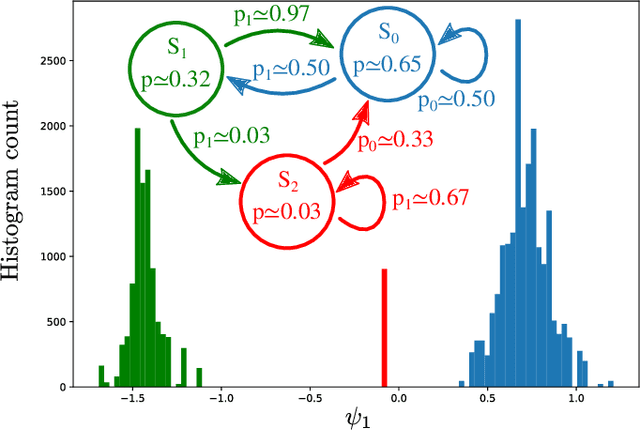

Abstract: Predictive states for stochastic processes are a nonparametric and interpretable construct with relevance across a multitude of modeling paradigms. Recent progress on the self-supervised reconstruction of predictive states from time-series data focused on the use of reproducing kernel Hilbert spaces. Here, we examine how Wasserstein distances may be used to detect predictive equivalences in symbolic data. We compute Wasserstein distances between distributions over sequences ("predictions"), using a finite-dimensional embedding of sequences based on the Cantor set for the underlying geometry. We show that exploratory data analysis using the resulting geometry via hierarchical clustering and dimension reduction provides insight into the temporal structure of processes ranging from the relatively simple (e.g., finite-state hidden Markov models) to the very complex (e.g., infinite-state indexed grammars).
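As a rough illustration of the idea, the sketch below embeds binary words as points of the middle-thirds Cantor set and compares two distributions over words with the one-dimensional Wasserstein-1 distance. It is a toy under stated assumptions: the one-dimensional embedding, the binary alphabet, and the names `cantor_embed` and `wasserstein_1d` are choices made here, not the paper's construction.

```python
def cantor_embed(word):
    """Map a binary word such as '0110' to a point of the Cantor set.

    Symbol k (1-indexed) contributes 2*x_k / 3**k, so words sharing a long
    prefix land close together.
    """
    return sum(2 * int(s) / 3 ** (k + 1) for k, s in enumerate(word))

def wasserstein_1d(p, q):
    """Wasserstein-1 distance between two discrete distributions on the line.

    p and q map points (floats) to probabilities. In one dimension W1 equals
    the integral of |CDF_p - CDF_q|.
    """
    points = sorted(set(p) | set(q))
    cdf_p = cdf_q = total = 0.0
    for a, b in zip(points, points[1:]):
        cdf_p += p.get(a, 0.0)
        cdf_q += q.get(a, 0.0)
        total += abs(cdf_p - cdf_q) * (b - a)
    return total

def embed_distribution(word_probs):
    """Push a distribution over words forward to the embedded points."""
    out = {}
    for word, prob in word_probs.items():
        x = cantor_embed(word)
        out[x] = out.get(x, 0.0) + prob
    return out

if __name__ == "__main__":
    # Two hypothetical "predictions": distributions over length-2 futures.
    pred_a = embed_distribution({"00": 0.5, "01": 0.5})
    pred_b = embed_distribution({"10": 0.5, "11": 0.5})
    print(wasserstein_1d(pred_a, pred_b))
```

Predictions that differ only in late symbols end up close in this metric, while predictions that differ in the first symbol are far apart, which is what makes clustering on these distances informative about predictive equivalence.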

Topology, Convergence, and Reconstruction of Predictive States

Sep 19, 2021

Abstract: Predictive equivalence in discrete stochastic processes has been applied with great success to identify randomness and structure in statistical physics and chaotic dynamical systems and to infer hidden Markov models. We examine the conditions under which predictive states can be reliably reconstructed from time-series data, showing that convergence of predictive states can be achieved from empirical samples in the weak topology of measures. Moreover, predictive states may be represented in Hilbert spaces that replicate the weak topology. We mathematically explain how these representations are particularly beneficial when reconstructing high-memory processes and connect them to reproducing kernel Hilbert spaces.

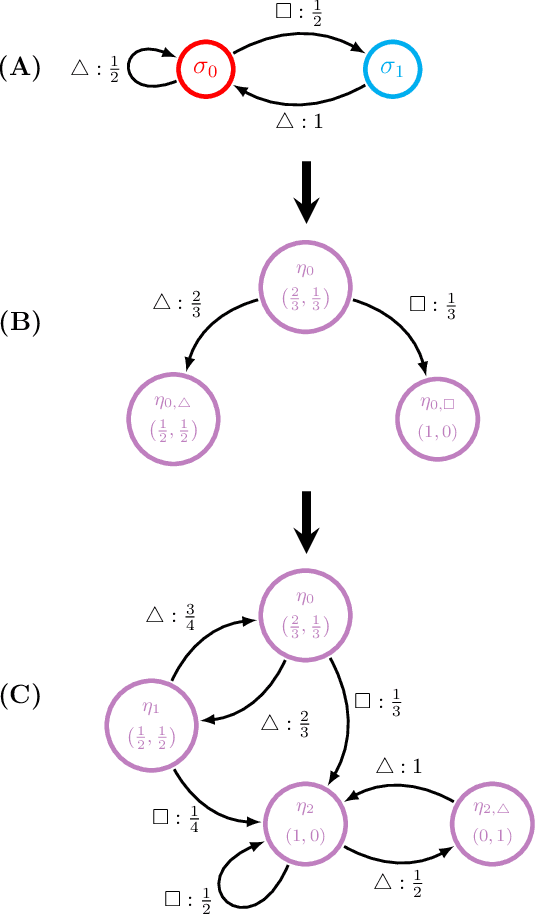

Discovering Causal Structure with Reproducing-Kernel Hilbert Space $\epsilon$-Machines

Nov 23, 2020

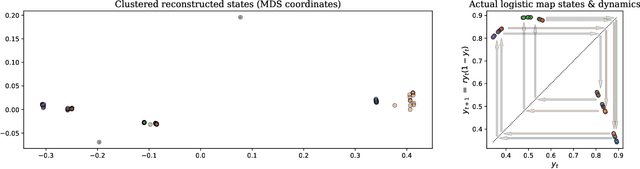

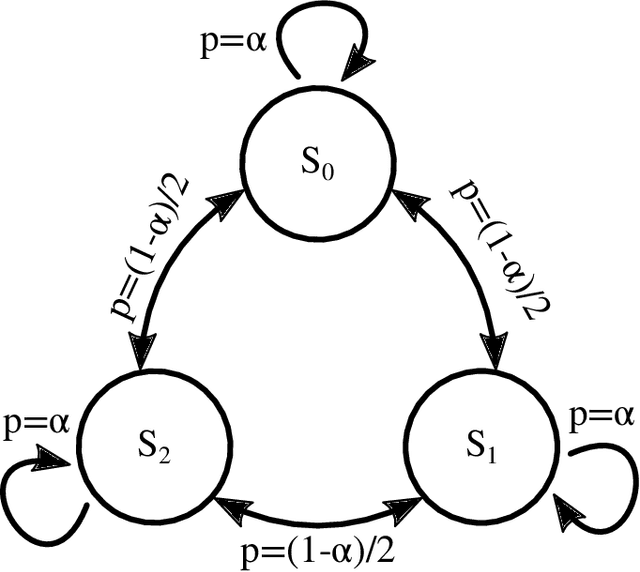

Abstract: We merge computational mechanics' definition of causal states (predictively-equivalent histories) with reproducing-kernel Hilbert space (RKHS) representation inference. The result is a widely-applicable method that infers causal structure directly from observations of a system's behaviors whether they are over discrete or continuous events or time. A structural representation -- a finite- or infinite-state kernel $\epsilon$-machine -- is extracted by a reduced-dimension transform that gives an efficient representation of causal states and their topology. In this way, the system dynamics are represented by a stochastic (ordinary or partial) differential equation that acts on causal states. We introduce an algorithm to estimate the associated evolution operator. Paralleling the Fokker-Planck equation, it efficiently evolves causal-state distributions and makes predictions in the original data space via an RKHS functional mapping. We demonstrate these techniques, together with their predictive abilities, on discrete-time, discrete-value infinite Markov-order processes generated by finite-state hidden Markov models with (i) finite or (ii) uncountably-infinite causal states and (iii) a continuous-time, continuous-value process generated by a thermally-driven chaotic flow. The method robustly estimates causal structure in the presence of varying external and measurement noise levels.

Spacetime Autoencoders Using Local Causal States

Oct 12, 2020

Abstract: Local causal states are latent representations that capture organized pattern and structure in complex spatiotemporal systems. We expand their functionality, framing them as spacetime autoencoders. Previously, they were only considered as maps from observable spacetime fields to latent local causal state fields. Here, we show that there is a stochastic decoding that maps back from the latent fields to observable fields. Furthermore, their Markovian properties define a stochastic dynamic in the latent space. Combined with stochastic decoding, this gives a new method for forecasting spacetime fields.

Shannon Entropy Rate of Hidden Markov Processes

Aug 29, 2020

Abstract: Hidden Markov chains are widely applied statistical models of stochastic processes, from fundamental physics and chemistry to finance, health, and artificial intelligence. The hidden Markov processes they generate are notoriously complicated, however, even if the chain is finite state: no finite expression for their Shannon entropy rate exists, as the set of their predictive features is generically infinite. As such, to date one cannot make general statements about how random they are nor how structured. Here, we address the first part of this challenge by showing how to efficiently and accurately calculate their entropy rates. We also show how this method gives the minimal set of infinite predictive features. A sequel addresses the challenge's second part on structure.
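One standard way to approximate such an entropy rate numerically is to track the observer's belief over hidden states and average the next-symbol entropy along a sampled trajectory. The sketch below does this for the Golden Mean process, chosen here only because its entropy rate (2/3 bit per symbol) is known in closed form. It is not presented as the paper's algorithm; the function name, the example matrices, and the sample size are all assumptions.

```python
import math
import random

def entropy_rate_estimate(T, belief, n_steps=20000, seed=0):
    """Monte Carlo estimate of an HMM's entropy rate in bits per symbol.

    T[x][i][j] is the probability of emitting symbol x while moving from
    hidden state i to hidden state j. `belief` is the initial distribution
    over hidden states (here, the stationary distribution).
    """
    rng = random.Random(seed)
    n = len(belief)
    total = 0.0
    for _ in range(n_steps):
        # Unnormalized updated belief for each candidate next symbol.
        nxt = [[sum(belief[i] * Tx[i][j] for i in range(n)) for j in range(n)]
               for Tx in T]
        probs = [sum(v) for v in nxt]  # P(next symbol | current belief)
        total += -sum(p * math.log2(p) for p in probs if p > 0)
        # Sample the next symbol and condition the belief on it.
        x = rng.choices(range(len(T)), weights=probs)[0]
        belief = [v / probs[x] for v in nxt[x]]
    return total / n_steps

# Golden Mean process: state A emits 0 or 1 with equal probability,
# state B (reached after a 1) must emit 0 and return to A.
T_GOLDEN = [
    [[0.5, 0.0], [1.0, 0.0]],  # symbol 0
    [[0.0, 0.5], [0.0, 0.0]],  # symbol 1
]

if __name__ == "__main__":
    print(entropy_rate_estimate(T_GOLDEN, [2 / 3, 1 / 3]))
```

For this example the belief collapses onto a single hidden state after one observation; for generic hidden Markov chains the beliefs visited form an infinite set, which is the difficulty the abstract describes.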
