Victor Lee

Concorde: Fast and Accurate CPU Performance Modeling with Compositional Analytical-ML Fusion

Mar 29, 2025Abstract:Cycle-level simulators such as gem5 are widely used in microarchitecture design, but they are prohibitively slow for large-scale design space explorations. We present Concorde, a new methodology for learning fast and accurate performance models of microarchitectures. Unlike existing simulators and learning approaches that emulate each instruction, Concorde predicts the behavior of a program based on compact performance distributions that capture the impact of different microarchitectural components. It derives these performance distributions using simple analytical models that estimate bounds on performance induced by each microarchitectural component, providing a simple yet rich representation of a program's performance characteristics across a large space of microarchitectural parameters. Experiments show that Concorde is more than five orders of magnitude faster than a reference cycle-level simulator, with about 2% average Cycles-Per-Instruction (CPI) prediction error across a range of SPEC, open-source, and proprietary benchmarks. This enables rapid design-space exploration and performance sensitivity analyses that are currently infeasible, e.g., in about an hour, we conducted a first-of-its-kind fine-grained performance attribution to different microarchitectural components across a diverse set of programs, requiring nearly 150 million CPI evaluations.

Alternately Optimized Graph Neural Networks

Jun 08, 2022

Abstract:Graph Neural Networks (GNNs) have demonstrated powerful representation capability in numerous graph-based tasks. Specifically, the decoupled structures of GNNs such as APPNP become popular due to their simplicity and performance advantages. However, the end-to-end training of these GNNs makes them inefficient in computation and memory consumption. In order to deal with these limitations, in this work, we propose an alternating optimization framework for graph neural networks that does not require end-to-end training. Extensive experiments under different settings demonstrate that the performance of the proposed algorithm is comparable to existing state-of-the-art algorithms but has significantly better computation and memory efficiency. Additionally, we show that our framework can be taken advantage to enhance existing decoupled GNNs.

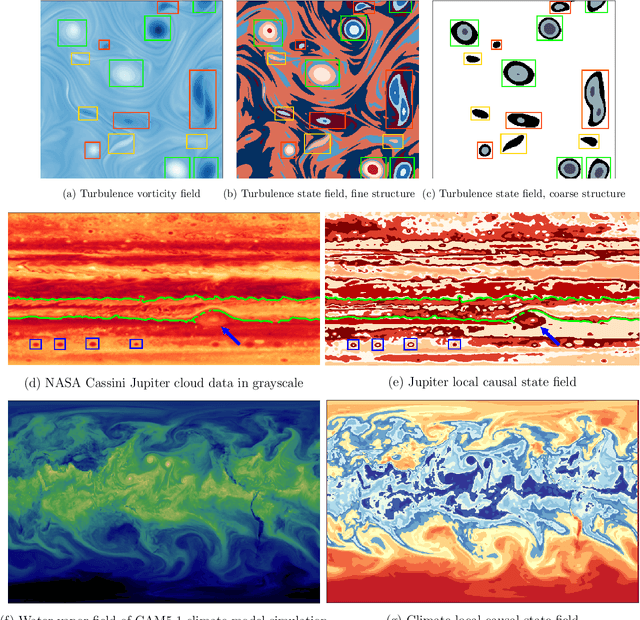

DisCo: Physics-Based Unsupervised Discovery of Coherent Structures in Spatiotemporal Systems

Sep 25, 2019

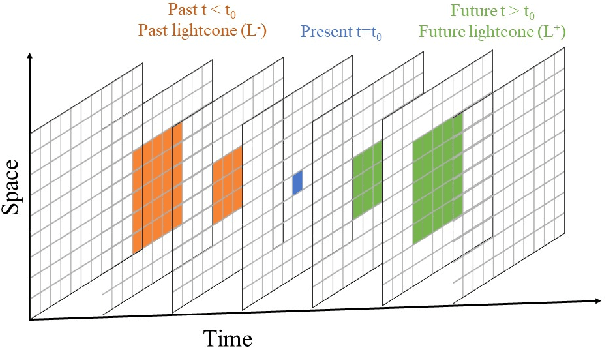

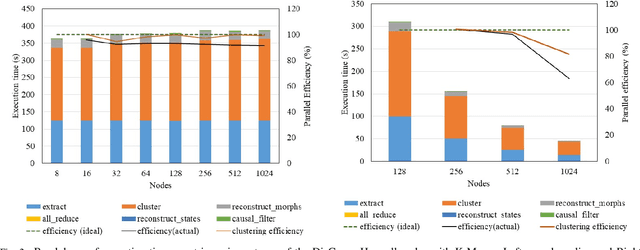

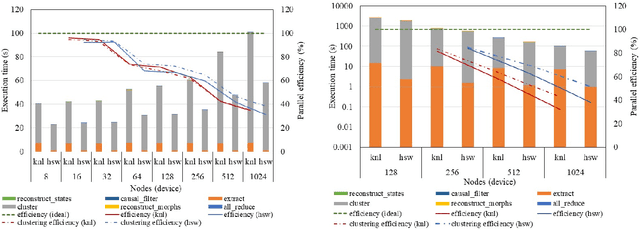

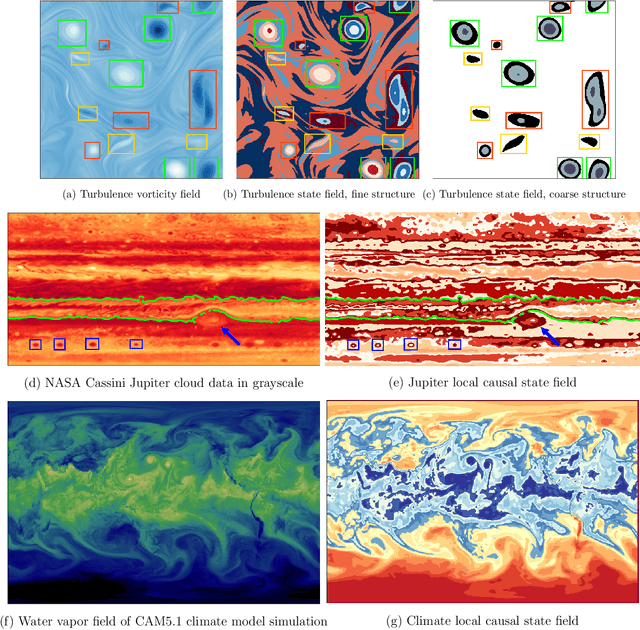

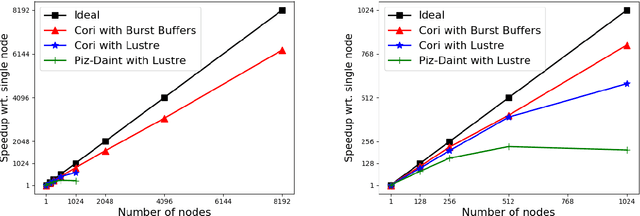

Abstract:Extracting actionable insight from complex unlabeled scientific data is an open challenge and key to unlocking data-driven discovery in science. Complementary and alternative to supervised machine learning approaches, unsupervised physics-based methods based on behavior-driven theories hold great promise. Due to computational limitations, practical application on real-world domain science problems has lagged far behind theoretical development. We present our first step towards bridging this divide - DisCo - a high-performance distributed workflow for the behavior-driven local causal state theory. DisCo provides a scalable unsupervised physics-based representation learning method that decomposes spatiotemporal systems into their structurally relevant components, which are captured by the latent local causal state variables. Complex spatiotemporal systems are generally highly structured and organize around a lower-dimensional skeleton of coherent structures, and in several firsts we demonstrate the efficacy of DisCo in capturing such structures from observational and simulated scientific data. To the best of our knowledge, DisCo is also the first application software developed entirely in Python to scale to over 1000 machine nodes, providing good performance along with ensuring domain scientists' productivity. We developed scalable, performant methods optimized for Intel many-core processors that will be upstreamed to open-source Python library packages. Our capstone experiment, using newly developed DisCo workflow and libraries, performs unsupervised spacetime segmentation analysis of CAM5.1 climate simulation data, processing an unprecedented 89.5 TB in 6.6 minutes end-to-end using 1024 Intel Haswell nodes on the Cori supercomputer obtaining 91% weak-scaling and 64% strong-scaling efficiency.

Towards Unsupervised Segmentation of Extreme Weather Events

Sep 16, 2019

Abstract:Extreme weather is one of the main mechanisms through which climate change will directly impact human society. Coping with such change as a global community requires markedly improved understanding of how global warming drives extreme weather events. While alternative climate scenarios can be simulated using sophisticated models, identifying extreme weather events in these simulations requires automation due to the vast amounts of complex high-dimensional data produced. Atmospheric dynamics, and hydrodynamic flows more generally, are highly structured and largely organize around a lower dimensional skeleton of coherent structures. Indeed, extreme weather events are a special case of more general hydrodynamic coherent structures. We present a scalable physics-based representation learning method that decomposes spatiotemporal systems into their structurally relevant components, which are captured by latent variables known as local causal states. For complex fluid flows we show our method is capable of capturing known coherent structures, and with promising segmentation results on CAM5.1 water vapor data we outline the path to extreme weather identification from unlabeled climate model simulation data.

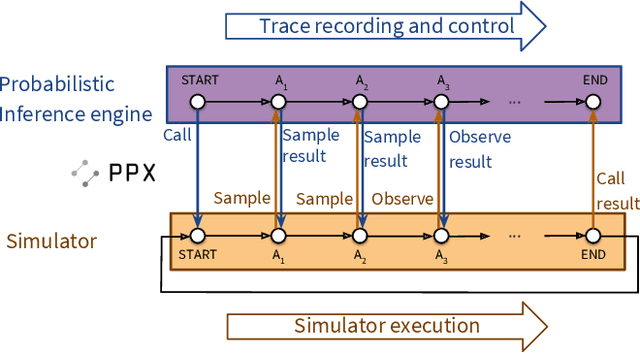

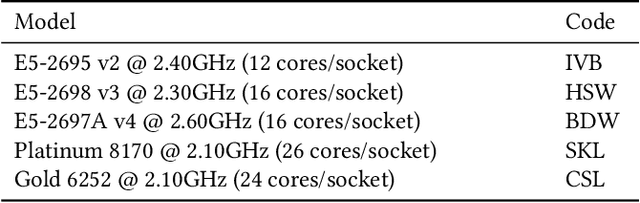

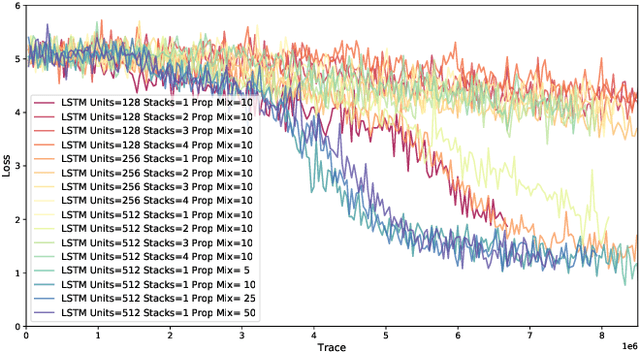

Etalumis: Bringing Probabilistic Programming to Scientific Simulators at Scale

Jul 08, 2019

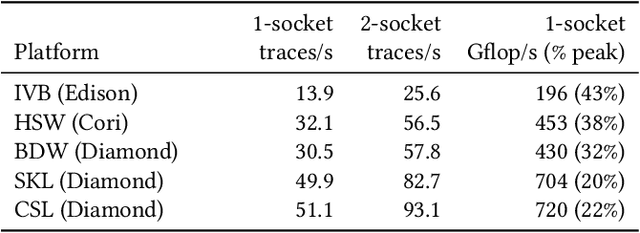

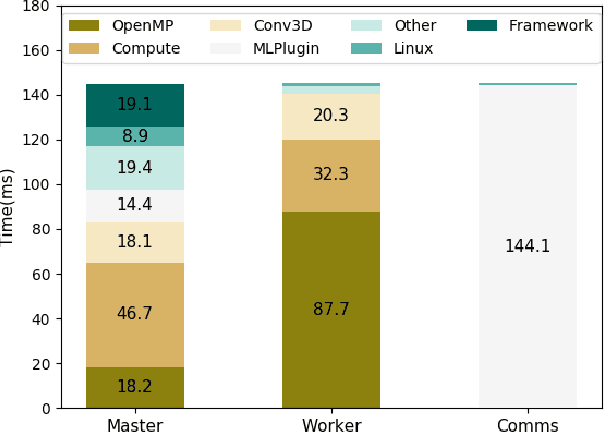

Abstract:Probabilistic programming languages (PPLs) are receiving widespread attention for performing Bayesian inference in complex generative models. However, applications to science remain limited because of the impracticability of rewriting complex scientific simulators in a PPL, the computational cost of inference, and the lack of scalable implementations. To address these, we present a novel PPL framework that couples directly to existing scientific simulators through a cross-platform probabilistic execution protocol and provides Markov chain Monte Carlo (MCMC) and deep-learning-based inference compilation (IC) engines for tractable inference. To guide IC inference, we perform distributed training of a dynamic 3DCNN--LSTM architecture with a PyTorch-MPI-based framework on 1,024 32-core CPU nodes of the Cori supercomputer with a global minibatch size of 128k: achieving a performance of 450 Tflop/s through enhancements to PyTorch. We demonstrate a Large Hadron Collider (LHC) use-case with the C++ Sherpa simulator and achieve the largest-scale posterior inference in a Turing-complete PPL.

CosmoFlow: Using Deep Learning to Learn the Universe at Scale

Aug 14, 2018

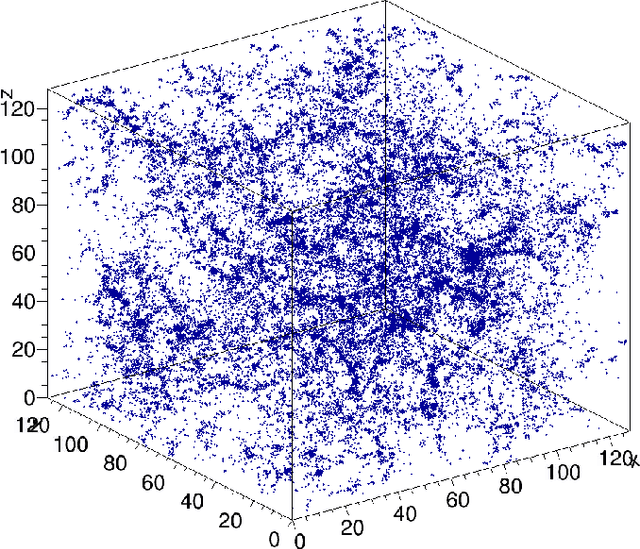

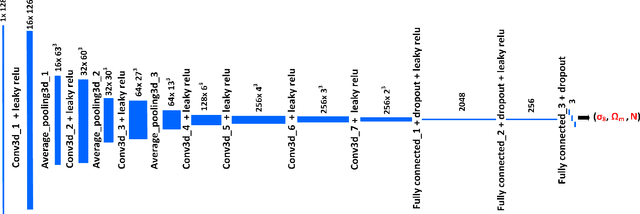

Abstract:Deep learning is a promising tool to determine the physical model that describes our universe. To handle the considerable computational cost of this problem, we present CosmoFlow: a highly scalable deep learning application built on top of the TensorFlow framework. CosmoFlow uses efficient implementations of 3D convolution and pooling primitives, together with improvements in threading for many element-wise operations, to improve training performance on Intel(C) Xeon Phi(TM) processors. We also utilize the Cray PE Machine Learning Plugin for efficient scaling to multiple nodes. We demonstrate fully synchronous data-parallel training on 8192 nodes of Cori with 77% parallel efficiency, achieving 3.5 Pflop/s sustained performance. To our knowledge, this is the first large-scale science application of the TensorFlow framework at supercomputer scale with fully-synchronous training. These enhancements enable us to process large 3D dark matter distribution and predict the cosmological parameters $\Omega_M$, $\sigma_8$ and n$_s$ with unprecedented accuracy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge