Jialin Liu

OPUS: Towards Efficient and Principled Data Selection in Large Language Model Pre-training in Every Iteration

Feb 05, 2026

Abstract:As high-quality public text approaches exhaustion, a phenomenon known as the Data Wall, pre-training is shifting from more tokens to better tokens. However, existing methods either rely on heuristic static filters that ignore training dynamics, or use dynamic yet optimizer-agnostic criteria based on raw gradients. We propose OPUS (Optimizer-induced Projected Utility Selection), a dynamic data selection framework that defines utility in the optimizer-induced update space. OPUS scores candidates by projecting their effective updates, shaped by modern optimizers, onto a target direction derived from a stable, in-distribution proxy. To ensure scalability, we employ the Ghost technique with CountSketch for computational efficiency, and Boltzmann sampling for data diversity, incurring only 4.7% additional compute overhead. OPUS achieves remarkable results across diverse corpora, quality tiers, optimizers, and model scales. In pre-training of GPT-2 Large/XL on FineWeb and FineWeb-Edu with 30B tokens, OPUS outperforms industrial-level baselines and even full 200B-token training. Moreover, when combined with industrial-level static filters, OPUS further improves pre-training efficiency, even with lower-quality data. Furthermore, in continued pre-training of Qwen3-8B-Base on SciencePedia, OPUS achieves superior performance using only 0.5B tokens compared to full training with 3B tokens, demonstrating significant data efficiency gains in specialized domains.
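The scoring-then-sampling idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the plain dot-product utility, the temperature parameter, and the sequential sampling without replacement are all assumptions made for illustration, and the Ghost and CountSketch components are omitted.

```python
import math
import random


def projected_utility(update, target):
    """Hypothetical utility: dot product of a candidate's update with the target direction."""
    return sum(u * t for u, t in zip(update, target))


def boltzmann_probs(scores, temperature=1.0):
    """Softmax over scores / temperature, shifted by the max for numerical stability."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def boltzmann_select(scores, k, temperature=1.0, rng=None):
    """Draw k distinct candidate indices, each draw weighted by Boltzmann probabilities.

    Sampling (rather than taking the top-k scores) keeps some lower-scored
    candidates in play, which is one simple way to preserve diversity.
    """
    rng = rng or random.Random(0)  # fixed seed so the sketch is reproducible
    remaining = list(range(len(scores)))
    chosen = []
    for _ in range(min(k, len(remaining))):
        probs = boltzmann_probs([scores[i] for i in remaining], temperature)
        pick = rng.choices(range(len(remaining)), weights=probs, k=1)[0]
        chosen.append(remaining.pop(pick))
    return chosen


if __name__ == "__main__":
    target = [1.0, 0.0]
    candidates = [[0.9, 0.1], [0.2, 0.8], [-0.5, 0.3], [0.6, 0.6]]
    scores = [projected_utility(c, target) for c in candidates]
    print(boltzmann_select(scores, k=2, temperature=0.5))
```

In this sketch a lower temperature concentrates the selection on the highest-utility candidates, while a higher temperature moves it toward uniform sampling.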

Keep Rehearsing and Refining: Lifelong Learning Vehicle Routing under Continually Drifting Tasks

Jan 30, 2026

Abstract:Existing neural solvers for vehicle routing problems (VRPs) are typically trained either in a one-off manner on a fixed set of pre-defined tasks or in a lifelong manner on several tasks arriving sequentially, assuming sufficient training on each task. Both settings overlook a common real-world property: problem patterns may drift continually over time, yielding a large number of tasks that arise sequentially while offering only limited training resources per task. In this paper, we study a novel lifelong learning paradigm for neural VRP solvers under continually drifting tasks over learning time steps, where sufficient training for any given task at any time is not available. We propose Dual Replay with Experience Enhancement (DREE), a general framework to improve learning efficiency and mitigate catastrophic forgetting under such drift. Extensive experiments show that, under such continual drift, DREE effectively learns new tasks, preserves prior knowledge, improves generalization to unseen tasks, and can be applied to diverse existing neural solvers.

GVGAI-LLM: Evaluating Large Language Model Agents with Infinite Games

Aug 11, 2025

Abstract:We introduce GVGAI-LLM, a video game benchmark for evaluating the reasoning and problem-solving capabilities of large language models (LLMs). Built on the General Video Game AI framework, it features a diverse collection of arcade-style games designed to test a model's ability to handle tasks that differ from most existing LLM benchmarks. The benchmark leverages a game description language that enables rapid creation of new games and levels, helping to prevent overfitting over time. Each game scene is represented by a compact set of ASCII characters, allowing for efficient processing by language models. GVGAI-LLM defines interpretable metrics, including the meaningful step ratio, step efficiency, and overall score, to assess model behavior. Through zero-shot evaluations across a broad set of games and levels with diverse challenges and skill depth, we reveal persistent limitations of LLMs in spatial reasoning and basic planning. Current models consistently exhibit spatial and logical errors, motivating structured prompting and spatial grounding techniques. While these interventions lead to partial improvements, the benchmark remains very far from solved. GVGAI-LLM provides a reproducible testbed for advancing research on language model capabilities, with a particular emphasis on agentic behavior and contextual reasoning.

LiBOG: Lifelong Learning for Black-Box Optimizer Generation

May 19, 2025

Abstract:Meta-Black-Box Optimization (MetaBBO) garners attention due to its success in automating the configuration and generation of black-box optimizers, significantly reducing the human effort required for optimizer design and discovering optimizers with higher performance than classic human-designed optimizers. However, existing MetaBBO methods conduct one-off training under the assumption that a stationary problem distribution with extensive and representative training problem samples is pre-available. This assumption is often impractical in real-world scenarios, where diverse problems following a shifting distribution continually arise. Consequently, there is a pressing need for methods that can continuously learn from new problems encountered on-the-fly and progressively enhance their capabilities. In this work, we explore a novel paradigm of lifelong learning in MetaBBO and introduce LiBOG, a novel approach designed to learn from sequentially encountered problems and generate high-performance optimizers for Black-Box Optimization (BBO). LiBOG consolidates knowledge both across tasks and within tasks to mitigate catastrophic forgetting. Extensive experiments demonstrate LiBOG's effectiveness in learning to generate high-performance optimizers in a lifelong learning manner, addressing catastrophic forgetting while maintaining plasticity to learn new tasks.

StarGen: A Spatiotemporal Autoregression Framework with Video Diffusion Model for Scalable and Controllable Scene Generation

Jan 10, 2025

Abstract:Recent advances in large reconstruction and generative models have significantly improved scene reconstruction and novel view generation. However, due to compute limitations, each inference with these large models is confined to a small area, making long-range consistent scene generation challenging. To address this, we propose StarGen, a novel framework that employs a pre-trained video diffusion model in an autoregressive manner for long-range scene generation. The generation of each video clip is conditioned on the 3D warping of spatially adjacent images and the temporally overlapping image from previously generated clips, improving spatiotemporal consistency in long-range scene generation with precise pose control. The spatiotemporal condition is compatible with various input conditions, facilitating diverse tasks, including sparse view interpolation, perpetual view generation, and layout-conditioned city generation. Quantitative and qualitative evaluations demonstrate StarGen's superior scalability, fidelity, and pose accuracy compared to state-of-the-art methods.

Deeply Learned Robust Matrix Completion for Large-scale Low-rank Data Recovery

Dec 31, 2024

Abstract:Robust matrix completion (RMC) is a widely used machine learning tool that simultaneously tackles two critical issues in low-rank data analysis: missing data entries and extreme outliers. This paper proposes a novel scalable and learnable non-convex approach, coined Learned Robust Matrix Completion (LRMC), for large-scale RMC problems. LRMC enjoys low computational complexity with linear convergence. Motivated by the proposed theorem, the free parameters of LRMC can be effectively learned via deep unfolding to achieve optimum performance. Furthermore, this paper proposes a flexible feedforward-recurrent-mixed neural network framework that extends deep unfolding from a fixed number of iterations to infinitely many iterations. The superior empirical performance of LRMC is verified with extensive experiments against state-of-the-art methods on synthetic datasets and real applications, including video background subtraction, ultrasound imaging, face modeling, and cloud removal from satellite imagery.

Exploring Accuracy-Fairness Trade-off in Large Language Models

Nov 21, 2024

Abstract:Large Language Models (LLMs) have made significant strides in the field of artificial intelligence, showcasing their ability to interact with humans and influence human cognition through information dissemination. However, recent studies have brought to light instances of bias inherent within these LLMs, presenting a critical issue that demands attention. In our research, we delve deeper into the intricate challenge of harmonising accuracy and fairness in the enhancement of LLMs. While improving accuracy can indeed enhance overall LLM performance, it often occurs at the expense of fairness. Overemphasising optimisation of one metric invariably leads to a significant degradation of the other. This underscores the necessity of taking into account multiple considerations during the design and optimisation phases of LLMs. Therefore, we advocate for reformulating the LLM training process as a multi-objective learning task. Our investigation reveals that multi-objective evolutionary learning (MOEL) methodologies offer promising avenues for tackling this challenge. Our MOEL framework enables the simultaneous optimisation of both accuracy and fairness metrics, resulting in a Pareto-optimal set of LLMs. In summary, our study sheds valuable light on the delicate equilibrium between accuracy and fairness within LLMs, which is increasingly significant for their real-world applications. By harnessing MOEL, we present a promising pathway towards fairer and more efficacious AI technologies.
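To make the notion of a Pareto-optimal set concrete, the following minimal sketch filters a list of candidate models down to the non-dominated ones. The (accuracy, fairness) scores are made-up illustrative numbers, both objectives are assumed to be "higher is better", and the function names are hypothetical; this shows only the dominance filter, not the evolutionary learning procedure from the paper.

```python
def dominates(a, b):
    """True if a is at least as good as b on every objective and strictly better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # Illustrative (accuracy, fairness) pairs, not results from the paper.
    models = [(0.90, 0.60), (0.85, 0.80), (0.80, 0.75), (0.70, 0.95)]
    print(pareto_front(models))
    # (0.80, 0.75) is dropped because (0.85, 0.80) is better on both objectives.
```

Each model left in the front represents a different trade-off: moving from one to another improves one objective only by giving up some of the other.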

Learning Attentional Mixture of LoRAs for Language Model Continual Learning

Sep 29, 2024

Abstract:Fine-tuning large language models (LLMs) with Low-Rank Adaptation (LoRA) is widely acknowledged as an effective approach for continual learning for new tasks. However, it often suffers from catastrophic forgetting when dealing with multiple tasks sequentially. To this end, we propose Attentional Mixture of LoRAs (AM-LoRA), a continual learning approach tailored for LLMs. Specifically, AM-LoRA learns a sequence of LoRAs for a series of tasks to continually learn knowledge from different tasks. The key to our approach is that we devise an attention mechanism as a knowledge mixture module to adaptively integrate information from each LoRA. With the attention mechanism, AM-LoRA can efficiently leverage the distinctive contributions of each LoRA, while mitigating the risk of mutually negative interactions among them that may lead to catastrophic forgetting. Moreover, we further introduce the $L_1$ norm in the learning process to make the attention vector more sparse. The sparse constraints can enable the model to lean towards selecting a few highly relevant LoRAs, rather than aggregating and weighting all LoRAs collectively, which can further reduce the impact stemming from mutual interference. Experimental results on continual learning benchmarks indicate the superiority of our proposed method.

Fairness-aware Multiobjective Evolutionary Learning

Sep 27, 2024

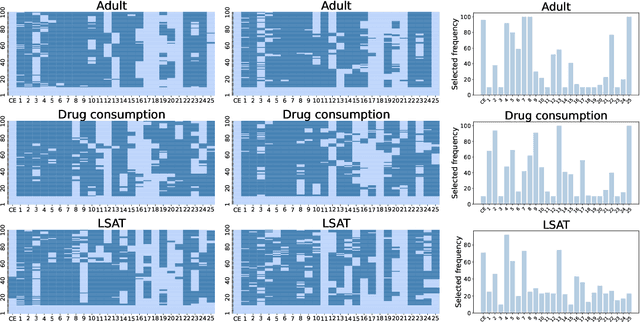

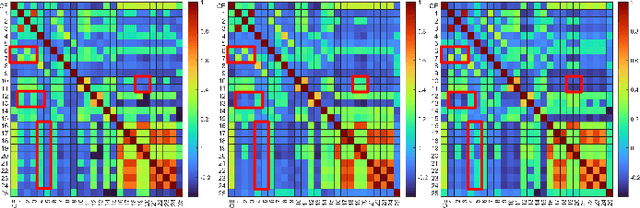

Abstract:Multiobjective evolutionary learning (MOEL) has demonstrated its advantages in training fairer machine learning models considering a predefined set of conflicting objectives, including accuracy and different fairness measures. Recent works propose to construct a representative subset of fairness measures as optimisation objectives of MOEL throughout model training. However, the determination of a representative measure set depends on the dataset and prior knowledge, and requires substantial computational cost. Moreover, those representative measures may differ across different model training processes. Instead of using a static predefined set determined before model training, this paper proposes to dynamically and adaptively determine a representative measure set online during model training. The dynamically determined representative set is then used as the optimisation objectives of the MOEL framework and can vary with time. Extensive experimental results on 12 well-known benchmark datasets demonstrate that our proposed framework achieves outstanding performance compared to state-of-the-art approaches for mitigating unfairness in terms of accuracy as well as 25 fairness measures, although only a few of them were dynamically selected and used as optimisation objectives. The results indicate the importance of setting optimisation objectives dynamically during training.

* 14 pages

S$^3$Attention: Improving Long Sequence Attention with Smoothed Skeleton Sketching

Aug 16, 2024

Abstract:Attention-based models have achieved many remarkable breakthroughs in numerous applications. However, the quadratic complexity of Attention makes vanilla Attention-based models hard to apply to long sequence tasks. Various improved Attention structures have been proposed to reduce the computation cost by inducing low rankness and approximating the whole sequence by sub-sequences. The most challenging part of those approaches is maintaining the proper balance between information preservation and computation reduction: the longer the sub-sequences used, the better information is preserved, but at the price of introducing more noise and computational costs. In this paper, we propose a smoothed skeleton sketching based Attention structure, coined S$^3$Attention, which significantly improves upon the previous attempts to negotiate this trade-off. S$^3$Attention has two mechanisms to effectively minimize the impact of noise while keeping linear complexity in the sequence length: a smoothing block to mix information over long sequences and a matrix sketching method that simultaneously selects columns and rows from the input matrix. We verify the effectiveness of S$^3$Attention both theoretically and empirically. Extensive studies over Long Range Arena (LRA) datasets and six time-series forecasting tasks show that S$^3$Attention significantly outperforms both vanilla Attention and other state-of-the-art variants of Attention structures.
