Jiyuan Pei

Keep Rehearsing and Refining: Lifelong Learning Vehicle Routing under Continually Drifting Tasks

Jan 30, 2026

Abstract: Existing neural solvers for vehicle routing problems (VRPs) are typically trained either in a one-off manner on a fixed set of pre-defined tasks or in a lifelong manner on several tasks arriving sequentially, assuming sufficient training on each task. Both settings overlook a common real-world property: problem patterns may drift continually over time, producing a long sequence of tasks while leaving only limited training resources for each one. In this paper, we study a novel lifelong learning paradigm for neural VRP solvers under tasks that drift continually over learning time steps, where sufficient training on any given task is never available. We propose Dual Replay with Experience Enhancement (DREE), a general framework to improve learning efficiency and mitigate catastrophic forgetting under such drift. Extensive experiments show that, under such continual drift, DREE effectively learns new tasks, preserves prior knowledge, improves generalization to unseen tasks, and can be applied to diverse existing neural solvers.

LiBOG: Lifelong Learning for Black-Box Optimizer Generation

May 19, 2025

Abstract: Meta-Black-Box Optimization (MetaBBO) has attracted attention for its success in automating the configuration and generation of black-box optimizers, significantly reducing the human effort required for optimizer design and discovering optimizers that outperform classic human-designed ones. However, existing MetaBBO methods conduct one-off training under the assumption that a stationary problem distribution with extensive and representative training problem samples is available in advance. This assumption is often impractical in real-world scenarios, where diverse problems following a shifting distribution continually arise. Consequently, there is a pressing need for methods that can continuously learn from new problems encountered on the fly and progressively enhance their capabilities. In this work, we explore a novel paradigm of lifelong learning in MetaBBO and introduce LiBOG, a novel approach designed to learn from sequentially encountered problems and generate high-performance optimizers for Black-Box Optimization (BBO). LiBOG consolidates knowledge both across tasks and within tasks to mitigate catastrophic forgetting. Extensive experiments demonstrate LiBOG's effectiveness in learning to generate high-performance optimizers in a lifelong learning manner, addressing catastrophic forgetting while maintaining plasticity to learn new tasks.

Learning from Offline and Online Experiences: A Hybrid Adaptive Operator Selection Framework

Apr 16, 2024

Abstract: In many practical applications, similar optimisation problems or scenarios appear repeatedly. Learning from previous problem-solving experiences can help adjust the algorithm components of meta-heuristics, e.g., by adaptively selecting promising search operators, to achieve better optimisation performance. However, experiences obtained from previously solved problems, namely offline experiences, may be misleading when solving a new problem whose characteristics differ substantially from those of the previous problems. Learning from online experiences obtained during the ongoing problem-solving process is more instructive but highly restricted by limited computational resources. This paper focuses on the effective combination of offline and online experiences. A novel hybrid framework that learns to dynamically and adaptively select promising search operators is proposed. Two adaptive operator selection modules with complementary paradigms cooperate in the framework to learn from offline and online experiences and make decisions. An adaptive decision policy is maintained to balance the use of those two modules in an online manner. Extensive experiments on 170 widely studied real-valued benchmark optimisation problems and a benchmark set with 34 instances for combinatorial optimisation show that the proposed hybrid framework outperforms the state-of-the-art methods. An ablation study verifies the effectiveness of each component of the framework.

Local Optima Correlation Assisted Adaptive Operator Selection

May 03, 2023

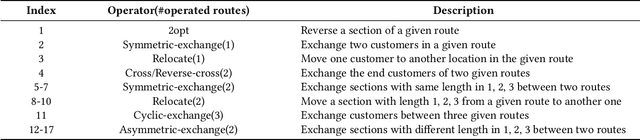

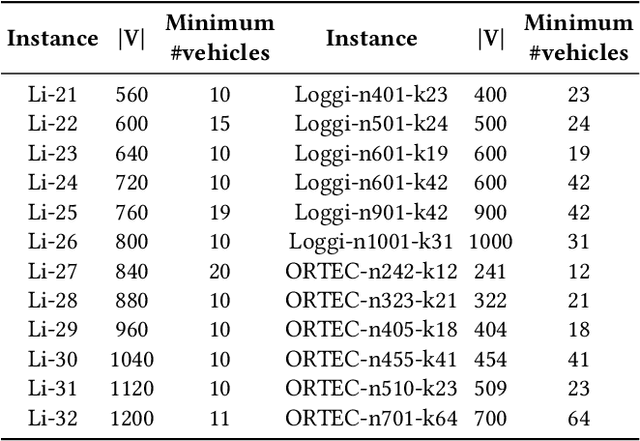

Abstract: When solving combinatorial optimisation problems with metaheuristics, different search operators are applied to sample new solutions in the neighbourhood of a given solution. It is important to understand the relationship between operators for various purposes, e.g., adaptively deciding when to use which operator to find optimal solutions efficiently. However, it is difficult to analyse this relationship theoretically, especially in the complex solution space of combinatorial optimisation problems. In this paper, we propose to empirically analyse the relationship between operators in terms of the correlation between their local optima and develop a measure for quantifying their relationship. Comprehensive analyses on a wide range of capacitated vehicle routing problem benchmark instances show that there is a consistent pattern in the correlation between commonly used operators. Based on this newly proposed local optima correlation metric, we propose a novel approach for adaptively selecting among the operators during the search process. The core intention is to improve search efficiency by avoiding wasting computational resources on exploring neighbourhoods where the local optima have already been reached. Experiments on randomly generated instances and commonly used benchmark datasets are conducted. Results show that the proposed approach outperforms commonly used adaptive operator selection methods.

Evolving Constrained Reinforcement Learning Policy

Apr 19, 2023

Abstract: Evolutionary algorithms have been used to evolve a population of actors to generate diverse experiences for training reinforcement learning agents, which helps to tackle the temporal credit assignment problem and improves exploration efficiency. However, when adapting this approach to constrained problems, balancing the trade-off between reward and constraint violation is hard. In this paper, we propose a novel evolutionary constrained reinforcement learning (ECRL) algorithm, which adaptively balances reward and constraint violation with stochastic ranking and, at the same time, restricts the policy's behaviour by maintaining a set of Lagrange relaxation coefficients with a constraint buffer. Extensive experiments on robotic control benchmarks show that ECRL achieves outstanding performance compared to state-of-the-art algorithms. An ablation analysis shows the benefits of introducing stochastic ranking and the constraint buffer.
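
For readers unfamiliar with stochastic ranking, the following is a minimal Python sketch of the generic procedure (a bubble-sort-like pass in which adjacent candidates are compared by objective with some probability, and by constraint violation otherwise). It is not the ECRL implementation: the function name `stochastic_rank`, the parameter `p_f`, and the representation of each actor as a (reward, violation) pair are assumptions made for illustration only.

```python
import random


def stochastic_rank(rewards, violations, p_f=0.45, rng=None):
    """Return candidate indices ordered best-first by stochastic ranking.

    Hypothetical helper for illustration: higher reward is better,
    lower violation is better, and violation == 0 means feasible.
    """
    rng = rng or random.Random()
    n = len(rewards)
    order = list(range(n))
    for _ in range(n):  # at most n bubble-sort sweeps
        swapped = False
        for j in range(n - 1):
            a, b = order[j], order[j + 1]
            both_feasible = violations[a] == 0 and violations[b] == 0
            if both_feasible or rng.random() < p_f:
                # Compare by reward only, ignoring constraint violation.
                out_of_order = rewards[a] < rewards[b]
            else:
                # Compare by constraint violation only.
                out_of_order = violations[a] > violations[b]
            if out_of_order:
                order[j], order[j + 1] = b, a
                swapped = True
        if not swapped:  # no swap in a full sweep: ranking has settled
            break
    return order


# Example with four hypothetical actors; the last two violate the constraint.
rewards = [1.0, 3.0, 2.0, 5.0]
violations = [0.0, 0.0, 0.5, 2.0]
ranking = stochastic_rank(rewards, violations, p_f=0.45, rng=random.Random(0))
```

In this sketch, setting `p_f` to 0 ranks infeasible candidates purely by violation, while setting it to 1 ranks purely by reward; intermediate values trade the two off stochastically.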
