Mengjie Zhang

Enhancing Generalization in Evolutionary Feature Construction for Symbolic Regression through Vicinal Jensen Gap Minimization

Feb 02, 2026

Abstract: Genetic programming-based feature construction has achieved significant success in recent years as an automated machine learning technique to enhance learning performance. However, overfitting remains a challenge that limits its broader applicability. To improve generalization, we prove that vicinal risk, estimated through noise perturbation or mixup-based data augmentation, is bounded by the sum of empirical risk and a regularization term, either finite difference or the vicinal Jensen gap. Leveraging this decomposition, we propose an evolutionary feature construction framework that jointly optimizes empirical risk and the vicinal Jensen gap to control overfitting. Since datasets may vary in noise levels, we develop a noise estimation strategy to dynamically adjust regularization strength. Furthermore, to mitigate manifold intrusion, where data augmentation may generate unrealistic samples that fall outside the data manifold, we propose a manifold intrusion detection mechanism. Experimental results on 58 datasets demonstrate the effectiveness of Jensen gap minimization compared to other complexity measures. Comparisons with 15 machine learning algorithms further indicate that genetic programming with the proposed overfitting control strategy achieves superior performance.
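To make the idea of a mixup-based Jensen gap more concrete, here is a minimal Python sketch. It is not the paper's definition: it assumes the gap can be read as the mean squared difference between the model's prediction at a mixed input and the same mix of its predictions, with the mixing coefficient drawn uniformly from (0, 1). The names `mixup_jensen_gap` and `objectives` are hypothetical, and returning the two quantities as a pair is only one possible way to optimize them jointly.

```python
import random

def mixup_jensen_gap(model, xs, n_pairs=200, seed=0):
    """Assumed gap: mean of (f(mix of inputs) - mix of f(inputs))^2 over random pairs."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_pairs):
        xi, xj = rng.choice(xs), rng.choice(xs)
        lam = rng.random()  # assumption: lambda ~ Uniform(0, 1)
        x_mix = [lam * a + (1 - lam) * b for a, b in zip(xi, xj)]
        pred_at_mix = model(x_mix)
        mix_of_preds = lam * model(xi) + (1 - lam) * model(xj)
        total += (pred_at_mix - mix_of_preds) ** 2
    return total / n_pairs

def objectives(model, xs, ys):
    """Return (empirical MSE, assumed Jensen gap) as two objectives to minimize."""
    mse = sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
    return mse, mixup_jensen_gap(model, xs)

# Toy data: two input features and a linear target.
xs = [[i / 10, ((i * 7) % 10) / 10] for i in range(20)]
ys = [2 * x[0] - x[1] + 0.5 for x in xs]

linear = lambda x: 2 * x[0] - x[1] + 0.5   # linear model: gap is essentially zero
curved = lambda x: 5 * x[0] ** 2           # nonlinear model: gap is positive

print(objectives(linear, xs, ys))
print(objectives(curved, xs, ys))
```

Under this reading, a linear model has a gap of zero up to floating-point error, while a strongly curved model is penalized, which is the intuition behind using the gap as a complexity measure.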

Keep Rehearsing and Refining: Lifelong Learning Vehicle Routing under Continually Drifting Tasks

Jan 30, 2026

Abstract: Existing neural solvers for vehicle routing problems (VRPs) are typically trained either in a one-off manner on a fixed set of pre-defined tasks or in a lifelong manner on several tasks arriving sequentially, assuming sufficient training on each task. Both settings overlook a common real-world property: problem patterns may drift continually over time, yielding a massive number of sequentially arising tasks while offering only limited training resources per task. In this paper, we study a novel lifelong learning paradigm for neural VRP solvers under continually drifting tasks over learning time steps, where sufficient training for any given task at any time is not available. We propose Dual Replay with Experience Enhancement (DREE), a general framework to improve learning efficiency and mitigate catastrophic forgetting under such drift. Extensive experiments show that, under such continual drift, DREE effectively learns new tasks, preserves prior knowledge, improves generalization to unseen tasks, and can be applied to diverse existing neural solvers.

Towards Gold-Standard Depth Estimation for Tree Branches in UAV Forestry: Benchmarking Deep Stereo Matching Methods

Jan 27, 2026

Abstract: Autonomous UAV forestry operations require robust depth estimation with strong cross-domain generalization, yet existing evaluations focus on urban and indoor scenarios, leaving a critical gap for vegetation-dense environments. We present the first systematic zero-shot evaluation of eight stereo methods spanning iterative refinement, foundation model, diffusion-based, and 3D CNN paradigms. All methods use officially released pretrained weights (trained on Scene Flow) and are evaluated on four standard benchmarks (ETH3D, KITTI 2012/2015, Middlebury) plus a novel 5,313-pair Canterbury Tree Branches dataset ($1920 \times 1080$). Results reveal scene-dependent patterns: foundation models excel on structured scenes (BridgeDepth: 0.23 px on ETH3D; DEFOM: 4.65 px on Middlebury), while iterative methods show variable cross-benchmark performance (IGEV++: 0.36 px on ETH3D but 6.77 px on Middlebury; IGEV: 0.33 px on ETH3D but 4.99 px on Middlebury). Qualitative evaluation on the Tree Branches dataset establishes DEFOM as the gold-standard baseline for vegetation depth estimation, with superior cross-domain consistency (consistently ranking 1st-2nd across benchmarks, average rank 1.75). DEFOM predictions will serve as pseudo-ground-truth for future benchmarking.

HEATACO: Heatmap-Guided Ant Colony Decoding for Large-Scale Travelling Salesman Problems

Jan 26, 2026

Abstract: Heatmap-based non-autoregressive solvers for large-scale Travelling Salesman Problems output dense edge-probability scores, yet final performance largely hinges on the decoder that must satisfy degree-2 constraints and form a single Hamiltonian tour. Greedy commitment can cascade into irreparable mistakes at large $N$, whereas MCTS-guided local search is accurate but compute-heavy and highly engineered. We instead treat the heatmap as a soft edge prior and cast decoding as probabilistic tour construction under feasibility constraints, where the key is to correct local mis-rankings via inexpensive global coordination. Based on this view, we introduce HeatACO, a plug-and-play Max-Min Ant System decoder whose transition policy is softly biased by the heatmap while pheromone updates provide lightweight, instance-specific feedback to resolve global conflicts; optional 2-opt/3-opt post-processing further improves tour quality. On TSP500/1K/10K, using heatmaps produced by four pretrained predictors, HeatACO+2opt achieves gaps down to 0.11%/0.23%/1.15% with seconds-to-minutes CPU decoding for fixed heatmaps, offering a better quality-time trade-off than greedy decoding and published MCTS-based decoders. Finally, we find the gains track heatmap reliability: under distribution shift, miscalibration and confidence collapse bound decoding improvements, suggesting heatmap generalisation is a primary lever for further progress.
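The decoding idea described above can be illustrated with a small Python sketch of a Max-Min Ant System whose transition weights are multiplied by a heatmap prior. This is not the HeatACO implementation: the weighting form `tau^alpha * (1/d)^beta * heat^gamma`, all parameter values, and the function names `build_tour` and `heat_guided_mmas` are assumptions chosen for illustration, and no 2-opt/3-opt post-processing is included.

```python
import math
import random

def tour_length(tour, dist):
    """Length of the closed tour visiting nodes in the given order."""
    return sum(dist[tour[k]][tour[(k + 1) % len(tour)]] for k in range(len(tour)))

def build_tour(dist, heat, tau, rng, alpha=1.0, beta=2.0, gamma=1.0):
    """One ant builds a feasible tour; the heatmap softly biases each step."""
    n = len(dist)
    current = rng.randrange(n)
    tour, unvisited = [current], set(range(n)) - {current}
    while unvisited:
        cands = sorted(unvisited)
        weights = [
            (tau[current][j] ** alpha)
            * ((1.0 / dist[current][j]) ** beta)
            * ((heat[current][j] + 1e-9) ** gamma)  # assumed soft prior term
            for j in cands
        ]
        current = rng.choices(cands, weights=weights, k=1)[0]
        tour.append(current)
        unvisited.remove(current)
    return tour

def heat_guided_mmas(dist, heat, n_ants=10, n_iters=30, rho=0.1,
                     tau_min=0.01, tau_max=1.0, seed=0):
    rng = random.Random(seed)
    n = len(dist)
    tau = [[tau_max] * n for _ in range(n)]
    best, best_len = None, math.inf
    for _ in range(n_iters):
        for _ in range(n_ants):
            tour = build_tour(dist, heat, tau, rng)
            length = tour_length(tour, dist)
            if length < best_len:
                best, best_len = tour, length
        # Evaporate, reinforce the best-so-far tour, then clamp (Max-Min rule).
        for i in range(n):
            for j in range(n):
                tau[i][j] *= 1.0 - rho
        for k in range(n):
            a, b = best[k], best[(k + 1) % n]
            tau[a][b] += 1.0 / best_len
            tau[b][a] += 1.0 / best_len
        for i in range(n):
            for j in range(n):
                tau[i][j] = min(tau_max, max(tau_min, tau[i][j]))
    return best, best_len, tau

# Toy instance: 8 cities on a unit circle, with a heatmap favouring ring edges.
n = 8
pts = [(math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n)) for k in range(n)]
dist = [[math.dist(p, q) for q in pts] for p in pts]
heat = [[0.9 if (i - j) % n in (1, n - 1) else 0.05 for j in range(n)] for i in range(n)]

best, best_len, tau = heat_guided_mmas(dist, heat)
print(best, round(best_len, 4))
```

Because every ant only chooses among unvisited cities, each constructed tour is feasible by design; the heatmap changes which feasible tours are likely, and the pheromone update supplies the instance-specific feedback.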

Investigation of the Generalisation Ability of Genetic Programming-evolved Scheduling Rules in Dynamic Flexible Job Shop Scheduling

Jan 22, 2026

Abstract: Dynamic Flexible Job Shop Scheduling (DFJSS) is a complex combinatorial optimisation problem that requires simultaneous machine assignment and operation sequencing decisions in dynamic production environments. Genetic Programming (GP) has been widely applied to automatically evolve scheduling rules for DFJSS. However, existing studies typically train and test GP-evolved rules on DFJSS instances of the same type, which differ only by random seeds rather than by structural characteristics, leaving their cross-type generalisation ability largely unexplored. To address this gap, this paper systematically investigates the generalisation ability of GP-evolved scheduling rules under diverse DFJSS conditions. A series of experiments are conducted across multiple dimensions, including problem scale (i.e., the number of machines and jobs), key job shop parameters (e.g., utilisation level), and data distributions, to analyse how these factors influence GP performance on unseen instance types. The results show that good generalisation occurs when the training instances contain more jobs than the test instances while keeping the number of machines fixed, and when both training and test instances have similar scales or job shop parameters. Further analysis reveals that the number and distribution of decision points in DFJSS instances play a crucial role in explaining these performance differences. Similar decision point distributions lead to better generalisation, whereas significant discrepancies result in a marked degradation of performance. Overall, this study provides new insights into the generalisation ability of GP in DFJSS and highlights the necessity of evolving more generalisable GP rules capable of handling heterogeneous DFJSS instances effectively.

Scalable Knee-Point Guided Activity Group Selection in Multi-Tree Genetic Programming for Dynamic Multi-Mode Project Scheduling

Jan 20, 2026

Abstract: The dynamic multi-mode resource-constrained project scheduling problem is a challenging scheduling problem that requires making decisions on both the execution order of activities and their corresponding execution modes. Genetic programming has been widely applied as a hyper-heuristic to evolve priority rules that guide the selection of activity-mode pairs from the current eligible set. Recently, an activity group selection strategy has been proposed to select a subset of activities rather than a single activity at each decision point, allowing for more effective scheduling by considering the interdependence between activities. Although effective in small-scale instances, this strategy suffers from scalability issues when applied to larger problems. In this work, we enhance the scalability of the group selection strategy by introducing a knee-point-based selection mechanism to identify a promising subset of activities before evaluating their combinations. An activity ordering rule is first used to rank all eligible activity-mode pairs, followed by a knee point selection to find the promising pairs. Then, a group selection rule selects the best activity combination. We develop a multi-tree GP framework to evolve both types of rules simultaneously. Experimental results demonstrate that our approach scales well to large instances and outperforms GP with sequential decision-making in most scenarios.
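The rank-then-cut step described above can be sketched in Python as follows. This is an illustration under assumptions, not the paper's procedure: the knee is taken to be the ranked item whose score lies farthest from the straight line joining the best and worst scores, and `priority` is a hypothetical stand-in for the GP-evolved activity ordering rule.

```python
def knee_index(sorted_scores):
    """Index of the score farthest from the chord joining the first and last scores."""
    n = len(sorted_scores)
    if n < 3:
        return n - 1  # too few points to define a knee: keep everything
    first, last = sorted_scores[0], sorted_scores[-1]
    slope = (last - first) / (n - 1)
    # Vertical distance to the chord; its argmax matches the perpendicular distance.
    gaps = [abs(s - (first + slope * i)) for i, s in enumerate(sorted_scores)]
    return max(range(n), key=gaps.__getitem__)

def select_promising(pairs, priority):
    """Rank activity-mode pairs by priority and keep those up to the knee."""
    ranked = sorted(pairs, key=priority, reverse=True)
    scores = [priority(p) for p in ranked]
    return ranked[: knee_index(scores) + 1]

# Hypothetical eligible set: (activity-mode label, priority score).
eligible = [("A1-m1", 0.9), ("A2-m1", 0.5), ("A3-m2", 0.3),
            ("A4-m1", 0.25), ("A5-m2", 0.22), ("A6-m1", 0.2)]

promising = select_promising(eligible, priority=lambda p: p[1])
print([label for label, _ in promising])
```

Only the pairs that survive the cut would then be passed to the group selection rule, which is what keeps the number of evaluated combinations small on large instances.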

Evo-TFS: Evolutionary Time-Frequency Domain-Based Synthetic Minority Oversampling Approach to Imbalanced Time Series Classification

Jan 03, 2026

Abstract: Time series classification is a fundamental machine learning task with broad real-world applications. Although many deep learning methods have proven effective in learning time-series data for classification, they were originally developed under the assumption of balanced data distributions. When the data distribution is uneven, these methods tend to ignore the minority class, which is typically of higher practical significance. Oversampling methods have been designed to address this by generating minority-class samples, but their reliance on linear interpolation often hampers the preservation of temporal dynamics and the generation of diverse samples. Therefore, in this paper, we propose Evo-TFS, a novel evolutionary oversampling method that integrates both time- and frequency-domain characteristics. In Evo-TFS, strongly typed genetic programming is employed to evolve diverse, high-quality time series, guided by a fitness function that incorporates both time-domain and frequency-domain characteristics. Experiments conducted on imbalanced time series datasets demonstrate that Evo-TFS outperforms existing oversampling methods, significantly enhancing the performance of time-domain and frequency-domain classifiers.

Overlooked Safety Vulnerability in LLMs: Malicious Intelligent Optimization Algorithm Request and its Jailbreak

Jan 01, 2026

Abstract: The widespread deployment of large language models (LLMs) has raised growing concerns about their misuse risks and associated safety issues. While prior studies have examined the safety of LLMs in general usage, code generation, and agent-based applications, their vulnerabilities in automated algorithm design remain underexplored. To fill this gap, this study investigates this overlooked safety vulnerability, with a particular focus on intelligent optimization algorithm design, given its prevalent use in complex decision-making scenarios. We introduce MalOptBench, a benchmark consisting of 60 malicious optimization algorithm requests, and propose MOBjailbreak, a jailbreak method tailored for this scenario. Through extensive evaluation of 13 mainstream LLMs including the latest GPT-5 and DeepSeek-V3.1, we reveal that most models remain highly susceptible to such attacks, with an average attack success rate of 83.59% and an average harmfulness score of 4.28 out of 5 on original harmful prompts, and near-complete failure under MOBjailbreak. Furthermore, we assess state-of-the-art plug-and-play defenses that can be applied to closed-source models, and find that they are only marginally effective against MOBjailbreak and prone to exaggerated safety behaviors. These findings highlight the urgent need for stronger alignment techniques to safeguard LLMs against misuse in algorithm design.

Solver-Independent Automated Problem Formulation via LLMs for High-Cost Simulation-Driven Design

Dec 21, 2025

Abstract: In the high-cost simulation-driven design domain, translating ambiguous design requirements into a mathematical optimization formulation is a bottleneck for optimizing product performance. This process is time-consuming and heavily reliant on expert knowledge. While large language models (LLMs) offer potential for automating this task, existing approaches either suffer from poor formalization that fails to accurately align with the design intent or rely on solver feedback for data filtering, which is unavailable due to the high simulation costs. To address this challenge, we propose APF, a framework for solver-independent, automated problem formulation via LLMs designed to automatically convert engineers' natural language requirements into executable optimization models. The core of this framework is an innovative pipeline for automatically generating high-quality data, which overcomes the difficulty of constructing suitable fine-tuning datasets in the absence of high-cost solver feedback with the help of data generation and test instance annotation. The generated high-quality dataset is used to perform supervised fine-tuning on LLMs, significantly enhancing their ability to generate accurate and executable optimization problem formulations. Experimental results on antenna design demonstrate that APF significantly outperforms the existing methods in both the accuracy of requirement formalization and the quality of resulting radiation efficiency curves in meeting the design goals.

GAMA: A Neural Neighborhood Search Method with Graph-aware Multi-modal Attention for Vehicle Routing Problem

Nov 11, 2025

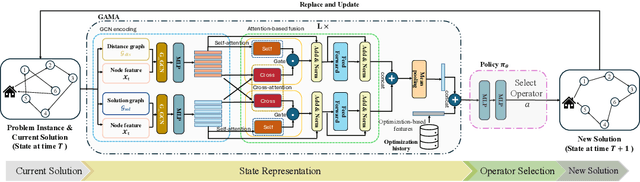

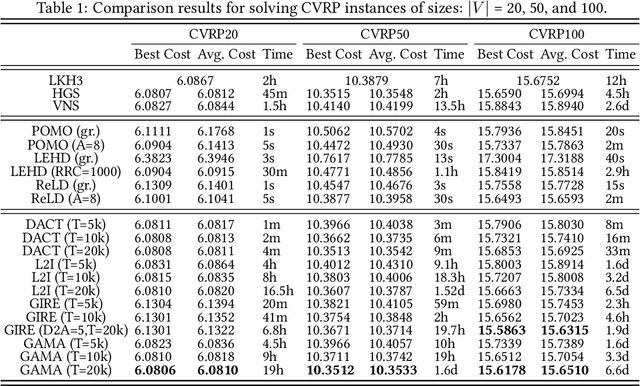

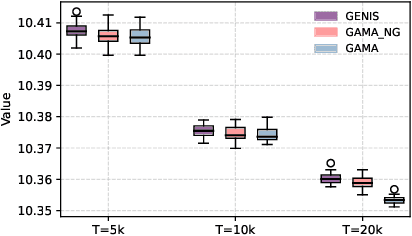

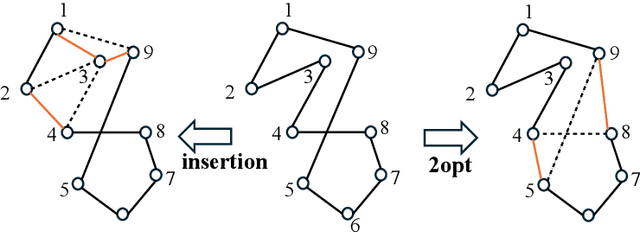

Abstract: Recent advances in neural neighborhood search methods have shown potential in tackling Vehicle Routing Problems (VRPs). However, most existing approaches rely on simplistic state representations and fuse heterogeneous information via naive concatenation, limiting their ability to capture rich structural and semantic context. To address these limitations, we propose GAMA, a neural neighborhood search method with a Graph-aware Multi-modal Attention model for VRPs. GAMA encodes the problem instance and its evolving solution as distinct modalities using graph neural networks, and models their intra- and inter-modal interactions through stacked self- and cross-attention layers. A gated fusion mechanism further integrates the multi-modal representations into a structured state, enabling the policy to make informed and generalizable operator selection decisions. Extensive experiments conducted across various synthetic and benchmark instances demonstrate that the proposed algorithm GAMA significantly outperforms the recent neural baselines. Further ablation studies confirm that both the multi-modal attention mechanism and the gated fusion design play a key role in achieving the observed performance gains.
