Lukas Heinrich

Strategic White Paper on AI Infrastructure for Particle, Nuclear, and Astroparticle Physics: Insights from JENA and EuCAIF

Mar 18, 2025

Abstract: Artificial intelligence (AI) is transforming scientific research, with deep learning methods playing a central role in data analysis, simulations, and signal detection across particle, nuclear, and astroparticle physics. Within the JENA communities (ECFA, NuPECC, and APPEC) and as part of the EuCAIF initiative, AI integration is advancing steadily. However, broader adoption remains constrained by challenges such as limited computational resources, a lack of expertise, and difficulties in transitioning from research and development (R&D) to production. This white paper provides a strategic roadmap, informed by a community survey, to address these barriers. It outlines critical infrastructure requirements, prioritizes training initiatives, and proposes funding strategies to scale AI capabilities across fundamental physics over the next five years.

Combined track finding with GNN & CKF

Jan 29, 2024

Abstract: The application of Graph Neural Networks (GNN) in track reconstruction is a promising approach to cope with the challenges arising at the High-Luminosity upgrade of the Large Hadron Collider (HL-LHC). GNNs show good track-finding performance in high-multiplicity scenarios and are naturally parallelizable on heterogeneous compute architectures. Typical high-energy-physics detectors have high resolution in the innermost layers to support vertex reconstruction but lower resolution in the outer parts. GNNs mainly rely on 3D space-point information, which can cause reduced track-finding performance in the outer regions. In this contribution, we present a novel combination of GNN-based track finding with the classical Combinatorial Kalman Filter (CKF) algorithm to circumvent this issue: the GNN resolves the track candidates in the inner pixel region, where 3D space points can represent measurements very well. These candidates are then picked up by the CKF in the outer regions, where the CKF performs well even for 1D measurements. Using the ACTS infrastructure, we present a proof of concept based on truth tracking in the pixels as well as a dedicated GNN pipeline trained on $t\bar{t}$ events with pile-up 200 in the OpenDataDetector.
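To make the hand-over to the CKF more concrete, here is a minimal sketch of a scalar Kalman measurement update followed by a chi-square based hit choice. It is a toy illustration and not the ACTS implementation: the function names, the `chi2_max` cut, and the reduction to a single track parameter are all assumptions. A real CKF also propagates a full track state between layers and can branch on several compatible hits, whereas this sketch keeps only the best one.

```python
def kalman_update(x_pred, var_pred, meas, var_meas):
    """One scalar Kalman measurement update.

    x_pred, var_pred: predicted track parameter and its variance
                      (for example, extrapolated from an inner-region seed).
    meas, var_meas:   1D measurement and its variance.
    Returns the updated estimate, its variance, and the residual chi2.
    """
    residual = meas - x_pred
    s = var_pred + var_meas          # variance of the residual
    gain = var_pred / s              # Kalman gain for the scalar case
    x_new = x_pred + gain * residual
    var_new = (1.0 - gain) * var_pred
    chi2 = residual * residual / s
    return x_new, var_new, chi2


def pick_best_hit(x_pred, var_pred, hits, var_meas, chi2_max=9.0):
    """Try every candidate hit on a layer and keep the lowest-chi2 update.

    Simplification (assumption): a full CKF may keep several branches.
    Returns None if no hit passes the chi2 cut.
    """
    best = None
    for hit in hits:
        x_new, var_new, chi2 = kalman_update(x_pred, var_pred, hit, var_meas)
        if chi2 <= chi2_max and (best is None or chi2 < best[2]):
            best = (x_new, var_new, chi2)
    return best


# Hypothetical numbers: a prediction at 0.0 and three candidate hits on the next layer.
best = pick_best_hit(0.0, 1.0, [5.0, 0.4, -3.0], 1.0)
```

The update needs only a one-dimensional residual and its variance, which is why a filter of this kind can still use measurements that do not form a full 3D space point.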

Masked Particle Modeling on Sets: Towards Self-Supervised High Energy Physics Foundation Models

Jan 25, 2024

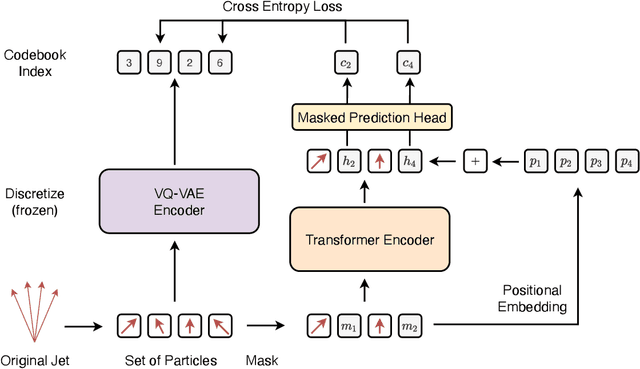

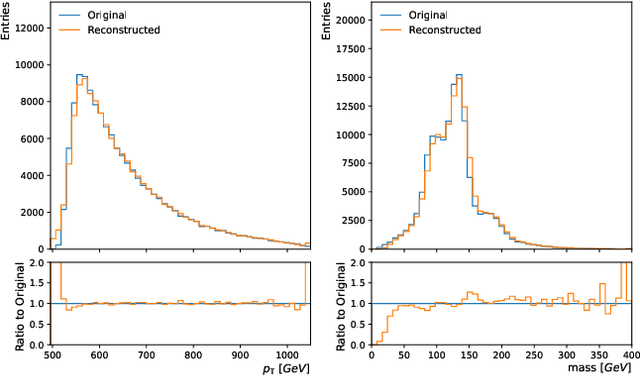

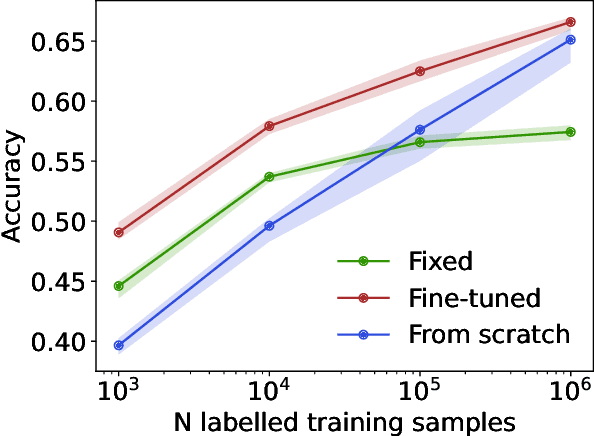

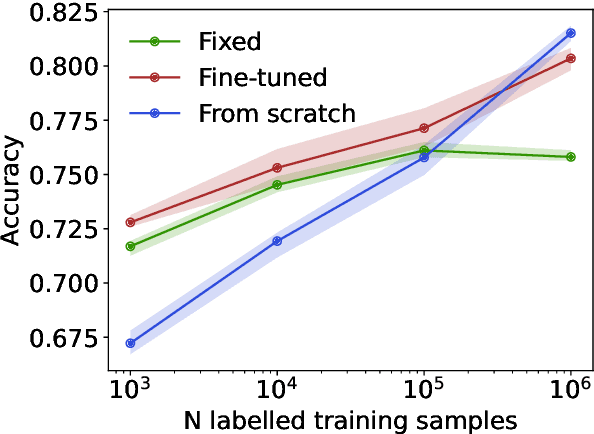

Abstract: We propose masked particle modeling (MPM) as a self-supervised method for learning generic, transferable, and reusable representations on unordered sets of inputs for use in high energy physics (HEP) scientific data. This work provides a novel scheme to perform masked-modeling-based pre-training to learn permutation-invariant functions on sets. More generally, this work provides a step towards building large foundation models for HEP that can be generically pre-trained with self-supervised learning and later fine-tuned for a variety of downstream tasks. In MPM, particles in a set are masked and the training objective is to recover their identity, as defined by a discretized token representation of a pre-trained vector quantized variational autoencoder. We study the efficacy of the method in samples of high energy jets at collider physics experiments, including studies on the impact of discretization, permutation invariance, and ordering. We also study the fine-tuning capability of the model, showing that it can be adapted to tasks such as supervised and weakly supervised jet classification, and that the model can transfer efficiently with small fine-tuning data sets to new classes and new data domains.
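The masking objective can be illustrated with a small sketch in plain Python. Everything here is assumed for illustration: the token values, the `mask_id` sentinel, the 25% masking fraction, and the uniform "model" that stands in for a trained network. The point is only the shape of the objective: hide some set elements, then score the predicted token distribution at the hidden positions.

```python
import math
import random


def mask_particles(tokens, mask_fraction, rng, mask_id=-1):
    """Hide a random subset of set elements; return inputs and masked positions."""
    n_mask = max(1, round(mask_fraction * len(tokens)))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    hidden = set(positions)
    inputs = [mask_id if i in hidden else t for i, t in enumerate(tokens)]
    return inputs, positions


def masked_cross_entropy(probs, tokens, positions):
    """Mean negative log-probability of the true token, masked positions only."""
    return -sum(math.log(probs[i][tokens[i]]) for i in positions) / len(positions)


rng = random.Random(0)
tokens = [3, 1, 4, 1, 5, 2, 0, 3]   # hypothetical codebook indices, one per particle
inputs, positions = mask_particles(tokens, 0.25, rng)

vocab_size = 6
# Stand-in for a model: a uniform prediction over the codebook at every position.
probs = [[1.0 / vocab_size] * vocab_size for _ in tokens]
loss = masked_cross_entropy(probs, tokens, positions)
```

With a uniform prediction the loss equals the logarithm of the codebook size, which is a convenient baseline that a trained model should beat.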

Finetuning Foundation Models for Joint Analysis Optimization

Jan 25, 2024

Abstract: In this work we demonstrate that significant gains in performance and data efficiency can be achieved in High Energy Physics (HEP) by moving beyond the standard paradigm of sequential optimization of reconstruction and analysis components. We conceptually connect HEP reconstruction and analysis to modern machine learning workflows such as pretraining, finetuning, domain adaptation, and high-dimensional embedding spaces, and quantify the gains in the example use case of searches for heavy resonances decaying via an intermediate di-Higgs system to four $b$-jets.

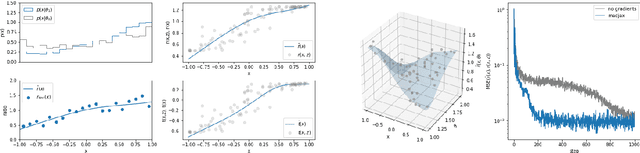

Branches of a Tree: Taking Derivatives of Programs with Discrete and Branching Randomness in High Energy Physics

Aug 31, 2023

Abstract: We propose to apply several gradient estimation techniques to enable the differentiation of programs with discrete randomness in High Energy Physics. Such programs are common in High Energy Physics due to the presence of branching processes and clustering-based analysis. Thus, differentiating such programs can open the way for gradient-based optimization in the context of detector design optimization, simulator tuning, or data analysis and reconstruction optimization. We discuss several possible gradient estimation strategies, including the recent Stochastic AD method, and compare them in simplified detector design experiments. In doing so we develop, to the best of our knowledge, the first fully differentiable branching program.
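As one concrete instance of gradient estimation through discrete randomness, the sketch below uses the standard score-function (REINFORCE) estimator on a single Bernoulli branch. This is a textbook estimator chosen for illustration; it is not the Stochastic AD method named in the abstract, and the function `f`, the probability `p=0.3`, and the sample count are assumptions.

```python
import random


def score_function_gradient(f, p, n_samples, rng):
    """Estimate d/dp E[f(X)] for X ~ Bernoulli(p) with the score-function estimator."""
    total = 0.0
    for _ in range(n_samples):
        x = 1 if rng.random() < p else 0
        # d/dp log P(x; p) is 1/p when x == 1 and -1/(1 - p) when x == 0.
        score = 1.0 / p if x == 1 else -1.0 / (1.0 - p)
        total += f(x) * score
    return total / n_samples


def f(x):
    # Hypothetical downstream quantity that depends on which branch was taken.
    return 3.0 if x == 1 else 1.0


rng = random.Random(1234)
estimate = score_function_gradient(f, p=0.3, n_samples=200_000, rng=rng)

# E[f] = p * f(1) + (1 - p) * f(0), so the exact derivative is f(1) - f(0).
exact = f(1) - f(0)
```

The estimator is unbiased but noisy, which is one reason to compare several gradient estimation strategies rather than rely on a single one.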

Hierarchical Neural Simulation-Based Inference Over Event Ensembles

Jun 21, 2023

Abstract: When analyzing real-world data it is common to work with event ensembles, which comprise sets of observations that collectively constrain the parameters of an underlying model of interest. Such models often have a hierarchical structure, where "local" parameters impact individual events and "global" parameters influence the entire dataset. We introduce practical approaches for optimal dataset-wide probabilistic inference in cases where the likelihood is intractable, but simulations can be realized via forward modeling. We construct neural estimators for the likelihood(-ratio) or posterior and show that explicitly accounting for the model's hierarchical structure can lead to tighter parameter constraints. We ground our discussion using case studies from the physical sciences, focusing on examples from particle physics (particle collider data) and astrophysics (strong gravitational lensing observations).
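The hierarchical structure can be written out explicitly. As a sketch under assumed notation (not taken from the paper), let $\theta$ denote the global parameters, $z_i$ the local parameters of event $i$, and $x_i$ the observed events, with events taken to be conditionally independent given $\theta$:

$$
p\big(\{x_i\}_{i=1}^{N} \mid \theta\big) = \prod_{i=1}^{N} \int p(x_i \mid z_i, \theta)\, p(z_i \mid \theta)\, \mathrm{d}z_i
$$

Under the same assumption, the dataset-wide log-likelihood ratio between two parameter points is a sum of per-event terms:

$$
\log \frac{p(\{x_i\} \mid \theta_0)}{p(\{x_i\} \mid \theta_1)} = \sum_{i=1}^{N} \log \frac{p(x_i \mid \theta_0)}{p(x_i \mid \theta_1)}
$$

The integrals over $z_i$ are what typically make the likelihood intractable, so neural estimators are trained on simulated events instead of evaluating them directly.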

Configurable calorimeter simulation for AI applications

Mar 08, 2023

Abstract: A configurable calorimeter simulation for AI (COCOA) applications is presented, based on the Geant4 toolkit and interfaced with the Pythia event generator. This open-source project aims to support the development of machine learning algorithms in high energy physics that rely on realistic particle shower descriptions, such as reconstruction, fast simulation, and low-level analysis. Specifications such as the granularity and material of its nearly hermetic geometry are user-configurable. The tool is supplemented with simple event processing including topological clustering, jet algorithms, and a nearest-neighbors graph construction. Formatting is also provided to visualise events using the Phoenix event display software.

neos: End-to-End-Optimised Summary Statistics for High Energy Physics

Mar 10, 2022

Abstract: The advent of deep learning has yielded powerful tools to automatically compute gradients of computations. This is because training a neural network equates to iteratively updating its parameters using gradient descent to find the minimum of a loss function. Deep learning is then a subset of a broader paradigm; a workflow with free parameters that is end-to-end optimisable, provided one can keep track of the gradients all the way through. This work introduces neos: an example implementation following this paradigm of a fully differentiable high-energy physics workflow, capable of optimising a learnable summary statistic with respect to the expected sensitivity of an analysis. Doing this results in an optimisation process that is aware of the modelling and treatment of systematic uncertainties.

Differentiable Matrix Elements with MadJax

Feb 28, 2022

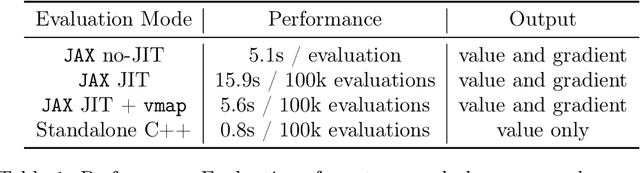

Abstract: MadJax is a tool for generating and evaluating differentiable matrix elements of high energy scattering processes. As such, it is a step towards a differentiable programming paradigm in high energy physics that facilitates the incorporation of high energy physics domain knowledge, encoded in simulation software, into gradient-based learning and optimization pipelines. MadJax comprises two components: (a) a plugin to the general purpose matrix element generator MadGraph that integrates matrix element and phase space sampling code with the JAX differentiable programming framework, and (b) a standalone wrapping API for accessing the matrix element code and its gradients, which are computed with automatic differentiation. The MadJax implementation and example applications of simulation-based inference and normalizing-flow-based matrix element modeling, with capabilities enabled uniquely with differentiable matrix elements, are presented.

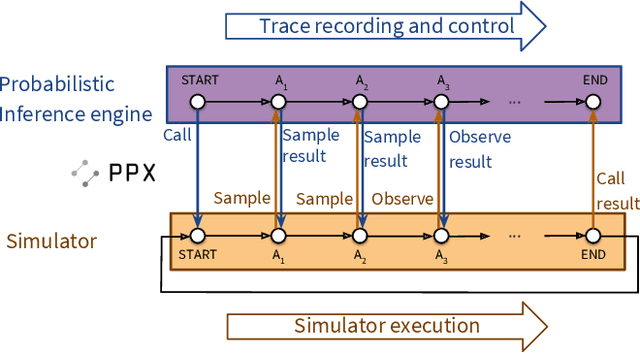

Etalumis: Bringing Probabilistic Programming to Scientific Simulators at Scale

Jul 08, 2019

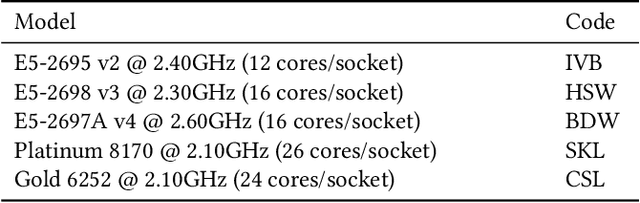

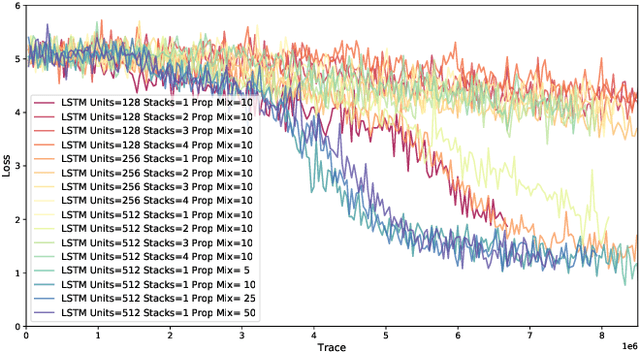

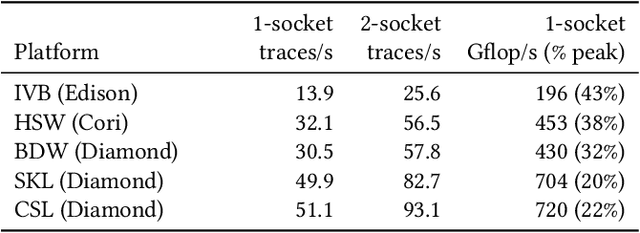

Abstract: Probabilistic programming languages (PPLs) are receiving widespread attention for performing Bayesian inference in complex generative models. However, applications to science remain limited because of the impracticability of rewriting complex scientific simulators in a PPL, the computational cost of inference, and the lack of scalable implementations. To address these, we present a novel PPL framework that couples directly to existing scientific simulators through a cross-platform probabilistic execution protocol and provides Markov chain Monte Carlo (MCMC) and deep-learning-based inference compilation (IC) engines for tractable inference. To guide IC inference, we perform distributed training of a dynamic 3DCNN-LSTM architecture with a PyTorch-MPI-based framework on 1,024 32-core CPU nodes of the Cori supercomputer with a global minibatch size of 128k, achieving a performance of 450 Tflop/s through enhancements to PyTorch. We demonstrate a Large Hadron Collider (LHC) use case with the C++ Sherpa simulator and achieve the largest-scale posterior inference in a Turing-complete PPL.
