Philipp Windischhofer

Hierarchical Neural Simulation-Based Inference Over Event Ensembles

Jun 21, 2023

Abstract: When analyzing real-world data it is common to work with event ensembles, which comprise sets of observations that collectively constrain the parameters of an underlying model of interest. Such models often have a hierarchical structure, where "local" parameters impact individual events and "global" parameters influence the entire dataset. We introduce practical approaches for optimal dataset-wide probabilistic inference in cases where the likelihood is intractable, but simulations can be realized via forward modeling. We construct neural estimators for the likelihood(-ratio) or posterior and show that explicitly accounting for the model's hierarchical structure can lead to tighter parameter constraints. We ground our discussion using case studies from the physical sciences, focusing on examples from particle physics (particle collider data) and astrophysics (strong gravitational lensing observations).

Transport away your problems: Calibrating stochastic simulations with optimal transport

Jul 19, 2021

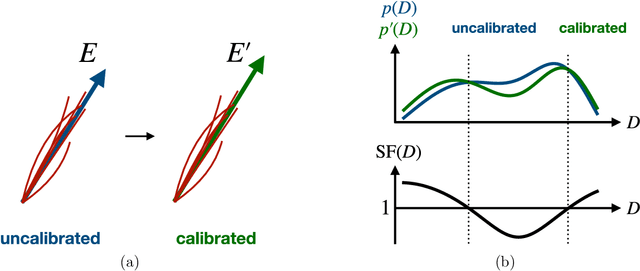

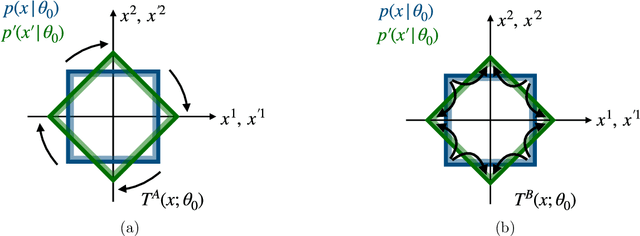

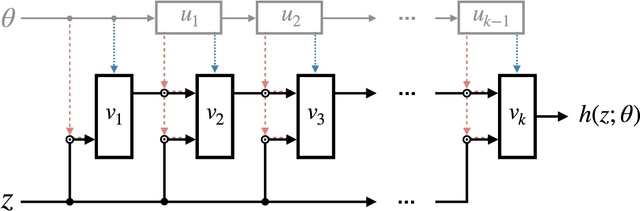

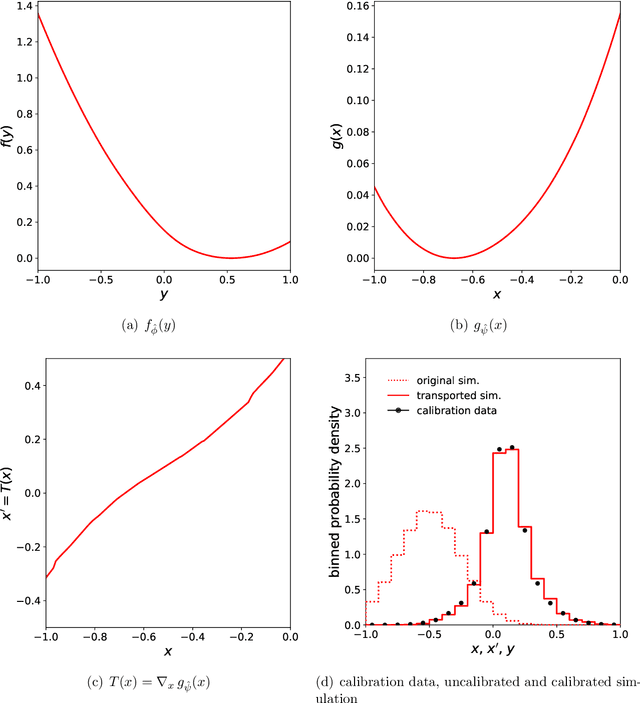

Abstract: Stochastic simulators are an indispensable tool in many branches of science. Often based on first principles, they deliver a series of samples whose distribution implicitly defines a probability measure to describe the phenomena of interest. However, the fidelity of these simulators is not always sufficient for all scientific purposes, necessitating the construction of ad-hoc corrections to "calibrate" the simulation and ensure that its output is a faithful representation of reality. In this paper, we leverage methods from transportation theory to construct such corrections in a systematic way. We use a neural network to compute minimal modifications to the individual samples produced by the simulator such that the resulting distribution becomes properly calibrated. We illustrate the method and its benefits in the context of experimental particle physics, where the need for calibrated stochastic simulators is particularly pronounced.
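As a rough illustration of what a "minimal modification" of simulated samples can look like, the sketch below treats the one-dimensional case. There, the optimal transport map for a convex cost is known in closed form as the monotone rearrangement T = F_target^{-1} ∘ F_sim, so no neural network is needed. This is a simplified stand-in, not the paper's method: the function name `quantile_transport_map`, the Gaussian toy distributions, and the sample sizes are all assumptions made for the example.

```python
import numpy as np


def quantile_transport_map(sim, target):
    """Build a 1D calibration map from simulated to target samples.

    Assumption: one-dimensional data and a convex transport cost, for which
    the optimal map is the monotone rearrangement F_target^{-1} o F_sim.
    Both functions are estimated from empirical quantiles.
    """
    sim_sorted = np.sort(np.asarray(sim, dtype=float))
    target_sorted = np.sort(np.asarray(target, dtype=float))
    # Probability level attached to each sorted sample (midpoint convention).
    sim_levels = (np.arange(sim_sorted.size) + 0.5) / sim_sorted.size
    target_levels = (np.arange(target_sorted.size) + 0.5) / target_sorted.size

    def transport(x):
        # Empirical CDF of the simulation, evaluated at x ...
        u = np.interp(x, sim_sorted, sim_levels)
        # ... followed by the empirical quantile function of the target.
        return np.interp(u, target_levels, target_sorted)

    return transport


# Hypothetical toy setup: a simulator that is shifted and too narrow
# compared with the calibration data.
rng = np.random.default_rng(0)
simulated = rng.normal(loc=0.0, scale=1.0, size=20000)
observed = rng.normal(loc=0.3, scale=1.2, size=20000)

calibrate = quantile_transport_map(simulated, observed)
calibrated = calibrate(simulated)
```

Because the map is monotone, it preserves the ordering of the simulated samples while moving each one only as far as needed to match the target distribution. In higher dimensions no such closed form exists in general, which is where a learned map of the kind described in the abstract becomes useful.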

Preserving physically important variables in optimal event selections: A case study in Higgs physics

Jul 03, 2019

Abstract: Analyses of collider data, often assisted by modern Machine Learning methods, condense a number of observables into a few powerful discriminants for the separation of the targeted signal process from the contributing backgrounds. These discriminants are highly correlated with important physical observables; using them in the event selection thus leads to the distortion of physically relevant distributions. We present an alternative event selection strategy, based on adversarially trained classifiers, that exploits the discriminating power contained in many event variables, but preserves the distributions of selected observables. This method is implemented and evaluated for the case of a Standard Model Higgs boson decaying into a pair of bottom quarks. Compared to a cut-based approach, it leads to a significant improvement in analysis sensitivity and retains the shapes of the relevant distributions to a greater extent.
