Elena Cuoco

Strategic White Paper on AI Infrastructure for Particle, Nuclear, and Astroparticle Physics: Insights from JENA and EuCAIF

Mar 18, 2025

Abstract: Artificial intelligence (AI) is transforming scientific research, with deep learning methods playing a central role in data analysis, simulations, and signal detection across particle, nuclear, and astroparticle physics. Within the JENA communities (ECFA, NuPECC, and APPEC) and as part of the EuCAIF initiative, AI integration is advancing steadily. However, broader adoption remains constrained by challenges such as limited computational resources, a lack of expertise, and difficulties in transitioning from research and development (R&D) to production. This white paper provides a strategic roadmap, informed by a community survey, to address these barriers. It outlines critical infrastructure requirements, prioritizes training initiatives, and proposes funding strategies to scale AI capabilities across fundamental physics over the next five years.

LSTM and CNN application for core-collapse supernova search in gravitational wave real data

Jan 23, 2023

Abstract: Context. Core-collapse supernovae (CCSNe) are expected to emit gravitational wave signals that could be detected by current and future generation interferometers within the Milky Way and nearby galaxies. The stochastic nature of the signal arising from CCSNe requires alternative detection methods to matched filtering. Aims. We aim to show the potential of machine learning (ML) for multi-label classification of different CCSNe simulated signals and noise transients using real data. We compared the performance of 1D and 2D convolutional neural networks (CNNs) on single and multiple detector data. For the first time, we also tested multi-label classification with long short-term memory (LSTM) networks. Methods. We applied a search and classification procedure for CCSNe signals, using an event trigger generator, the Wavelet Detection Filter (WDF), coupled with ML. We used time series and time-frequency representations of the data as inputs to the ML models. To compute classification accuracies, we simultaneously injected, at a detectable distance of 1 kpc, CCSN waveforms, obtained from recent hydrodynamical simulations of neutrino-driven core-collapse, onto interferometer noise from the O2 LIGO and Virgo science run. Results. We compared the performance of the three models on single detector data. We then merged the output of the models for single detector classification of noise and astrophysical transients, obtaining overall accuracies of $\sim99\%$ for LIGO and $\sim80\%$ for Virgo. We extended our analysis to the multi-detector case using triggers coincident among the three interferometers (ITFs) and achieved an accuracy of $\sim98\%$.

* 10 pages, 13 figures. Accepted by A&A journal
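The multi-detector step in the abstract above relies on triggers that are coincident among the three interferometers. The abstract does not state how coincidence is defined, so the following is only a minimal sketch of one common approach: keep a reference-detector trigger if each of the other two detectors has a trigger within a fixed time window. The function names and the window value are hypothetical and are not taken from the paper or from WDF.

```python
from bisect import bisect_left


def has_trigger_near(sorted_times, t, window):
    """Return True if any entry of sorted_times lies within `window` seconds of t."""
    i = bisect_left(sorted_times, t - window)  # first trigger at or after t - window
    return i < len(sorted_times) and sorted_times[i] <= t + window


def triple_coincidences(ref, det_b, det_c, window):
    """Keep the reference triggers that have a partner in both other detectors.

    Assumption: coincidence is tested against the reference detector only, so
    the partners in det_b and det_c may differ from each other by up to
    2 * window. A real pipeline would also account for light travel time
    between sites.
    """
    b, c = sorted(det_b), sorted(det_c)
    return [
        t
        for t in sorted(ref)
        if has_trigger_near(b, t, window) and has_trigger_near(c, t, window)
    ]


# Hypothetical trigger times in seconds, for illustration only.
ref_times = [10.000, 20.000, 30.000]
b_times = [10.004, 25.000, 30.020]
c_times = [9.995, 20.001, 30.001]
coincident = triple_coincidences(ref_times, b_times, c_times, window=0.01)
```

Here only the trigger at 10.000 s survives: the one at 20.000 s has no partner in the second detector, and the one at 30.000 s has a partner that falls outside the 10 ms window.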

MLGWSC-1: The first Machine Learning Gravitational-Wave Search Mock Data Challenge

Sep 22, 2022

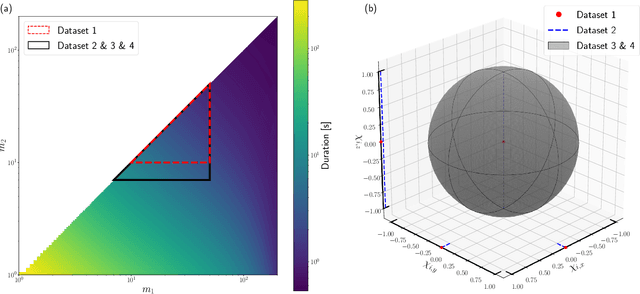

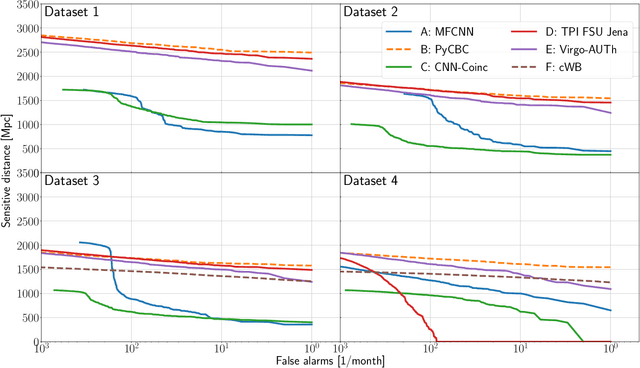

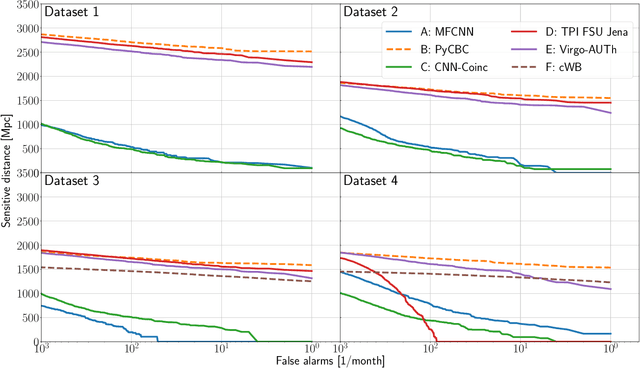

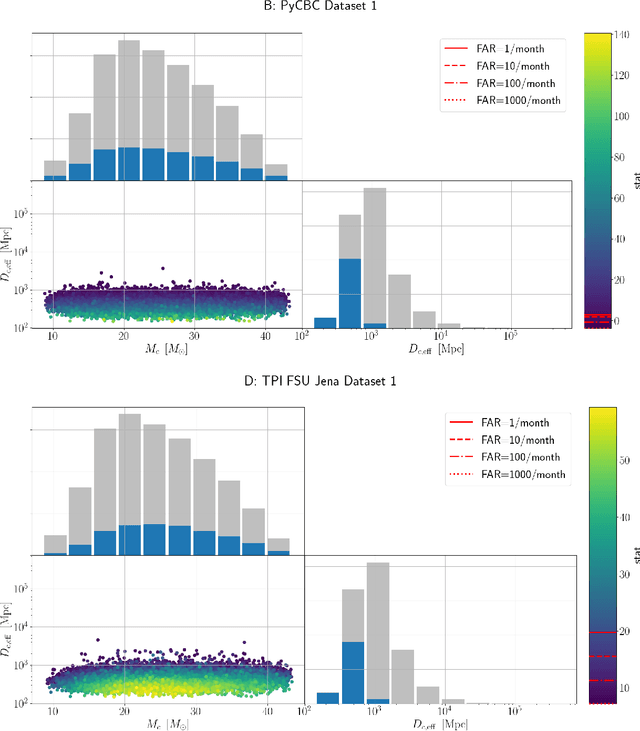

Abstract: We present the results of the first Machine Learning Gravitational-Wave Search Mock Data Challenge (MLGWSC-1). For this challenge, participating groups had to identify gravitational-wave signals from binary black hole mergers of increasing complexity and duration embedded in progressively more realistic noise. The final of the 4 provided datasets contained real noise from the O3a observing run and signals up to a duration of 20 seconds with the inclusion of precession effects and higher order modes. We present the average sensitivity distance and runtime for the 6 entered algorithms derived from 1 month of test data unknown to the participants prior to submission. Of these, 4 are machine learning algorithms. We find that the best machine learning based algorithms are able to achieve up to 95% of the sensitive distance of matched-filtering based production analyses for simulated Gaussian noise at a false-alarm rate (FAR) of one per month. In contrast, for real noise, the leading machine learning search achieved 70%. For higher FARs the differences in sensitive distance shrink to the point where select machine learning submissions outperform traditional search algorithms at FARs $\geq 200$ per month on some datasets. Our results show that current machine learning search algorithms may already be sensitive enough in limited parameter regions to be useful for some production settings. To improve the state-of-the-art, machine learning algorithms need to reduce the false-alarm rates at which they are capable of detecting signals and extend their validity to regions of parameter space where modeled searches are computationally expensive to run. Based on our findings we compile a list of research areas that we believe are the most important to elevate machine learning searches to an invaluable tool in gravitational-wave signal detection.
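The comparison above is made at fixed false-alarm rates, such as one per month. As a rough illustration of what that means operationally, the sketch below picks a ranking-statistic threshold from a set of background (noise-only) events so that the number of louder events does not exceed the chosen rate. It is not the challenge's evaluation code. The function names, the 30-day month, and the sample values are all assumptions made for the example.

```python
import math

SECONDS_PER_MONTH = 30 * 86400  # assumption: a month is taken as 30 days


def threshold_at_far(background_stats, duration_s, far_per_month):
    """Return a threshold such that the background events strictly above it
    occur at a rate no higher than `far_per_month`."""
    ranked = sorted(background_stats, reverse=True)  # loudest event first
    # Number of false alarms tolerated over the analysed duration.
    allowed = math.floor(far_per_month * duration_s / SECONDS_PER_MONTH)
    if allowed >= len(ranked):
        return float("-inf")  # every background event is tolerated
    # At most `allowed` events are strictly louder than ranked[allowed].
    return ranked[allowed]


def false_alarm_rate(background_stats, duration_s, threshold):
    """False alarms per month for events strictly above `threshold`."""
    n_louder = sum(1 for s in background_stats if s > threshold)
    return n_louder / (duration_s / SECONDS_PER_MONTH)


# Hypothetical background: ten events found in two months of noise-only data.
background = list(range(1, 11))
duration = 2 * SECONDS_PER_MONTH
thr = threshold_at_far(background, duration, far_per_month=1.0)
far = false_alarm_rate(background, duration, thr)
```

With these made-up numbers the threshold is 8, leaving two louder events in two months, or one false alarm per month. Lowering the permitted rate raises the threshold, which is why sensitivity at low FAR is the harder target.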

Core-Collapse Supernova Gravitational-Wave Search and Deep Learning Classification

Jan 01, 2020

Abstract: We describe a search and classification procedure for gravitational waves emitted by core-collapse supernova (CCSN) explosions, using a convolutional neural network (CNN) combined with an event trigger generator known as Wavelet Detection Filter (WDF). We employ both a 1-D CNN search using time series gravitational-wave data as input, and a 2-D CNN search with time-frequency representation of the data as input. To test the accuracies of our 1-D and 2-D CNN classification, we add CCSN waveforms from the most recent hydrodynamical simulations of neutrino-driven core-collapse to simulated Gaussian colored noise with the Virgo interferometer and the planned Einstein Telescope sensitivity curve. We find classification accuracies, for a single detector, of over 95% for both 1-D and 2-D CNN pipelines. For the first time in machine learning CCSN studies, we add short duration detector noise transients to our data to test the robustness of our method against false alarms created by detector noise artifacts. Further to this, we show that the CNN can distinguish between different types of CCSN waveform models.

Image-based deep learning for classification of noise transients in gravitational wave detectors

Mar 27, 2018

Abstract: The detection of gravitational waves has inaugurated the era of gravitational astronomy and opened new avenues for the multimessenger study of cosmic sources. Thanks to their sensitivity, the Advanced LIGO and Advanced Virgo interferometers will probe a much larger volume of space and expand the capability of discovering new gravitational wave emitters. The characterization of these detectors is a primary task in order to recognize the main sources of noise and optimize the sensitivity of interferometers. Glitches are transient noise events that can impact the data quality of the interferometers and their classification is an important task for detector characterization. Deep learning techniques are a promising tool for the recognition and classification of glitches. We present a classification pipeline that exploits convolutional neural networks to classify glitches starting from their time-frequency evolution represented as images. We evaluated the classification accuracy on simulated glitches, showing that the proposed algorithm can automatically classify glitches on very fast timescales and with high accuracy, thus providing a promising tool for online detector characterization.

* 25 pages, 8 figures, accepted for publication in Classical and Quantum Gravity