Zhixiang Ren

Pseudodata-guided Invariant Representation Learning Boosts the Out-of-Distribution Generalization in Enzymatic Kinetic Parameter Prediction

Jan 12, 2026

Abstract:Accurate prediction of enzyme kinetic parameters is essential for understanding catalytic mechanisms and guiding enzyme engineering. However, existing deep learning-based enzyme-substrate interaction (ESI) predictors often exhibit performance degradation on sequence-divergent, out-of-distribution (OOD) cases, limiting robustness under biologically relevant perturbations. We propose O$^2$DENet, a lightweight, plug-and-play module that enhances OOD generalization via biologically and chemically informed perturbation augmentation and invariant representation learning. O$^2$DENet introduces enzyme-substrate perturbations and enforces consistency between original and augmented enzyme-substrate-pair representations to encourage invariance to distributional shifts. When integrated with representative ESI models, O$^2$DENet consistently improves predictive performance for both $k_{cat}$ and $K_m$ across stringent sequence-identity-based OOD benchmarks, achieving state-of-the-art results among the evaluated methods in terms of accuracy and robustness metrics. Overall, O$^2$DENet provides a general and effective strategy to enhance the stability and deployability of data-driven enzyme kinetics predictors for real-world enzyme engineering applications.
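The consistency idea in the abstract above can be illustrated with a small sketch. The abstract does not state the actual loss used by O$^2$DENet, so everything here is an assumption: the function names (`mse`, `consistency_penalty`, `total_loss`), the squared-distance penalty, and the weight `lam` are hypothetical stand-ins for "a prediction loss plus a term that pulls original and perturbed pair embeddings together".

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between predicted and measured kinetic values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def consistency_penalty(
    z_orig: Sequence[Sequence[float]],
    z_aug: Sequence[Sequence[float]],
) -> float:
    """Mean squared Euclidean distance between each original embedding
    and the embedding of its perturbed (augmented) counterpart."""
    total = 0.0
    for a, b in zip(z_orig, z_aug):
        total += sum((x - y) ** 2 for x, y in zip(a, b))
    return total / len(z_orig)


def total_loss(
    pred: Sequence[float],
    target: Sequence[float],
    z_orig: Sequence[Sequence[float]],
    z_aug: Sequence[Sequence[float]],
    lam: float = 0.1,  # hypothetical trade-off weight, not from the paper
) -> float:
    """Prediction loss plus a weighted invariance (consistency) term."""
    return mse(pred, target) + lam * consistency_penalty(z_orig, z_aug)
```

When the perturbed embeddings equal the originals the penalty is zero, so the sketch reduces to the plain regression loss; a larger `lam` trades fit for invariance.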

Concept-Driven Deep Learning for Enhanced Protein-Specific Molecular Generation

Mar 11, 2025

Abstract:In recent years, deep learning techniques have made significant strides in molecular generation for specific targets, driving advancements in drug discovery. However, existing molecular generation methods present significant limitations: those operating at the atomic level often lack synthetic feasibility, drug-likeness, and interpretability, while fragment-based approaches frequently overlook comprehensive factors that influence protein-molecule interactions. To address these challenges, we propose a novel fragment-based molecular generation framework tailored for specific proteins. Our method begins by constructing a protein subpocket and molecular arm concept-based neural network, which systematically integrates interaction force information and geometric complementarity to sample molecular arms for specific protein subpockets. Subsequently, we introduce a diffusion model to generate molecular backbones that connect these arms, ensuring structural integrity and chemical diversity. Our approach significantly improves synthetic feasibility and binding affinity, with a 4% increase in drug-likeness and a 6% improvement in synthetic feasibility. Furthermore, by integrating explicit interaction data through a concept-based model, our framework enhances interpretability, offering valuable insights into the molecular design process.

Deep Learning in Single-Cell and Spatial Transcriptomics Data Analysis: Advances and Challenges from a Data Science Perspective

Dec 04, 2024

Abstract:The development of single-cell and spatial transcriptomics has revolutionized our capacity to investigate cellular properties, functions, and interactions in both cellular and spatial contexts. However, the analysis of single-cell and spatial omics data remains challenging. First, single-cell sequencing data are high-dimensional and sparse, often contaminated by noise and uncertainty, obscuring the underlying biological signals. Second, these data often encompass multiple modalities, including gene expression, epigenetic modifications, and spatial locations. Integrating these diverse data modalities is crucial for enhancing prediction accuracy and biological interpretability. Third, while the scale of single-cell sequencing has expanded to millions of cells, high-quality annotated datasets are still limited. Fourth, the complex correlations of biological tissues make it difficult to accurately reconstruct cellular states and spatial contexts. Traditional feature engineering-based analysis methods struggle to deal with the various challenges presented by intricate biological networks. Deep learning has emerged as a powerful tool capable of handling high-dimensional complex data and automatically identifying meaningful patterns, offering significant promise in addressing these challenges. This review systematically analyzes these challenges and discusses related deep learning approaches. Moreover, we have curated 21 datasets from 9 benchmarks, encompassing 58 computational methods, and evaluated their performance on the respective modeling tasks. Finally, we highlight three areas for future development from a technical, dataset, and application perspective. This work will serve as a valuable resource for understanding how deep learning can be effectively utilized in single-cell and spatial transcriptomics analyses, while inspiring novel approaches to address emerging challenges.

ProtFAD: Introducing function-aware domains as implicit modality towards protein function perception

May 24, 2024

Abstract:Protein function prediction is currently achieved by encoding its sequence or structure, where the sequence-to-function transcendence and high-quality structural data scarcity lead to obvious performance bottlenecks. Protein domains are "building blocks" of proteins that are functionally independent, and their combinations determine the diverse biological functions. However, most existing studies have yet to thoroughly explore the intricate functional information contained in the protein domains. To fill this gap, we propose a synergistic integration approach for a function-aware domain representation, and a domain-joint contrastive learning strategy to distinguish different protein functions while aligning the modalities. Specifically, we associate domains with the GO terms as function priors to pre-train domain embeddings. Furthermore, we partition proteins into multiple sub-views based on continuous joint domains for contrastive training under the supervision of a novel triplet InfoNCE loss. Our approach significantly and comprehensively outperforms the state-of-the-art methods on various benchmarks, and clearly differentiates proteins carrying distinct functions compared to the competitor.
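For readers unfamiliar with the contrastive objective mentioned above, the following is a minimal sketch of the standard InfoNCE loss for a single anchor. It is not the paper's "triplet InfoNCE" variant, whose exact form the abstract does not give; the function names and the `temperature` default are illustrative assumptions.

```python
import math
from typing import Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def info_nce(
    anchor: Sequence[float],
    positive: Sequence[float],
    negatives: Sequence[Sequence[float]],
    temperature: float = 0.1,  # illustrative default, not from the paper
) -> float:
    """Standard InfoNCE: negative log-softmax of the positive pair's
    similarity against the positive plus all negative similarities."""
    pos = cosine(anchor, positive) / temperature
    logits = [pos] + [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max for numerical stability
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denom - pos
```

The loss is small when the anchor is closer to its positive than to any negative, and grows when a negative is the nearer neighbour, which is the behaviour a sub-view contrastive scheme relies on.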

A Self-feedback Knowledge Elicitation Approach for Chemical Reaction Predictions

Apr 15, 2024

Abstract:The task of chemical reaction predictions (CRPs) plays a pivotal role in advancing drug discovery and material science. However, its effectiveness is constrained by the vast and uncertain chemical reaction space and challenges in capturing reaction selectivity, particularly due to existing methods' limitations in exploiting the data's inherent knowledge. To address these challenges, we introduce a data-curated self-feedback knowledge elicitation approach. This method starts from iterative optimization of molecular representations and facilitates the extraction of knowledge on chemical reaction types (RTs). Then, we employ adaptive prompt learning to infuse the prior knowledge into the large language model (LLM). As a result, we achieve significant enhancements: a 14.2% increase in retrosynthesis prediction accuracy, a 74.2% rise in reagent prediction accuracy, and an expansion in the model's capability for handling multi-task chemical reactions. This research offers a novel paradigm for knowledge elicitation in scientific research and showcases the untapped potential of LLMs in CRPs.

The Impact of Domain Knowledge and Multi-Modality on Intelligent Molecular Property Prediction: A Systematic Survey

Feb 18, 2024

Abstract:The precise prediction of molecular properties is essential for advancements in drug development, particularly in virtual screening and compound optimization. The recent introduction of numerous deep learning-based methods has shown remarkable potential in enhancing molecular property prediction (MPP), especially in improving accuracy and insights into molecular structures. Yet two critical questions arise: does the integration of domain knowledge improve the accuracy of molecular property prediction, and does multi-modal data fusion yield more precise results than single-source methods? To explore these questions, we comprehensively review and quantitatively analyze recent deep learning methods based on various benchmarks. We find that integrating molecular information improves MPP regression and classification tasks by up to 3.98% and 1.72%, respectively. We also find that using 3-dimensional information together with 1-dimensional and 2-dimensional information can enhance MPP by up to 4.2%. These two insights offer crucial guidance for future advancements in drug discovery.

Scientific Language Modeling: A Quantitative Review of Large Language Models in Molecular Science

Feb 06, 2024

Abstract:Efficient molecular modeling and design are crucial for the discovery and exploration of novel molecules, and the incorporation of deep learning methods has revolutionized this field. In particular, large language models (LLMs) offer a fresh approach to tackle scientific problems from a natural language processing (NLP) perspective, introducing a research paradigm called scientific language modeling (SLM). However, two key issues remain: how to quantify the match between model and data modalities and how to identify the knowledge-learning preferences of models. To address these challenges, we propose a multi-modal benchmark, named ChEBI-20-MM, and perform 1263 experiments to assess the model's compatibility with data modalities and knowledge acquisition. Through the modal transition probability matrix, we provide insights into the most suitable modalities for tasks. Furthermore, we introduce a statistically interpretable approach to discover context-specific knowledge mapping by localized feature filtering. Our pioneering analysis offers an exploration of the learning mechanism and paves the way for advancing SLM in molecular science.

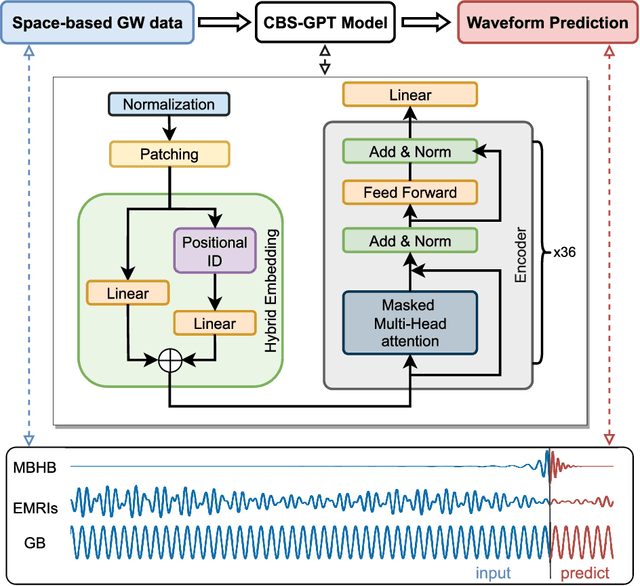

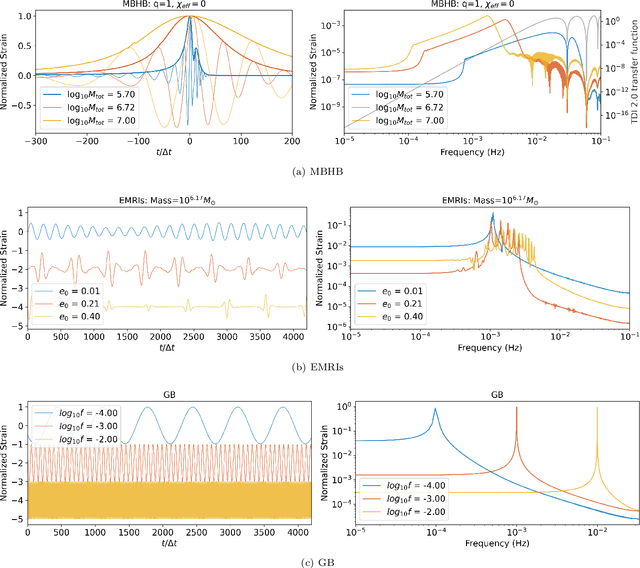

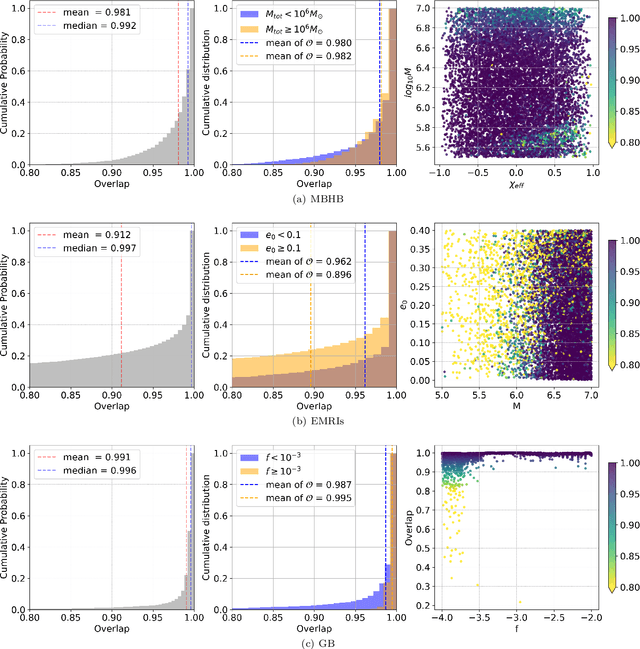

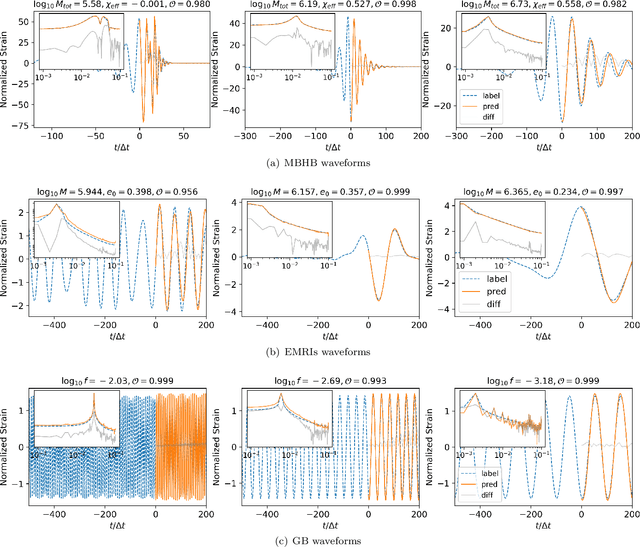

Compact Binary Systems Waveform Generation with Generative Pre-trained Transformer

Oct 31, 2023

Abstract:Space-based gravitational wave detection is one of the most anticipated gravitational wave (GW) detection projects in the next decade, which will detect abundant compact binary systems. However, the precise prediction of space GW waveforms remains unexplored. To solve the data processing difficulty in the increasing waveform complexity caused by detectors' response and second-generation time-delay interferometry (TDI 2.0), an interpretable pre-trained large model named CBS-GPT (Compact Binary Systems Waveform Generation with Generative Pre-trained Transformer) is proposed. For compact binary system waveforms, three models were trained to predict the waveforms of massive black hole binary (MBHB), extreme mass-ratio inspirals (EMRIs), and galactic binary (GB), achieving prediction accuracies of 98%, 91%, and 99%, respectively. The CBS-GPT model exhibits notable interpretability, with its hidden parameters effectively capturing the intricate information of waveforms, even with complex instrument response and a wide parameter range. Our research demonstrates the potential of large pre-trained models in gravitational wave data processing, opening up new opportunities for future tasks such as gap completion, GW signal detection, and signal noise reduction.

3D-Mol: A Novel Contrastive Learning Framework for Molecular Property Prediction with 3D Information

Sep 28, 2023

Abstract:Molecular property prediction offers an effective and efficient approach for early screening and optimization of drug candidates. Although deep learning based methods have made notable progress, most existing works still do not fully utilize 3D spatial information. This can lead to a single molecular representation representing multiple actual molecules. To address these issues, we propose a novel 3D structure-based molecular modeling method named 3D-Mol. In order to accurately represent complete spatial structure, we design a novel encoder to extract 3D features by deconstructing the molecules into three geometric graphs. In addition, we use 20M unlabeled data to pretrain our model by contrastive learning. We consider conformations with the same topological structure as positive pairs and the opposites as negative pairs, while the weight is determined by the dissimilarity between the conformations. We compare 3D-Mol with various state-of-the-art (SOTA) baselines on 7 benchmarks and demonstrate our outstanding performance in 5 benchmarks.

DECODE: DilatEd COnvolutional neural network for Detecting Extreme-mass-ratio inspirals

Aug 31, 2023

Abstract:The detection of Extreme Mass Ratio Inspirals (EMRIs) is intricate due to their complex waveforms, extended duration, and low signal-to-noise ratio (SNR), making them harder to identify than compact binary coalescences. While matched filtering-based techniques are known for their computational demands, existing deep learning-based methods primarily handle time-domain data and are often constrained by data duration and SNR. In addition, most existing work ignores time-delay interferometry (TDI) and applies the long-wavelength approximation in detector response calculations, thus limiting their ability to handle laser frequency noise. In this study, we introduce DECODE, an end-to-end model focusing on EMRI signal detection by sequence modeling in the frequency domain. Centered around a dilated causal convolutional neural network, trained on synthetic data considering TDI-1.5 detector response, DECODE can efficiently process a year's worth of multichannel TDI data with an SNR of around 50. We evaluate our model on 1-year data with accumulated SNR ranging from 50 to 120 and achieve a true positive rate of 96.3% at a false positive rate of 1%, keeping an inference time of less than 0.01 seconds. With the visualization of three showcased EMRI signals for interpretability and generalization, DECODE exhibits strong potential for future space-based gravitational wave data analyses.
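As background for the "dilated causal convolutional" building block named above, here is a generic single-channel sketch. It is not DECODE's architecture: the function names, the zero left-padding convention, and the example dilation schedule are assumptions chosen only to show why stacking dilated layers lets a network cover very long sequences.

```python
from typing import List, Sequence


def dilated_causal_conv1d(
    x: Sequence[float], kernel: Sequence[float], dilation: int
) -> List[float]:
    """Compute y[t] = sum_k kernel[k] * x[t - k * dilation].

    Samples before the start of the sequence are treated as zero, so
    y[t] never depends on inputs later than t (causality).
    """
    y: List[float] = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * x[idx]
        y.append(acc)
    return y


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Number of input samples seen by one output of a stack of
    dilated causal layers that all share the same kernel size."""
    return 1 + (kernel_size - 1) * sum(dilations)
```

With kernel size 2 and dilations doubling per layer (1, 2, 4, 8), four layers already see 16 samples, so the receptive field grows exponentially with depth while the parameter count grows only linearly.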
