Andreas Salzburger

ColliderML: The First Release of an OpenDataDetector High-Luminosity Physics Benchmark Dataset

Dec 17, 2025

Abstract: We introduce ColliderML - a large, open, experiment-agnostic dataset of fully simulated and digitised proton-proton collisions in High-Luminosity Large Hadron Collider conditions ($\sqrt{s}=14$ TeV, mean pile-up $\mu = 200$). ColliderML provides one million events across ten Standard Model and Beyond Standard Model processes, plus extensive single-particle samples, all produced with modern next-to-leading-order matrix element calculation and showering, realistic per-event pile-up overlay, a validated OpenDataDetector geometry, and standard reconstructions. The release fills a major gap for machine learning (ML) research on detector-level data and is provided on the ML-friendly Hugging Face platform. We present the physics coverage and the generation, simulation, digitisation and reconstruction pipeline, describe the format and access, and report initial collider physics benchmarks.

Combined track finding with GNN & CKF

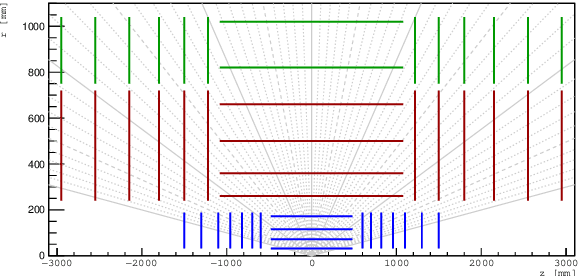

Jan 29, 2024

Abstract: The application of Graph Neural Networks (GNN) in track reconstruction is a promising approach to cope with the challenges arising at the High-Luminosity upgrade of the Large Hadron Collider (HL-LHC). GNNs show good track-finding performance in high-multiplicity scenarios and are naturally parallelizable on heterogeneous compute architectures. Typical high-energy-physics detectors have high resolution in the innermost layers to support vertex reconstruction but lower resolution in the outer parts. GNNs mainly rely on 3D space-point information, which can cause reduced track-finding performance in the outer regions. In this contribution, we present a novel combination of GNN-based track finding with the classical Combinatorial Kalman Filter (CKF) algorithm to circumvent this issue: the GNN resolves the track candidates in the inner pixel region, where 3D space points can represent measurements very well. These candidates are then picked up by the CKF in the outer regions, where the CKF performs well even for 1D measurements. Using the ACTS infrastructure, we present a proof of concept based on truth tracking in the pixels as well as a dedicated GNN pipeline trained on $t\bar{t}$ events with pile-up 200 in the OpenDataDetector.

Finding Morton-Like Layouts for Multi-Dimensional Arrays Using Evolutionary Algorithms

Sep 13, 2023

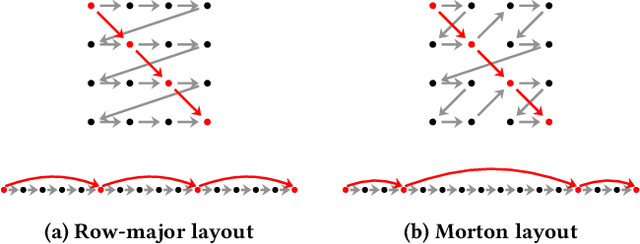

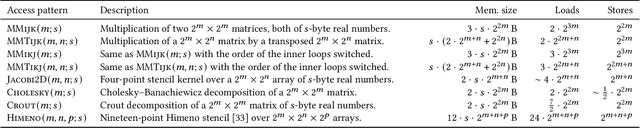

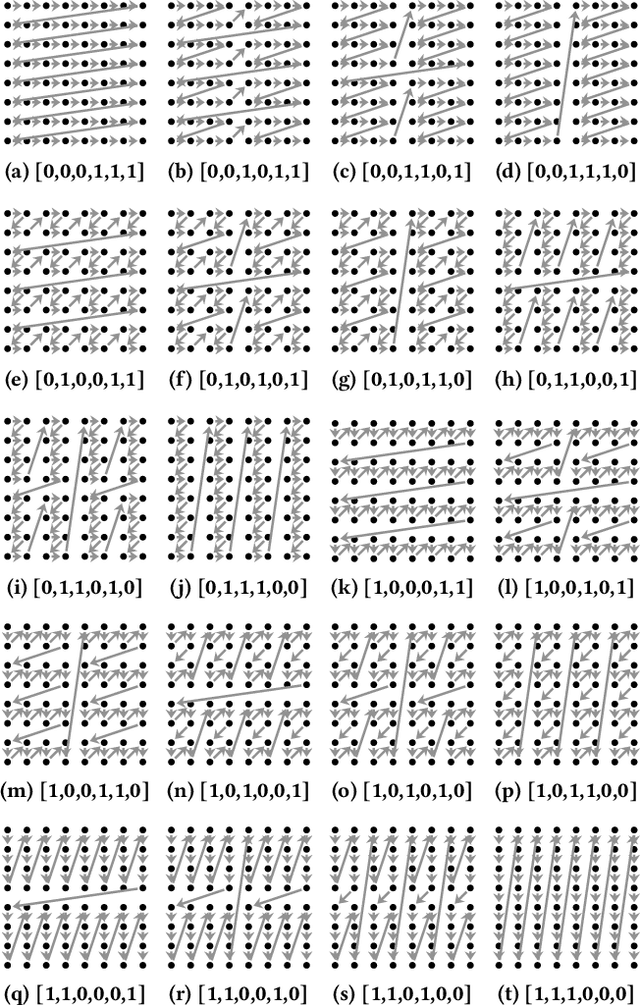

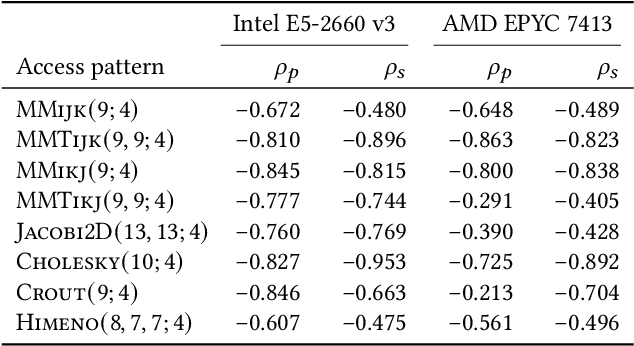

Abstract: The layout of multi-dimensional data can have a significant impact on the efficacy of hardware caches and, by extension, the performance of applications. Common multi-dimensional layouts include the canonical row-major and column-major layouts as well as the Morton curve layout. In this paper, we describe how the Morton layout can be generalized to a very large family of multi-dimensional data layouts with widely varying performance characteristics. We posit that this design space can be efficiently explored using a combinatorial evolutionary methodology based on genetic algorithms. To this end, we propose a chromosomal representation for such layouts as well as a methodology for estimating the fitness of array layouts using cache simulation. We show that our fitness function correlates with kernel running time on real hardware, and that our evolutionary strategy allows us to find candidates with favorable simulated cache properties in four out of the eight real-world applications under consideration in a small number of generations. Finally, we demonstrate that the array layouts found using our evolutionary method perform well not only in simulated environments but that they can effect significant performance gains -- up to a factor of ten in extreme cases -- on real hardware.
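
To make the idea of a "family" of Morton-like layouts concrete, here is a minimal sketch under one assumption that the abstract does not spell out: that a layout can be described by the order in which bits of the per-dimension coordinates are interleaved into a linear index. The function name `interleave_index` and the list-based `bit_order` encoding are hypothetical and chosen only for illustration; they are not claimed to be the paper's chromosomal representation.

```python
def interleave_index(coords, bit_order):
    """Map multi-dimensional coordinates to a linear index.

    bit_order lists, from the least-significant output bit upward,
    which dimension supplies the next (lowest unused) coordinate bit.
    """
    next_bit = [0] * len(coords)  # next unused bit position per dimension
    index = 0
    for out_pos, dim in enumerate(bit_order):
        bit = (coords[dim] >> next_bit[dim]) & 1
        index |= bit << out_pos
        next_bit[dim] += 1
    return index


# A 4x4 array: two bits per dimension, coordinates given as (c0, c1).
MORTON = [0, 1, 0, 1]        # bits alternate between dimensions (Z-order)
DIM0_FASTEST = [0, 0, 1, 1]  # canonical layout: index = c0 + 4 * c1
DIM1_FASTEST = [1, 1, 0, 0]  # canonical layout: index = c1 + 4 * c0

if __name__ == "__main__":
    for name, order in [("morton", MORTON),
                        ("dim0 fastest", DIM0_FASTEST),
                        ("dim1 fastest", DIM1_FASTEST)]:
        print(name, interleave_index((3, 2), order))
```

Under this reading, the two canonical layouts and the Morton layout are three members of the same family, and any other ordering of the same multiset of dimension labels gives a further valid layout that an evolutionary search could score.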

Machine learning for surface prediction in ACTS

Aug 06, 2021

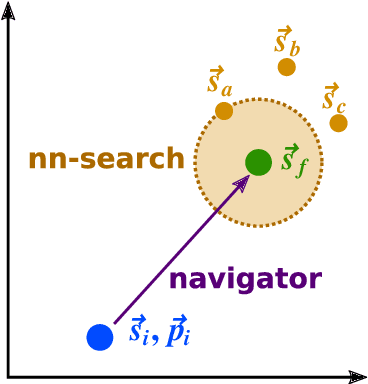

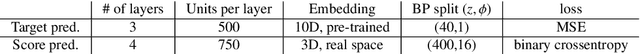

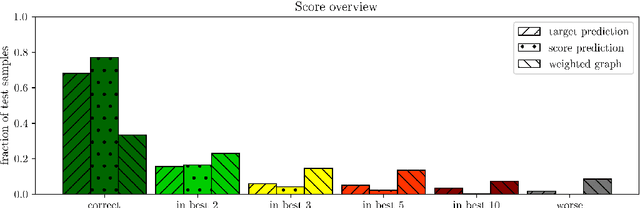

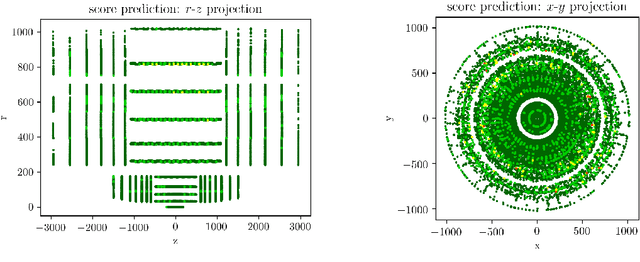

Abstract: We present an ongoing R&D activity for machine-learning-assisted navigation through detectors to be used for track reconstruction. We investigate different approaches of training neural networks for surface prediction and compare their results. This work is carried out in the context of the ACTS tracking toolkit.

The Tracking Machine Learning challenge : Throughput phase

May 14, 2021

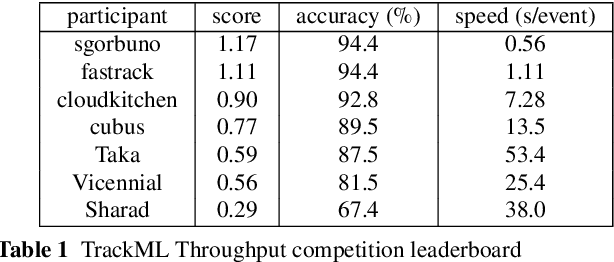

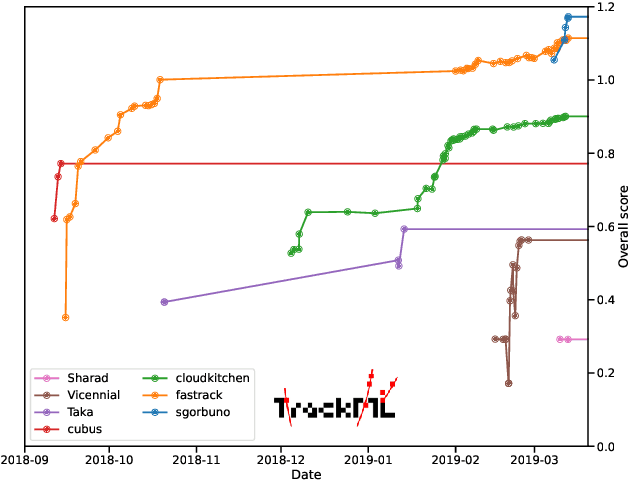

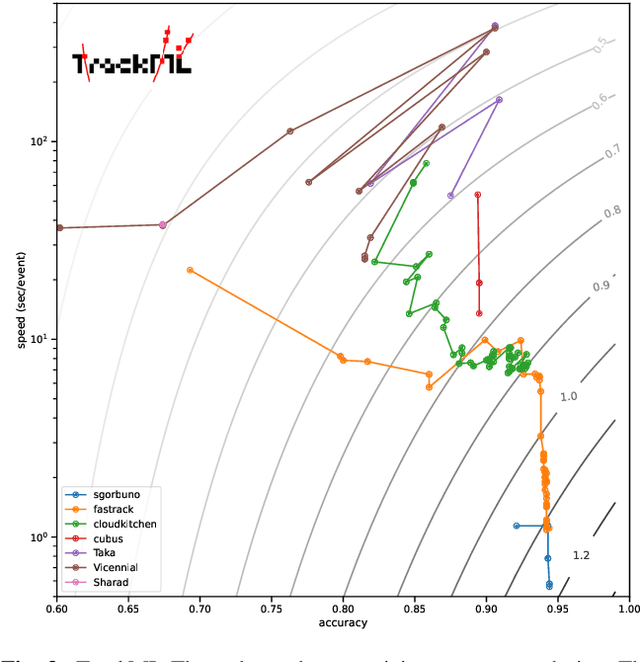

Abstract: This paper reports on the second "Throughput" phase of the Tracking Machine Learning (TrackML) challenge on the Codalab platform. As in the first "Accuracy" phase, the participants had to solve a difficult experimental problem linked to accurately tracking the trajectories of particles such as those created at the Large Hadron Collider (LHC): given O($10^5$) points, the participants had to connect them into O($10^4$) individual groups that represent the particle trajectories, which are approximately helical. While in the first phase only the accuracy mattered, the goal of this second phase was a compromise between the accuracy and the speed of inference. Both were measured on the Codalab platform, where the participants had to upload their software. The best three participants had solutions with good accuracy and speed an order of magnitude faster than the state of the art when the challenge was designed. Although the core algorithms were less diverse than in the first phase, a diversity of techniques has been used and is described in this paper. The performance of the algorithms is analysed in depth and lessons are derived.

Hashing and metric learning for charged particle tracking

Jan 16, 2021

Abstract: We propose a novel approach to charged particle tracking at high-intensity particle colliders based on Approximate Nearest Neighbors search. With hundreds of thousands of measurements per collision to be reconstructed, e.g. at the High Luminosity Large Hadron Collider, the currently employed combinatorial track finding approaches become inadequate. Here, we use hashing techniques to separate measurements into buckets of 20-50 hits and increase their purity using metric learning. Two different approaches are studied to further resolve tracks inside buckets: Local Fisher Discriminant Analysis and Neural Networks for triplet similarity learning. We demonstrate the proposed approach on simulated collisions and show a significant speed improvement, with a bucket tracking efficiency of 96% and a fake rate of 8% on unseen particle events.
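
The bucketing step can be illustrated with a small sketch. The abstract does not say which hashing scheme is used, so the code below swaps in random-hyperplane locality-sensitive hashing purely as an example of the general idea; the function names (`make_hyperplanes`, `hash_key`, `bucketize`) are hypothetical, and the sketch makes no attempt to reproduce the 20-50 hit bucket size or the metric-learning stage.

```python
import random
from collections import defaultdict


def make_hyperplanes(n_planes, dim, seed=0):
    """Draw n_planes random normal vectors in dim dimensions."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)]
            for _ in range(n_planes)]


def hash_key(point, planes):
    """One bit per hyperplane: which side of the plane the point lies on."""
    return tuple(
        int(sum(p * w for p, w in zip(point, plane)) >= 0.0)
        for plane in planes
    )


def bucketize(points, planes):
    """Group point indices by hash key; nearby directions tend to collide."""
    buckets = defaultdict(list)
    for i, point in enumerate(points):
        buckets[hash_key(point, planes)].append(i)
    return dict(buckets)


if __name__ == "__main__":
    rng = random.Random(1)
    # Toy "hits": random 3D points standing in for detector measurements.
    hits = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(200)]
    planes = make_hyperplanes(n_planes=4, dim=3)
    for key, members in sorted(bucketize(hits, planes).items()):
        print(key, len(members))
```

Each bucket can then be processed independently, which is where a speed gain over a global combinatorial search would come from; more hyperplanes give smaller buckets at the cost of splitting true neighbours more often.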
