Paolo Calafiura

Lawrence Berkeley National Laboratory

Scaling Graph Neural Networks for Particle Track Reconstruction

Apr 07, 2025Abstract:Particle track reconstruction is an important problem in high-energy physics (HEP), necessary to study properties of subatomic particles. Traditional track reconstruction algorithms scale poorly with the number of particles within the accelerator. The Exa.TrkX project, to alleviate this computational burden, introduces a pipeline that reduces particle track reconstruction to edge classification on a graph, and uses graph neural networks (GNNs) to produce particle tracks. However, this GNN-based approach is memory-prohibitive and skips graphs that would exceed GPU memory. We introduce improvements to the Exa.TrkX pipeline to train on samples of input particle graphs, and show that these improvements generalize to higher precision and recall. In addition, we adapt performance optimizations, introduced for GNN training, to fit our augmented Exa.TrkX pipeline. These optimizations provide a $2\times$ speedup over our baseline implementation in PyTorch Geometric.

FAIR Universe HiggsML Uncertainty Challenge Competition

Oct 03, 2024

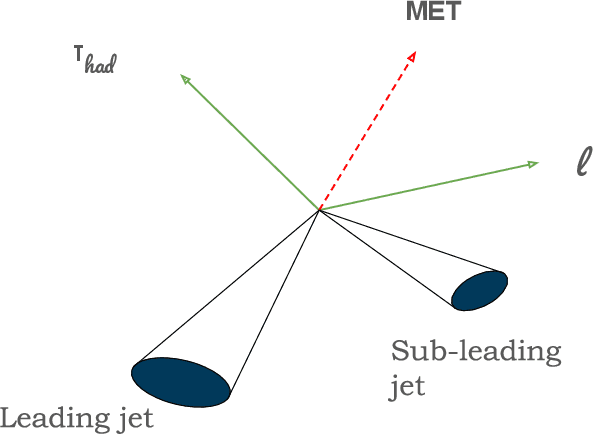

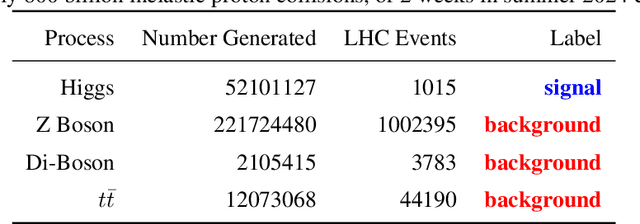

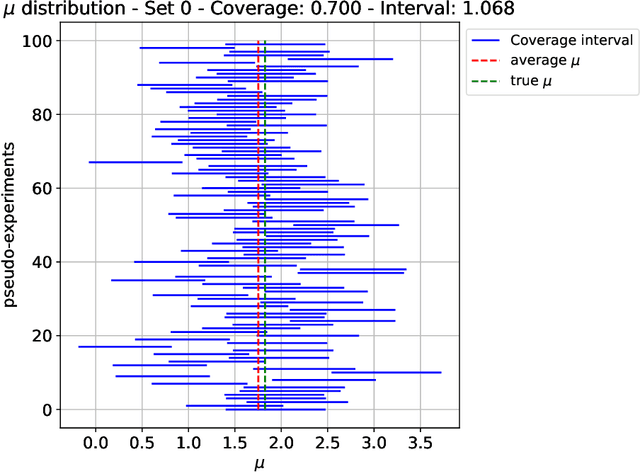

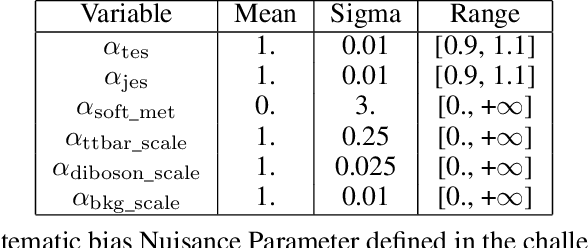

Abstract:The FAIR Universe -- HiggsML Uncertainty Challenge focuses on measuring the physics properties of elementary particles with imperfect simulators due to differences in modelling systematic errors. Additionally, the challenge is leveraging a large-compute-scale AI platform for sharing datasets, training models, and hosting machine learning competitions. Our challenge brings together the physics and machine learning communities to advance our understanding and methodologies in handling systematic (epistemic) uncertainties within AI techniques.

EggNet: An Evolving Graph-based Graph Attention Network for Particle Track Reconstruction

Jul 18, 2024

Abstract:Track reconstruction is a crucial task in particle experiments and is traditionally very computationally expensive due to its combinatorial nature. Recently, graph neural networks (GNNs) have emerged as a promising approach that can improve scalability. Most of these GNN-based methods, including the edge classification (EC) and the object condensation (OC) approach, require an input graph that needs to be constructed beforehand. In this work, we consider a one-shot OC approach that reconstructs particle tracks directly from a set of hits (point cloud) by recursively applying graph attention networks with an evolving graph structure. This approach iteratively updates the graphs and can better facilitate the message passing across each graph. Preliminary studies on the TrackML dataset show better track performance compared to the methods that require a fixed input graph.

A Language Model for Particle Tracking

Feb 14, 2024

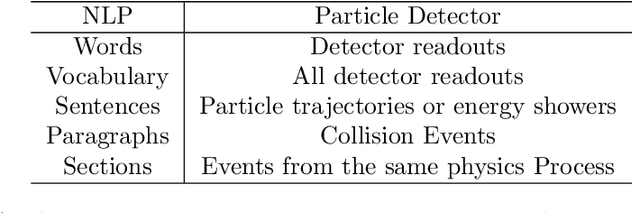

Abstract:Particle tracking is crucial for almost all physics analysis programs at the Large Hadron Collider. Deep learning models are pervasively used in particle tracking related tasks. However, the current practice is to design and train one deep learning model for one task with supervised learning techniques. The trained models work well for tasks they are trained on but show no or little generalization capabilities. We propose to unify these models with a language model. In this paper, we present a tokenized detector representation that allows us to train a BERT model for particle tracking. The trained BERT model, namely TrackingBERT, offers latent detector module embedding that can be used for other tasks. This work represents the first step towards developing a foundational model for particle detector understanding.

Hierarchical Graph Neural Networks for Particle Track Reconstruction

Mar 03, 2023

Abstract:We introduce a novel variant of GNN for particle tracking called Hierarchical Graph Neural Network (HGNN). The architecture creates a set of higher-level representations which correspond to tracks and assigns spacepoints to these tracks, allowing disconnected spacepoints to be assigned to the same track, as well as multiple tracks to share the same spacepoint. We propose a novel learnable pooling algorithm called GMPool to generate these higher-level representations called "super-nodes", as well as a new loss function designed for tracking problems and HGNN specifically. On a standard tracking problem, we show that, compared with previous ML-based tracking algorithms, the HGNN has better tracking efficiency performance, better robustness against inefficient input graphs, and better convergence compared with traditional GNNs.

Graph Neural Networks in Particle Physics: Implementations, Innovations, and Challenges

Mar 25, 2022

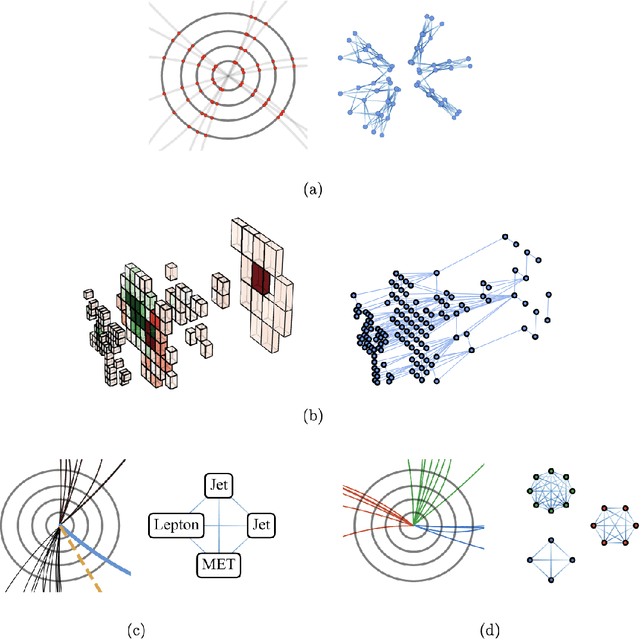

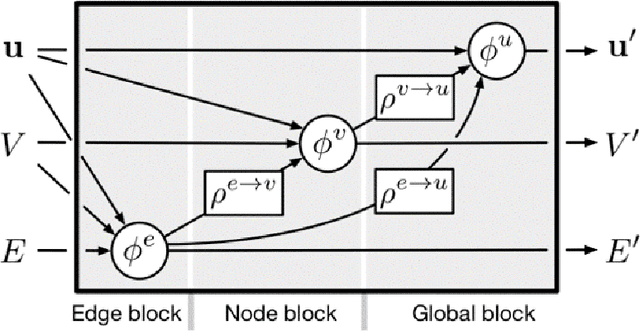

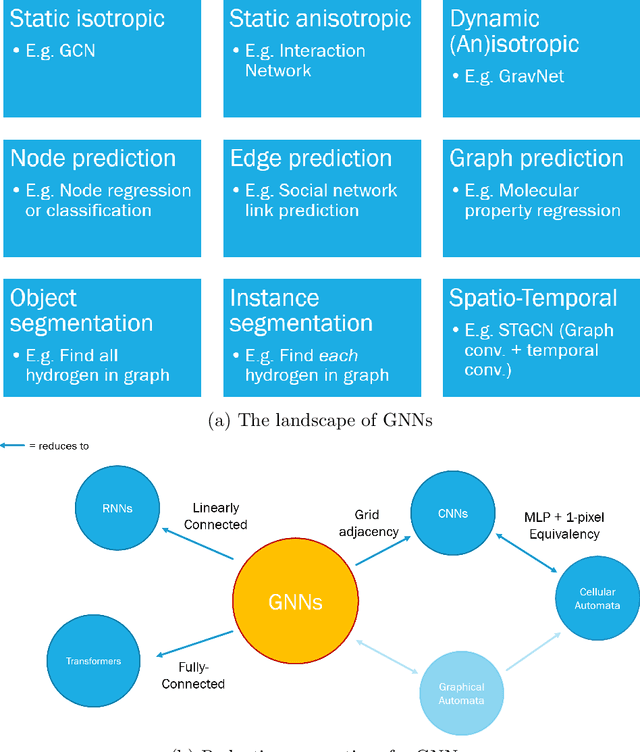

Abstract:Many physical systems can be best understood as sets of discrete data with associated relationships. Where previously these sets of data have been formulated as series or image data to match the available machine learning architectures, with the advent of graph neural networks (GNNs), these systems can be learned natively as graphs. This allows a wide variety of high- and low-level physical features to be attached to measurements and, by the same token, a wide variety of HEP tasks to be accomplished by the same GNN architectures. GNNs have found powerful use-cases in reconstruction, tagging, generation and end-to-end analysis. With the wide-spread adoption of GNNs in industry, the HEP community is well-placed to benefit from rapid improvements in GNN latency and memory usage. However, industry use-cases are not perfectly aligned with HEP and much work needs to be done to best match unique GNN capabilities to unique HEP obstacles. We present here a range of these capabilities, predictions of which are currently being well-adopted in HEP communities, and which are still immature. We hope to capture the landscape of graph techniques in machine learning as well as point out the most significant gaps that are inhibiting potentially large leaps in research.

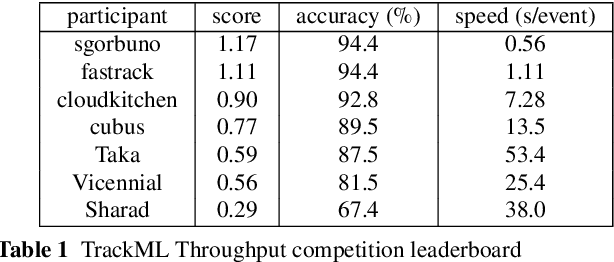

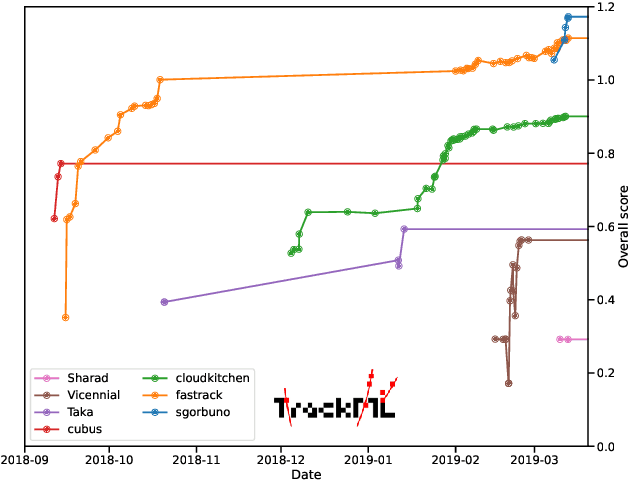

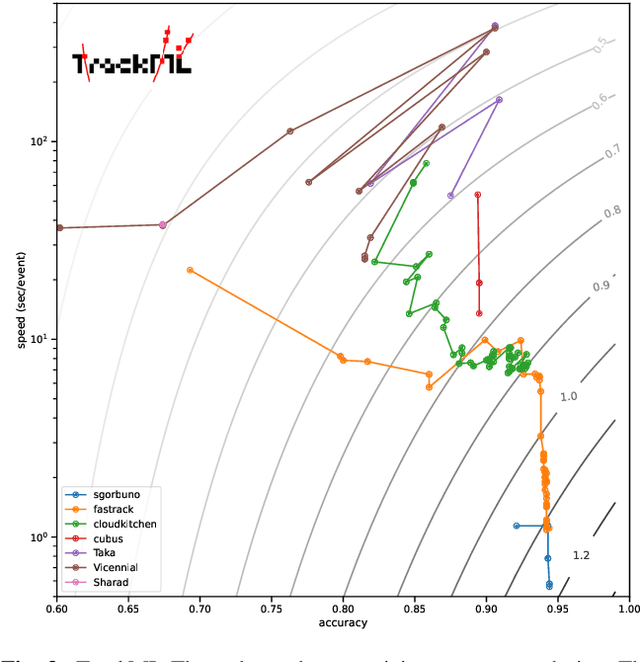

The Tracking Machine Learning challenge : Throughput phase

May 14, 2021

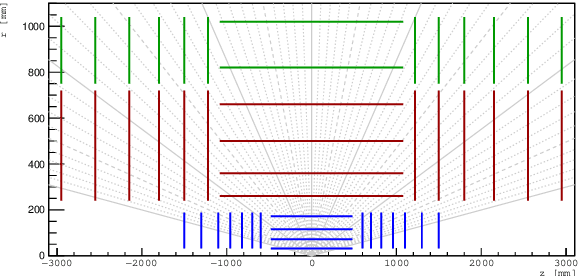

Abstract:This paper reports on the second "Throughput" phase of the Tracking Machine Learning (TrackML) challenge on the Codalab platform. As in the first "Accuracy" phase, the participants had to solve a difficult experimental problem linked to tracking accurately the trajectory of particles as e.g. created at the Large Hadron Collider (LHC): given O($10^5$) points, the participants had to connect them into O($10^4$) individual groups that represent the particle trajectories which are approximated helical. While in the first phase only the accuracy mattered, the goal of this second phase was a compromise between the accuracy and the speed of inference. Both were measured on the Codalab platform where the participants had to upload their software. The best three participants had solutions with good accuracy and speed an order of magnitude faster than the state of the art when the challenge was designed. Although the core algorithms were less diverse than in the first phase, a diversity of techniques have been used and are described in this paper. The performance of the algorithms are analysed in depth and lessons derived.

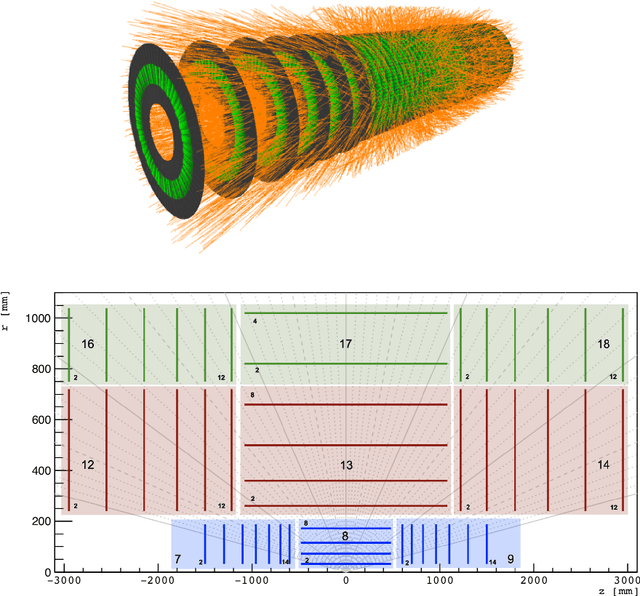

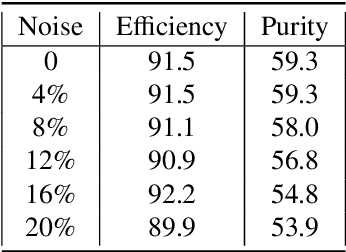

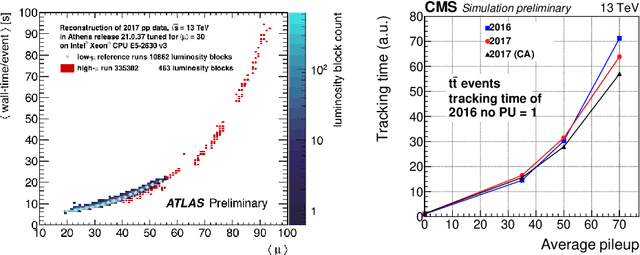

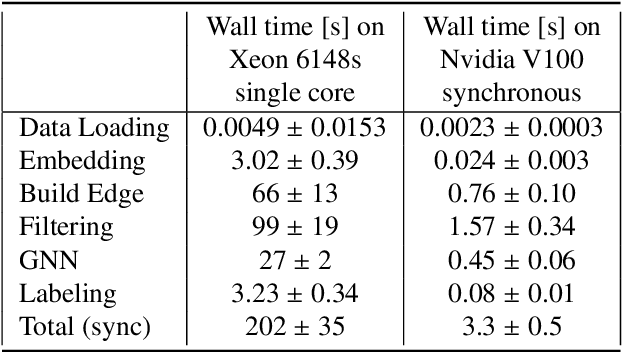

Physics and Computing Performance of the Exa.TrkX TrackML Pipeline

Mar 11, 2021

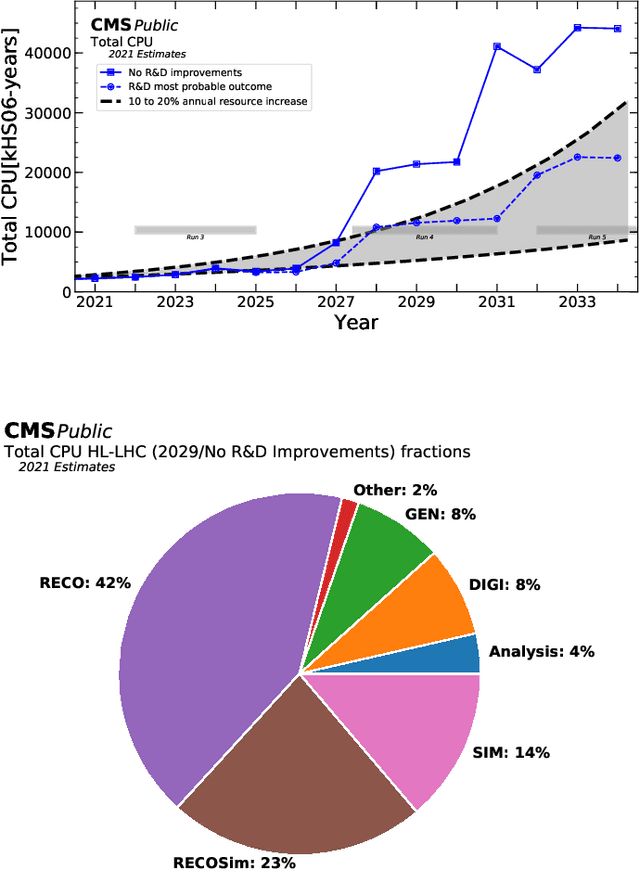

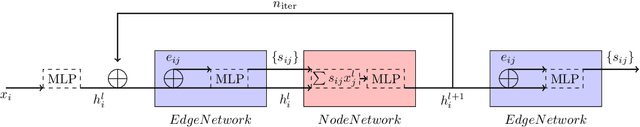

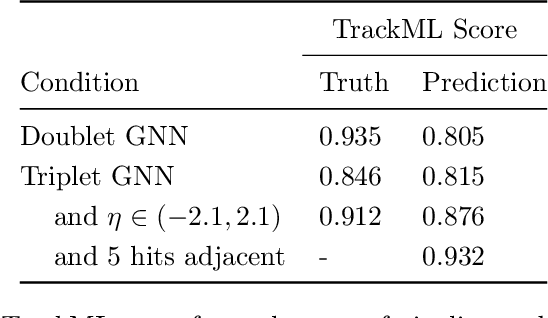

Abstract:The Exa.TrkX project has applied geometric learning concepts such as metric learning and graph neural networks to HEP particle tracking. The Exa.TrkX tracking pipeline clusters detector measurements to form track candidates and filters them. The pipeline, originally developed using the TrackML dataset (a simulation of an LHC-like tracking detector), has been demonstrated on various detectors, including the DUNE LArTPC and the CMS High-Granularity Calorimeter. This paper documents new developments needed to study the physics and computing performance of the Exa.TrkX pipeline on the full TrackML dataset, a first step towards validating the pipeline using ATLAS and CMS data. The pipeline achieves tracking efficiency and purity similar to production tracking algorithms. Crucially for future HEP applications, the pipeline benefits significantly from GPU acceleration, and its computational requirements scale close to linearly with the number of particles in the event.

Track Seeding and Labelling with Embedded-space Graph Neural Networks

Jun 30, 2020

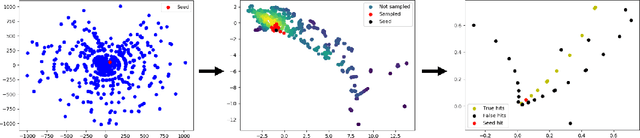

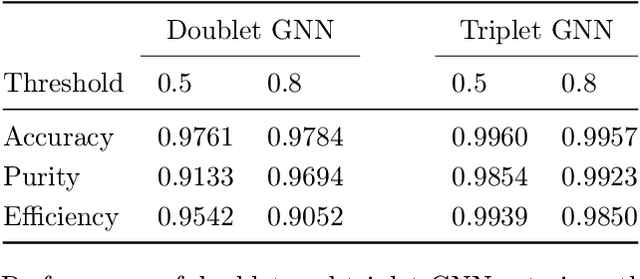

Abstract:To address the unprecedented scale of HL-LHC data, the Exa.TrkX project is investigating a variety of machine learning approaches to particle track reconstruction. The most promising of these solutions, graph neural networks (GNN), process the event as a graph that connects track measurements (detector hits corresponding to nodes) with candidate line segments between the hits (corresponding to edges). Detector information can be associated with nodes and edges, enabling a GNN to propagate the embedded parameters around the graph and predict node-, edge- and graph-level observables. Previously, message-passing GNNs have shown success in predicting doublet likelihood, and we here report updates on the state-of-the-art architectures for this task. In addition, the Exa.TrkX project has investigated innovations in both graph construction, and embedded representations, in an effort to achieve fully learned end-to-end track finding. Hence, we present a suite of extensions to the original model, with encouraging results for hitgraph classification. In addition, we explore increased performance by constructing graphs from learned representations which contain non-linear metric structure, allowing for efficient clustering and neighborhood queries of data points. We demonstrate how this framework fits in with both traditional clustering pipelines, and GNN approaches. The embedded graphs feed into high-accuracy doublet and triplet classifiers, or can be used as an end-to-end track classifier by clustering in an embedded space. A set of post-processing methods improve performance with knowledge of the detector physics. Finally, we present numerical results on the TrackML particle tracking challenge dataset, where our framework shows favorable results in both seeding and track finding.

Machine Learning in High Energy Physics Community White Paper

Jul 08, 2018

Abstract:Machine learning is an important research area in particle physics, beginning with applications to high-level physics analysis in the 1990s and 2000s, followed by an explosion of applications in particle and event identification and reconstruction in the 2010s. In this document we discuss promising future research and development areas in machine learning in particle physics with a roadmap for their implementation, software and hardware resource requirements, collaborative initiatives with the data science community, academia and industry, and training the particle physics community in data science. The main objective of the document is to connect and motivate these areas of research and development with the physics drivers of the High-Luminosity Large Hadron Collider and future neutrino experiments and identify the resource needs for their implementation. Additionally we identify areas where collaboration with external communities will be of great benefit.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge