Xiangyang Ju

Lawrence Berkeley National Laboratory

Scaling Graph Neural Networks for Particle Track Reconstruction

Apr 07, 2025

Abstract: Particle track reconstruction is an important problem in high-energy physics (HEP), necessary to study properties of subatomic particles. Traditional track reconstruction algorithms scale poorly with the number of particles within the accelerator. To alleviate this computational burden, the Exa.TrkX project introduces a pipeline that reduces particle track reconstruction to edge classification on a graph, and uses graph neural networks (GNNs) to produce particle tracks. However, this GNN-based approach is memory-prohibitive and skips graphs that would exceed GPU memory. We introduce improvements to the Exa.TrkX pipeline to train on samples of input particle graphs, and show that these improvements generalize to higher precision and recall. In addition, we adapt performance optimizations, introduced for GNN training, to fit our augmented Exa.TrkX pipeline. These optimizations provide a $2\times$ speedup over our baseline implementation in PyTorch Geometric.
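The reduction of track reconstruction to edge classification can be illustrated with a minimal sketch. It assumes that each edge already carries a classifier score and that track candidates are read off as connected components of the edges kept after thresholding. The abstract does not state how tracks are built from classified edges, so the connected-components step, the function name `build_track_candidates`, the threshold of 0.5, and the toy scores are all assumptions made for illustration.

```python
from collections import defaultdict


def build_track_candidates(num_hits, edges, scores, threshold=0.5):
    """Group hits into track candidates from per-edge classifier scores.

    edges  : list of (i, j) hit-index pairs forming the input graph
    scores : one float in [0, 1] per edge (stand-in for a GNN output)
    """
    parent = list(range(num_hits))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Merge the endpoints of every edge classified as "true".
    for (i, j), score in zip(edges, scores):
        if score >= threshold:
            root_i, root_j = find(i), find(j)
            if root_i != root_j:
                parent[root_j] = root_i

    # Collect hits by component; drop isolated hits.
    groups = defaultdict(list)
    for hit in range(num_hits):
        groups[find(hit)].append(hit)
    return sorted(group for group in groups.values() if len(group) > 1)


# Toy graph: six hits in a chain, with one low-scoring edge in the middle.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]
scores = [0.9, 0.8, 0.1, 0.95, 0.7]
tracks = build_track_candidates(6, edges, scores)
print(tracks)
```

In this toy case the low-scoring edge between hits 2 and 3 splits the chain into two candidates.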

TrackSorter: A Transformer-based sorting algorithm for track finding in High Energy Physics

Jul 31, 2024

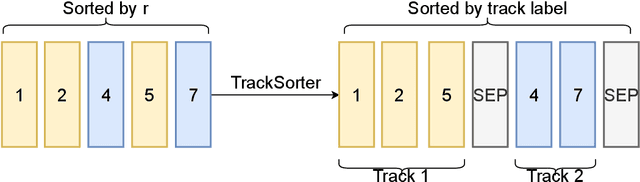

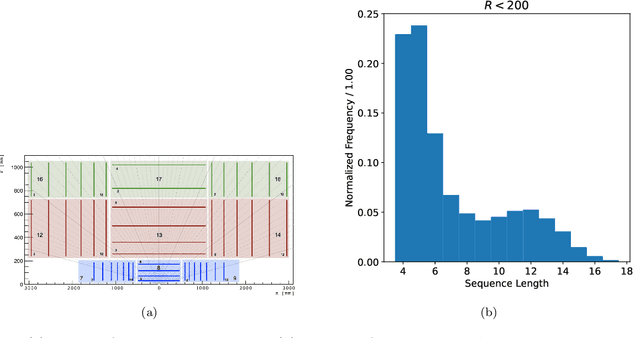

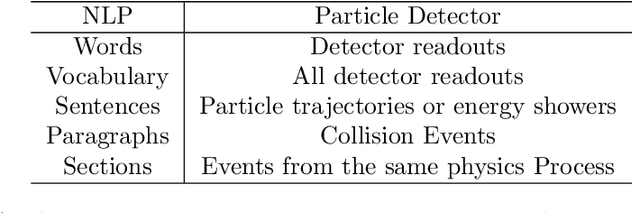

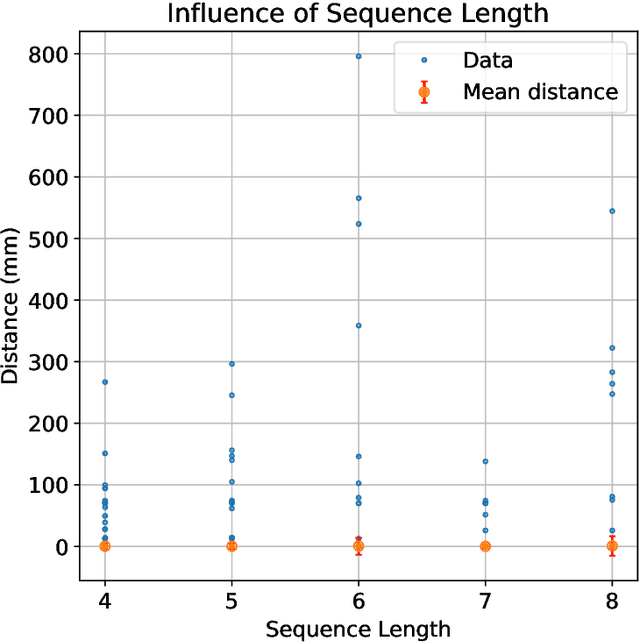

Abstract: Track finding in particle data is a challenging pattern recognition problem in High Energy Physics. It takes as input a point cloud of space points and labels them so that space points created by the same particle have the same label. The list of space points with the same label is a track candidate. We argue that this pattern recognition problem can be formulated as a sorting problem, whose inputs are a list of space points sorted by their distance from the collision points and whose outputs are the space points sorted by their labels. In this paper, we propose the TrackSorter algorithm: a Transformer-based algorithm for pattern recognition in particle data. TrackSorter uses a simple tokenization scheme to convert space points into discrete tokens. It then uses the tokenized space points as inputs and sorts the input tokens into track candidates. TrackSorter is a novel end-to-end track finding algorithm that leverages Transformer-based models to solve pattern recognition problems. It is evaluated on the TrackML dataset and has good track finding performance.
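The sorting formulation can be made concrete with a small sketch of the input and target orderings. It does not model the Transformer or the tokenization scheme, neither of which is specified in the abstract. It only shows what "sorted by distance" and "sorted by label" mean for a handful of space points. The dictionary layout, the helper names, the single collision point at the origin, and the use of ground-truth labels are all assumptions for illustration.

```python
import math
from itertools import groupby


def sort_by_distance(points, origin=(0.0, 0.0, 0.0)):
    """Input ordering: space points sorted by distance from the origin."""
    return sorted(points, key=lambda p: math.dist(p["pos"], origin))


def sort_by_label(points):
    """Target ordering: points grouped by label.

    sorted() is stable, so points within one label keep their
    distance ordering from the input sequence.
    """
    return sorted(points, key=lambda p: p["label"])


def to_track_candidates(points):
    """Split a label-sorted sequence into lists of point ids."""
    return [
        [p["id"] for p in group]
        for _, group in groupby(points, key=lambda p: p["label"])
    ]


# Four toy space points from two particles (labels 3 and 7).
space_points = [
    {"id": 2, "pos": (3.0, 0.0, 0.0), "label": 7},
    {"id": 0, "pos": (1.0, 0.0, 0.0), "label": 7},
    {"id": 3, "pos": (0.0, 4.0, 0.0), "label": 3},
    {"id": 1, "pos": (0.0, 2.0, 0.0), "label": 3},
]

model_input = sort_by_distance(space_points)
target = sort_by_label(model_input)
candidates = to_track_candidates(target)
print([p["id"] for p in model_input], candidates)
```

A trained model would have to produce the target ordering without access to the labels; here they are used only to show what the desired output looks like.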

A Language Model for Particle Tracking

Feb 14, 2024

Abstract: Particle tracking is crucial for almost all physics analysis programs at the Large Hadron Collider. Deep learning models are pervasively used in particle tracking related tasks. However, the current practice is to design and train one deep learning model for one task with supervised learning techniques. The trained models work well for the tasks they are trained on but show little or no generalization capability. We propose to unify these models with a language model. In this paper, we present a tokenized detector representation that allows us to train a BERT model for particle tracking. The trained BERT model, namely TrackingBERT, offers latent detector module embedding that can be used for other tasks. This work represents the first step towards developing a foundational model for particle detector understanding.

Hierarchical Graph Neural Networks for Particle Track Reconstruction

Mar 03, 2023

Abstract: We introduce a novel variant of GNN for particle tracking called Hierarchical Graph Neural Network (HGNN). The architecture creates a set of higher-level representations which correspond to tracks and assigns spacepoints to these tracks, allowing disconnected spacepoints to be assigned to the same track, as well as multiple tracks to share the same spacepoint. We propose a novel learnable pooling algorithm called GMPool to generate these higher-level representations called "super-nodes", as well as a new loss function designed for tracking problems and HGNN specifically. On a standard tracking problem, we show that, compared with previous ML-based tracking algorithms, the HGNN has better tracking efficiency, better robustness against inefficient input graphs, and better convergence compared with traditional GNNs.

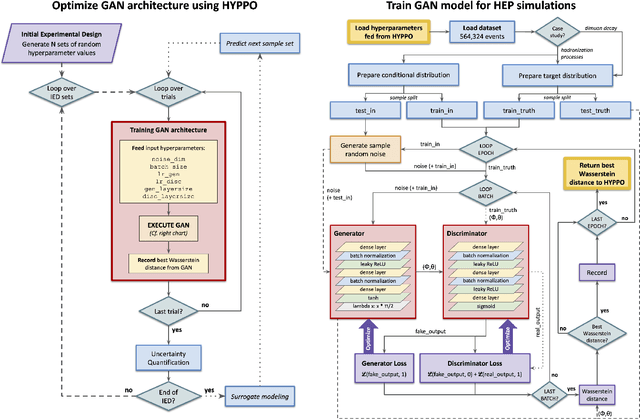

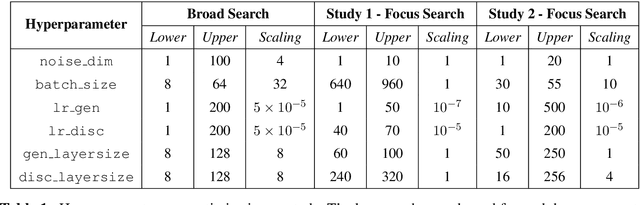

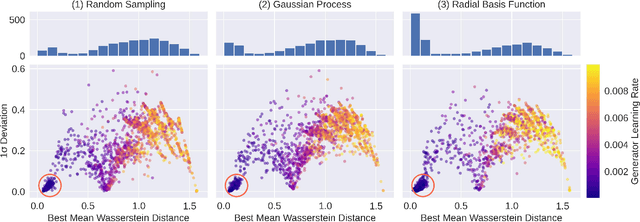

Hyperparameter Optimization of Generative Adversarial Network Models for High-Energy Physics Simulations

Aug 12, 2022

Abstract: The Generative Adversarial Network (GAN) is a powerful and flexible tool that can generate high-fidelity synthesized data by learning. It has seen many applications in simulating events in High Energy Physics (HEP), including simulating detector responses and physics events. However, training GANs is notoriously hard and optimizing their hyperparameters even more so. It normally requires many trial-and-error training attempts to force a stable training and reach a reasonable fidelity. Significant tuning work has to be done to achieve the accuracy required by physics analyses. This work uses the physics-agnostic and high-performance-computer-friendly hyperparameter optimization tool HYPPO to optimize and examine the sensitivities of the hyperparameters of a GAN for two independent HEP datasets. This work provides the first insights into efficiently tuning GANs for Large Hadron Collider data. We show that given proper hyperparameter tuning, we can find GANs that provide high-quality approximations of the desired quantities. We also provide guidelines for how to go about GAN architecture tuning using the analysis tools in HYPPO.

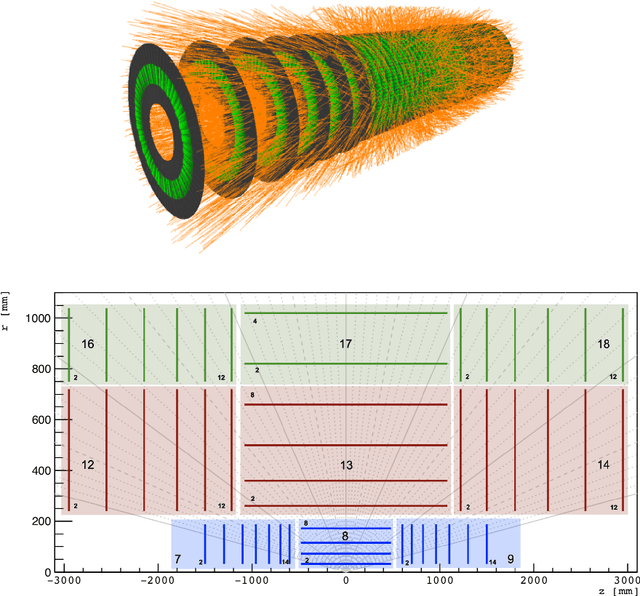

Physics and Computing Performance of the Exa.TrkX TrackML Pipeline

Mar 11, 2021

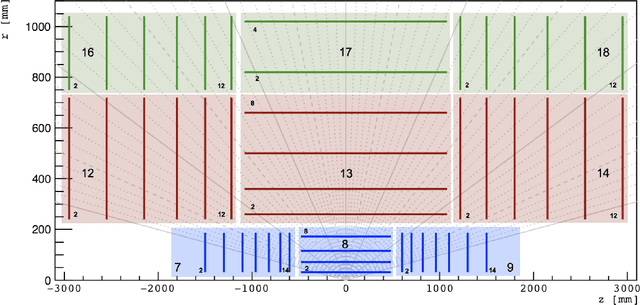

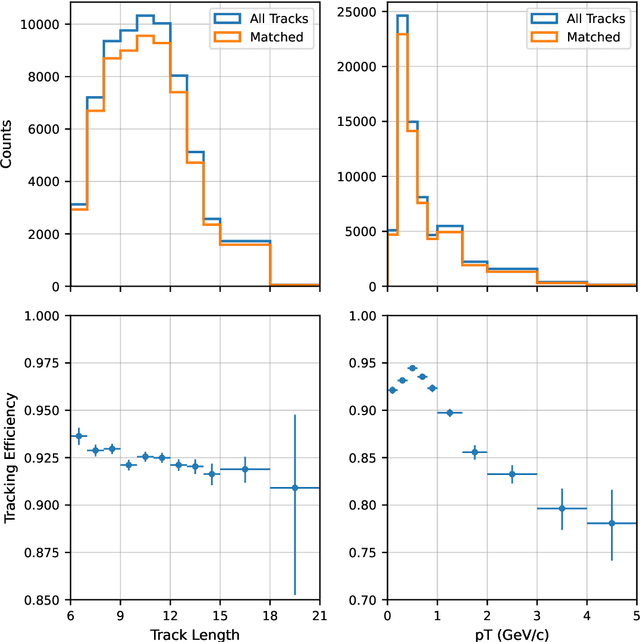

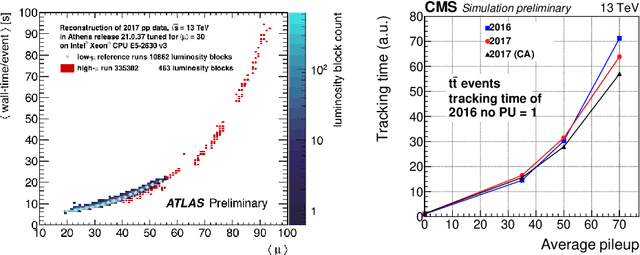

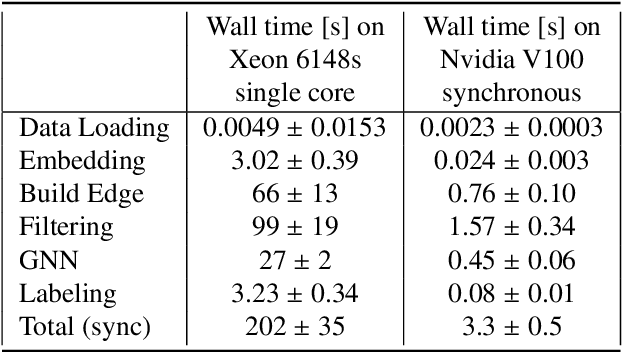

Abstract: The Exa.TrkX project has applied geometric learning concepts such as metric learning and graph neural networks to HEP particle tracking. The Exa.TrkX tracking pipeline clusters detector measurements to form track candidates and filters them. The pipeline, originally developed using the TrackML dataset (a simulation of an LHC-like tracking detector), has been demonstrated on various detectors, including the DUNE LArTPC and the CMS High-Granularity Calorimeter. This paper documents new developments needed to study the physics and computing performance of the Exa.TrkX pipeline on the full TrackML dataset, a first step towards validating the pipeline using ATLAS and CMS data. The pipeline achieves tracking efficiency and purity similar to production tracking algorithms. Crucially for future HEP applications, the pipeline benefits significantly from GPU acceleration, and its computational requirements scale close to linearly with the number of particles in the event.

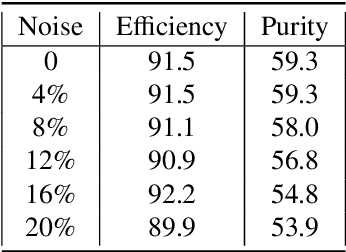

Beyond 4D Tracking: Using Cluster Shapes for Track Seeding

Dec 08, 2020

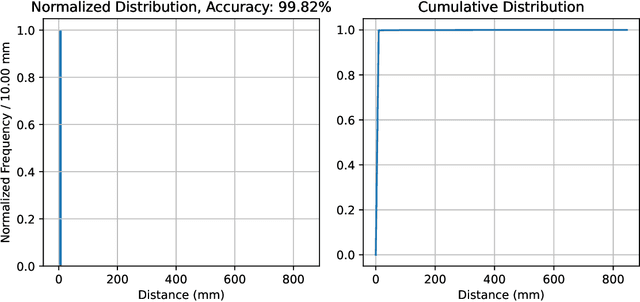

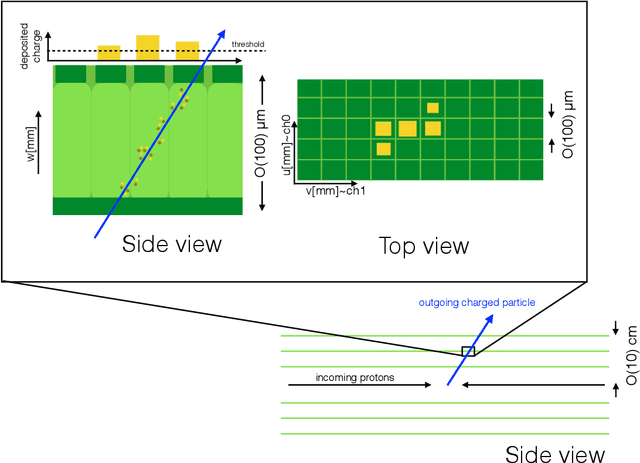

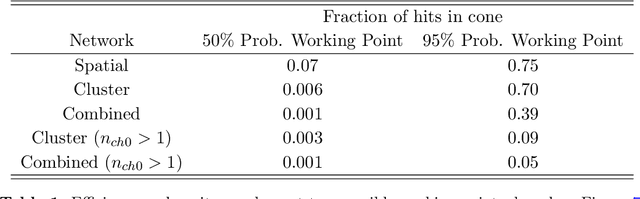

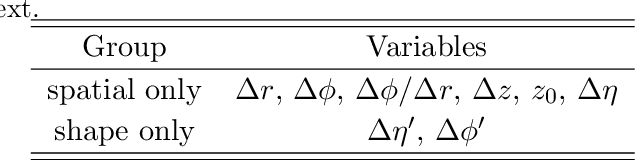

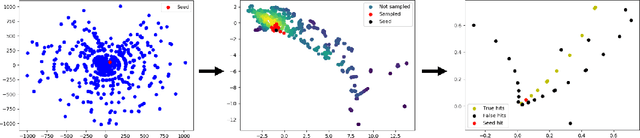

Abstract: Tracking is one of the most time-consuming aspects of event reconstruction at the Large Hadron Collider (LHC) and its high-luminosity upgrade (HL-LHC). Innovative detector technologies extend tracking to four dimensions by including timing in the pattern recognition and parameter estimation. However, present and future hardware already have additional information that is largely unused by existing track seeding algorithms. The shape of clusters provides an additional dimension for track seeding that can significantly reduce the combinatorial challenge of track finding. We use neural networks to show that cluster shapes can significantly reduce the rate of fake combinatorial backgrounds while preserving a high efficiency. We demonstrate this using the information in cluster singlets, doublets and triplets. Numerical results are presented with simulations from the TrackML challenge.

Track Seeding and Labelling with Embedded-space Graph Neural Networks

Jun 30, 2020

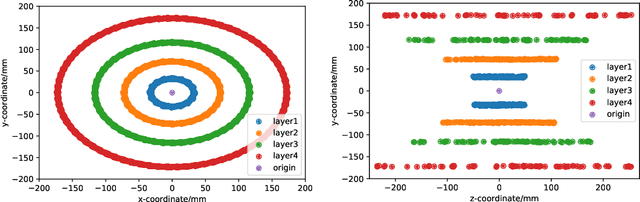

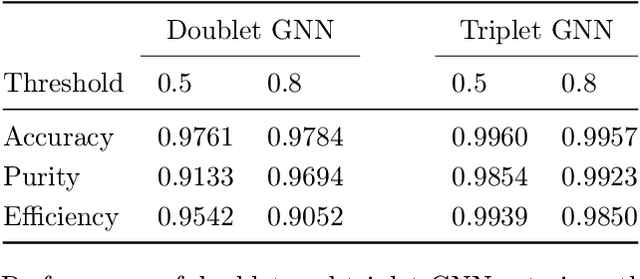

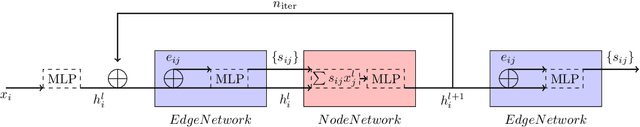

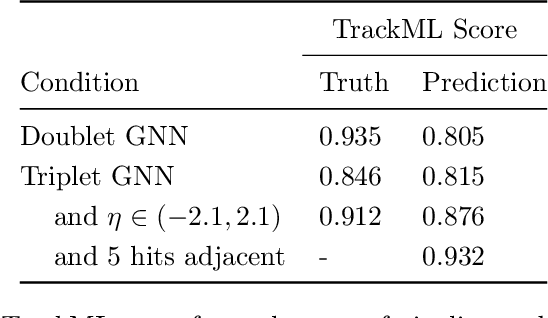

Abstract: To address the unprecedented scale of HL-LHC data, the Exa.TrkX project is investigating a variety of machine learning approaches to particle track reconstruction. The most promising of these solutions, graph neural networks (GNNs), process the event as a graph that connects track measurements (detector hits corresponding to nodes) with candidate line segments between the hits (corresponding to edges). Detector information can be associated with nodes and edges, enabling a GNN to propagate the embedded parameters around the graph and predict node-, edge- and graph-level observables. Previously, message-passing GNNs have shown success in predicting doublet likelihood, and we here report updates on the state-of-the-art architectures for this task. In addition, the Exa.TrkX project has investigated innovations in both graph construction and embedded representations, in an effort to achieve fully learned end-to-end track finding. Hence, we present a suite of extensions to the original model, with encouraging results for hitgraph classification. In addition, we explore increased performance by constructing graphs from learned representations which contain non-linear metric structure, allowing for efficient clustering and neighborhood queries of data points. We demonstrate how this framework fits in with both traditional clustering pipelines and GNN approaches. The embedded graphs feed into high-accuracy doublet and triplet classifiers, or can be used as an end-to-end track classifier by clustering in an embedded space. A set of post-processing methods improves performance with knowledge of the detector physics. Finally, we present numerical results on the TrackML particle tracking challenge dataset, where our framework shows favorable results in both seeding and track finding.
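Graph construction from a learned representation via neighborhood queries can be sketched as a fixed-radius search in the embedded space. The example below is a brute-force illustration only: the embedding coordinates are hard-coded stand-ins for the output of a learned model, and the function name `radius_graph`, the radius value, and the two-dimensional embedding are assumptions rather than details taken from the abstract. A practical pipeline would presumably use a spatial index or GPU nearest-neighbor search instead of the quadratic loop.

```python
import math
from itertools import combinations


def radius_graph(embeddings, radius):
    """Connect every pair of hits closer than `radius` in embedded space.

    embeddings : list of coordinate tuples, one per hit (hypothetical
                 output of a learned embedding model)
    Returns a list of (i, j) index pairs with i < j.
    """
    edges = []
    for i, j in combinations(range(len(embeddings)), 2):
        if math.dist(embeddings[i], embeddings[j]) <= radius:
            edges.append((i, j))
    return edges


# Toy embedding: hits 0-2 lie close together, hits 3-4 form a second group.
embedded_hits = [
    (0.0, 0.0),
    (0.1, 0.0),
    (0.2, 0.1),
    (5.0, 5.0),
    (5.1, 5.0),
]
edges = radius_graph(embedded_hits, radius=0.25)
print(edges)
```

If the embedding places hits from the same particle close together, the resulting edges link mostly same-track hits, which keeps the graph passed to a downstream classifier small.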
