Kazuhiro Terao

for the DeepLearnPhysics Collaboration

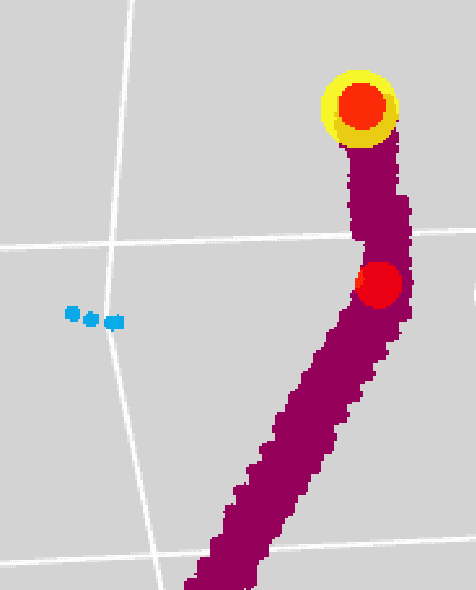

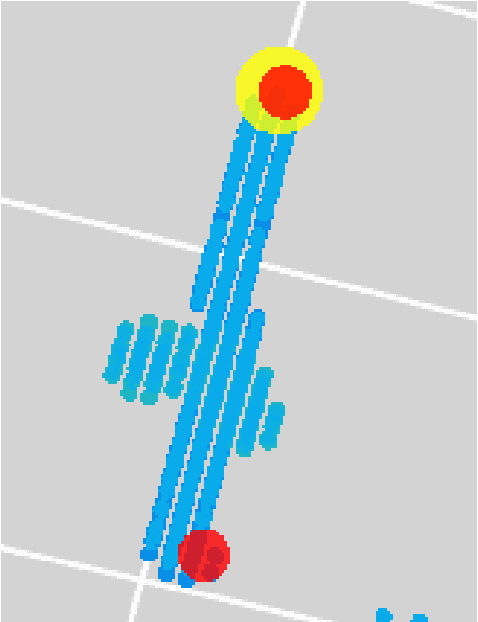

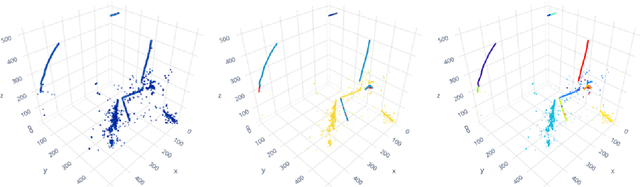

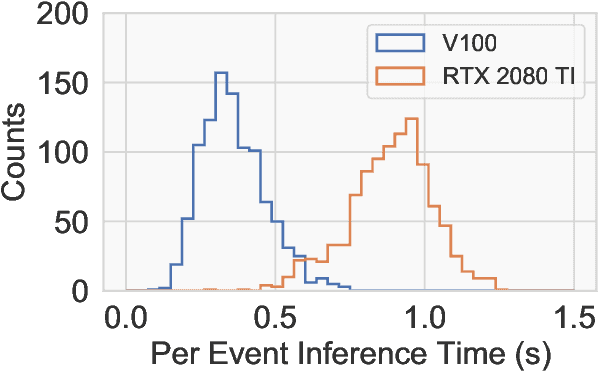

Particle Trajectory Representation Learning with Masked Point Modeling

Feb 04, 2025

Abstract:Effective self-supervised learning (SSL) techniques have been key to unlocking large datasets for representation learning. While many promising methods have been developed using online corpora and captioned photographs, their application to scientific domains, where data encodes highly specialized knowledge, remains in its early stages. We present a self-supervised masked modeling framework for 3D particle trajectory analysis in Time Projection Chambers (TPCs). These detectors produce globally sparse (<1% occupancy) but locally dense point clouds, capturing meter-scale particle trajectories at millimeter resolution. Starting with PointMAE, this work proposes volumetric tokenization to group sparse ionization points into resolution-agnostic patches, as well as an auxiliary energy infilling task to improve trajectory semantics. This approach -- which we call Point-based Liquid Argon Masked Autoencoder (PoLAr-MAE) -- achieves 99.4% track and 97.7% shower classification F-scores, matching those of supervised baselines without any labeled data. While the model learns rich particle trajectory representations, it struggles with sub-token phenomena like overlapping or short-lived particle trajectories. To support further research, we release PILArNet-M -- the largest open LArTPC dataset (1M+ events, 5.2B labeled points) -- to advance SSL in high energy physics (HEP). Project site: https://youngsm.com/polarmae/
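The idea of grouping sparse points into patches and hiding some of them from the model can be illustrated with a minimal sketch. This is not the PoLAr-MAE tokenizer: it assumes a plain cubic grid as a stand-in for volumetric tokenization, and the function names `tokenize_by_voxel` and `mask_patches`, the `patch_size` parameter, and the `mask_ratio` parameter are all hypothetical.

```python
import numpy as np

def tokenize_by_voxel(points, patch_size):
    """Assign each point of an (N, 3) array to a cubic patch of side patch_size.

    Assumption: a regular grid stands in for the paper's volumetric tokenization.
    Returns one integer patch id per point.
    """
    cells = np.floor(np.asarray(points) / patch_size).astype(np.int64)
    # Points sharing the same grid cell receive the same patch id.
    _, patch_ids = np.unique(cells, axis=0, return_inverse=True)
    return patch_ids.ravel()

def mask_patches(patch_ids, mask_ratio, seed=0):
    """Pick a random fraction of patches and return a per-point boolean mask."""
    rng = np.random.default_rng(seed)
    n_patches = int(patch_ids.max()) + 1
    n_masked = int(round(mask_ratio * n_patches))
    masked = rng.choice(n_patches, size=n_masked, replace=False)
    # True marks points the encoder would not see during pre-training.
    return np.isin(patch_ids, masked)

points = np.array([[0.1, 0.1, 0.1], [0.2, 0.2, 0.2], [5.5, 0.0, 0.0], [9.9, 9.9, 9.9]])
patch_ids = tokenize_by_voxel(points, patch_size=1.0)
hidden = mask_patches(patch_ids, mask_ratio=1 / 3)
```

In a masked-modeling setup the model would be trained to reconstruct the hidden points (and, for the auxiliary task described above, their energies) from the visible patches.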

Implicit Neural Representation as a Differentiable Surrogate for Photon Propagation in a Monolithic Neutrino Detector

Nov 02, 2022

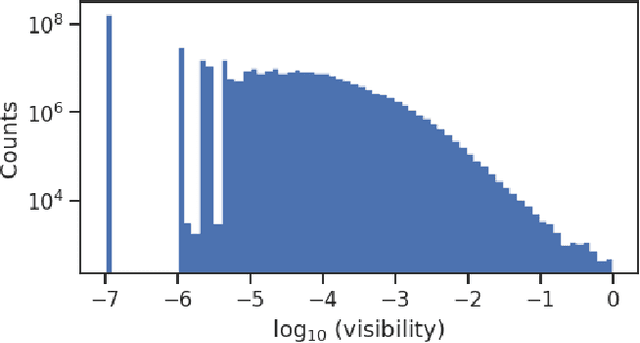

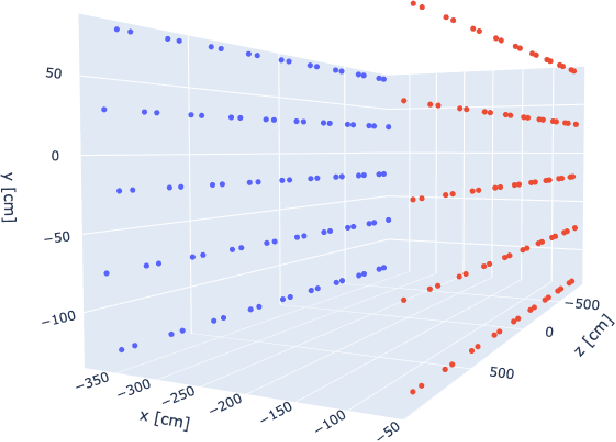

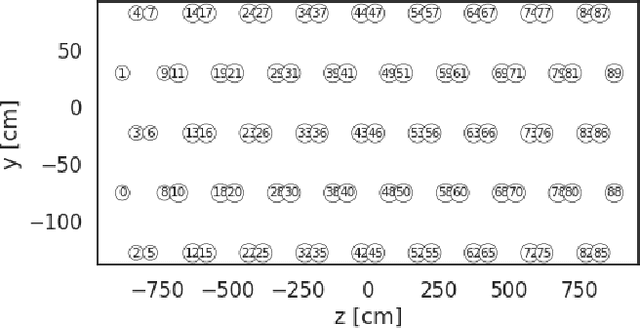

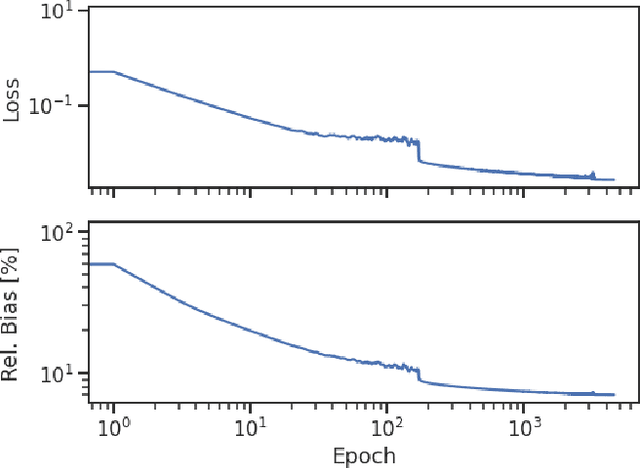

Abstract:Optical photons are used as signal in a wide variety of particle detectors. Modern neutrino experiments employ hundreds to tens of thousands of photon detectors to observe signal from millions to billions of scintillation photons produced from energy deposition of charged particles. These neutrino detectors are typically large, containing kilotons of target volume, with different optical properties. Modeling individual photon propagation in the form of a look-up table requires huge computational resources. As the size of a table increases with detector volume for a fixed resolution, this method scales poorly for future larger detectors. Alternative approaches such as fitting a polynomial to the model could address the memory issue, but result in poorer performance. Both look-up table and fitting approaches are prone to discrepancies between the detector simulation and the data collected. We propose a new approach using SIREN, an implicit neural representation with periodic activation functions, to model the look-up table as a 3D scene and reproduce the acceptance map with high accuracy. The number of parameters in our SIREN model is orders of magnitude smaller than the number of voxels in the look-up table. As it models an underlying functional shape, SIREN is scalable to a larger detector. Furthermore, SIREN can successfully learn the spatial gradients of the photon library, providing additional information for downstream applications. Finally, as SIREN is a neural network representation, it is differentiable with respect to its parameters, and therefore tunable via gradient descent. We demonstrate the potential of optimizing SIREN directly on real data, which mitigates the concern of data vs. simulation discrepancies. We further present an application for data reconstruction where SIREN is used to form a likelihood function for photon statistics.
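A minimal NumPy sketch of a SIREN-style forward pass shows how a small network with sine activations can map a 3D position to a per-detector acceptance value. The weight bounds follow the initialization scheme from the original SIREN paper; everything else is an assumption for illustration, including the layer sizes, zero biases, the sigmoid output, and the names `init_siren` and `siren_forward`. It is not the model used in the work above.

```python
import numpy as np

def init_siren(layer_sizes, omega_0=30.0, seed=0):
    """Create (W, b) pairs using the SIREN weight bounds; biases are set to zero."""
    rng = np.random.default_rng(seed)
    params = []
    for i, (n_in, n_out) in enumerate(zip(layer_sizes[:-1], layer_sizes[1:])):
        # First layer: U(-1/n_in, 1/n_in); later layers: U(+-sqrt(6/n_in)/omega_0).
        bound = 1.0 / n_in if i == 0 else np.sqrt(6.0 / n_in) / omega_0
        W = rng.uniform(-bound, bound, size=(n_in, n_out))
        params.append((W, np.zeros(n_out)))
    return params

def siren_forward(params, xyz, omega_0=30.0):
    """Map positions (N, 3) to values in (0, 1), one column per photon detector."""
    h = np.asarray(xyz, dtype=float)
    for W, b in params[:-1]:
        h = np.sin(omega_0 * (h @ W + b))  # periodic activation
    W, b = params[-1]
    # Assumption: a sigmoid keeps the predicted acceptance between 0 and 1.
    return 1.0 / (1.0 + np.exp(-(h @ W + b)))

params = init_siren([3, 16, 16, 4])
xyz = np.random.default_rng(1).uniform(-1.0, 1.0, size=(5, 3))
acceptance = siren_forward(params, xyz)
n_parameters = sum(W.size + b.size for W, b in params)
```

The parameter count depends only on the layer sizes, not on the detector volume, which is the scaling argument made in the abstract.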

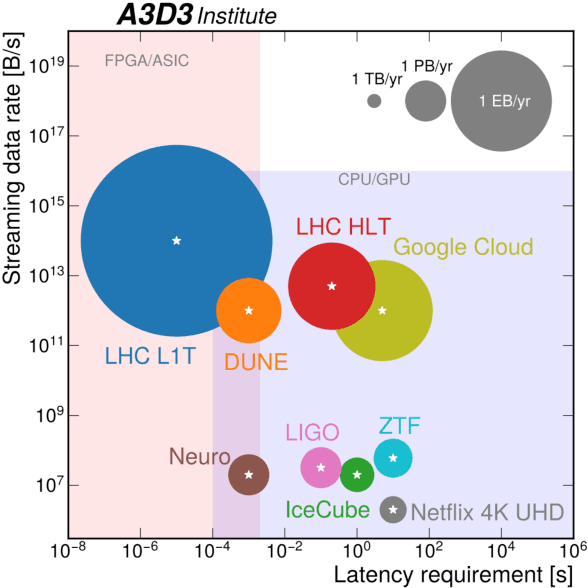

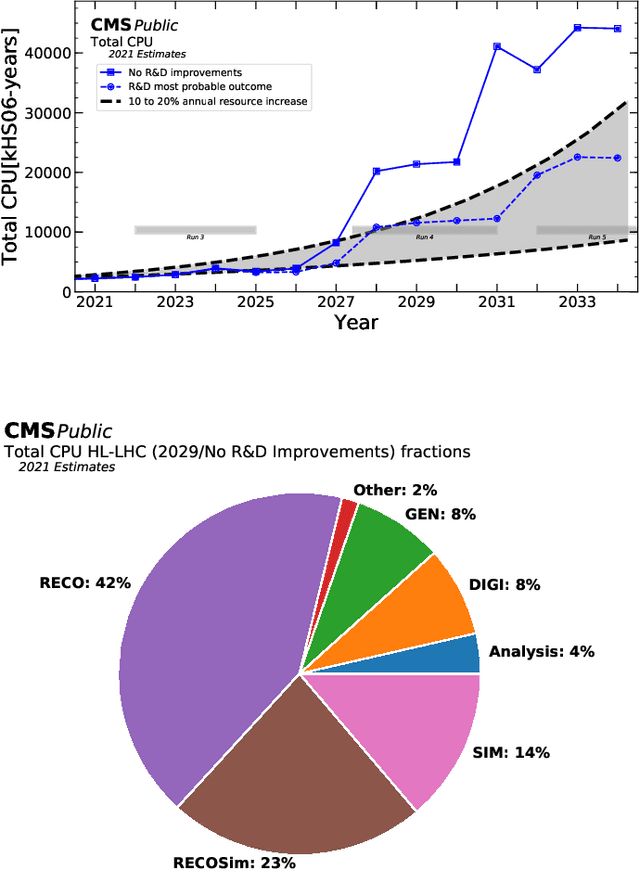

Snowmass 2021 Computational Frontier CompF03 Topical Group Report: Machine Learning

Sep 15, 2022

Abstract:The rapidly-developing intersection of machine learning (ML) with high-energy physics (HEP) presents both opportunities and challenges to our community. Far beyond applications of standard ML tools to HEP problems, genuinely new and potentially revolutionary approaches are being developed by a generation of talent literate in both fields. There is an urgent need to support the interdisciplinary community driving these developments, including funding dedicated research at the intersection of the two fields, investing in high-performance computing at universities and tailoring allocation policies to support this work, developing community tools and standards, and providing education and career paths for young researchers attracted by the intellectual vitality of machine learning for high energy physics.

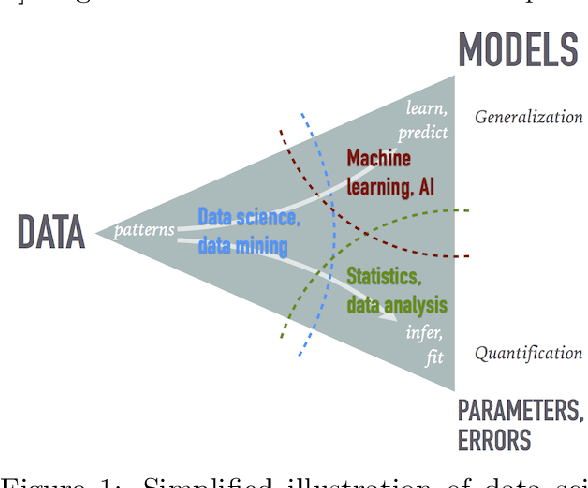

Data Science and Machine Learning in Education

Jul 19, 2022

Abstract:The growing role of data science (DS) and machine learning (ML) in high-energy physics (HEP) is well established and pertinent given the complex detectors, large data sets, and sophisticated analyses at the heart of HEP research. Moreover, exploiting symmetries inherent in physics data has inspired physics-informed ML as a vibrant sub-field of computer science research. HEP researchers benefit greatly from widely available materials for use in education, training and workforce development. They are also contributing to these materials and providing software to DS/ML-related fields. Increasingly, physics departments are offering courses at the intersection of DS, ML and physics, often using curricula developed by HEP researchers and involving open software and data used in HEP. In this white paper, we explore synergies between HEP research and DS/ML education, discuss opportunities and challenges at this intersection, and propose community activities that will be mutually beneficial.

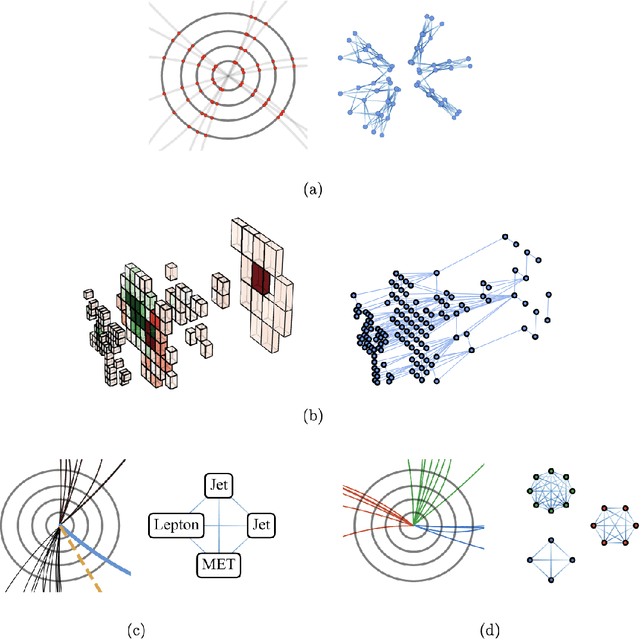

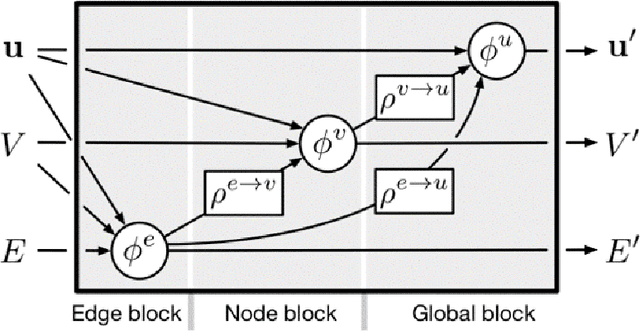

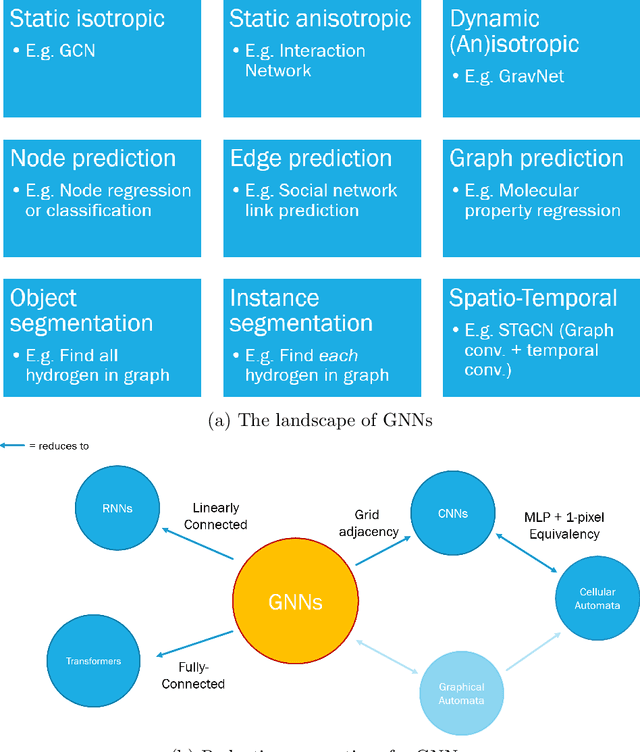

Graph Neural Networks in Particle Physics: Implementations, Innovations, and Challenges

Mar 25, 2022

Abstract:Many physical systems can be best understood as sets of discrete data with associated relationships. Where previously these sets of data have been formulated as series or image data to match the available machine learning architectures, with the advent of graph neural networks (GNNs), these systems can be learned natively as graphs. This allows a wide variety of high- and low-level physical features to be attached to measurements and, by the same token, a wide variety of HEP tasks to be accomplished by the same GNN architectures. GNNs have found powerful use-cases in reconstruction, tagging, generation and end-to-end analysis. With the wide-spread adoption of GNNs in industry, the HEP community is well-placed to benefit from rapid improvements in GNN latency and memory usage. However, industry use-cases are not perfectly aligned with HEP and much work needs to be done to best match unique GNN capabilities to unique HEP obstacles. We present here a range of these capabilities, predictions of which are currently being well-adopted in HEP communities, and which are still immature. We hope to capture the landscape of graph techniques in machine learning as well as point out the most significant gaps that are inhibiting potentially large leaps in research.

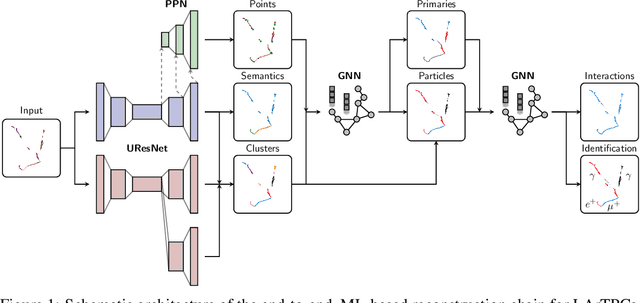

Scalable, End-to-End, Deep-Learning-Based Data Reconstruction Chain for Particle Imaging Detectors

Feb 01, 2021

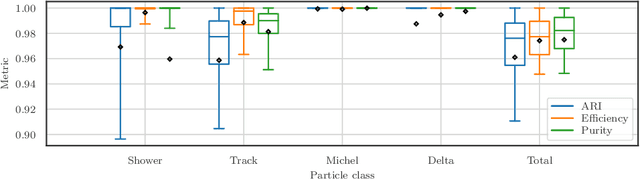

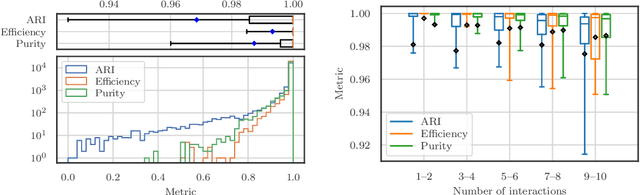

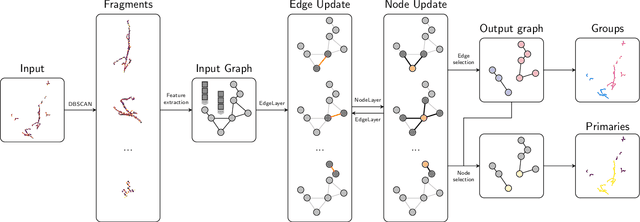

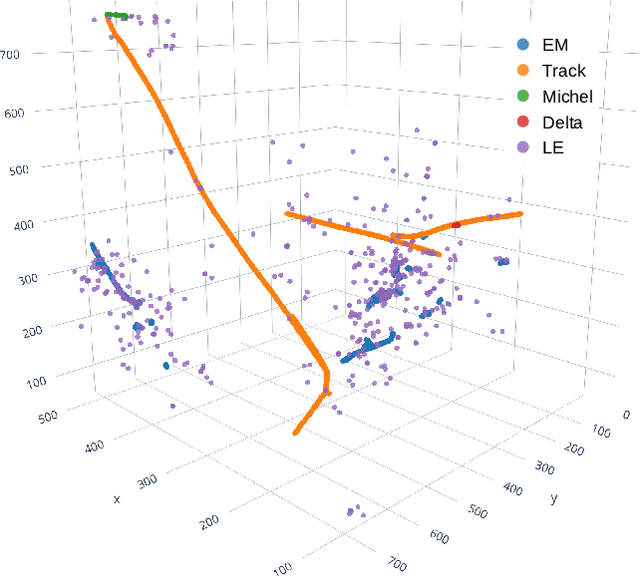

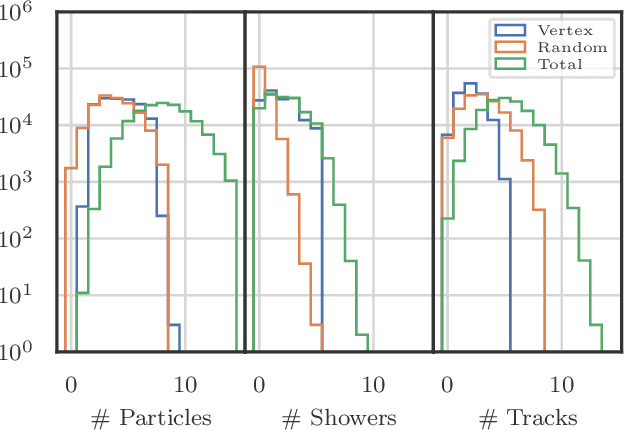

Abstract:Recent inroads in Computer Vision (CV) and Machine Learning (ML) have motivated a new approach to the analysis of particle imaging detector data. Unlike previous efforts which tackled isolated CV tasks, this paper introduces an end-to-end, ML-based data reconstruction chain for Liquid Argon Time Projection Chambers (LArTPCs), the state-of-the-art in precision imaging at the intensity frontier of neutrino physics. The chain is a multi-task network cascade which combines voxel-level feature extraction using Sparse Convolutional Neural Networks and particle superstructure formation using Graph Neural Networks. Each algorithm incorporates physics-informed inductive biases, while their collective hierarchy is used to enforce a causal structure. The output is a comprehensive description of an event that may be used for high-level physics inference. The chain is end-to-end optimizable, eliminating the need for time-intensive manual software adjustments. It is also the first implementation to handle the unprecedented pile-up of dozens of high energy neutrino interactions, expected in the 3D-imaging LArTPC of the Deep Underground Neutrino Experiment. The chain is trained as a whole and its performance is assessed at each step using an open simulated data set.

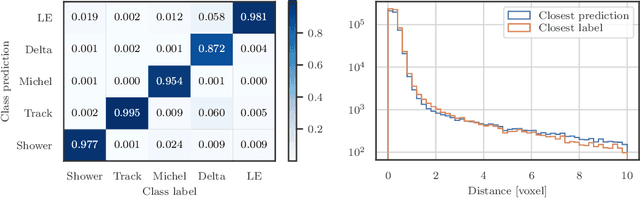

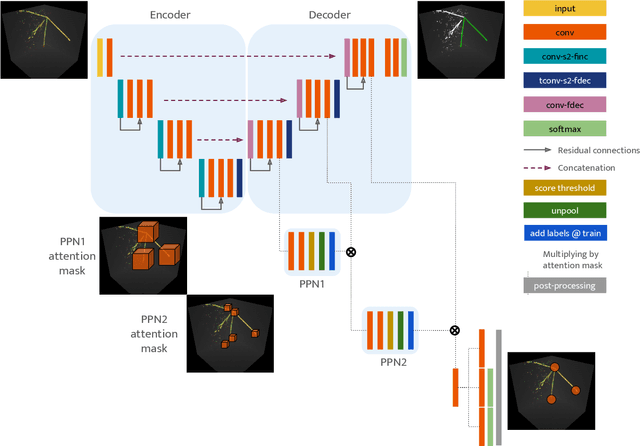

Point Proposal Network for Reconstructing 3D Particle Endpoints with Sub-Pixel Precision in Liquid Argon Time Projection Chambers

Jul 10, 2020

Abstract:Liquid Argon Time Projection Chambers (LArTPC) are particle imaging detectors recording 2D or 3D images of trajectories of charged particles. Identifying points of interest in these images, namely the initial and terminal points of track-like particle trajectories such as muons and protons, and the initial points of electromagnetic shower-like particle trajectories such as electrons and gamma rays, is a crucial step of identifying and analyzing these particles and impacts the inference of physics signals such as neutrino interaction. The Point Proposal Network is designed to discover these specific points of interest. The algorithm predicts with a sub-voxel precision their spatial location, and also determines the category of the identified points of interest. Using as a benchmark the PILArNet public LArTPC data sample in which the voxel resolution is 3 mm/voxel, our algorithm successfully predicted 96.8% and 97.8% of 3D points within a distance of 3 and 10 voxels from the provided true point locations respectively. For the predicted 3D points within 3 voxels of the closest true point locations, the median distance is found to be 0.25 voxels, achieving the sub-voxel level precision. In addition, we report our analysis of the mistakes where our algorithm prediction differs from the provided true point positions by more than 10 voxels. Among 50 mistakes visually scanned, 25 were due to the definition of true position location, 15 were legitimate mistakes where a physicist cannot visually disagree with the algorithm's prediction, and 10 were genuine mistakes that we wish to improve in the future. Further, using these predicted points, we demonstrate a simple algorithm to cluster 3D voxels into individual track-like particle trajectories with a clustering efficiency, purity, and Adjusted Rand Index of 96%, 93%, and 91% respectively.
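The distance-based figures quoted above (fraction of points within a threshold, median distance) can be computed with a short NumPy sketch. The helper names `nearest_true_distance` and `fraction_within` and the toy coordinates are hypothetical, and the matching rule (each predicted point is compared with its closest true point) is an assumption about how such a metric might be evaluated, not the paper's exact procedure.

```python
import numpy as np

VOXEL_SIZE_MM = 3.0  # voxel resolution stated in the abstract

def nearest_true_distance(pred, true):
    """Distance (in voxels) from each predicted point to its closest true point."""
    diff = np.asarray(pred)[:, None, :] - np.asarray(true)[None, :, :]
    return np.linalg.norm(diff, axis=-1).min(axis=1)

def fraction_within(distances, threshold):
    """Fraction of predicted points closer than `threshold` voxels to a true point."""
    return float(np.mean(distances <= threshold))

# Toy example in voxel units (made-up coordinates, not PILArNet data).
pred = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 20.0, 0.0]])
true = np.array([[0.0, 0.0, 0.25], [10.0, 2.0, 0.0]])

d = nearest_true_distance(pred, true)
frac_3 = fraction_within(d, 3.0)
median_close = float(np.median(d[d <= 3.0]))
# A median of 0.25 voxels corresponds to 0.25 * 3 mm = 0.75 mm.
median_025_voxels_in_mm = 0.25 * VOXEL_SIZE_MM
```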

Scalable, Proposal-free Instance Segmentation Network for 3D Pixel Clustering and Particle Trajectory Reconstruction in Liquid Argon Time Projection Chambers

Jul 06, 2020

Abstract:Liquid Argon Time Projection Chambers (LArTPCs) are high resolution particle imaging detectors, employed by accelerator-based neutrino oscillation experiments for high precision physics measurements. While images of particle trajectories are intuitive to analyze for physicists, the development of a high quality, automated data reconstruction chain remains challenging. One of the most critical reconstruction steps is particle clustering: the task of grouping 3D image pixels into different particle instances that share the same particle type. In this paper, we propose the first scalable deep learning algorithm for particle clustering in LArTPC data using sparse convolutional neural networks (SCNN). Building on previous works on SCNNs and proposal free instance segmentation, we build an end-to-end trainable instance segmentation network that learns an embedding of the image pixels to perform point cloud clustering in a transformed space. We benchmark the performance of our algorithm on PILArNet, a public 3D particle imaging dataset, with respect to common clustering evaluation metrics. 3D pixels were successfully clustered into individual particle trajectories with 90% of them having an adjusted Rand index score greater than 92% with a mean pixel clustering efficiency and purity above 96%. This work contributes to the development of an end-to-end optimizable full data reconstruction chain for LArTPCs, in particular pixel-based 3D imaging detectors including the near detector of the Deep Underground Neutrino Experiment. Our algorithm is made available in the open access repository, and we share our Singularity software container, which can be used to reproduce our work on the dataset.
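The clustering metrics named above can be sketched from a contingency table of true versus predicted labels. The adjusted Rand index below follows its standard pair-counting definition. The efficiency and purity definitions (best-overlap fraction per true cluster and per predicted cluster, averaged) are a common convention assumed here for illustration and may differ from the paper's; all function names are hypothetical.

```python
import numpy as np

def contingency(true_ids, pred_ids):
    """Count pixels shared by each (true cluster, predicted cluster) pair."""
    _, t = np.unique(np.asarray(true_ids).ravel(), return_inverse=True)
    _, p = np.unique(np.asarray(pred_ids).ravel(), return_inverse=True)
    table = np.zeros((t.max() + 1, p.max() + 1), dtype=np.int64)
    np.add.at(table, (t, p), 1)
    return table

def pairs(x):
    """Number of unordered pairs that can be drawn from x items."""
    return x * (x - 1) / 2.0

def adjusted_rand_index(true_ids, pred_ids):
    table = contingency(true_ids, pred_ids)
    n = table.sum()
    if n < 2:
        return 1.0
    index = pairs(table).sum()
    row_pairs = pairs(table.sum(axis=1)).sum()
    col_pairs = pairs(table.sum(axis=0)).sum()
    expected = row_pairs * col_pairs / pairs(n)
    max_index = 0.5 * (row_pairs + col_pairs)
    if max_index == expected:
        return 1.0  # degenerate case, e.g. a single cluster on both sides
    return float((index - expected) / (max_index - expected))

def mean_efficiency_purity(true_ids, pred_ids):
    """Assumed definitions: best-overlap fraction per true / per predicted cluster."""
    table = contingency(true_ids, pred_ids)
    efficiency = (table.max(axis=1) / table.sum(axis=1)).mean()
    purity = (table.max(axis=0) / table.sum(axis=0)).mean()
    return float(efficiency), float(purity)

true_ids = [0, 0, 0, 1, 1, 1]
pred_ids = [0, 0, 1, 1, 1, 1]
ari = adjusted_rand_index(true_ids, pred_ids)
eff, pur = mean_efficiency_purity(true_ids, pred_ids)
```

Because the index is computed from co-membership of pixel pairs, relabeling the predicted clusters does not change the score.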

Clustering of Electromagnetic Showers and Particle Interactions with Graph Neural Networks in Liquid Argon Time Projection Chambers Data

Jul 02, 2020

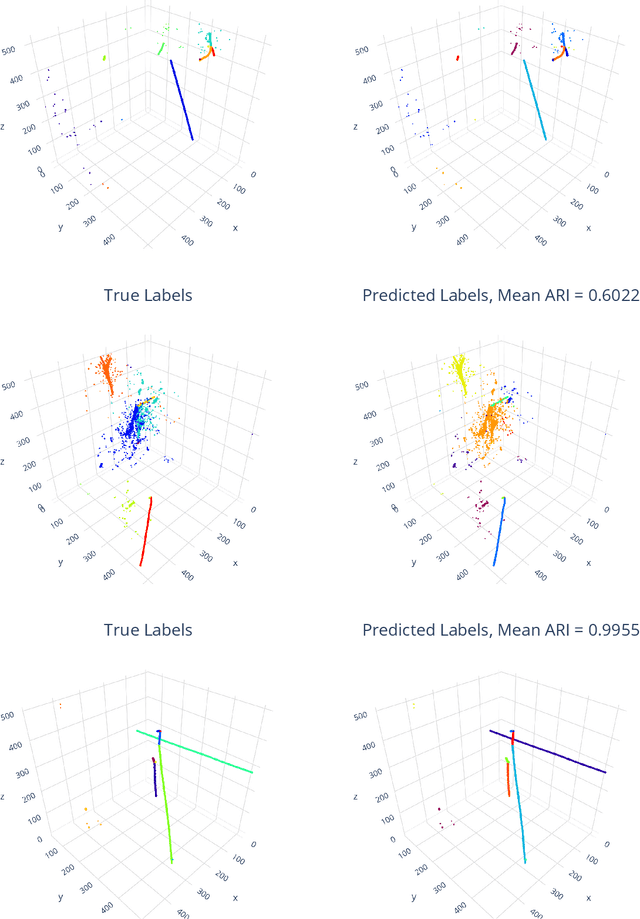

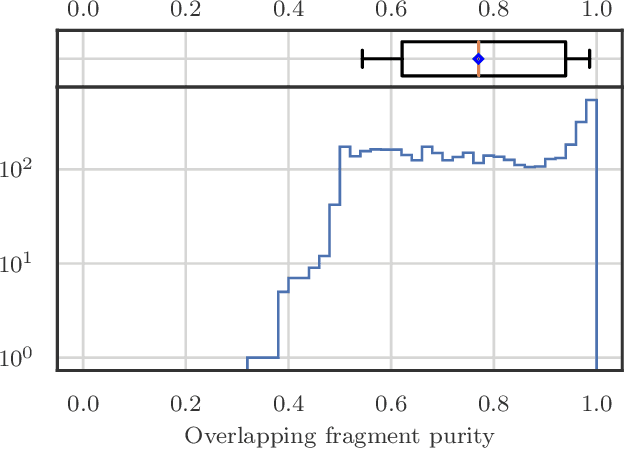

Abstract:Liquid Argon Time Projection Chambers (LArTPCs) are a class of detectors that produce high resolution images of charged particles within their sensitive volume. In these images, the clustering of distinct particles into superstructures is of central importance to the current and future neutrino physics program. Electromagnetic (EM) activity typically exhibits spatially detached fragments of varying morphology and orientation that are challenging to efficiently assemble using traditional algorithms. Similarly, particles that are spatially removed from each other in the detector may originate from a common interaction. Graph Neural Networks (GNNs) were developed in recent years to find correlations between objects embedded in an arbitrary space. GNNs are first studied with the goal of predicting the adjacency matrix of EM shower fragments and to identify the origin of showers, i.e. primary fragments. On the PILArNet public LArTPC simulation dataset, the algorithm developed in this paper achieves a shower clustering accuracy characterized by a mean adjusted Rand index (ARI) of 97.8 % and a primary identification accuracy of 99.8 %. It yields a relative shower energy resolution of $(4.1+1.4/\sqrt{E (\text{GeV})})\,\%$ and a shower direction resolution of $(2.1/\sqrt{E(\text{GeV})})^{\circ}$. The optimized GNN is then applied to the related task of clustering particle instances into interactions and yields a mean ARI of 99.2 % for an interaction density of $\sim\mathcal{O}(1)\,m^{-3}$.
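As a worked example, the two resolution formulas quoted above can be evaluated at a given shower energy. The function names are hypothetical; the coefficients are taken directly from the abstract.

```python
import math

def shower_energy_resolution_percent(energy_gev):
    """Relative energy resolution in percent: 4.1 + 1.4 / sqrt(E [GeV])."""
    return 4.1 + 1.4 / math.sqrt(energy_gev)

def shower_direction_resolution_deg(energy_gev):
    """Direction resolution in degrees: 2.1 / sqrt(E [GeV])."""
    return 2.1 / math.sqrt(energy_gev)

# At 1 GeV the expressions give about 5.5 % and 2.1 degrees;
# at 0.25 GeV they give about 6.9 % and 4.2 degrees.
for energy in (1.0, 0.25):
    print(energy,
          round(shower_energy_resolution_percent(energy), 2),
          round(shower_direction_resolution_deg(energy), 2))
```

Both terms that depend on energy shrink as $1/\sqrt{E}$, so the energy resolution approaches the constant 4.1 % at high energy.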

Track Seeding and Labelling with Embedded-space Graph Neural Networks

Jun 30, 2020

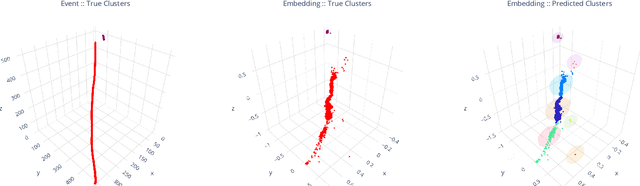

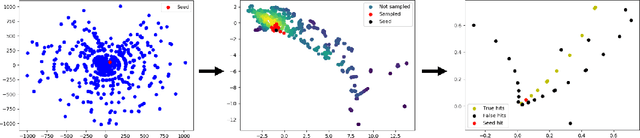

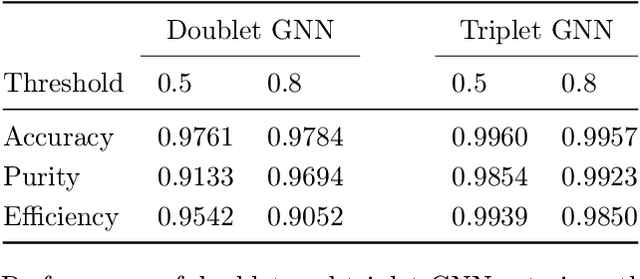

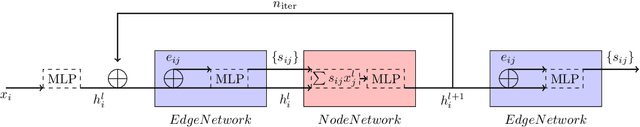

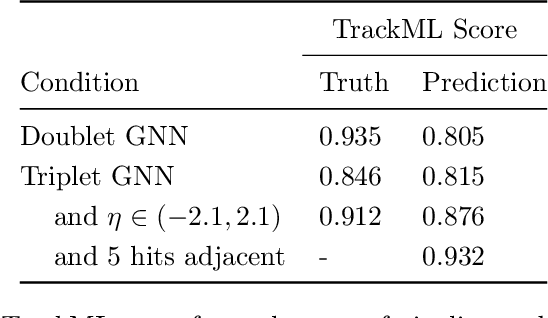

Abstract:To address the unprecedented scale of HL-LHC data, the Exa.TrkX project is investigating a variety of machine learning approaches to particle track reconstruction. The most promising of these solutions, graph neural networks (GNN), process the event as a graph that connects track measurements (detector hits corresponding to nodes) with candidate line segments between the hits (corresponding to edges). Detector information can be associated with nodes and edges, enabling a GNN to propagate the embedded parameters around the graph and predict node-, edge- and graph-level observables. Previously, message-passing GNNs have shown success in predicting doublet likelihood, and we here report updates on the state-of-the-art architectures for this task. In addition, the Exa.TrkX project has investigated innovations in both graph construction, and embedded representations, in an effort to achieve fully learned end-to-end track finding. Hence, we present a suite of extensions to the original model, with encouraging results for hitgraph classification. In addition, we explore increased performance by constructing graphs from learned representations which contain non-linear metric structure, allowing for efficient clustering and neighborhood queries of data points. We demonstrate how this framework fits in with both traditional clustering pipelines, and GNN approaches. The embedded graphs feed into high-accuracy doublet and triplet classifiers, or can be used as an end-to-end track classifier by clustering in an embedded space. A set of post-processing methods improve performance with knowledge of the detector physics. Finally, we present numerical results on the TrackML particle tracking challenge dataset, where our framework shows favorable results in both seeding and track finding.
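Constructing a graph from a learned embedding can be illustrated with a brute-force radius query: hits whose embedded coordinates lie within a chosen distance become connected by a candidate edge. This is a sketch under stated assumptions only. The function name `radius_graph`, the `radius` value, and the toy embedding are hypothetical, and a real pipeline would use a trained embedding network and an efficient neighbor search rather than a dense distance matrix.

```python
import numpy as np

def radius_graph(embedded, radius):
    """Return an (E, 2) array of index pairs (i < j) closer than `radius`.

    `embedded` holds one learned embedding vector per detector hit.
    """
    embedded = np.asarray(embedded, dtype=float)
    dist = np.linalg.norm(embedded[:, None, :] - embedded[None, :, :], axis=-1)
    # Keep the strict upper triangle so each pair appears once and self-loops are dropped.
    src, dst = np.nonzero(np.triu(dist < radius, k=1))
    return np.stack([src, dst], axis=1)

# Toy 2D embedding: the first two hits are close, the third is far away.
embedded_hits = np.array([[0.0, 0.0], [0.5, 0.0], [3.0, 0.0]])
edges = radius_graph(embedded_hits, radius=1.0)
```

The resulting edge list is what a downstream doublet classifier or GNN would score.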
