Thomas Y. Chen

Deep Learning for Space Weather Prediction: Bridging the Gap between Heliophysics Data and Theory

Dec 27, 2022

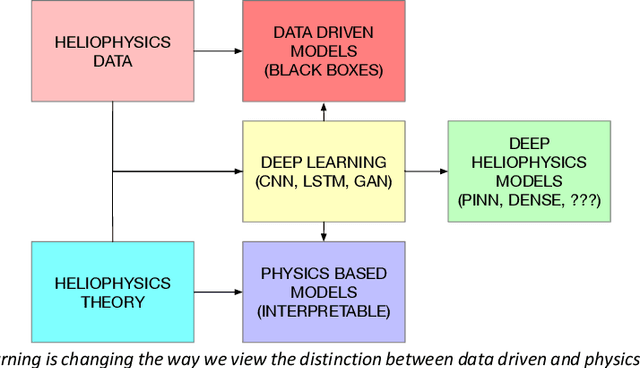

Abstract: Traditionally, data analysis and theory have been viewed as separate disciplines, each feeding into fundamentally different types of models. Modern deep learning technology is beginning to unify these two disciplines and will produce a new class of predictively powerful space weather models that combine the physical insights gained from data and theory. We call on NASA to invest in the research and infrastructure necessary for the heliophysics community to take advantage of these advances.

Interpretable Uncertainty Quantification in AI for HEP

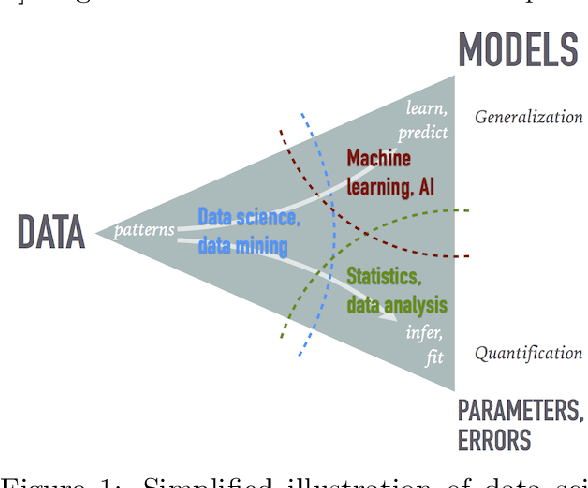

Aug 08, 2022

Abstract: Estimating uncertainty is at the core of performing scientific measurements in HEP: a measurement is not useful without an estimate of its uncertainty. The goal of uncertainty quantification (UQ) is inextricably linked to the question, "how do we physically and statistically interpret these uncertainties?" The answer to this question depends not only on the computational task we aim to undertake, but also on the methods we use for that task. For artificial intelligence (AI) applications in HEP, there are several areas where interpretable methods for UQ are essential, including inference, simulation, and control/decision-making. Some methods exist for each of these areas, but they have not yet been shown to be as trustworthy as the more traditional approaches currently employed in physics (e.g., non-AI frequentist and Bayesian methods). Shedding light on the questions above requires a deeper understanding of the interplay between AI systems and uncertainty quantification. We briefly discuss the existing methods in each area and relate them to tasks across HEP. We then recommend avenues for developing the techniques necessary for reliable, widespread use of AI with UQ over the next decade.

Data Science and Machine Learning in Education

Jul 19, 2022

Abstract: The growing role of data science (DS) and machine learning (ML) in high-energy physics (HEP) is well established and pertinent, given the complex detectors, large data sets, and sophisticated analyses at the heart of HEP research. Moreover, exploiting the symmetries inherent in physics data has inspired physics-informed ML as a vibrant sub-field of computer science research. HEP researchers benefit greatly from widely available materials for use in education, training, and workforce development. They also contribute to these materials and provide software to DS/ML-related fields. Increasingly, physics departments are offering courses at the intersection of DS, ML, and physics, often using curricula developed by HEP researchers and involving open software and data used in HEP. In this white paper, we explore synergies between HEP research and DS/ML education, discuss opportunities and challenges at this intersection, and propose community activities that will be mutually beneficial.

MonarchNet: Differentiating Monarch Butterflies from Butterflies Species with Similar Phenotypes

Jan 24, 2022

Abstract: In recent years, the monarch butterfly's iconic migration patterns have come under threat from a number of factors, from climate change to pesticide use. To track trends in their populations, scientists and citizen scientists alike must identify individuals accurately. This is especially important for monarch butterflies because other butterfly species, such as the viceroy, are "look-alikes" (a term used by the Convention on International Trade in Endangered Species of Wild Fauna and Flora) with similar phenotypes. To tackle this problem and enable more efficient identification, we present MonarchNet, the first comprehensive dataset of butterfly imagery for monarchs and five look-alike species. We train a baseline deep-learning classification model to serve as a tool for differentiating monarch butterflies from their various look-alikes. We seek to contribute to the study of biodiversity and butterfly ecology by providing a novel method for computationally classifying these particular butterfly species. The ultimate aim is to help scientists track monarch butterfly population and migration trends as precisely and efficiently as possible.

Interpretability in Convolutional Neural Networks for Building Damage Classification in Satellite Imagery

Jan 24, 2022

Abstract: Natural disasters ravage the world's cities, valleys, and shores on a regular basis. Deploying precise and efficient computational mechanisms for assessing infrastructure damage is essential to channel resources and minimize the loss of life. Using a dataset that includes labeled pre- and post-disaster satellite imagery, we take a machine learning-based remote sensing approach and train multiple convolutional neural networks (CNNs) to assess building damage on a per-building basis. We present a novel methodology of interpretable deep learning that explicitly investigates which modalities of information in the training data are most useful for creating an accurate classification model. We also investigate which loss functions best optimize these models. We find that ordinal cross-entropy loss is the best-performing optimization criterion, and that including the type of disaster that caused the damage alongside pre- and post-disaster training data yields the most accurate predictions of damage level. Further, we use gradient-weighted class activation mapping (Grad-CAM) to qualitatively visualize which parts of the images the model uses to predict damage levels. Our research seeks to contribute computational tools to addressing this ongoing and growing humanitarian crisis, heightened by anthropogenic climate change.
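The abstract does not define its ordinal cross-entropy loss. As a minimal sketch, assuming one common formulation (not necessarily the authors'), K ordered damage levels can be encoded as K-1 cumulative binary targets ("is the level greater than j?"), with a sigmoid cross-entropy applied to each threshold logit. The function names, the four-level setup, and the example logits below are hypothetical and chosen for illustration only.

```python
import math
from typing import Sequence


def ordinal_targets(label: int, num_classes: int) -> list[float]:
    """Encode an ordinal label as num_classes - 1 cumulative binary targets.

    Target j answers "is the damage level greater than j?", so with four
    levels, label 2 becomes [1.0, 1.0, 0.0]. (Hypothetical helper.)
    """
    if not 0 <= label < num_classes:
        raise ValueError(f"label must be in [0, {num_classes}), got {label}")
    return [1.0 if j < label else 0.0 for j in range(num_classes - 1)]


def _log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def ordinal_cross_entropy(logits: Sequence[float], label: int, num_classes: int) -> float:
    """Mean binary cross-entropy over the cumulative thresholds (assumed formulation)."""
    if len(logits) != num_classes - 1:
        raise ValueError("expected one logit per threshold (num_classes - 1)")
    targets = ordinal_targets(label, num_classes)
    total = 0.0
    for z, t in zip(logits, targets):
        # log(1 - sigmoid(z)) equals log(sigmoid(-z)), which avoids cancellation.
        total -= t * _log_sigmoid(z) + (1.0 - t) * _log_sigmoid(-z)
    return total / len(logits)


def predict_level(logits: Sequence[float]) -> int:
    """Predicted level = number of thresholds with probability above 0.5 (logit > 0)."""
    return sum(1 for z in logits if z > 0.0)
```

For example, with four levels and a true level of 0, the logits `[3.0, 3.0, 3.0]` (which predict level 3) incur a larger loss than `[3.0, -3.0, -3.0]` (which predict level 1). This encoding penalizes predictions more the farther they land from the true level, whereas standard softmax cross-entropy depends only on the probability assigned to the true class.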
