Laura Dominé

for the DeepLearnPhysics Collaboration

Commissioning An All-Sky Infrared Camera Array for Detection Of Airborne Objects

Nov 12, 2024

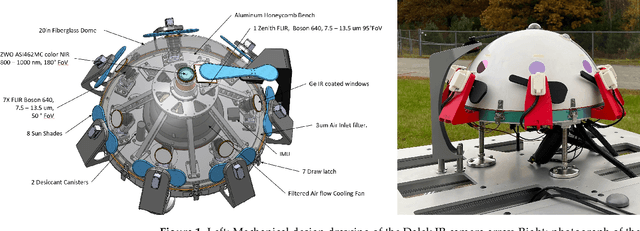

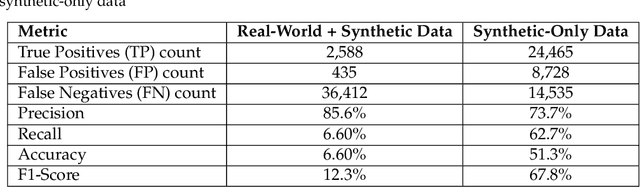

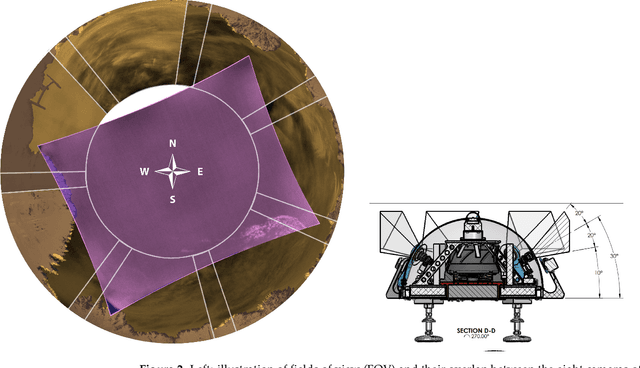

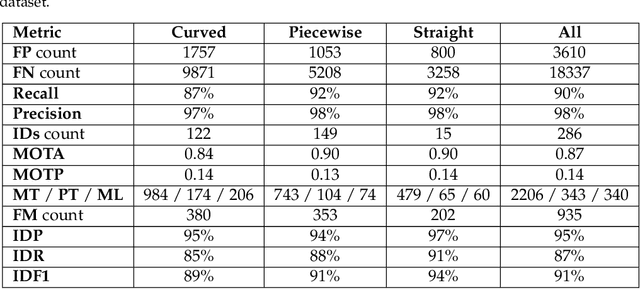

Abstract: To date there is little publicly available scientific data on Unidentified Aerial Phenomena (UAP) whose properties and kinematics purportedly reside outside the performance envelope of known phenomena. To address this deficiency, the Galileo Project is designing, building, and commissioning a multi-modal ground-based observatory to continuously monitor the sky and conduct a rigorous long-term aerial census of all aerial phenomena, including natural and human-made. One of the key instruments is an all-sky infrared camera array using eight uncooled long-wave infrared FLIR Boson 640 cameras. Their calibration includes a novel extrinsic calibration method using airplane positions from Automatic Dependent Surveillance-Broadcast (ADS-B) data. We establish a first baseline for the system performance over five months of field operation, using a real-world dataset derived from ADS-B data, synthetic 3-D trajectories, and a hand-labelled real-world dataset. We report acceptance rates (e.g. viewable airplanes that are recorded) and detection efficiencies (e.g. recorded airplanes which are successfully detected) for a variety of weather conditions, ranges, and aircraft sizes. We reconstruct $\sim$500,000 trajectories of aerial objects from this commissioning period. A toy outlier search focused on large sinuosity of the 2-D reconstructed trajectories flags about 16% of trajectories as outliers. After manual review, 144 trajectories remain ambiguous: they are likely mundane objects but cannot be elucidated at this stage of development without distance and kinematics estimation or other sensor modalities. Our observed count of ambiguous outliers combined with systematic uncertainties yields an upper limit of 18,271 on the outlier count for the five-month interval at a 95% confidence level. This likelihood-based method to evaluate significance is applicable to all of our future outlier searches.
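The abstract does not define sinuosity, so the following is only a minimal sketch under one common assumption: sinuosity is taken as the ratio of the path length of a 2-D trajectory to the straight-line distance between its endpoints. The function name `sinuosity` and the sample trajectories are hypothetical and are not taken from the paper; no flagging threshold is given in the abstract, so none is used here.

```python
import math


def sinuosity(points):
    """Path length divided by endpoint-to-endpoint distance.

    `points` is a sequence of (x, y) image coordinates. This definition is an
    assumption for illustration, not necessarily the one used in the paper.
    """
    if len(points) < 2:
        raise ValueError("need at least two points")
    # Sum the lengths of consecutive segments along the trajectory.
    path = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    # Straight-line distance between the first and last point.
    chord = math.dist(points[0], points[-1])
    # A closed loop has zero chord length; treat it as maximally sinuous.
    return math.inf if chord == 0 else path / chord


# Hypothetical trajectories: a straight track and a zigzag track.
straight = [(0, 0), (1, 0), (2, 0)]
zigzag = [(0, 0), (1, 1), (2, 0), (3, 1), (4, 0)]

print(sinuosity(straight))
print(sinuosity(zigzag))
```

Under this definition a straight track scores exactly 1 and any detour raises the value, so an outlier search would flag trajectories whose score exceeds some chosen cut.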

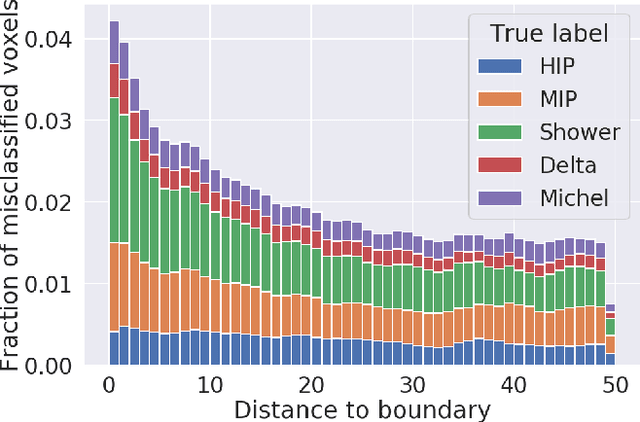

Scalable, End-to-End, Deep-Learning-Based Data Reconstruction Chain for Particle Imaging Detectors

Feb 01, 2021

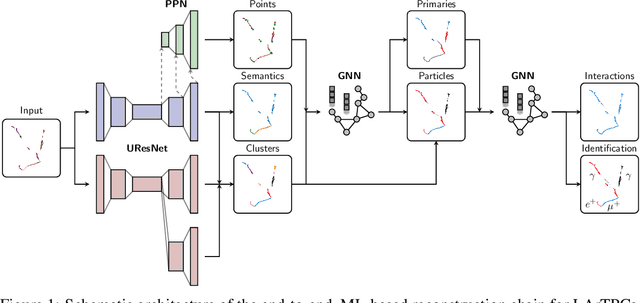

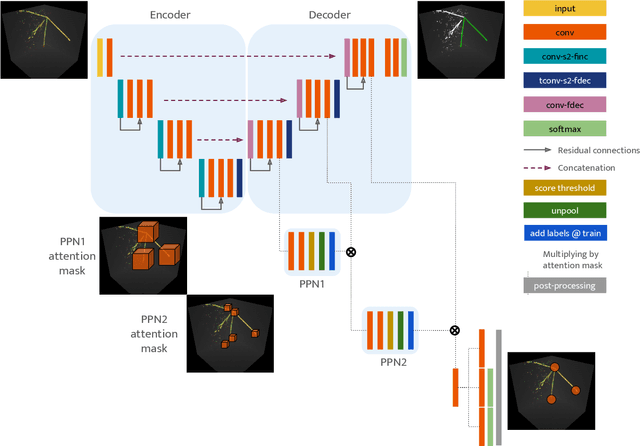

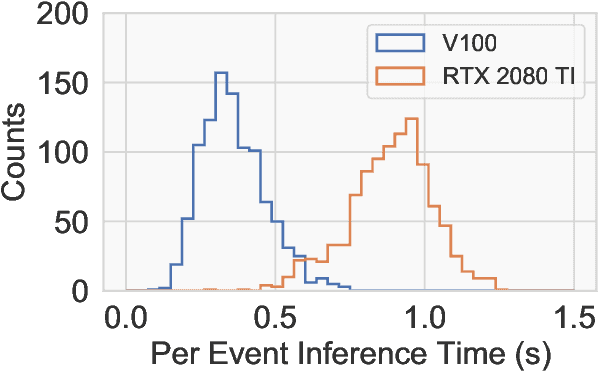

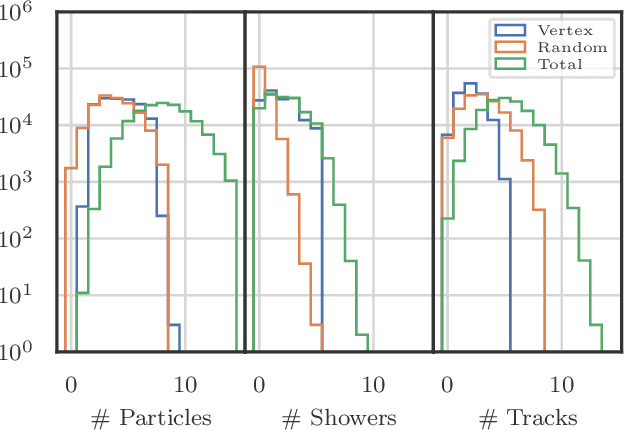

Abstract: Recent inroads in Computer Vision (CV) and Machine Learning (ML) have motivated a new approach to the analysis of particle imaging detector data. Unlike previous efforts which tackled isolated CV tasks, this paper introduces an end-to-end, ML-based data reconstruction chain for Liquid Argon Time Projection Chambers (LArTPCs), the state-of-the-art in precision imaging at the intensity frontier of neutrino physics. The chain is a multi-task network cascade which combines voxel-level feature extraction using Sparse Convolutional Neural Networks and particle superstructure formation using Graph Neural Networks. Each algorithm incorporates physics-informed inductive biases, while their collective hierarchy is used to enforce a causal structure. The output is a comprehensive description of an event that may be used for high-level physics inference. The chain is end-to-end optimizable, eliminating the need for time-intensive manual software adjustments. It is also the first implementation to handle the unprecedented pile-up of dozens of high energy neutrino interactions, expected in the 3D-imaging LArTPC of the Deep Underground Neutrino Experiment. The chain is trained as a whole and its performance is assessed at each step using an open simulated data set.

Central Yup'ik and Machine Translation of Low-Resource Polysynthetic Languages

Sep 09, 2020

Abstract: Machine translation tools do not yet exist for the Yup'ik language, a polysynthetic language spoken by around 8,000 people who live primarily in Southwest Alaska. We compiled a parallel text corpus for Yup'ik and English and developed a morphological parser for Yup'ik based on grammar rules. We trained a seq2seq neural machine translation model with attention to translate Yup'ik input into English. We then compared the influence of different tokenization methods, namely rule-based, unsupervised (byte pair encoding), and unsupervised morphological (Morfessor) parsing, on BLEU score accuracy for Yup'ik to English translation. We find that using tokenized input increases the translation accuracy compared to that of unparsed input. Although overall Morfessor did best with a vocabulary size of 30k, our first experiments show that BPE performed best with a reduced vocabulary size.

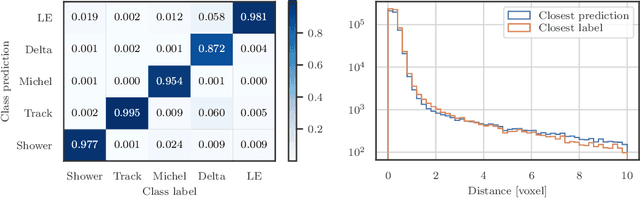

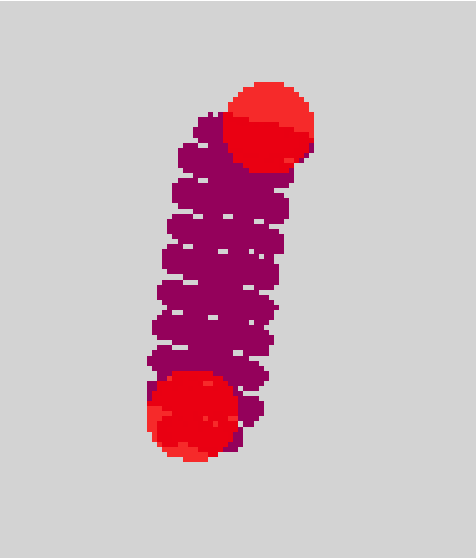

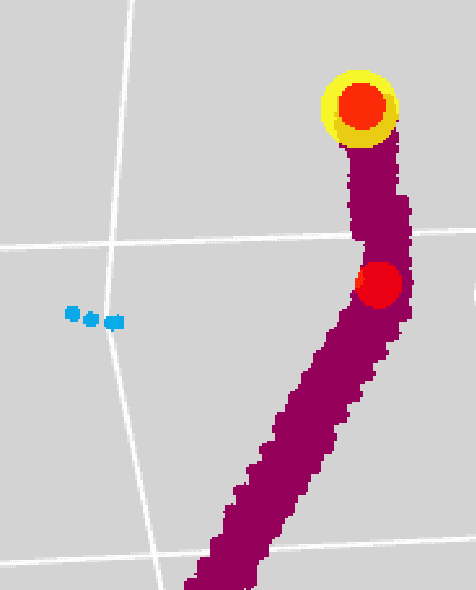

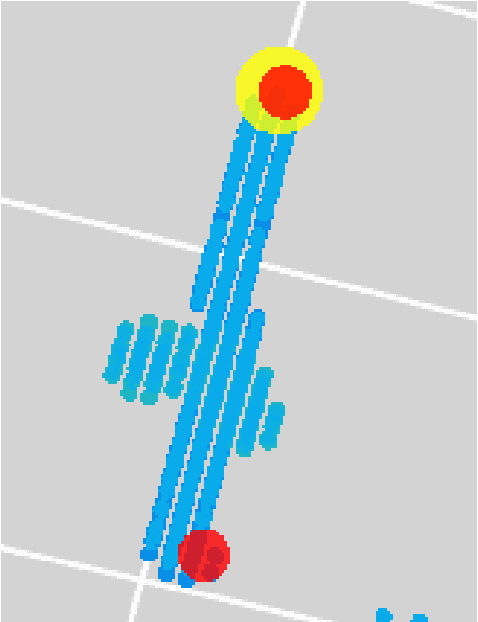

Point Proposal Network for Reconstructing 3D Particle Endpoints with Sub-Pixel Precision in Liquid Argon Time Projection Chambers

Jul 10, 2020

Abstract: Liquid Argon Time Projection Chambers (LArTPC) are particle imaging detectors recording 2D or 3D images of trajectories of charged particles. Identifying points of interest in these images, namely the initial and terminal points of track-like particle trajectories such as muons and protons, and the initial points of electromagnetic shower-like particle trajectories such as electrons and gamma rays, is a crucial step of identifying and analyzing these particles and impacts the inference of physics signals such as neutrino interaction. The Point Proposal Network is designed to discover these specific points of interest. The algorithm predicts with a sub-voxel precision their spatial location, and also determines the category of the identified points of interest. Using as a benchmark the PILArNet public LArTPC data sample in which the voxel resolution is 3 mm/voxel, our algorithm successfully predicted 96.8% and 97.8% of 3D points within a distance of 3 and 10 voxels from the provided true point locations respectively. For the predicted 3D points within 3 voxels of the closest true point locations, the median distance is found to be 0.25 voxels, achieving the sub-voxel level precision. In addition, we report our analysis of the mistakes where our algorithm prediction differs from the provided true point positions by more than 10 voxels. Among 50 mistakes visually scanned, 25 were due to the definition of true position location, 15 were legitimate mistakes where a physicist cannot visually disagree with the algorithm's prediction, and 10 were genuine mistakes that we wish to improve in the future. Further, using these predicted points, we demonstrate a simple algorithm to cluster 3D voxels into individual track-like particle trajectories with a clustering efficiency, purity, and Adjusted Rand Index of 96%, 93%, and 91% respectively.

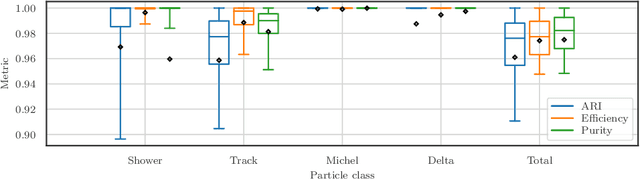

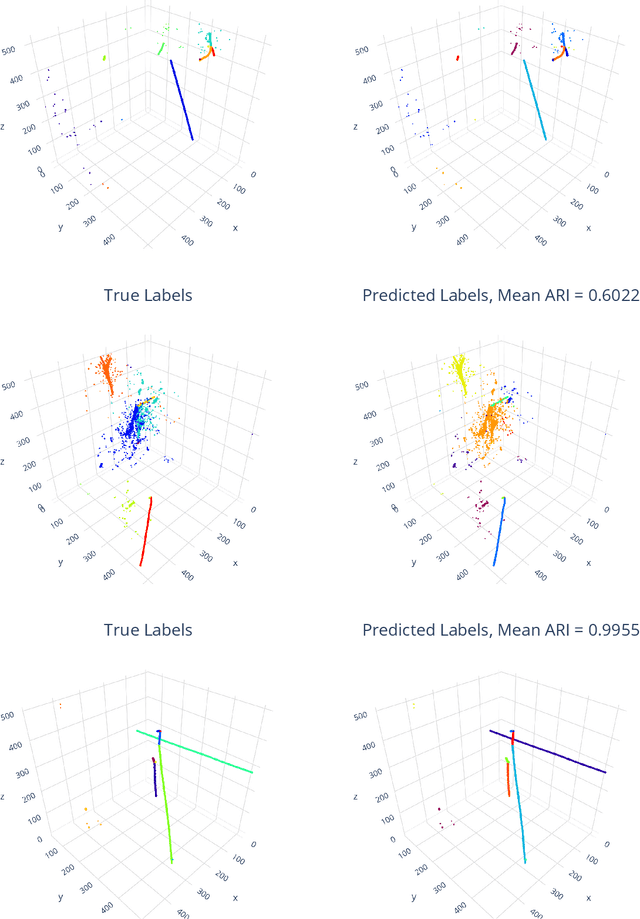

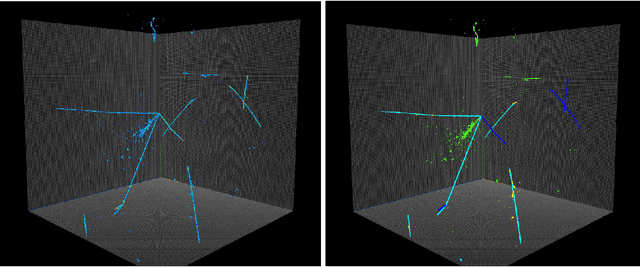

Scalable, Proposal-free Instance Segmentation Network for 3D Pixel Clustering and Particle Trajectory Reconstruction in Liquid Argon Time Projection Chambers

Jul 06, 2020

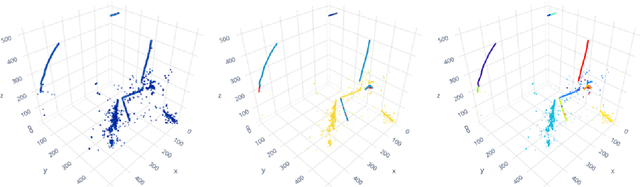

Abstract: Liquid Argon Time Projection Chambers (LArTPCs) are high resolution particle imaging detectors, employed by accelerator-based neutrino oscillation experiments for high precision physics measurements. While images of particle trajectories are intuitive to analyze for physicists, the development of a high quality, automated data reconstruction chain remains challenging. One of the most critical reconstruction steps is particle clustering: the task of grouping 3D image pixels into different particle instances that share the same particle type. In this paper, we propose the first scalable deep learning algorithm for particle clustering in LArTPC data using sparse convolutional neural networks (SCNN). Building on previous works on SCNNs and proposal free instance segmentation, we build an end-to-end trainable instance segmentation network that learns an embedding of the image pixels to perform point cloud clustering in a transformed space. We benchmark the performance of our algorithm on PILArNet, a public 3D particle imaging dataset, with respect to common clustering evaluation metrics. 3D pixels were successfully clustered into individual particle trajectories with 90% of them having an adjusted Rand index score greater than 92% with a mean pixel clustering efficiency and purity above 96%. This work contributes to the development of an end-to-end optimizable full data reconstruction chain for LArTPCs, in particular pixel-based 3D imaging detectors including the near detector of the Deep Underground Neutrino Experiment. Our algorithm is made available in the open access repository, and we share our Singularity software container, which can be used to reproduce our work on the dataset.

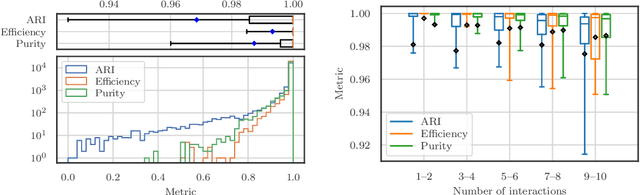

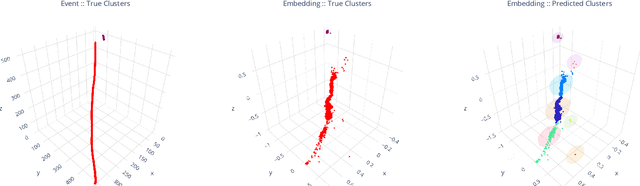

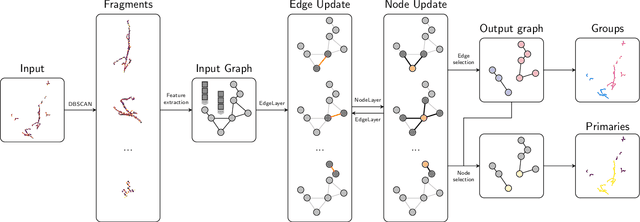

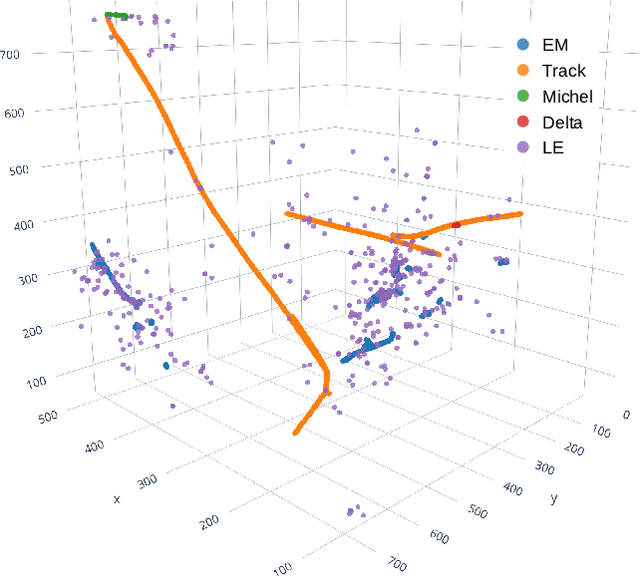

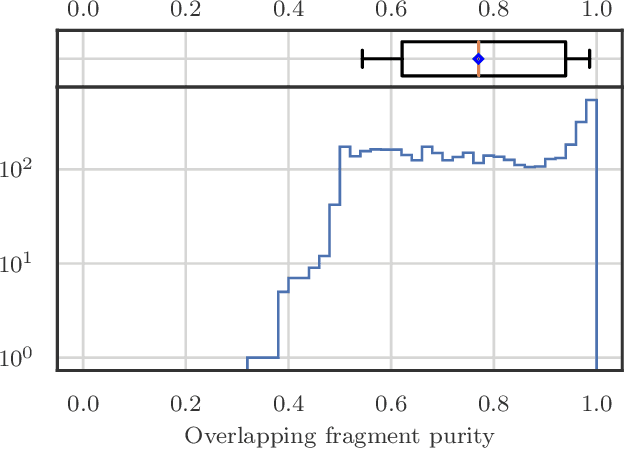

Clustering of Electromagnetic Showers and Particle Interactions with Graph Neural Networks in Liquid Argon Time Projection Chambers Data

Jul 02, 2020

Abstract: Liquid Argon Time Projection Chambers (LArTPCs) are a class of detectors that produce high resolution images of charged particles within their sensitive volume. In these images, the clustering of distinct particles into superstructures is of central importance to the current and future neutrino physics program. Electromagnetic (EM) activity typically exhibits spatially detached fragments of varying morphology and orientation that are challenging to efficiently assemble using traditional algorithms. Similarly, particles that are spatially removed from each other in the detector may originate from a common interaction. Graph Neural Networks (GNNs) were developed in recent years to find correlations between objects embedded in an arbitrary space. GNNs are first studied with the goal of predicting the adjacency matrix of EM shower fragments and identifying the origin of showers, i.e. primary fragments. On the PILArNet public LArTPC simulation dataset, the algorithm developed in this paper achieves a shower clustering accuracy characterized by a mean adjusted Rand index (ARI) of 97.8% and a primary identification accuracy of 99.8%. It yields a relative shower energy resolution of $(4.1+1.4/\sqrt{E (\text{GeV})})\,\%$ and a shower direction resolution of $(2.1/\sqrt{E(\text{GeV})})^{\circ}$. The optimized GNN is then applied to the related task of clustering particle instances into interactions and yields a mean ARI of 99.2% for an interaction density of $\sim\mathcal{O}(1)\,m^{-3}$.
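To make the two quoted resolution formulas concrete, here is a small sketch that evaluates them at a given shower energy. The coefficients come directly from the abstract; the function names and the sample energies are assumptions chosen for illustration only.

```python
import math


def shower_energy_resolution_pct(energy_gev):
    """Relative energy resolution in percent: 4.1 + 1.4 / sqrt(E[GeV])."""
    if energy_gev <= 0:
        raise ValueError("energy must be positive")
    return 4.1 + 1.4 / math.sqrt(energy_gev)


def shower_direction_resolution_deg(energy_gev):
    """Direction resolution in degrees: 2.1 / sqrt(E[GeV])."""
    if energy_gev <= 0:
        raise ValueError("energy must be positive")
    return 2.1 / math.sqrt(energy_gev)


# Hypothetical sample energies in GeV, chosen only to show the trend.
for energy in (0.25, 1.0, 4.0):
    print(
        f"E = {energy:.2f} GeV: "
        f"energy resolution {shower_energy_resolution_pct(energy):.2f} %, "
        f"direction resolution {shower_direction_resolution_deg(energy):.2f} deg"
    )
```

At 1 GeV the formulas give an energy resolution of about 5.5% and a direction resolution of about 2.1 degrees. Both improve as the energy grows, with the energy resolution approaching the constant 4.1% term.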

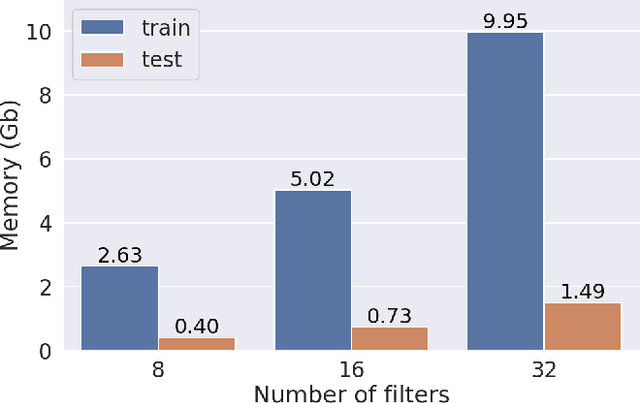

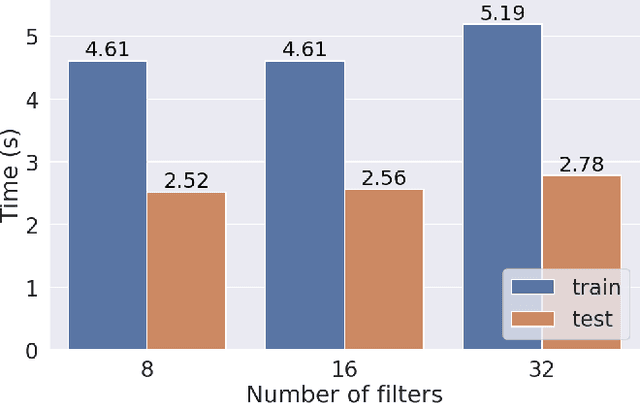

Scalable Deep Convolutional Neural Networks for Sparse, Locally Dense Liquid Argon Time Projection Chamber Data

Mar 15, 2019

Abstract: Deep convolutional neural networks (CNNs) show strong promise for analyzing scientific data in many domains including particle imaging detectors such as a liquid argon time projection chamber (LArTPC). Yet the high sparsity of LArTPC data challenges traditional CNNs which were designed for dense data such as photographs. A naive application of CNNs on LArTPC data results in inefficient computations and a poor scalability to large LArTPC detectors such as the Short Baseline Neutrino Program and Deep Underground Neutrino Experiment. Recently Submanifold Sparse Convolutional Networks (SSCNs) have been proposed to address this challenge. We report their performance on a 3D semantic segmentation task on simulated LArTPC samples. In comparison with standard CNNs, we observe that the computation memory and wall-time cost for inference are reduced by factors of 364 and 33 respectively without loss of accuracy. The same factors for 2D samples are found to be 93 and 3.1 respectively. Using SSCN, we present the first machine learning-based approach to the reconstruction of Michel electrons using public 3D LArTPC samples. We find a Michel electron identification efficiency of 93.9% with a true positive rate of 98.8%. Reconstructed Michel electron clusters yield 96.1% in average pixel clustering efficiency and 97.3% in purity. These results show the strong promise of scalable data reconstruction techniques using deep neural networks for large scale LArTPC detectors.
