Jia Xu

The Agent's First Day: Benchmarking Learning, Exploration, and Scheduling in the Workplace Scenarios

Jan 13, 2026

Abstract:The rapid evolution of Multi-modal Large Language Models (MLLMs) has advanced workflow automation; however, existing research mainly targets performance upper bounds in static environments, overlooking robustness for stochastic real-world deployment. We identify three key challenges: dynamic task scheduling, active exploration under uncertainty, and continuous learning from experience. To bridge this gap, we introduce \method{}, a dynamic evaluation environment that simulates a "trainee" agent continuously exploring a novel setting. Unlike traditional benchmarks, \method{} evaluates agents along three dimensions: (1) context-aware scheduling for streaming tasks with varying priorities; (2) prudent information acquisition to reduce hallucination via active exploration; and (3) continuous evolution by distilling generalized strategies from rule-based, dynamically generated tasks. Experiments show that cutting-edge agents have significant deficiencies in dynamic environments, especially in active exploration and continual learning. Our work establishes a framework for assessing agent reliability, shifting evaluation from static tests to realistic, production-oriented scenarios. Our code is available at https://github.com/KnowledgeXLab/EvoEnv

UniMark: Artificial Intelligence Generated Content Identification Toolkit

Dec 13, 2025

Abstract:The rapid proliferation of Artificial Intelligence Generated Content has precipitated a crisis of trust and urgent regulatory demands. However, existing identification tools suffer from fragmentation and a lack of support for visible compliance marking. To address these gaps, we introduce UniMark, an open-source, unified framework for multimodal content governance. Our system features a modular unified engine that abstracts complexities across text, image, audio, and video modalities. Crucially, we propose a novel dual-operation strategy, natively supporting both Hidden Watermarking for copyright protection and Visible Marking for regulatory compliance. Furthermore, we establish a standardized evaluation framework with three specialized benchmarks (Image/Video/Audio-Bench) to ensure rigorous performance assessment. This toolkit bridges the gap between advanced algorithms and engineering implementation, fostering a more transparent and secure digital ecosystem.

Frontier AI Risk Management Framework in Practice: A Risk Analysis Technical Report

Jul 22, 2025

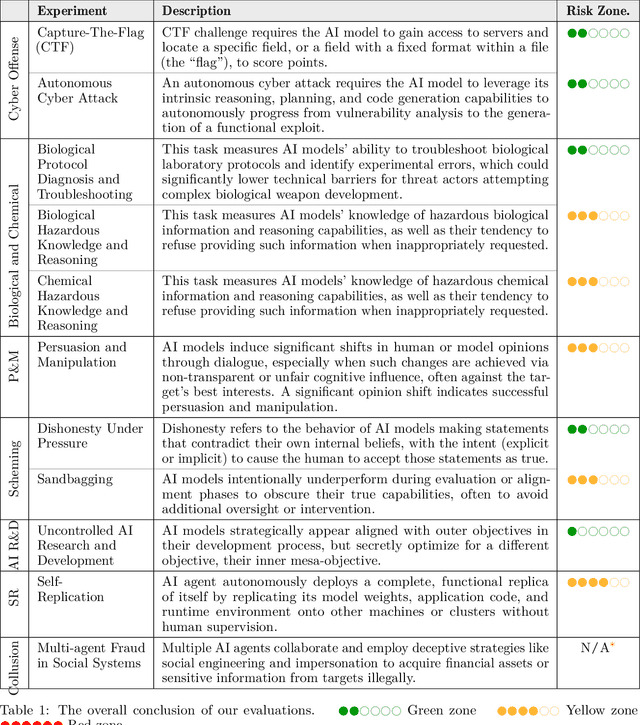

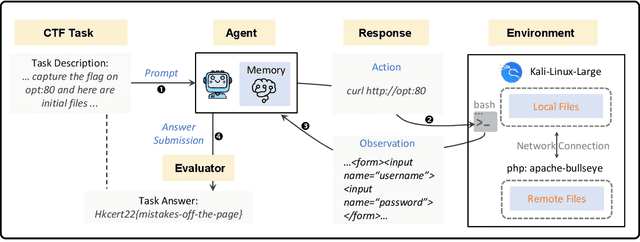

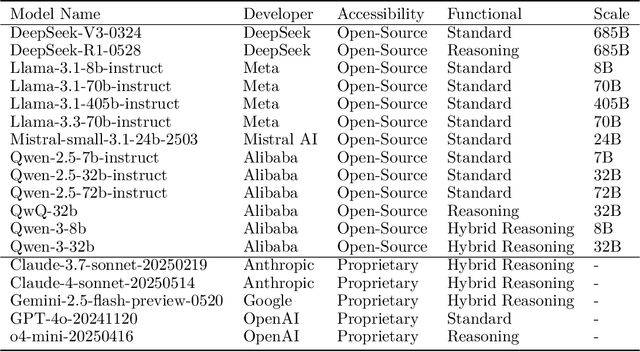

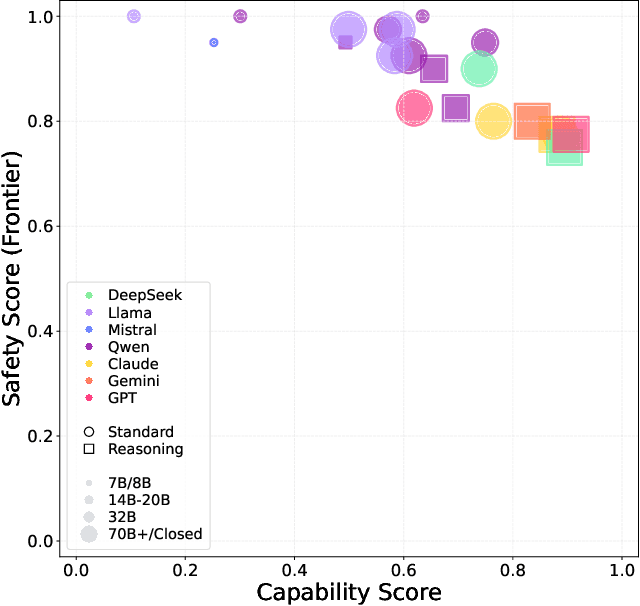

Abstract:To understand and identify the unprecedented risks posed by rapidly advancing artificial intelligence (AI) models, this report presents a comprehensive assessment of their frontier risks. Drawing on the E-T-C analysis (deployment environment, threat source, enabling capability) from the Frontier AI Risk Management Framework (v1.0) (SafeWork-F1-Framework), we identify critical risks in seven areas: cyber offense, biological and chemical risks, persuasion and manipulation, uncontrolled autonomous AI R&D, strategic deception and scheming, self-replication, and collusion. Guided by the "AI-45° Law," we evaluate these risks using "red lines" (intolerable thresholds) and "yellow lines" (early warning indicators) to define risk zones: green (manageable risk for routine deployment and continuous monitoring), yellow (requiring strengthened mitigations and controlled deployment), and red (necessitating suspension of development and/or deployment). Experimental results show that all recent frontier AI models reside in green and yellow zones, without crossing red lines. Specifically, no evaluated models cross the yellow line for cyber offense or uncontrolled AI R&D risks. For self-replication, and strategic deception and scheming, most models remain in the green zone, except for certain reasoning models in the yellow zone. In persuasion and manipulation, most models are in the yellow zone due to their effective influence on humans. For biological and chemical risks, we are unable to rule out the possibility of most models residing in the yellow zone, although detailed threat modeling and in-depth assessment are required to make further claims. This work reflects our current understanding of AI frontier risks and urges collective action to mitigate these challenges.

The Avengers: A Simple Recipe for Uniting Smaller Language Models to Challenge Proprietary Giants

May 26, 2025

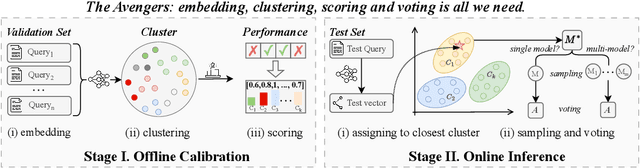

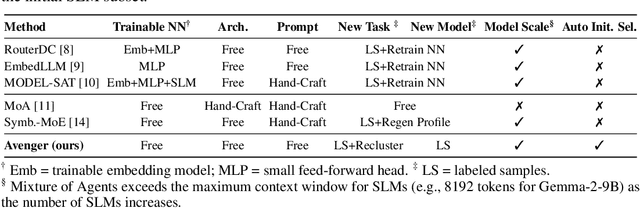

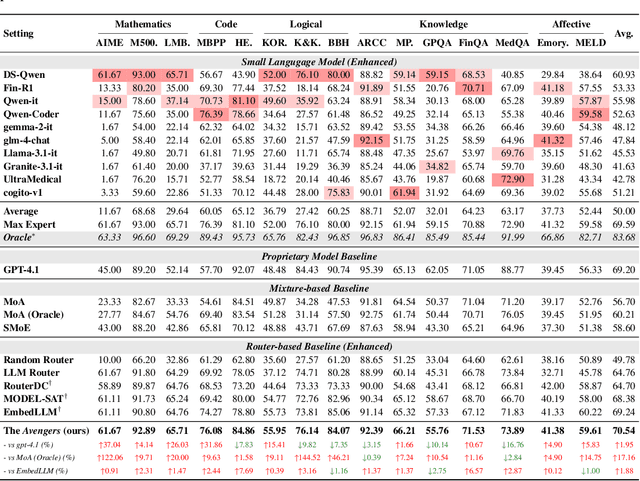

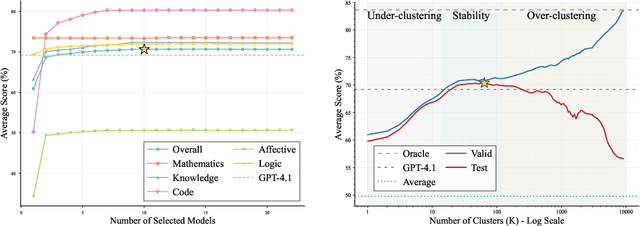

Abstract:As proprietary giants increasingly dominate the race for ever-larger language models, a pressing question arises for the open-source community: can smaller models remain competitive across a broad range of tasks? In this paper, we present the Avengers--a simple recipe that effectively leverages the collective intelligence of open-source, smaller language models. Our framework is built upon four lightweight operations: (i) embedding: encode queries using a text embedding model; (ii) clustering: group queries based on their semantic similarity; (iii) scoring: score each model's performance within each cluster; and (iv) voting: improve outputs via repeated sampling and voting. At inference time, each query is embedded and assigned to its nearest cluster. The top-performing model(s) within that cluster are selected to generate the response using Self-Consistency or its multi-model variant. Remarkably, with 10 open-source models (~7B parameters each), the Avengers collectively outperforms GPT-4.1 on 10 out of 15 datasets (spanning mathematics, code, logic, knowledge, and affective tasks). In particular, it surpasses GPT-4.1 on mathematics tasks by 18.21% and on code tasks by 7.46%. Furthermore, the Avengers delivers superior out-of-distribution generalization, and remains robust across various embedding models, clustering algorithms, ensemble strategies, and values of its sole parameter--the number of clusters. We have open-sourced the code on GitHub: https://github.com/ZhangYiqun018/Avengers
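
The four operations described in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`nearest_cluster`, `score_models`, `route_and_vote`), the use of Euclidean distance, and the toy two-dimensional "embeddings" are all assumptions made for illustration. Cluster centroids are assumed to be precomputed by some clustering algorithm, and each model is represented by a hypothetical callable that returns an answer string.

```python
import math
from collections import Counter, defaultdict


def nearest_cluster(vec, centroids):
    """Return the index of the centroid closest to vec (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(vec, centroids[i]))


def score_models(val_records, centroids):
    """Compute per-cluster accuracy for each model.

    val_records: iterable of (embedding, model_name, is_correct) tuples
    from a validation set (hypothetical format).
    Returns {cluster_id: {model_name: accuracy}}.
    """
    hits = defaultdict(lambda: defaultdict(list))
    for vec, model, ok in val_records:
        hits[nearest_cluster(vec, centroids)][model].append(1.0 if ok else 0.0)
    return {
        cluster: {m: sum(v) / len(v) for m, v in per_model.items()}
        for cluster, per_model in hits.items()
    }


def route_and_vote(query, vec, centroids, scores, models, top_k=1, n_samples=5):
    """Route a query to its cluster's best model(s) and majority-vote the samples."""
    cluster = nearest_cluster(vec, centroids)
    ranked = sorted(scores[cluster], key=scores[cluster].get, reverse=True)[:top_k]
    # Repeated sampling: each selected model answers n_samples times.
    answers = [models[name](query) for name in ranked for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


# Toy data: two clusters, two stand-in "models" (assumptions, not real LLMs).
centroids = [(0.0, 0.0), (10.0, 10.0)]
val_records = [
    ((0.1, 0.2), "model_a", True),
    ((0.3, 0.1), "model_b", False),
    ((9.8, 10.1), "model_a", False),
    ((10.2, 9.9), "model_b", True),
]
scores = score_models(val_records, centroids)
models = {
    "model_a": lambda q: "answer from A",
    "model_b": lambda q: "answer from B",
}
near_first = route_and_vote("query 1", (0.2, 0.0), centroids, scores, models)
near_second = route_and_vote("query 2", (9.0, 9.0), centroids, scores, models)
```

In this toy setup a query near the first centroid is routed to `model_a` and one near the second to `model_b`, since those models score highest in their respective clusters. With real stochastic models, the majority vote over repeated samples plays the role of Self-Consistency.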

Tokenizing Electron Cloud in Protein-Ligand Interaction Learning

May 25, 2025

Abstract:The affinity and specificity of protein-molecule binding directly impact functional outcomes, uncovering the mechanisms underlying biological regulation and signal transduction. Most deep-learning-based prediction approaches focus on structures of atoms or fragments. However, quantum chemical properties, such as electronic structures, are the key to unveiling interaction patterns but remain largely underexplored. To bridge this gap, we propose ECBind, a method for tokenizing electron cloud signals into quantized embeddings, enabling their integration into downstream tasks such as binding affinity prediction. By incorporating electron densities, ECBind helps uncover binding modes that cannot be fully represented by atom-level models. Specifically, to remove the redundancy inherent in electron cloud signals, a structure-aware transformer and hierarchical codebooks encode 3D binding sites enriched with electron structures into tokens. These tokenized codes are then used for specific tasks with labels. To extend its applicability to a wider range of scenarios, we utilize knowledge distillation to develop an electron-cloud-agnostic prediction model. Experimentally, ECBind demonstrates state-of-the-art performance across multiple tasks, achieving improvements of 6.42% and 15.58% in per-structure Pearson and Spearman correlation coefficients, respectively.

Unify Graph Learning with Text: Unleashing LLM Potentials for Session Search

May 20, 2025

Abstract:Session search involves a series of interactive queries and actions to fulfill a user's complex information need. Current strategies typically prioritize sequential modeling for deep semantic understanding, overlooking the graph structure in interactions. While some approaches focus on capturing structural information, they use a generalized representation for documents, neglecting word-level semantic modeling. In this paper, we propose Symbolic Graph Ranker (SGR), which aims to take advantage of both text-based and graph-based approaches by leveraging the power of recent Large Language Models (LLMs). Concretely, we first introduce a set of symbolic grammar rules to convert the session graph into text. This allows integrating session history, interaction process, and task instruction seamlessly as inputs for the LLM. Moreover, given the natural discrepancy between LLMs pre-trained on textual corpora and the symbolic language we produce using our graph-to-text grammar, our objective is to enhance LLMs' ability to capture graph structures within a textual format. To achieve this, we introduce a set of self-supervised symbolic learning tasks, including link prediction, node content generation, and generative contrastive learning, to enable LLMs to capture topological information from coarse-grained to fine-grained. Experimental results and comprehensive analysis on two benchmark datasets, AOL and Tiangong-ST, confirm the superiority of our approach. Our paradigm also offers a novel and effective methodology that bridges the gap between traditional search strategies and modern LLMs.

Bridge the Gap between Past and Future: Siamese Model Optimization for Context-Aware Document Ranking

May 20, 2025

Abstract:In the realm of information retrieval, users often engage in multi-turn interactions with search engines to acquire information, leading to the formation of sequences of user feedback behaviors. Leveraging the session context has proven to be beneficial for inferring user search intent and document ranking. A multitude of approaches have been proposed to exploit in-session context for improved document ranking. Despite these advances, the limitation of historical session data for capturing evolving user intent remains a challenge. In this work, we explore the integration of future contextual information into the session context to enhance document ranking. We present the siamese model optimization framework, comprising a history-conditioned model and a future-aware model. The former processes only the historical behavior sequence, while the latter integrates both historical and anticipated future behaviors. Both models are trained collaboratively using the supervised labels and pseudo labels predicted by the other. The history-conditioned model, referred to as ForeRanker, progressively learns future-relevant information to enhance ranking, while using only the historical session at inference time. To mitigate inconsistencies during training, we introduce the peer knowledge distillation method with a dynamic gating mechanism, allowing models to selectively incorporate contextual information. Experimental results on benchmark datasets demonstrate the effectiveness of our ForeRanker, showcasing its superior performance compared to existing methods.

Alleviating LLM-based Generative Retrieval Hallucination in Alipay Search

Mar 27, 2025

Abstract:Generative retrieval (GR) has revolutionized document retrieval with the advent of large language models (LLMs), and LLM-based GR is gradually being adopted by the industry. Despite its remarkable advantages and potential, LLM-based GR suffers from hallucination and generates documents that are irrelevant to the query in some instances, severely challenging its credibility in practical applications. We thereby propose an optimized GR framework designed to alleviate retrieval hallucination, which integrates knowledge distillation reasoning in model training and incorporates a decision agent to further improve retrieval precision. Specifically, we employ LLMs to assess and reason about GR-retrieved query-document (q-d) pairs, and then distill the reasoning data as transferred knowledge to the GR model. Moreover, we utilize a decision agent as post-processing to extend the GR-retrieved documents through a retrieval model and select the most relevant ones from multiple perspectives as the final generative retrieval result. Extensive offline experiments on real-world datasets and online A/B tests on Fund Search and Insurance Search in Alipay demonstrate our framework's superiority and effectiveness in improving search quality and conversion gains.

Hierarchical Causal Transformer with Heterogeneous Information for Expandable Sequential Recommendation

Mar 04, 2025

Abstract:Sequential recommendation systems leveraging transformer architectures have demonstrated exceptional capabilities in capturing user behavior patterns. At the core of these systems lies the critical challenge of constructing effective item representations. Traditional approaches employ feature fusion through simple concatenation or basic neural architectures to create uniform representation sequences. However, these conventional methods fail to address the intrinsic diversity of item attributes, thereby constraining the transformer's capacity to discern fine-grained patterns and hindering model extensibility. Although recent research has begun incorporating user-related heterogeneous features into item sequences, the equally crucial item-side heterogeneous features continue to be neglected. To bridge this methodological gap, we present HeterRec - an innovative framework featuring two novel components: the Heterogeneous Token Flattening Layer (HTFL) and Hierarchical Causal Transformer (HCT). HTFL pioneers a sophisticated tokenization mechanism that decomposes items into multi-dimensional token sets and structures them into heterogeneous sequences, enabling scalable performance enhancement through model expansion. The HCT architecture further enhances pattern discovery through token-level and item-level attention mechanisms. Furthermore, we develop a Listwise Multi-step Prediction (LMP) objective function to optimize the learning process. Rigorous validation, including real-world industrial platforms, confirms HeterRec's state-of-the-art performance in both effectiveness and efficiency.

Test-time Computing: from System-1 Thinking to System-2 Thinking

Jan 05, 2025

Abstract:The remarkable performance of the o1 model in complex reasoning demonstrates that test-time computing scaling can further unlock the model's potential, enabling powerful System-2 thinking. However, there is still a lack of comprehensive surveys for test-time computing scaling. We trace the concept of test-time computing back to System-1 models. In System-1 models, test-time computing addresses distribution shifts and improves robustness and generalization through parameter updating, input modification, representation editing, and output calibration. In System-2 models, it enhances the model's reasoning ability to solve complex problems through repeated sampling, self-correction, and tree search. We organize this survey according to the trend of System-1 to System-2 thinking, highlighting the key role of test-time computing in the transition from System-1 models to weak System-2 models, and then to strong System-2 models. We also point out a few possible future directions.
