Guannan Zhang

SwimBird: Eliciting Switchable Reasoning Mode in Hybrid Autoregressive MLLMs

Feb 05, 2026

Abstract: Multimodal Large Language Models (MLLMs) have made remarkable progress in multimodal perception and reasoning by bridging vision and language. However, most existing MLLMs perform reasoning primarily with textual CoT, which limits their effectiveness on vision-intensive tasks. Recent approaches inject a fixed number of continuous hidden states as "visual thoughts" into the reasoning process and improve visual performance, but often at the cost of degraded text-based logical reasoning. We argue that the core limitation lies in a rigid, pre-defined reasoning pattern that cannot adaptively choose the most suitable thinking modality for different user queries. We introduce SwimBird, a reasoning-switchable MLLM that dynamically switches among three reasoning modes conditioned on the input: (1) text-only reasoning, (2) vision-only reasoning (continuous hidden states as visual thoughts), and (3) interleaved vision-text reasoning. To enable this capability, we adopt a hybrid autoregressive formulation that unifies next-token prediction for textual thoughts with next-embedding prediction for visual thoughts, and design a systematic reasoning-mode curation strategy to construct SwimBird-SFT-92K, a diverse supervised fine-tuning dataset covering all three reasoning patterns. By enabling flexible, query-adaptive mode selection, SwimBird preserves strong textual logic while substantially improving performance on vision-dense tasks. Experiments across diverse benchmarks covering textual reasoning and challenging visual understanding demonstrate that SwimBird achieves state-of-the-art results and robust gains over prior fixed-pattern multimodal reasoning methods.

A Score-based Diffusion Model Approach for Adaptive Learning of Stochastic Partial Differential Equation Solutions

Aug 09, 2025

Abstract: We propose a novel framework for adaptively learning the time-evolving solutions of stochastic partial differential equations (SPDEs) using score-based diffusion models within a recursive Bayesian inference setting. SPDEs play a central role in modeling complex physical systems under uncertainty, but their numerical solutions often suffer from model errors and reduced accuracy due to incomplete physical knowledge and environmental variability. To address these challenges, we encode the governing physics into the score function of a diffusion model using simulation data and incorporate observational information via a likelihood-based correction in a reverse-time stochastic differential equation. This enables adaptive learning through iterative refinement of the solution as new data becomes available. To improve computational efficiency in high-dimensional settings, we introduce the ensemble score filter, a training-free approximation of the score function designed for real-time inference. Numerical experiments on benchmark SPDEs demonstrate the accuracy and robustness of the proposed method under sparse and noisy observations.

Unify Graph Learning with Text: Unleashing LLM Potentials for Session Search

May 20, 2025

Abstract: Session search involves a series of interactive queries and actions to fulfill a user's complex information need. Current strategies typically prioritize sequential modeling for deep semantic understanding, overlooking the graph structure in interactions. While some approaches focus on capturing structural information, they use a generalized representation for documents, neglecting word-level semantic modeling. In this paper, we propose Symbolic Graph Ranker (SGR), which aims to take advantage of both text-based and graph-based approaches by leveraging the power of recent Large Language Models (LLMs). Concretely, we first introduce a set of symbolic grammar rules to convert the session graph into text. This allows integrating session history, interaction process, and task instruction seamlessly as inputs for the LLM. Moreover, given the natural discrepancy between LLMs pre-trained on textual corpora and the symbolic language we produce using our graph-to-text grammar, our objective is to enhance LLMs' ability to capture graph structures within a textual format. To achieve this, we introduce a set of self-supervised symbolic learning tasks, including link prediction, node content generation, and generative contrastive learning, to enable LLMs to capture topological information from coarse-grained to fine-grained. Experimental results and comprehensive analysis on two benchmark datasets, AOL and Tiangong-ST, confirm the superiority of our approach. Our paradigm also offers a novel and effective methodology that bridges the gap between traditional search strategies and modern LLMs.

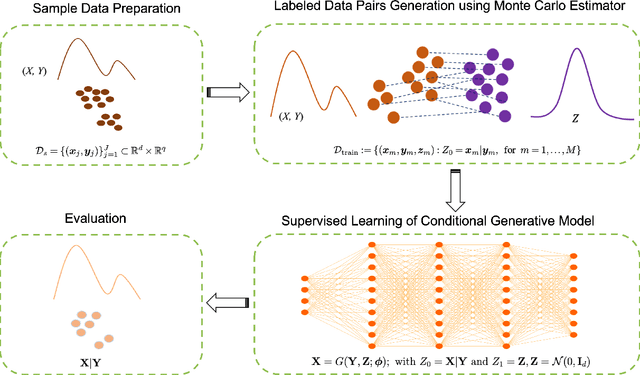

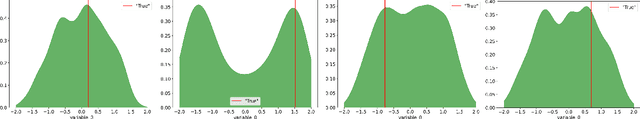

Multi-fidelity Parameter Estimation Using Conditional Diffusion Models

Apr 02, 2025

Abstract: We present a multi-fidelity method for uncertainty quantification of parameter estimates in complex systems, leveraging generative models trained to sample the target conditional distribution. In the Bayesian inference setting, traditional parameter estimation methods rely on repeated simulations of potentially expensive forward models to determine the posterior distribution of the parameter values, which may result in computationally intractable workflows. Furthermore, methods such as Markov Chain Monte Carlo (MCMC) necessitate rerunning the entire algorithm for each new data observation, further increasing the computational burden. Hence, we propose a novel method for efficiently obtaining posterior distributions of parameter estimates for high-fidelity models given data observations of interest. The method first constructs a low-fidelity, conditional generative model capable of amortized Bayesian inference and hence rapid posterior density approximation over a wide range of data observations. When higher accuracy is needed for a specific data observation, the method employs adaptive refinement of the density approximation. It uses outputs from the low-fidelity generative model to refine the parameter sampling space, ensuring efficient use of the computationally expensive high-fidelity solver. Subsequently, a high-fidelity, unconditional generative model is trained to achieve greater accuracy in the target posterior distribution. Both low- and high-fidelity generative models enable efficient sampling from the target posterior and do not require repeated simulation of the high-fidelity forward model. We demonstrate the effectiveness of the proposed method on several numerical examples, including cases with multi-modal densities, as well as an application in plasma physics for a runaway electron simulation model.

MoDULA: Mixture of Domain-Specific and Universal LoRA for Multi-Task Learning

Dec 10, 2024

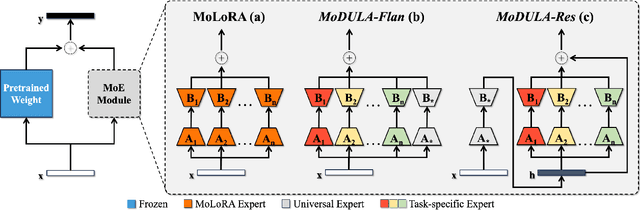

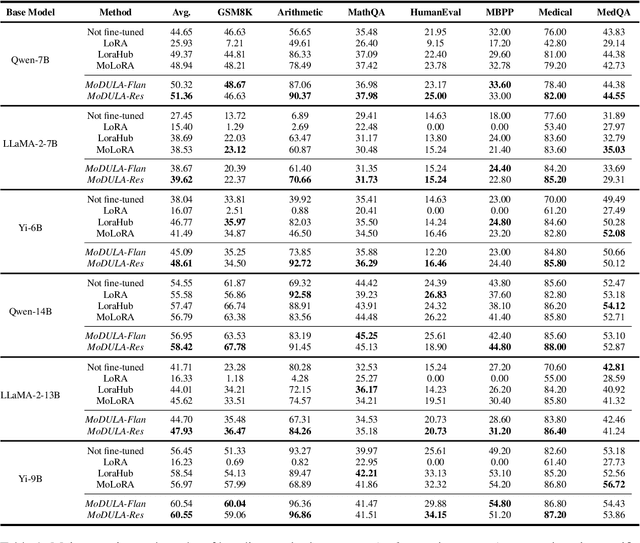

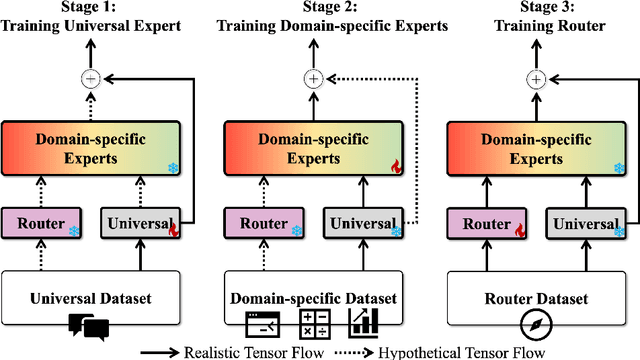

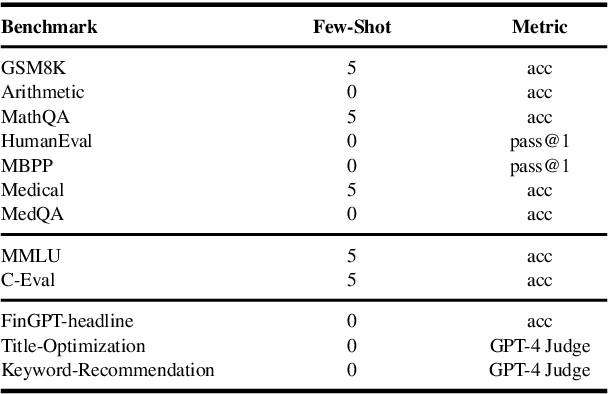

Abstract: The growing demand for larger-scale models in the development of Large Language Models (LLMs) poses challenges for efficient training within limited computational resources. Traditional fine-tuning methods often exhibit instability in multi-task learning and rely heavily on extensive training resources. Here, we propose MoDULA (Mixture of Domain-Specific and Universal LoRA), a novel Parameter Efficient Fine-Tuning (PEFT) Mixture-of-Expert (MoE) paradigm for improved fine-tuning and parameter efficiency in multi-task learning. The paradigm effectively improves the multi-task capability of the model by training universal experts, domain-specific experts, and routers separately. MoDULA-Res is a new method within the MoDULA paradigm, which maintains the model's general capability by connecting universal and task-specific experts through residual connections. The experimental results demonstrate that the overall performance of the MoDULA-Flan and MoDULA-Res methods surpasses that of existing fine-tuning methods on various LLMs. Notably, MoDULA-Res achieves more significant performance improvements in multiple tasks while reducing training costs by over 80% without losing general capability. Moreover, MoDULA displays flexible pluggability, allowing for the efficient addition of new tasks without retraining existing experts from scratch. This progressive training paradigm circumvents data balancing issues, enhancing training efficiency and model stability. Overall, MoDULA provides a scalable, cost-effective solution for fine-tuning LLMs with enhanced parameter efficiency and generalization capability.

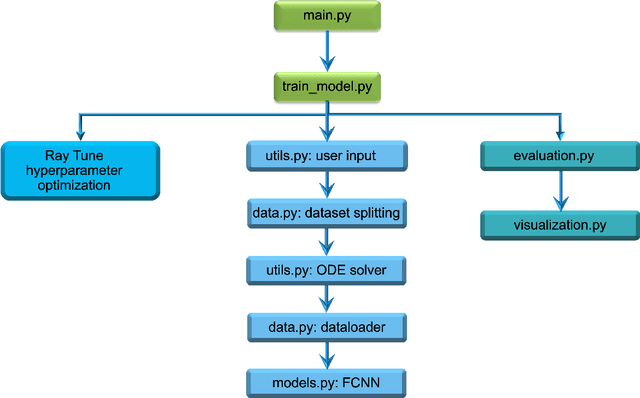

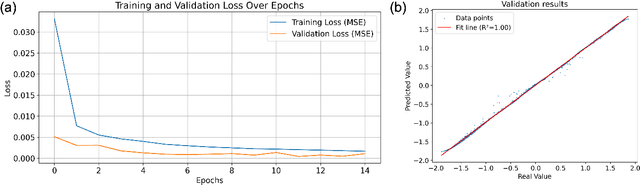

GenAI4UQ: A Software for Inverse Uncertainty Quantification Using Conditional Generative Models

Dec 09, 2024

Abstract: We introduce GenAI4UQ, a software package for inverse uncertainty quantification in model calibration, parameter estimation, and ensemble forecasting in scientific applications. GenAI4UQ leverages a generative artificial intelligence (AI) based conditional modeling framework to address the limitations of traditional inverse modeling techniques, such as Markov Chain Monte Carlo methods. By replacing computationally intensive iterative processes with a direct, learned mapping, GenAI4UQ enables efficient calibration of model input parameters and generation of output predictions directly from observations. The software's design allows for rapid ensemble forecasting with robust uncertainty quantification, while maintaining high computational and storage efficiency. GenAI4UQ simplifies the model training process through built-in auto-tuning of hyperparameters, making it accessible to users with varying levels of expertise. Its conditional generative framework ensures versatility, enabling applicability across a wide range of scientific domains. At its core, GenAI4UQ transforms the paradigm of inverse modeling by providing a fast, reliable, and user-friendly solution. It empowers researchers and practitioners to quickly estimate parameter distributions and generate model predictions for new observations, facilitating efficient decision-making and advancing the state of uncertainty quantification in computational modeling. The code and data are available at https://github.com/patrickfan/GenAI4UQ.

An End-to-End Deep Learning Method for Solving Nonlocal Allen-Cahn and Cahn-Hilliard Phase-Field Models

Oct 11, 2024

Abstract: We propose an efficient end-to-end deep learning method for solving nonlocal Allen-Cahn (AC) and Cahn-Hilliard (CH) phase-field models. One motivation for this effort emanates from the fact that discretized partial differential equation-based AC or CH phase-field models result in diffuse interfaces between phases, where the only recourse for remediation is to severely refine the spatial grids in the vicinity of the true moving sharp interface whose width is determined by a grid-independent parameter that is substantially larger than the local grid size. In this work, we introduce non-mass conserving nonlocal AC or CH phase-field models with regular, logarithmic, or obstacle double-well potentials. Because of non-locality, some of these models feature totally sharp interfaces separating phases. The discretization of such models can lead to a transition between phases whose width is only a single grid cell. Another motivation is to use deep learning approaches to ameliorate the otherwise high cost of solving discretized nonlocal phase-field models. To this end, loss functions of the customized neural networks are defined using the residual of the fully discrete approximations of the AC or CH models, which results from applying a Fourier collocation method and a temporal semi-implicit approximation. To address the long-range interactions in the models, we tailor the architecture of the neural network by incorporating a nonlocal kernel as an input channel to the neural network model. We then provide the results of extensive computational experiments to illustrate the accuracy, structure-preserving properties, predictive capabilities, and cost reductions of the proposed method.

A Training-Free Conditional Diffusion Model for Learning Stochastic Dynamical Systems

Oct 04, 2024

Abstract: This study introduces a training-free conditional diffusion model for learning unknown stochastic differential equations (SDEs) using data. The proposed approach addresses key challenges in computational efficiency and accuracy for modeling SDEs by utilizing a score-based diffusion model to approximate their stochastic flow map. Unlike existing methods, this technique is based on an analytically derived closed-form exact score function, which can be efficiently estimated by a Monte Carlo method using the trajectory data, and eliminates the need for neural network training to learn the score function. By generating labeled data through solving the corresponding reverse ordinary differential equation, the approach enables supervised learning of the flow map. Extensive numerical experiments across various SDE types, including linear, nonlinear, and multi-dimensional systems, demonstrate the versatility and effectiveness of the method. The learned models exhibit significant improvements in predicting both short-term and long-term behaviors of unknown stochastic systems, often surpassing baseline methods like GANs in estimating drift and diffusion coefficients.
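
To illustrate what a training-free, Monte Carlo score estimate can look like, here is a minimal one-dimensional sketch. It is not the paper's formula: it assumes a Gaussian perturbation of the form x_t = alpha * x_0 + beta * eps, under which the perturbed density is approximated by an equal-weight Gaussian mixture centred at the scaled data samples. The function name `mc_score` and the parameters `alpha` and `beta` are hypothetical.

```python
import math

def mc_score(x, samples, alpha, beta):
    """Monte Carlo estimate of d/dx log p_t(x) at a scalar point x.

    Assumption (not taken from the paper): x_t = alpha * x_0 + beta * eps with
    eps ~ N(0, 1), so p_t is approximated by a Gaussian mixture with one
    component per data sample, centred at alpha * sample with std beta.
    """
    # Unnormalised log-weights of each mixture component at x.
    log_w = [-((x - alpha * s) ** 2) / (2.0 * beta ** 2) for s in samples]
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(log_w)
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    # Weighted average of the per-component Gaussian scores.
    return sum((wi / total) * (-(x - alpha * s) / beta ** 2)
               for wi, s in zip(w, samples))

# With a single sample the estimate reduces to one Gaussian score.
single = mc_score(1.0, [4.0], alpha=0.5, beta=2.0)
# With samples symmetric about zero, the score at zero vanishes.
symmetric = mc_score(0.0, [-1.0, 1.0], alpha=0.5, beta=2.0)
```

No network is trained here: the estimate is computed directly from the data samples, which is the property the abstract emphasises.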

Nonuniform random feature models using derivative information

Oct 03, 2024

Abstract: We propose nonuniform data-driven parameter distributions for neural network initialization based on derivative data of the function to be approximated. These parameter distributions are developed in the context of non-parametric regression models based on shallow neural networks, and compare favorably to well-established uniform random feature models based on conventional weight initialization. We address the cases of Heaviside and ReLU activation functions, and their smooth approximations (sigmoid and softplus), and use recent results on the harmonic analysis and sparse representation of neural networks resulting from fully trained optimal networks. Extending analytic results that give exact representation, we obtain densities that concentrate in regions of the parameter space corresponding to neurons that are well suited to model the local derivatives of the unknown function. Based on these results, we suggest simplifications of these exact densities based on approximate derivative data at the input points that allow for very efficient sampling and lead to performance of random feature models close to optimal networks in several scenarios.

MLoRA: Multi-Domain Low-Rank Adaptive Network for CTR Prediction

Aug 14, 2024

Abstract: Click-through rate (CTR) prediction is one of the fundamental tasks in the industry, especially in e-commerce, social media, and streaming media. It directly impacts website revenues, user satisfaction, and user retention. However, real-world production platforms often encompass various domains to cater for diverse customer needs. Traditional CTR prediction models struggle in multi-domain recommendation scenarios, facing challenges of data sparsity and disparate data distributions across domains. Existing multi-domain recommendation approaches introduce domain-specific modules for each domain, which partially address these issues but often significantly increase model parameters and lead to insufficient training. In this paper, we propose a Multi-domain Low-Rank Adaptive network (MLoRA) for CTR prediction, where we introduce a specialized LoRA module for each domain. This approach enhances the model's performance in multi-domain CTR prediction tasks and can be applied to various deep-learning models. We evaluate the proposed method on several multi-domain datasets. Experimental results demonstrate that our MLoRA approach achieves a significant improvement compared with state-of-the-art baselines. Furthermore, we deploy it in the production environment of Alibaba.com. The online A/B testing results indicate its superiority and flexibility in real-world production environments. The code of our MLoRA is publicly available.
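
The idea of one LoRA module per domain can be sketched as a shared linear layer plus a domain-selected low-rank update, y = W x + B_d (A_d x). The following is a minimal pure-Python illustration under that assumption, not the released MLoRA code; the class name `MultiDomainLoRALinear`, its arguments, and the initialisation scales are hypothetical.

```python
import random

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

class MultiDomainLoRALinear:
    """Shared weight W plus one low-rank pair (A_d, B_d) per domain (illustrative)."""

    def __init__(self, d_in, d_out, rank, domains, seed=0):
        rng = random.Random(seed)
        # Shared backbone weight, used by every domain.
        self.W = [[rng.gauss(0, 0.1) for _ in range(d_in)] for _ in range(d_out)]
        # Common LoRA initialisation: A random, B zero, so each update starts at zero.
        self.A = {d: [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
                  for d in domains}
        self.B = {d: [[0.0] * rank for _ in range(d_out)] for d in domains}

    def forward(self, x, domain):
        base = matvec(self.W, x)                 # shared path
        low = matvec(self.A[domain], x)          # project down to rank r
        delta = matvec(self.B[domain], low)      # project back up to d_out
        return [b + d for b, d in zip(base, delta)]

layer = MultiDomainLoRALinear(d_in=3, d_out=2, rank=1, domains=["a", "b"])
x = [1.0, 2.0, 3.0]
before = layer.forward(x, "a")
# Hand-set the adapter of domain "a" only; domain "b" is left untouched.
layer.A["a"] = [[1.0, 0.0, 0.0]]
layer.B["a"] = [[1.0], [0.0]]
after_a = layer.forward(x, "a")
after_b = layer.forward(x, "b")
```

Each domain adds only rank * (d_in + d_out) parameters on top of the shared weight, which is why a per-domain adapter is cheaper than a full domain-specific module.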
