Zhibo Xiao

RecGPT Technical Report

Jul 30, 2025

Abstract: Recommender systems are among the most impactful applications of artificial intelligence, serving as critical infrastructure connecting users, merchants, and platforms. However, most current industrial systems remain heavily reliant on historical co-occurrence patterns and log-fitting objectives, i.e., optimizing for past user interactions without explicitly modeling user intent. This log-fitting approach often leads to overfitting to narrow historical preferences, failing to capture users' evolving and latent interests. As a result, it reinforces filter bubbles and long-tail phenomena, ultimately harming user experience and threatening the sustainability of the whole recommendation ecosystem. To address these challenges, we rethink the overall design paradigm of recommender systems and propose RecGPT, a next-generation framework that places user intent at the center of the recommendation pipeline. By integrating large language models (LLMs) into key stages of user interest mining, item retrieval, and explanation generation, RecGPT transforms log-fitting recommendation into an intent-centric process. To effectively align general-purpose LLMs to the above domain-specific recommendation tasks at scale, RecGPT incorporates a multi-stage training paradigm, which integrates reasoning-enhanced pre-alignment and self-training evolution, guided by a Human-LLM cooperative judge system. Currently, RecGPT has been fully deployed on the Taobao App. Online experiments demonstrate that RecGPT achieves consistent performance gains across stakeholders: users benefit from increased content diversity and satisfaction, while merchants and the platform gain greater exposure and conversions. These improvements across all stakeholders validate that LLM-driven, intent-centric design can foster a more sustainable and mutually beneficial recommendation ecosystem.

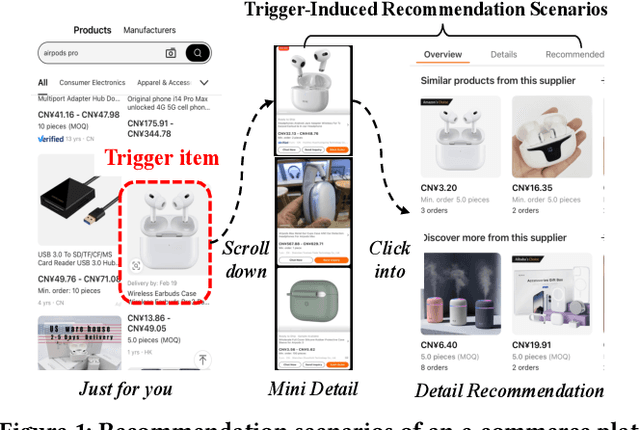

Modeling User Intent Beyond Trigger: Incorporating Uncertainty for Trigger-Induced Recommendation

Aug 07, 2024

Abstract: To cater to users' desire for an immersive browsing experience, numerous e-commerce platforms provide various recommendation scenarios, with a focus on Trigger-Induced Recommendation (TIR) tasks. However, the majority of current TIR methods heavily rely on the trigger item to understand user intent, lacking a higher-level exploration and exploitation of user intent (e.g., popular items and complementary items), which may result in an overly convergent understanding of users' short-term intent and can be detrimental to users' long-term purchasing experiences. Moreover, users' short-term intent shows uncertainty and is affected by various factors such as browsing context and historical behaviors, which poses challenges to user intent modeling. To address these challenges, we propose a novel model called Deep Uncertainty Intent Network (DUIN), comprising three essential modules: i) Explicit Intent Exploit Module extracting explicit user intent using the contrastive learning paradigm; ii) Latent Intent Explore Module exploring latent user intent by leveraging the multi-view relationships between items; iii) Intent Uncertainty Measurement Module offering a distributional estimation and capturing the uncertainty associated with user intent. Experiments on three real-world datasets demonstrate the superior performance of DUIN compared to existing baselines. Notably, DUIN has been deployed across all TIR scenarios in our e-commerce platform, with online A/B testing results conclusively validating its superiority.

On Practical Diversified Recommendation with Controllable Category Diversity Framework

Feb 06, 2024

Abstract: Recommender systems have made significant strides in various industries, primarily driven by extensive efforts to enhance recommendation accuracy. However, this pursuit of accuracy has inadvertently given rise to echo chamber/filter bubble effects. Especially in industry, these effects can impair users' experiences and prevent users from accessing a wider range of items. One solution is to take diversity into account. However, most existing works focus on users' explicit preferences, while rarely exploring users' non-interaction preferences. These neglected non-interaction preferences are especially important for broadening users' interests and alleviating echo chamber/filter bubble effects. Therefore, in this paper, we first define two distinct kinds of diversity, i.e., user-explicit diversity (U-diversity) and user-item non-interaction diversity (N-diversity), based on user historical behaviors. Then, we propose a succinct and effective method, named Controllable Category Diversity Framework (CCDF), to achieve both high U-diversity and N-diversity simultaneously. Specifically, CCDF consists of two stages, User-Category Matching and Constrained Item Matching. The User-Category Matching stage utilizes the DeepU2C model and a combined loss to capture users' preferences in categories, and then selects the top-$K$ categories with a controllable parameter $K$. These top-$K$ categories are used as trigger information in Constrained Item Matching. Offline experimental results show that our proposed DeepU2C outperforms state-of-the-art diversity-oriented methods, especially on the N-diversity task. The whole framework is validated in a real-world production environment by conducting online A/B testing.
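The two-stage flow described in this abstract (pick the top-$K$ categories, then match items only within them) can be illustrated with a minimal sketch. It assumes the category and item scores are already available as plain dictionaries; the function names, the `per_category` cap, and the tie-breaking rule are hypothetical and are not taken from the paper, and the DeepU2C model and its combined loss are not reproduced here.

```python
from collections import defaultdict


def top_k_categories(category_scores, k):
    """Stage 1 (sketch): return the k highest-scoring categories.

    Ties are broken alphabetically so the result is deterministic.
    """
    ranked = sorted(category_scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [category for category, _ in ranked[:k]]


def constrained_item_matching(item_scores, item_category, allowed, per_category):
    """Stage 2 (sketch): keep the best items from each allowed category only."""
    buckets = defaultdict(list)
    for item, score in item_scores.items():
        category = item_category[item]
        if category in allowed:
            buckets[category].append((score, item))
    result = {}
    for category in allowed:
        best = sorted(buckets[category], key=lambda si: (-si[0], si[1]))
        result[category] = [item for _, item in best[:per_category]]
    return result


def recommend(category_scores, item_scores, item_category, k, per_category=2):
    """Run both stages; k controls how many categories are exposed."""
    categories = top_k_categories(category_scores, k)
    return constrained_item_matching(
        item_scores, item_category, categories, per_category
    )


if __name__ == "__main__":
    category_scores = {"shoes": 0.9, "books": 0.5, "toys": 0.7}
    item_scores = {"sneaker": 0.8, "boot": 0.6, "sandal": 0.4,
                   "novel": 0.9, "robot": 0.3}
    item_category = {"sneaker": "shoes", "boot": "shoes", "sandal": "shoes",
                     "novel": "books", "robot": "toys"}
    print(recommend(category_scores, item_scores, item_category, k=2))
```

In this toy setup, raising `k` from 2 to 3 would admit the `books` category, which is the sense in which the number of categories acts as a diversity control.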

* A Two-stage Controllable Category Diversity Framework for Recommendation

Deep Evolutional Instant Interest Network for CTR Prediction in Trigger-Induced Recommendation

Jan 17, 2024

Abstract: Recommendation has been playing a key role in many industries, e.g., e-commerce, streaming media, and social media. Recently, a new recommendation scenario called Trigger-Induced Recommendation (TIR), where users are able to explicitly express their instant interests via trigger items, has been taking on an essential role in many e-commerce platforms, e.g., Alibaba.com and Amazon. Without explicitly modeling the user's instant interest, traditional recommendation methods usually obtain sub-optimal results in TIR. Even though a few methods consider the trigger and target items simultaneously to solve this problem, they still do not take into account the temporal information of user behaviors, the dynamic change of user instant interest as the user scrolls down, and the interactions between the trigger and target items. To tackle these problems, we propose a novel method, Deep Evolutional Instant Interest Network (DEI2N), for click-through rate prediction in TIR scenarios. Specifically, we design a User Instant Interest Modeling Layer to predict the dynamic change of the intensity of instant interest as the user scrolls down. Temporal information is utilized in user behavior modeling. Moreover, an Interaction Layer is introduced to learn better interactions between the trigger and target items. We evaluate our method on several offline and real-world industrial datasets. Experimental results show that our proposed DEI2N outperforms state-of-the-art baselines. In addition, online A/B testing demonstrates its superiority over the existing baseline in real-world production environments.

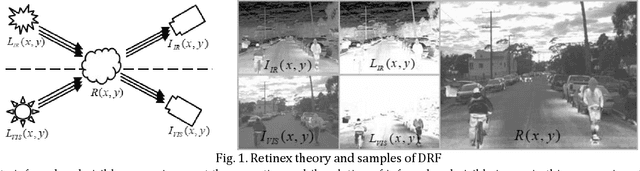

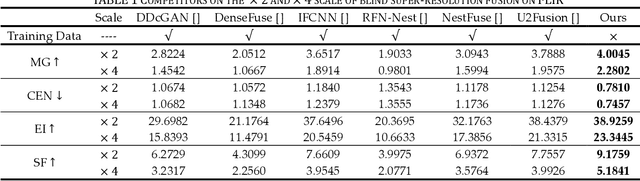

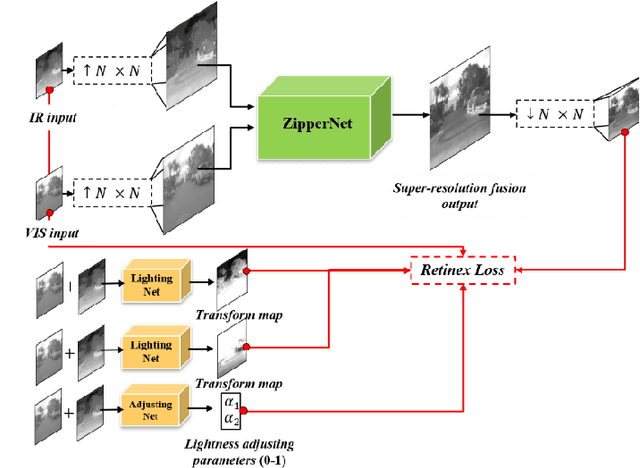

A Dataset-free Self-supervised Disentangled Learning Method for Adaptive Infrared and Visible Images Super-resolution Fusion

Dec 06, 2021

Abstract: This study proposes a novel, general, dataset-free self-supervised learning framework based on a physical model, named self-supervised disentangled learning (SDL), and a novel method named Deep Retinex fusion (DRF), which applies the SDL framework with generative networks and Retinex theory to infrared and visible image super-resolution fusion. In addition, a generative dual-path fusion network, ZipperNet, and an adaptive fusion loss function, Retinex loss, are designed for effective, high-quality fusion. The core idea of DRF (based on SDL) consists of two parts: one is generating components that are disentangled from the physical model using generative networks; the other is loss functions designed based on the physical relation, through which the generated components are combined in the training phase. Furthermore, to verify the effectiveness of our proposed DRF, qualitative and quantitative comparisons with six state-of-the-art methods are performed on three different infrared and visible datasets. Our code will be open-sourced soon at https://github.com/GuYuanjie/Deep-Retinex-fusion.
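For readers unfamiliar with the Retinex theory mentioned above, the classical model treats an image as the element-wise product of a reflectance component and an illumination component. The sketch below is only an illustration of that physical relation, assuming a grayscale image stored as a float array: it estimates illumination with a simple mean filter, which is a stand-in chosen here for brevity. It is not the DRF method, which the abstract says uses generative networks; the function names and the `radius` and `eps` parameters are hypothetical.

```python
import numpy as np


def box_blur(img, radius):
    """Mean filter with edge padding, used as a crude smooth-illumination estimate."""
    padded = np.pad(img, radius, mode="edge")
    size = 2 * radius + 1
    height, width = img.shape
    out = np.zeros((height, width), dtype=float)
    # Sum every shifted copy of the image inside the (size x size) window.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + height, dx:dx + width]
    return out / (size * size)


def retinex_decompose(img, radius=3, eps=1e-6):
    """Split img into (reflectance, illumination) so that
    reflectance * (illumination + eps) reproduces img."""
    illumination = box_blur(img, radius)
    reflectance = img / (illumination + eps)
    return reflectance, illumination


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((16, 16)) + 0.1
    reflectance, illumination = retinex_decompose(image)
    recombined = reflectance * (illumination + 1e-6)
    print(np.allclose(recombined, image))
```

The point of the decomposition is that the two components can be constrained or combined separately, which is the kind of physical relation the abstract says the loss functions are built on.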

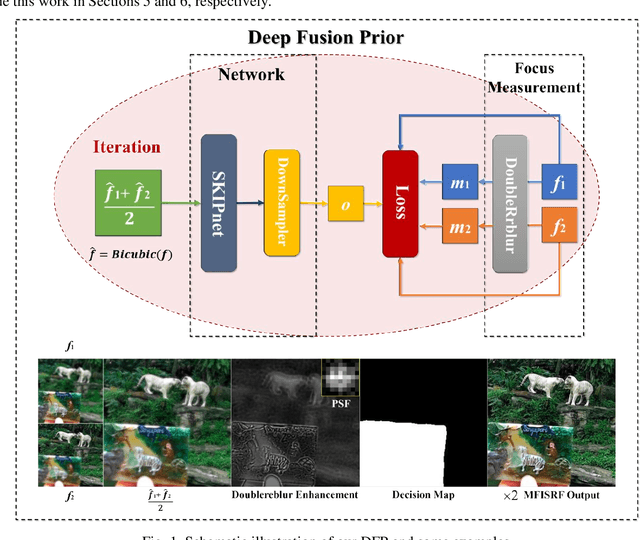

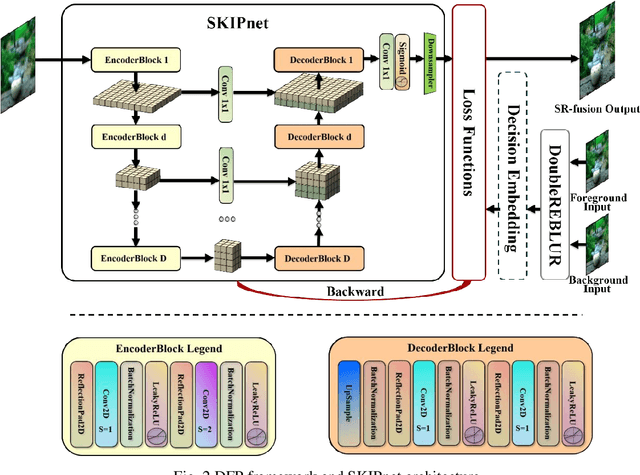

Deep Fusion Prior for Multi-Focus Image Super Resolution Fusion

Oct 12, 2021

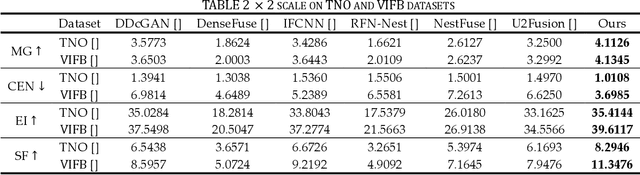

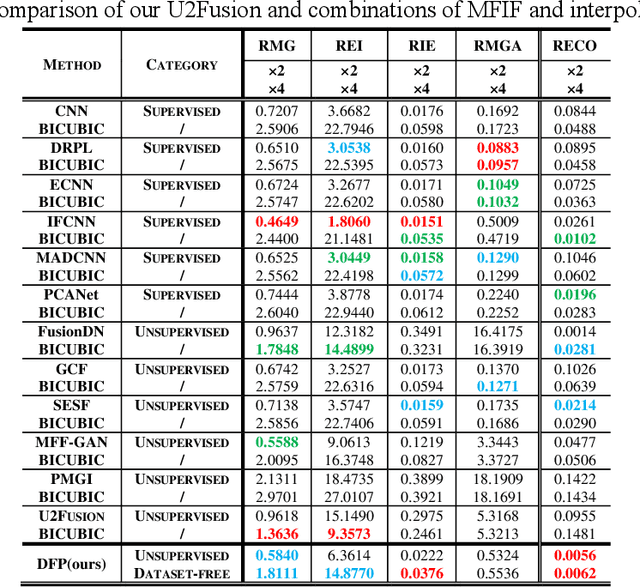

Abstract: This paper unifies the multi-focus image fusion (MFIF) and blind super resolution (SR) problems as the multi-focus image super resolution fusion (MFISRF) task, and proposes a novel unified dataset-free unsupervised framework named deep fusion prior (DFP) to address this task. DFP consists of the SKIPnet network, the DoubleReblur focus measurement tactic, a decision embedding module, and loss functions. In particular, DFP can obtain MFISRF results from only two low-resolution inputs without any external dataset; SKIPnet, which implements unsupervised learning via deep image prior, is an end-to-end generative network acting as the engine of DFP; DoubleReblur determines the primary decision map without learning, based instead on an estimated PSF and Gaussian kernel convolution; the decision embedding module optimizes the decision map via learning; and the DFP losses, composed of content loss, joint gradient loss, and gradient limit loss, yield high-quality MFISRF results robustly. Experiments show that our proposed DFP approaches, and even outperforms, combinations of state-of-the-art MFIF and SR methods. Additionally, DFP is a general framework, so its networks and focus measurement tactics can be continuously updated to further improve MFISRF performance. DFP code is open source and will be available soon at http://github.com/GuYuanjie/DeepFusionPrior.
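The idea of a learning-free, reblur-based focus measure can be sketched generically: a region that is already in focus changes a lot when blurred again, while a defocused region changes little. The example below is a simplified illustration of that general heuristic using NumPy only; it is not the paper's DoubleReblur procedure (no PSF estimation is performed), and the function names and the `sigma` and `radius` defaults are assumptions made for this sketch.

```python
import numpy as np


def gaussian_kernel(sigma, radius):
    """1-D Gaussian kernel normalized to sum to 1."""
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(img, sigma=1.5, radius=4):
    """Separable Gaussian blur with edge padding; output has the input's shape."""
    kernel = gaussian_kernel(sigma, radius)
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def reblur_focus_measure(img, sigma=1.5, radius=4):
    """Per-pixel change caused by reblurring, smoothed to reduce noise."""
    change = np.abs(img - gaussian_blur(img, sigma, radius))
    return gaussian_blur(change, sigma, radius)


def decision_map(img_a, img_b, sigma=1.5, radius=4):
    """True where img_a looks sharper than img_b under the reblur heuristic."""
    return (reblur_focus_measure(img_a, sigma, radius)
            >= reblur_focus_measure(img_b, sigma, radius))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    texture = rng.random((64, 64))
    blurred = gaussian_blur(texture, sigma=2.0, radius=6)
    left = np.arange(64)[None, :] < 32
    img_a = np.where(left, texture, blurred)   # sharp on the left half
    img_b = np.where(left, blurred, texture)   # sharp on the right half
    fused = np.where(decision_map(img_a, img_b), img_a, img_b)
    print(float(np.abs(fused - texture).mean()))
```

A map like this would only be a starting point; per the abstract, the framework refines the primary decision map with a learned decision embedding module.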
