Hao Deng

Masked Diffusion Generative Recommendation

Jan 27, 2026

Abstract:Generative recommendation (GR) typically first quantizes continuous item embeddings into multi-level semantic IDs (SIDs), and then generates the next item via autoregressive decoding. Although existing methods are already competitive in terms of recommendation performance, directly inheriting the autoregressive decoding paradigm from language models still suffers from three key limitations: (1) autoregressive decoding struggles to jointly capture global dependencies among the multi-dimensional features associated with different positions of SID; (2) using a unified, fixed decoding path for the same item implicitly assumes that all users attend to item attributes in the same order; (3) autoregressive decoding is inefficient at inference time and struggles to meet real-time requirements. To tackle these challenges, we propose MDGR, a Masked Diffusion Generative Recommendation framework that reshapes the GR pipeline from three perspectives: codebook, training, and inference. (1) We adopt a parallel codebook to provide a structural foundation for diffusion-based GR. (2) During training, we adaptively construct masking supervision signals along both the temporal and sample dimensions. (3) During inference, we develop a warm-up-based two-stage parallel decoding strategy for efficient generation of SIDs. Extensive experiments on multiple public and industrial-scale datasets show that MDGR outperforms ten state-of-the-art baselines by up to 10.78%. Furthermore, by deploying MDGR on a large-scale online advertising platform, we achieve a 1.20% increase in revenue, demonstrating its practical value. The code will be released upon acceptance.

AI-generated data contamination erodes pathological variability and diagnostic reliability

Jan 21, 2026

Abstract:Generative artificial intelligence (AI) is rapidly populating medical records with synthetic content, creating a feedback loop where future models are increasingly at risk of training on uncurated AI-generated data. However, the clinical consequences of this AI-generated data contamination remain unexplored. Here, we show that in the absence of mandatory human verification, this self-referential cycle drives a rapid erosion of pathological variability and diagnostic reliability. By analysing more than 800,000 synthetic data points across clinical text generation, vision-language reporting, and medical image synthesis, we find that models progressively converge toward generic phenotypes regardless of the model architecture. Specifically, rare but critical findings, including pneumothorax and effusions, vanish from the synthetic content generated by AI models, while demographic representations skew heavily toward middle-aged male phenotypes. Crucially, this degradation is masked by false diagnostic confidence; models continue to issue reassuring reports while failing to detect life-threatening pathology, with false reassurance rates tripling to 40%. Blinded physician evaluation confirms that this decoupling of confidence and accuracy renders AI-generated documentation clinically useless after just two generations. We systematically evaluate three mitigation strategies, finding that while synthetic volume scaling fails to prevent collapse, mixing real data with quality-aware filtering effectively preserves diversity. Ultimately, our results suggest that without policy-mandated human oversight, the deployment of generative AI threatens to degrade the very healthcare data ecosystems it relies upon.

GADPN: Graph Adaptive Denoising and Perturbation Networks via Singular Value Decomposition

Jan 13, 2026

Abstract:While Graph Neural Networks (GNNs) excel on graph-structured data, their performance is fundamentally limited by the quality of the observed graph, which often contains noise, missing links, or structural properties misaligned with GNNs' underlying assumptions. To address this, graph structure learning aims to infer a more optimal topology. Existing methods, however, often incur high computational costs due to complex generative models and iterative joint optimization, limiting their practical utility. In this paper, we propose GADPN, a simple yet effective graph structure learning framework that adaptively refines graph topology via low-rank denoising and generalized structural perturbation. Our approach makes two key contributions: (1) we introduce Bayesian optimization to adaptively determine the optimal denoising strength, tailoring the process to each graph's homophily level; and (2) we extend the structural perturbation method to arbitrary graphs via Singular Value Decomposition (SVD), overcoming its original limitation to symmetric structures. Extensive experiments on benchmark datasets demonstrate that GADPN achieves state-of-the-art performance while significantly improving efficiency. It shows particularly strong gains on challenging disassortative graphs, validating its ability to robustly learn enhanced graph structures across diverse network types.
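The low-rank denoising idea mentioned in the abstract can be illustrated with a truncated SVD of an adjacency matrix. This is only a minimal sketch of generic rank-k approximation, not GADPN's actual procedure: the function name `low_rank_denoise`, the toy matrix, and the fixed `rank` argument are assumptions made for illustration (the abstract says the denoising strength is chosen by Bayesian optimization, which is not shown here).

```python
import numpy as np


def low_rank_denoise(adj: np.ndarray, rank: int) -> np.ndarray:
    """Return the best rank-`rank` approximation of `adj` in the Frobenius norm.

    Hypothetical helper: SVD applies to any real matrix, so `adj` does not
    need to be symmetric (directed graphs are fine).
    """
    u, s, vt = np.linalg.svd(adj, full_matrices=False)
    k = max(1, min(rank, s.size))  # clamp to a valid number of components
    # Keep only the k largest singular values and their singular vectors.
    return (u[:, :k] * s[:k]) @ vt[:k, :]


# Toy asymmetric adjacency matrix (illustrative data only).
adj = np.array(
    [
        [0, 1, 1, 0],
        [0, 0, 1, 0],
        [1, 0, 0, 1],
        [0, 0, 0, 0],
    ],
    dtype=float,
)

denoised = low_rank_denoise(adj, rank=2)
```

The result is a dense real-valued matrix; turning it back into a graph (for example by thresholding or keeping the top entries per row) is a separate design choice that this sketch leaves open.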

Synergistic Integration and Discrepancy Resolution of Contextualized Knowledge for Personalized Recommendation

Oct 16, 2025

Abstract:The integration of large language models (LLMs) into recommendation systems has revealed promising potential through their capacity to extract world knowledge for enhanced reasoning capabilities. However, current methodologies that adopt static schema-based prompting mechanisms encounter significant limitations: (1) they employ universal template structures that neglect the multi-faceted nature of user preference diversity; (2) they implement superficial alignment between semantic knowledge representations and behavioral feature spaces without achieving comprehensive latent space integration. To address these challenges, we introduce CoCo, an end-to-end framework that dynamically constructs user-specific contextual knowledge embeddings through a dual-mechanism approach. Our method realizes profound integration of semantic and behavioral latent dimensions via adaptive knowledge fusion and contradiction resolution modules. Experimental evaluations across diverse benchmark datasets and an enterprise-level e-commerce platform demonstrate CoCo's superiority, achieving a maximum 8.58% improvement over seven cutting-edge methods in recommendation accuracy. The framework's deployment on a production advertising system resulted in a 1.91% sales growth, validating its practical effectiveness. With its modular design and model-agnostic architecture, CoCo provides a versatile solution for next-generation recommendation systems requiring both knowledge-enhanced reasoning and personalized adaptation.

Duplex Self-Aligning Resonant Beam Communications and Power Transfer with Coupled Spatially Distributed Laser Resonator

May 08, 2025

Abstract:Sustainable energy supply and high-speed communications are two significant needs for mobile electronic devices. This paper introduces a self-aligning resonant beam system for simultaneous light information and power transfer (SLIPT), employing a novel coupled spatially distributed resonator (CSDR). The system utilizes a resonant beam for efficient power delivery and a second-harmonic beam for concurrent data transmission, inherently minimizing echo interference and enabling bidirectional communication. Through comprehensive analyses, we investigate the CSDR's stable region, beam evolution, and power characteristics in relation to working distance and device parameters. Numerical simulations validate the CSDR-SLIPT system's feasibility by identifying a stable beam waist location for achieving accurate mode-match coupling between two spatially distributed resonant cavities and demonstrating its operational range and efficient power delivery across varying distances. The research reveals the system's benefits in terms of both safety and energy transmission efficiency. We also demonstrate the trade-off among the reflectivities of the cavity mirrors in the CSDR. These findings offer valuable design insights for resonant beam systems, advancing SLIPT with significant potential for remote device connectivity.

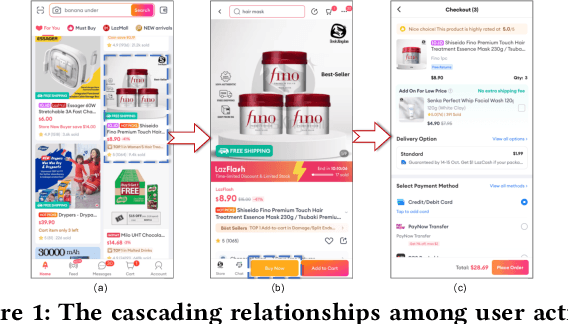

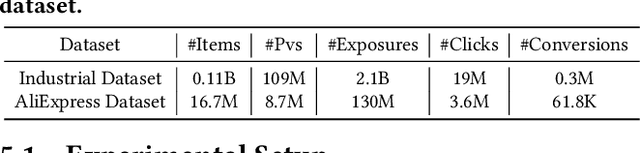

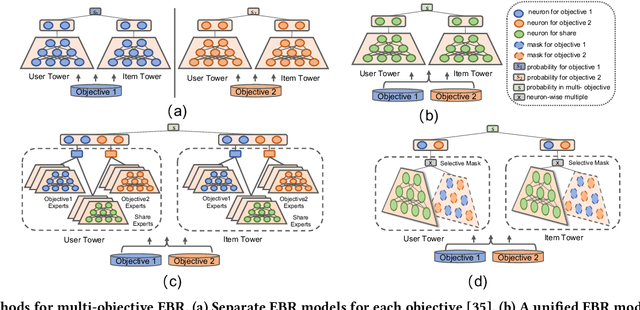

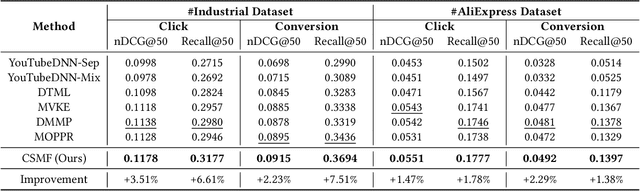

CSMF: Cascaded Selective Mask Fine-Tuning for Multi-Objective Embedding-Based Retrieval

Apr 17, 2025

Abstract:Multi-objective embedding-based retrieval (EBR) has become increasingly critical due to the growing complexity of user behaviors and commercial objectives. While traditional approaches often suffer from data sparsity and limited information sharing between objectives, recent methods utilizing a shared network alongside dedicated sub-networks for each objective partially address these limitations. However, such methods significantly increase the model parameters, leading to an increased retrieval latency and a limited ability to model causal relationships between objectives. To address these challenges, we propose the Cascaded Selective Mask Fine-Tuning (CSMF), a novel method that enhances both retrieval efficiency and serving performance for multi-objective EBR. The CSMF framework selectively masks model parameters to free up independent learning space for each objective, leveraging the cascading relationships between objectives during the sequential fine-tuning. Without increasing network parameters or online retrieval overhead, CSMF computes a linearly weighted fusion score for multiple objective probabilities while supporting flexible adjustment of each objective's weight across various recommendation scenarios. Experimental results on real-world datasets demonstrate the superior performance of CSMF, and online experiments validate its significant practical value.
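The linearly weighted fusion score described in the abstract can be sketched as a weighted sum of per-objective probabilities. This is a minimal illustration under assumptions: the function `fused_score`, the objective names `click` and `purchase`, and all numeric values are hypothetical and do not come from the paper.

```python
from typing import Mapping


def fused_score(probs: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Linearly combine per-objective probabilities using per-objective weights.

    Hypothetical helper: every objective in `probs` must have a weight.
    """
    return sum(weights[name] * p for name, p in probs.items())


# Illustrative probabilities for one candidate item (made-up values).
probs = {"click": 0.2, "purchase": 0.05}

# Weights can be changed per scenario without retraining the model.
weights = {"click": 1.0, "purchase": 4.0}

score = fused_score(probs, weights)
```

Because the weights enter only at scoring time, a different recommendation scenario can reuse the same objective probabilities with a different weight mapping.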

H2-MARL: Multi-Agent Reinforcement Learning for Pareto Optimality in Hospital Capacity Strain and Human Mobility during Epidemic

Mar 13, 2025

Abstract:The necessity of achieving an effective balance between minimizing the losses associated with restricting human mobility and ensuring hospital capacity has gained significant attention in the aftermath of COVID-19. Reinforcement learning (RL)-based strategies for human mobility management have recently advanced in addressing the dynamic evolution of cities and epidemics; however, they still face challenges in achieving coordinated control at the township level and adapting to cities of varying scales. To address the above issues, we propose a multi-agent RL approach that achieves Pareto optimality in managing hospital capacity and human mobility (H2-MARL), applicable across cities of different scales. We first develop a township-level infection model with online-updatable parameters to simulate disease transmission and construct a city-wide dynamic spatiotemporal epidemic simulator. On this basis, H2-MARL is designed to treat each division as an agent, with a trade-off dual-objective reward function formulated and an experience replay buffer enriched with expert knowledge built. To evaluate the effectiveness of the model, we construct a township-level human mobility dataset containing over one billion records from four representative cities of varying scales. Extensive experiments demonstrate that H2-MARL has the optimal dual-objective trade-off capability, which can minimize hospital capacity strain while minimizing human mobility restriction loss. Meanwhile, the applicability of the proposed model to epidemic control in cities of varying scales is verified, which showcases its feasibility and versatility in practical applications.

Hierarchical Causal Transformer with Heterogeneous Information for Expandable Sequential Recommendation

Mar 04, 2025

Abstract:Sequential recommendation systems leveraging transformer architectures have demonstrated exceptional capabilities in capturing user behavior patterns. At the core of these systems lies the critical challenge of constructing effective item representations. Traditional approaches employ feature fusion through simple concatenation or basic neural architectures to create uniform representation sequences. However, these conventional methods fail to address the intrinsic diversity of item attributes, thereby constraining the transformer's capacity to discern fine-grained patterns and hindering model extensibility. Although recent research has begun incorporating user-related heterogeneous features into item sequences, the equally crucial item-side heterogeneous features continue to be neglected. To bridge this methodological gap, we present HeterRec, an innovative framework featuring two novel components: the Heterogeneous Token Flattening Layer (HTFL) and the Hierarchical Causal Transformer (HCT). HTFL pioneers a sophisticated tokenization mechanism that decomposes items into multi-dimensional token sets and structures them into heterogeneous sequences, enabling scalable performance enhancement through model expansion. The HCT architecture further enhances pattern discovery through token-level and item-level attention mechanisms. Furthermore, we develop a Listwise Multi-step Prediction (LMP) objective function to optimize the learning process. Rigorous validation, including on real-world industrial platforms, confirms HeterRec's state-of-the-art performance in both effectiveness and efficiency.

PVBF: A Framework for Mitigating Parameter Variation Imbalance in Online Continual Learning

Feb 25, 2025

Abstract:Online continual learning (OCL), which enables AI systems to adaptively learn from non-stationary data streams, is commonly achieved using experience replay (ER)-based methods that retain knowledge by replaying stored past samples during training. However, these methods face challenges of prediction bias, stemming from deviations in parameter update directions during task transitions. This paper identifies parameter variation imbalance as a critical factor contributing to prediction bias in ER-based OCL. Specifically, using the proposed parameter variation evaluation method, we highlight two types of imbalance: correlation-induced imbalance, where certain parameters are disproportionately updated across tasks, and layer-wise imbalance, where output layer parameters update faster than those in preceding layers. To mitigate the above imbalances, we propose the Parameter Variation Balancing Framework (PVBF), which incorporates: 1) a novel method to compute parameter correlations with previous tasks based on parameter variations, 2) an encourage-and-consolidate (E&C) method utilizing parameter correlations to perform gradient adjustments across all parameters during training, 3) a dual-layer copy weights with reinit (D-CWR) strategy to slowly update output layer parameters for frequently occurring sample categories. Experiments on short and long task sequences demonstrate that PVBF significantly reduces prediction bias and improves OCL performance, achieving up to 47% higher accuracy compared to existing ER-based methods.

High-resolution Rainy Image Synthesis: Learning from Rendering

Feb 23, 2025

Abstract:Currently, there are few effective methods for synthesizing large numbers of high-resolution rainy images in complex illumination conditions. However, these methods are essential for synthesizing large-scale high-quality paired rainy-clean image datasets, which can train deep learning-based single image rain removal models capable of generalizing to various illumination conditions. Therefore, we propose a practical two-stage learning-from-rendering pipeline for high-resolution rainy image synthesis. The pipeline combines the realism of rendering-based methods with the high efficiency of learning-based methods, providing the possibility of creating large-scale high-quality paired rainy-clean image datasets. In the rendering stage, we use a rendering-based method to create a High-resolution Rainy Image (HRI) dataset, which contains realistic high-resolution paired rainy-clean images of multiple scenes and various illumination conditions. In the learning stage, to learn illumination information from background images for high-resolution rainy image generation, we propose a High-resolution Rainy Image Generation Network (HRIGNet). HRIGNet is designed to introduce a guiding diffusion model in the Latent Diffusion Model, which provides additional guidance information for high-resolution image synthesis. In our experiments, HRIGNet is able to synthesize high-resolution rainy images up to 2048x1024 resolution. Rain removal experiments on a real dataset validate that our method can help improve the robustness of deep derainers to real rainy images. To make our work reproducible, the source code and the dataset have been released at https://kb824999404.github.io/HRIG/.
