Bin He

Morphogenetic Assembly and Adaptive Control for Heterogeneous Modular Robots

Feb 11, 2026

Abstract:This paper presents a closed-loop automation framework for heterogeneous modular robots, covering the full pipeline from morphological construction to adaptive control. In this framework, a mobile manipulator handles heterogeneous functional modules including structural, joint, and wheeled modules to dynamically assemble diverse robot configurations and provide them with immediate locomotion capability. To address the state-space explosion in large-scale heterogeneous reconfiguration, we propose a hierarchical planner: the high-level planner uses a bidirectional heuristic search with type-penalty terms to generate module-handling sequences, while the low-level planner employs A* search to compute optimal execution trajectories. This design effectively decouples discrete configuration planning from continuous motion execution. For adaptive motion generation of unknown assembled configurations, we introduce a GPU-accelerated Annealing-Variance Model Predictive Path Integral (MPPI) controller. By incorporating a multi-stage variance annealing strategy to balance global exploration and local convergence, the controller enables configuration-agnostic, real-time motion control. Large-scale simulations show that the type-penalty term is critical for planning robustness in heterogeneous scenarios. Moreover, the greedy heuristic produces plans with lower physical execution costs than the Hungarian heuristic. The proposed annealing-variance MPPI significantly outperforms standard MPPI in both velocity tracking accuracy and control frequency, achieving real-time control at 50 Hz. The framework validates the full-cycle process, including module assembly, robot merging and splitting, and dynamic motion generation.
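To make the idea of variance annealing in MPPI concrete, the following is a minimal CPU-only sketch, not the paper's GPU controller. It assumes a hypothetical 1D point mass whose velocity must track a reference, and it interprets "multi-stage variance annealing" as repeating the MPPI update within each control step while shrinking the sampling standard deviation. The schedule `SIGMAS`, the temperature `LAMBDA`, and all other constants are illustrative assumptions.

```python
import math
import random

# All constants are illustrative assumptions, not values from the paper.
DT, HORIZON, SAMPLES, LAMBDA, A_MAX = 0.1, 10, 128, 0.05, 2.0
SIGMAS = (1.0, 0.3, 0.1)  # assumed annealing schedule: wide exploration first, then refinement


def clamp(a):
    """Limit acceleration to the actuator range [-A_MAX, A_MAX]."""
    return max(-A_MAX, min(A_MAX, a))


def rollout_cost(v, controls, v_ref):
    """Sum of squared velocity errors along one simulated rollout."""
    cost = 0.0
    for a in controls:
        v += clamp(a) * DT
        cost += (v - v_ref) ** 2
    return cost


def mppi_step(v, nominal, v_ref, rng):
    """One control update: an MPPI refinement per annealing stage."""
    u = list(nominal)
    for sigma in SIGMAS:
        noises = [[rng.gauss(0.0, sigma) for _ in range(HORIZON)]
                  for _ in range(SAMPLES)]
        costs = [rollout_cost(v, [a + e for a, e in zip(u, eps)], v_ref)
                 for eps in noises]
        best = min(costs)
        # Softmin weights; subtracting the best cost avoids underflow.
        weights = [math.exp(-(c - best) / LAMBDA) for c in costs]
        total = sum(weights)
        for t in range(HORIZON):
            delta = sum(w * eps[t] for w, eps in zip(weights, noises)) / total
            u[t] = clamp(u[t] + delta)
    return u


def track(v_ref=1.0, steps=60, seed=0):
    """Closed-loop velocity tracking; returns the velocity history."""
    rng = random.Random(seed)
    v, nominal, history = 0.0, [0.0] * HORIZON, []
    for _ in range(steps):
        nominal = mppi_step(v, nominal, v_ref, rng)
        v += nominal[0] * DT             # apply only the first control
        history.append(v)
        nominal = nominal[1:] + [0.0]    # shift the plan as a warm start
    return history


history = track()
```

The early, high-variance stage lets the sampler find a useful direction even from a poor warm start, while the later, low-variance stages reduce the jitter that a single wide distribution would leave in the applied control.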

Embodied Arena: A Comprehensive, Unified, and Evolving Evaluation Platform for Embodied AI

Sep 18, 2025

Abstract:Embodied AI development significantly lags behind large foundation models due to three critical challenges: (1) lack of systematic understanding of core capabilities needed for Embodied AI, making research lack clear objectives; (2) absence of unified and standardized evaluation systems, rendering cross-benchmark evaluation infeasible; and (3) underdeveloped automated and scalable acquisition methods for embodied data, creating critical bottlenecks for model scaling. To address these obstacles, we present Embodied Arena, a comprehensive, unified, and evolving evaluation platform for Embodied AI. Our platform establishes a systematic embodied capability taxonomy spanning three levels (perception, reasoning, task execution), seven core capabilities, and 25 fine-grained dimensions, enabling unified evaluation with systematic research objectives. We introduce a standardized evaluation system built upon unified infrastructure supporting flexible integration of 22 diverse benchmarks across three domains (2D/3D Embodied Q&A, Navigation, Task Planning) and 30+ advanced models from 20+ worldwide institutes. Additionally, we develop a novel LLM-driven automated generation pipeline ensuring scalable embodied evaluation data with continuous evolution for diversity and comprehensiveness. Embodied Arena publishes three real-time leaderboards (Embodied Q&A, Navigation, Task Planning) with dual perspectives (benchmark view and capability view), providing comprehensive overviews of advanced model capabilities. In particular, we present nine findings summarized from the evaluation results on the leaderboards of Embodied Arena. These findings help to establish clear research directions and pinpoint critical research problems, thereby driving forward progress in the field of Embodied AI.

Data-driven solar forecasting enables near-optimal economic decisions

Sep 08, 2025

Abstract:Solar energy adoption is critical to achieving net-zero emissions. However, it remains difficult for many industrial and commercial actors to decide on whether they should adopt distributed solar-battery systems, which is largely due to the unavailability of fast, low-cost, and high-resolution irradiance forecasts. Here, we present SunCastNet, a lightweight data-driven forecasting system that provides 0.05°, 10-minute resolution predictions of surface solar radiation downwards (SSRD) up to 7 days ahead. SunCastNet, coupled with reinforcement learning (RL) for battery scheduling, reduces operational regret by 76–93% compared to robust decision making (RDM). In 25-year investment backtests, it enables up to five of ten high-emitting industrial sectors per region to cross the commercial viability threshold of 12% Internal Rate of Return (IRR). These results show that high-resolution, long-horizon solar forecasts can directly translate into measurable economic gains, supporting near-optimal energy operations and accelerating renewable deployment.
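The 12% IRR viability threshold can be illustrated with a short calculation. The sketch below is a generic bisection solver for the internal rate of return, not the backtest used in the paper; the cash flows (1000 invested up front, 130 of net savings per year for 25 years) are hypothetical numbers chosen only to show the check against the threshold.

```python
def npv(rate, cash_flows):
    """Net present value of yearly cash flows; cash_flows[0] occurs at year 0."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, lo=-0.99, hi=1.0, tol=1e-9):
    """Internal rate of return by bisection.

    Assumes the NPV changes sign exactly once on [lo, hi], which holds for
    an initial outlay followed by positive yearly returns.
    """
    f_lo, f_hi = npv(lo, cash_flows), npv(hi, cash_flows)
    if f_lo * f_hi > 0:
        raise ValueError("NPV does not change sign on the bracket")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = npv(mid, cash_flows)
        if f_lo * f_mid <= 0:
            hi = mid                 # root lies in the lower half
        else:
            lo, f_lo = mid, f_mid    # root lies in the upper half
    return 0.5 * (lo + hi)


# Hypothetical 25-year project: 1000 up front, 130 net savings per year.
flows = [-1000.0] + [130.0] * 25
rate = irr(flows)
viable = rate >= 0.12  # commercial viability threshold quoted in the abstract
```

Better forecasts enter this calculation through the yearly savings: lower operational regret raises each positive cash flow, which in turn raises the IRR.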

LLMs-guided adaptive compensator: Bringing Adaptivity to Automatic Control Systems with Large Language Models

Jul 28, 2025

Abstract:With rapid advances in code generation, reasoning, and problem-solving, Large Language Models (LLMs) are increasingly applied in robotics. Most existing work focuses on high-level tasks such as task decomposition. A few studies have explored the use of LLMs in feedback controller design; however, these efforts are restricted to overly simplified systems and fixed-structure gain tuning, and they lack real-world validation. To further investigate LLMs in automatic control, this work targets a key subfield: adaptive control. Inspired by the framework of model reference adaptive control (MRAC), we propose an LLM-guided adaptive compensator framework that avoids designing controllers from scratch. Instead, the LLMs are prompted using the discrepancies between an unknown system and a reference system to design a compensator that aligns the response of the unknown system with that of the reference, thereby achieving adaptivity. Experiments evaluate five methods: LLM-guided adaptive compensator, LLM-guided adaptive controller, indirect adaptive control, learning-based adaptive control, and MRAC, on soft and humanoid robots in both simulated and real-world environments. Results show that the LLM-guided adaptive compensator outperforms traditional adaptive controllers and significantly reduces reasoning complexity compared to the LLM-guided adaptive controller. The Lyapunov-based analysis and reasoning-path inspection demonstrate that the LLM-guided adaptive compensator enables a more structured design process by transforming mathematical derivation into a reasoning task, while exhibiting strong generalizability, adaptability, and robustness. This study opens a new direction for applying LLMs in the field of automatic control, offering greater deployability and practicality compared to vision-language models.

Duplex Self-Aligning Resonant Beam Communications and Power Transfer with Coupled Spatially Distributed Laser Resonator

May 08, 2025

Abstract:Sustainable energy supply and high-speed communications are two significant needs for mobile electronic devices. This paper introduces a self-aligning resonant beam system for simultaneous light information and power transfer (SLIPT), employing a novel coupled spatially distributed resonator (CSDR). The system utilizes a resonant beam for efficient power delivery and a second-harmonic beam for concurrent data transmission, inherently minimizing echo interference and enabling bidirectional communication. Through comprehensive analyses, we investigate the CSDR's stable region, beam evolution, and power characteristics in relation to working distance and device parameters. Numerical simulations validate the CSDR-SLIPT system's feasibility by identifying a stable beam waist location for achieving accurate mode-match coupling between two spatially distributed resonant cavities and demonstrating its operational range and efficient power delivery across varying distances. The research reveals the system's benefits in terms of both safety and energy transmission efficiency. We also demonstrate the trade-off among the reflectivities of the cavity mirrors in the CSDR. These findings offer valuable design insights for resonant beam systems, advancing SLIPT with significant potential for remote device connectivity.

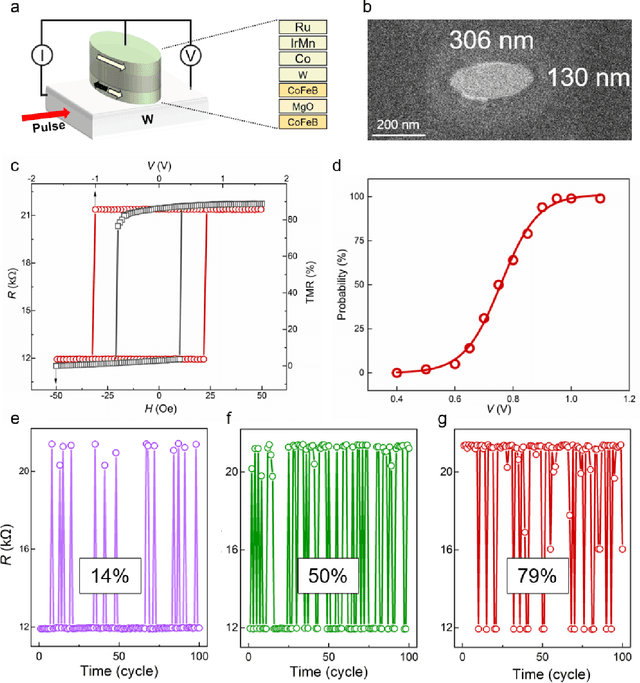

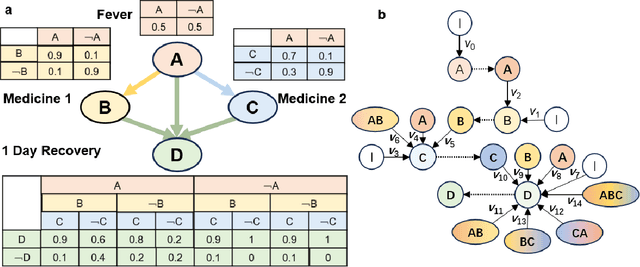

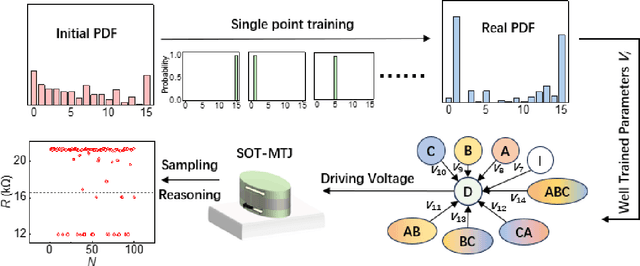

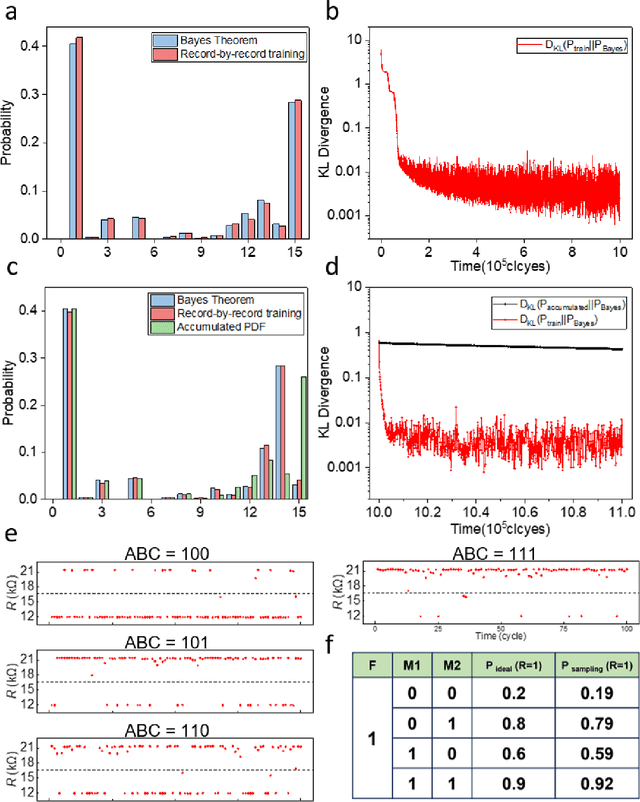

Bayesian Reasoning Enabled by Spin-Orbit Torque Magnetic Tunnel Junctions

Apr 11, 2025

Abstract:Bayesian networks play an increasingly important role in data mining, inference, and reasoning with the rapid development of artificial intelligence. In this paper, we present proof-of-concept experiments demonstrating the use of spin-orbit torque magnetic tunnel junctions (SOT-MTJs) in Bayesian network reasoning. The target probability distribution function (PDF) of a Bayesian network can not only be precisely formulated by a conditional probability table as usual but also be quantitatively parameterized by a probabilistic forward propagating neuron network. Moreover, the parameters of the network can approach the optimum through a simple point-by-point training algorithm, so that we need neither memorize all historical data nor statistically summarize the conditional probabilities behind them, significantly improving storage efficiency and economizing data pretreatment. Furthermore, we developed a simple medical diagnostic system using the SOT-MTJ as a random number generator and sampler, showcasing the application of SOT-MTJ-based Bayesian reasoning. This SOT-MTJ-based Bayesian reasoning shows great promise in the field of artificial probabilistic neural networks, broadening the scope of spintronic device applications and providing an efficient and low-storage solution for complex reasoning tasks.
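Sampling-based Bayesian reasoning with a random bit source can be sketched in a few lines. The example below is an illustration under stated assumptions, not the paper's system: a software pseudo-random generator stands in for the SOT-MTJ device, the two-node network (disease, then test result) and its probabilities are hypothetical, and the posterior is estimated by plain rejection sampling from a conditional probability table.

```python
import random


def bernoulli(rng, p):
    """One stochastic bit that is 1 with probability p.

    Software stand-in for a hardware random source such as a tunable device.
    """
    return 1 if rng.random() < p else 0


def posterior_disease_given_positive(n_samples, seed=0):
    """Estimate P(disease = 1 | test = 1) by rejection sampling."""
    rng = random.Random(seed)
    p_disease = 0.10                    # hypothetical prior
    p_pos_given = {1: 0.90, 0: 0.20}    # hypothetical P(test = 1 | disease)
    accepted = diseased = 0
    for _ in range(n_samples):
        d = bernoulli(rng, p_disease)        # sample the parent node
        t = bernoulli(rng, p_pos_given[d])   # sample the child given the parent
        if t == 1:                           # keep samples matching the evidence
            accepted += 1
            diseased += d
    return diseased / accepted


estimate = posterior_disease_given_positive(200_000)
# Closed-form value from Bayes' rule, for comparison with the estimate.
exact = (0.10 * 0.90) / (0.10 * 0.90 + 0.90 * 0.20)
```

Each network node needs only a biased coin flip, which is why a device that produces tunable random bits can serve as both the random number generator and the sampler.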

Kaiwu: A Multimodal Manipulation Dataset and Framework for Robot Learning and Human-Robot Interaction

Mar 07, 2025

Abstract:Cutting-edge robot learning techniques, including foundation models and imitation learning from humans, all place heavy demands on large-scale, high-quality datasets, which constitute one of the bottlenecks in the field of general intelligent robots. This paper presents the Kaiwu multimodal dataset to address the lack of real-world synchronized multimodal data in sophisticated assembly scenarios, especially data with dynamics information and fine-grained labelling. The dataset first provides an integrated human, environment, and robot data collection framework with 20 subjects and 30 interaction objects, resulting in a total of 11,664 instances of integrated actions. For each demonstration, hand motions, operation pressures, sounds of the assembling process, multi-view videos, high-precision motion capture information, eye gaze with first-person videos, and electromyography signals are all recorded. Fine-grained multi-level annotation based on absolute timestamps and semantic segmentation labelling are performed. The Kaiwu dataset aims to facilitate research on robot learning, dexterous manipulation, human intention investigation, and human-robot collaboration.

Can video generation replace cinematographers? Research on the cinematic language of generated video

Dec 16, 2024

Abstract:Recent advancements in text-to-video (T2V) generation have leveraged diffusion models to enhance the visual coherence of videos generated from textual descriptions. However, most research has primarily focused on object motion, with limited attention given to cinematic language in videos, which is crucial for cinematographers to convey emotion and narrative pacing. To address this limitation, we propose a threefold approach to enhance the ability of T2V models to generate controllable cinematic language. Specifically, we introduce a cinematic language dataset that encompasses shot framing, angle, and camera movement, enabling models to learn diverse cinematic styles. Building on this, to facilitate robust cinematic alignment evaluation, we present CameraCLIP, a model fine-tuned on the proposed dataset that excels in understanding complex cinematic language in generated videos and can further provide valuable guidance in the multi-shot composition process. Finally, we propose CLIPLoRA, a cost-guided dynamic LoRA composition method that facilitates smooth transitions and realistic blending of cinematic language by dynamically fusing multiple pre-trained cinematic LoRAs within a single video. Our experiments demonstrate that CameraCLIP outperforms existing models in assessing the alignment between cinematic language and video, achieving an R@1 score of 0.81. Additionally, CLIPLoRA improves the ability for multi-shot composition, potentially bridging the gap between automatically generated videos and those shot by professional cinematographers.
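For readers unfamiliar with the R@1 metric, the sketch below shows the common retrieval definition of Recall@k; whether CameraCLIP is scored exactly this way is an assumption, since the abstract does not spell out the protocol. The similarity matrix is made-up toy data in which candidate `i` is taken to be the correct match for query `i`.

```python
def recall_at_k(similarity, k=1):
    """Fraction of queries whose correct candidate ranks in the top k.

    similarity[i][j] is the score between query i and candidate j;
    candidate i is assumed to be the ground-truth match for query i.
    """
    hits = 0
    for i, row in enumerate(similarity):
        # Candidate indices sorted from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)


# Toy scores for three queries (e.g. cinematic-language descriptions)
# against three candidates (e.g. video clips).
scores = [
    [0.9, 0.1, 0.2],  # query 0: correct candidate ranked first
    [0.3, 0.5, 0.8],  # query 1: correct candidate ranked second
    [0.1, 0.4, 0.7],  # query 2: correct candidate ranked first
]
r_at_1 = recall_at_k(scores, k=1)
r_at_2 = recall_at_k(scores, k=2)
```

Under this definition, an R@1 of 0.81 would mean the correct item is ranked first for 81% of the queries.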

Eco-Friendly 0G Networks: Unlocking the Power of Backscatter Communications for a Greener Future

Nov 20, 2024

Abstract:Backscatter Communication (BackCom) technology has emerged as a promising paradigm for the Green Internet of Things (IoT) ecosystem, offering advantages such as low power consumption, cost-effectiveness, and ease of deployment. While traditional BackCom systems, such as RFID technology, have found widespread applications, the advent of ambient backscatter presents new opportunities for expanding applications and enhancing capabilities. Moreover, ongoing standardization efforts are actively focusing on BackCom technologies, positioning them as a potential solution to meet the near-zero power consumption and massive connectivity requirements of next-generation wireless systems. 0G networks have the potential to provide advanced solutions by leveraging BackCom technology to deliver ultra-low-power, ubiquitous connectivity for the expanding IoT ecosystem, supporting billions of devices with minimal energy consumption. This paper investigates the integration of BackCom and 0G networks to enhance the capabilities of traditional BackCom systems and enable Green IoT. We conduct an in-depth analysis of BackCom-enabled 0G networks, exploring their architecture and operational objectives, and also explore the Waste Factor (WF) metric for evaluating energy efficiency and minimizing energy waste within integrated systems. By examining both structural and operational aspects, we demonstrate how this synergy enhances the performance, scalability, and sustainability of next-generation wireless networks. Moreover, we highlight possible applications, open challenges, and future directions, offering valuable insights for guiding future research and practical implementations aimed at achieving large-scale, sustainable IoT deployments.

SSFold: Learning to Fold Arbitrary Crumpled Cloth Using Graph Dynamics from Human Demonstration

Oct 24, 2024

Abstract:Robotic cloth manipulation faces challenges due to the fabric's complex dynamics and the high dimensionality of configuration spaces. Previous methods have largely focused on isolated smoothing or folding tasks and have been overly reliant on simulations, often failing to bridge the significant sim-to-real gap in deformable object manipulation. To overcome these challenges, we propose a two-stream architecture with sequential and spatial pathways, unifying smoothing and folding tasks into a single adaptable policy model that accommodates various cloth types and states. The sequential stream determines the pick and place positions for the cloth, while the spatial stream, using a connectivity dynamics model, constructs a visibility graph from partial point cloud data of the self-occluded cloth, allowing the robot to infer the cloth's full configuration from incomplete observations. To bridge the sim-to-real gap, we utilize a hand tracking detection algorithm to gather and integrate human demonstration data into our novel end-to-end neural network, improving real-world adaptability. Our method, validated on a UR5 robot across four distinct cloth folding tasks with different goal shapes, consistently achieves folded states from arbitrary crumpled initial configurations, with success rates of 99%, 99%, 83%, and 67%. It outperforms existing state-of-the-art cloth manipulation techniques and demonstrates strong generalization to unseen cloth with diverse colors, shapes, and stiffness in real-world experiments. Videos and source code are available at: https://zcswdt.github.io/SSFold/