Chengyang Li

HPTune: Hierarchical Proactive Tuning for Collision-Free Model Predictive Control

Jan 29, 2026

Abstract: Parameter tuning is a powerful approach to enhance adaptability in model predictive control (MPC) motion planners. However, existing methods typically operate in a myopic fashion that only evaluates executed actions, leading to inefficient parameter updates due to the sparsity of failure events (e.g., obstacle nearness or collision). To cope with this issue, we propose to extend evaluation from executed to non-executed actions, yielding a hierarchical proactive tuning (HPTune) framework that combines fast-level and slow-level tuning. The fast level adopts risk indicators of predictive closing speed and predictive proximity distance, and the slow level leverages an extended evaluation loss for closed-loop backpropagation. Additionally, we integrate HPTune with Doppler LiDAR, which provides obstacle velocities in addition to position measurements for enhanced motion prediction, thus facilitating the implementation of HPTune. Extensive experiments on a high-fidelity simulator demonstrate that HPTune achieves efficient MPC tuning and outperforms various baseline schemes in complex environments. It is found that HPTune enables situation-tailored motion planning by formulating a safe, agile collision avoidance strategy.
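The abstract names two fast-level risk indicators but does not define them. As a rough illustration only, the sketch below computes one plausible reading of each over a planned horizon: the minimum predicted ego-obstacle distance and the maximum predicted closing speed. The function name `risk_indicators`, its inputs, and the constant-velocity obstacle model are all assumptions for this example, not the paper's formulation.

```python
import math


def risk_indicators(ego_traj, obs_pos, obs_vel, dt):
    """Return (min predicted distance, max predicted closing speed).

    Hypothetical helper, not taken from the paper.
    ego_traj: list of (x, y) planned ego positions at steps 0..N.
    obs_pos, obs_vel: obstacle position and velocity at step 0; the
    obstacle is assumed to move at constant velocity.
    dt: time step between consecutive trajectory points, in seconds.
    """
    min_dist = math.inf
    max_closing = -math.inf
    for k in range(len(ego_traj) - 1):
        ex, ey = ego_traj[k]
        # Ego velocity from a forward finite difference of the plan.
        evx = (ego_traj[k + 1][0] - ex) / dt
        evy = (ego_traj[k + 1][1] - ey) / dt
        # Constant-velocity obstacle prediction at step k.
        ox = obs_pos[0] + obs_vel[0] * k * dt
        oy = obs_pos[1] + obs_vel[1] * k * dt
        rx, ry = ox - ex, oy - ey
        dist = math.hypot(rx, ry)
        min_dist = min(min_dist, dist)
        if dist > 1e-9:
            # Closing speed is the negative rate of change of distance:
            # -(r . v_rel) / |r|, with v_rel = v_obstacle - v_ego.
            rel_vx = obs_vel[0] - evx
            rel_vy = obs_vel[1] - evy
            closing = -(rx * rel_vx + ry * rel_vy) / dist
            max_closing = max(max_closing, closing)
    return min_dist, max_closing


# Head-on example: ego drives +x at 1 m/s, obstacle drives -x at 1 m/s.
plan = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
d_min, v_close = risk_indicators(plan, (10.0, 0.0), (-1.0, 0.0), dt=1.0)
```

A positive closing speed means the gap is shrinking. How such indicators would map to MPC parameter updates is not stated in the abstract and is left out here.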

GenDet: Painting Colored Bounding Boxes on Images via Diffusion Model for Object Detection

Jan 12, 2026

Abstract: This paper presents GenDet, a novel framework that redefines object detection as an image generation task. In contrast to traditional approaches, GenDet leverages generative modeling: it conditions on the input image and directly generates bounding boxes with semantic annotations in the original image space. GenDet establishes a conditional generation architecture built upon the large-scale pre-trained Stable Diffusion model, formulating the detection task as semantic constraints within the latent space. It enables precise control over bounding box positions and category attributes, while preserving the flexibility of the generative model. This methodology bridges the gap between generative models and discriminative tasks, providing a fresh perspective for constructing unified visual understanding systems. Systematic experiments demonstrate that GenDet achieves competitive accuracy compared to discriminative detectors, while retaining the flexibility characteristic of generative methods.

FaceRefiner: High-Fidelity Facial Texture Refinement with Differentiable Rendering-based Style Transfer

Jan 08, 2026

Abstract: Recent facial texture generation methods prefer to use deep networks to synthesize image content and then fill in the UV map, thus generating a compelling full texture from a single image. Nevertheless, the synthesized texture UV map usually comes from a space constructed by the training data or the 2D face generator, which limits the methods' generalization ability for in-the-wild input images. Consequently, their facial details, structures and identity may not be consistent with the input. In this paper, we address this issue by proposing a style transfer-based facial texture refinement method named FaceRefiner. FaceRefiner treats the 3D sampled texture as style and the output of a texture generation method as content. The photo-realistic style is then expected to be transferred from the style image to the content image. Unlike current style transfer methods that only transfer high- and middle-level information to the result, our style transfer method integrates differentiable rendering to also transfer low-level (or pixel-level) information in the visible face regions. The main benefit of such multi-level information transfer is that the details, structures and semantics in the input can be well preserved. Extensive experiments on the Multi-PIE, CelebA and FFHQ datasets demonstrate that our refinement method improves texture quality and face identity preservation compared with state-of-the-art methods.

C-DGPA: Class-Centric Dual-Alignment Generative Prompt Adaptation

Dec 18, 2025

Abstract: Unsupervised Domain Adaptation transfers knowledge from a labeled source domain to an unlabeled target domain. Directly deploying Vision-Language Models (VLMs) with prompt tuning in downstream UDA tasks faces the significant challenge of mitigating domain discrepancies. Existing prompt-tuning strategies primarily align marginal distribution, but neglect conditional distribution discrepancies, leading to critical issues such as class prototype misalignment and degraded semantic discriminability. To address these limitations, the work proposes C-DGPA: Class-Centric Dual-Alignment Generative Prompt Adaptation. C-DGPA synergistically optimizes marginal distribution alignment and conditional distribution alignment through a novel dual-branch architecture. The marginal distribution alignment branch employs a dynamic adversarial training framework to bridge marginal distribution discrepancies. Simultaneously, the conditional distribution alignment branch introduces a Class Mapping Mechanism (CMM) to align conditional distribution discrepancies by standardizing semantic prompt understanding and preventing source domain over-reliance. This dual alignment strategy effectively integrates domain knowledge into prompt learning via synergistic optimization, ensuring domain-invariant and semantically discriminative representations. Extensive experiments on OfficeHome, Office31, and VisDA-2017 validate the superiority of C-DGPA. It achieves new state-of-the-art results on all benchmarks.

Myocardial Region-guided Feature Aggregation Net for Automatic Coronary artery Segmentation and Stenosis Assessment using Coronary Computed Tomography Angiography

Apr 27, 2025

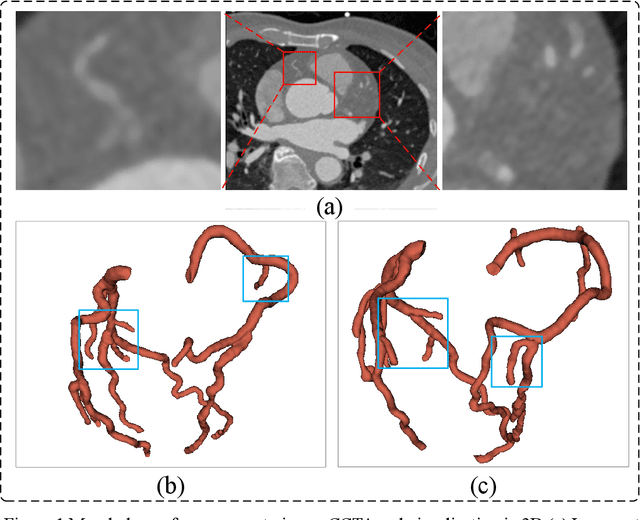

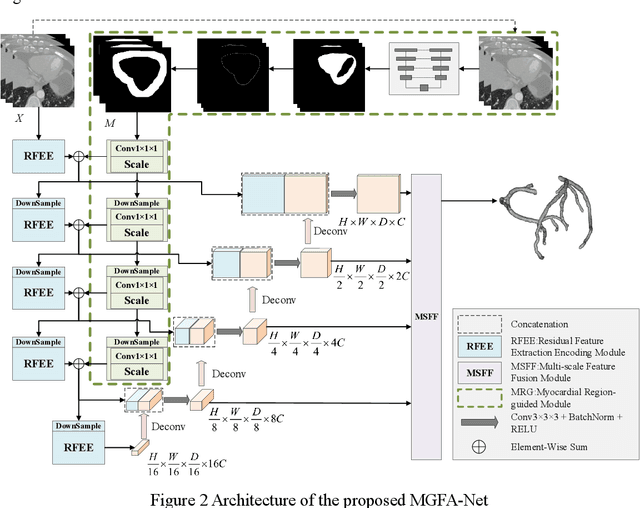

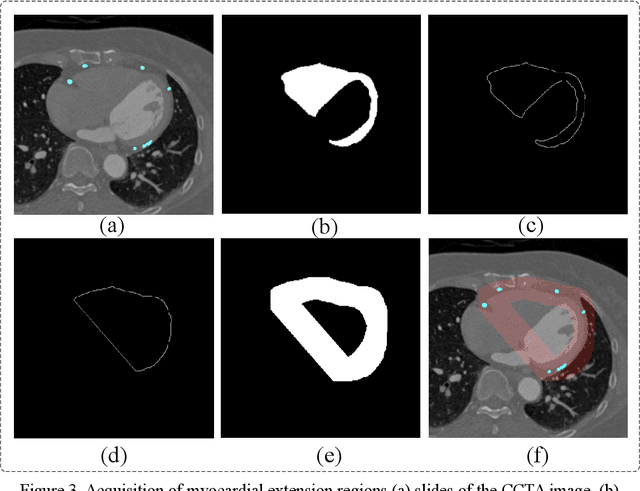

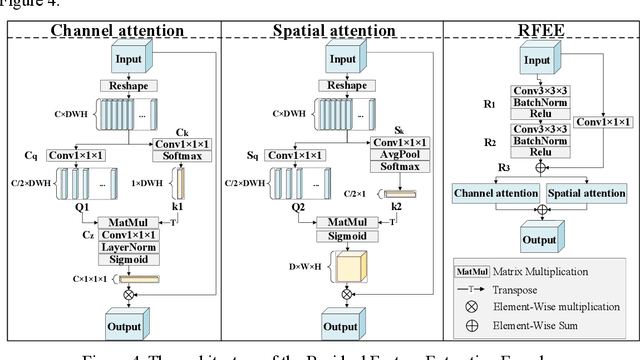

Abstract: Coronary artery disease (CAD) remains a leading cause of mortality worldwide, requiring accurate segmentation and stenosis detection using Coronary Computed Tomography Angiography (CCTA). Existing methods struggle with challenges such as low contrast, morphological variability and small vessel segmentation. To address these limitations, we propose the Myocardial Region-guided Feature Aggregation Net (MGFA-Net), a novel U-shaped dual-encoder architecture that integrates anatomical prior knowledge to enhance robustness in coronary artery segmentation. Our framework incorporates three key innovations: (1) a Myocardial Region-guided Module that directs attention to coronary regions via myocardial contour expansion and multi-scale feature fusion, (2) a Residual Feature Extraction Encoding Module that combines parallel spatial channel attention with residual blocks to enhance local-global feature discrimination, and (3) a Multi-scale Feature Fusion Module for adaptive aggregation of hierarchical vascular features. Additionally, Monte Carlo dropout quantifies prediction uncertainty, supporting clinical interpretability. For stenosis detection, a morphology-based centerline extraction algorithm separates the vascular tree into anatomical branches, enabling cross-sectional area quantification and stenosis grading. The superiority of MGFA-Net was demonstrated by achieving a Dice score of 85.04%, an accuracy of 84.24%, an HD95 of 6.1294 mm, and an improvement of 5.46% in true positive rate for stenosis detection compared to 3D U-Net. The integrated segmentation-to-stenosis pipeline provides automated, clinically interpretable CAD assessment, bridging deep learning with anatomical prior knowledge for precision medicine. Our code is publicly available at http://github.com/chenzhao2023/MGFA_CCTA
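For readers unfamiliar with the Dice score reported above, the standard definition is 2|P ∩ T| / (|P| + |T|) for a predicted mask P and a ground-truth mask T. The sketch below is a minimal pure-Python version over flattened binary masks. The function name `dice_score` and the convention of returning 1.0 when both masks are empty are assumptions of this example, not details from the paper or its repository.

```python
def dice_score(pred, target):
    """Dice coefficient for two flat binary masks of equal length.

    Illustrative helper, not the paper's evaluation code.
    Returns 1.0 when both masks are empty (an assumed convention).
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labeled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# One overlapping voxel, two foreground voxels in each mask.
score = dice_score([1, 1, 0, 0], [1, 0, 1, 0])
```

In this toy case the masks share one of their two foreground voxels each, so the score is 2·1 / (2 + 2) = 0.5.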

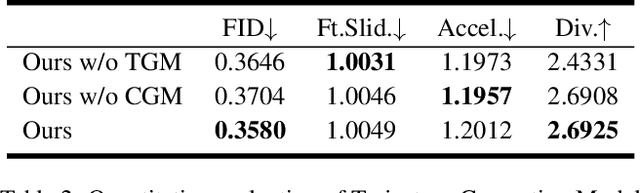

SMGDiff: Soccer Motion Generation using diffusion probabilistic models

Nov 25, 2024

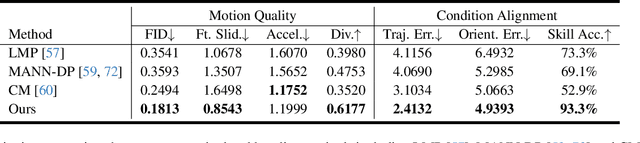

Abstract: Soccer is a globally renowned sport with significant applications in video games and VR/AR. However, generating realistic soccer motions remains challenging due to the intricate interactions between the human player and the ball. In this paper, we introduce SMGDiff, a novel two-stage framework for generating real-time and user-controllable soccer motions. Our key idea is to integrate real-time character control with a powerful diffusion-based generative model, ensuring high-quality and diverse output motion. In the first stage, we instantly transform coarse user controls into diverse global trajectories of the character. In the second stage, we employ a transformer-based autoregressive diffusion model to generate soccer motions based on trajectory conditioning. We further incorporate a contact guidance module during inference to optimize the contact details for realistic ball-foot interactions. Moreover, we contribute a large-scale soccer motion dataset consisting of over 1.08 million frames of diverse soccer motions. Extensive experiments demonstrate that our SMGDiff significantly outperforms existing methods in terms of motion quality and condition alignment.

Multi-scale Frequency Enhancement Network for Blind Image Deblurring

Nov 11, 2024

Abstract: Image deblurring is an essential image preprocessing technique, aiming to recover clear and detailed images from blurry ones. However, existing algorithms often fail to effectively integrate multi-scale feature extraction with frequency enhancement, limiting their ability to reconstruct fine textures. Additionally, non-uniform blur in images also restricts the effectiveness of image restoration. To address these issues, we propose a multi-scale frequency enhancement network (MFENet) for blind image deblurring. To capture the multi-scale spatial and channel information of blurred images, we introduce a multi-scale feature extraction module (MS-FE) based on depthwise separable convolutions, which provides rich target features for deblurring. We propose a frequency enhanced blur perception module (FEBP) that employs wavelet transforms to extract high-frequency details and utilizes multi-strip pooling to perceive non-uniform blur, combining multi-scale information with frequency enhancement to improve the restoration of image texture details. Experimental results on the GoPro and HIDE datasets demonstrate that the proposed method achieves superior deblurring performance in both visual quality and objective evaluation metrics. Furthermore, in downstream object detection tasks, the proposed blind image deblurring algorithm significantly improves detection accuracy, further validating its effectiveness and robustness in the field of image deblurring.
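To make the idea of using a wavelet transform to isolate high-frequency detail concrete, the sketch below applies a single-level 1D Haar transform to a short signal. The abstract does not say which wavelet family MFENet uses or how the coefficients feed the network, so the choice of Haar, the 1D setting, and the name `haar_dwt_1d` are assumptions made purely for illustration.

```python
import math


def haar_dwt_1d(signal):
    """Single-level orthonormal Haar transform of an even-length sequence.

    Illustrative only; not the FEBP module from the paper.
    Returns (approx, detail): approx holds scaled pairwise sums
    (low-frequency content), detail holds scaled pairwise differences
    (high-frequency content such as edges).
    """
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    scale = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / scale
              for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / scale
              for i in range(0, len(signal), 2)]
    return approx, detail


# The flat pair (4, 4) yields zero detail; the step (2, 6) does not.
approx, detail = haar_dwt_1d([4.0, 4.0, 2.0, 6.0])
```

Flat regions produce zero detail coefficients while intensity jumps produce large ones, which is why detail bands are a natural place to look for the texture that blur removes.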

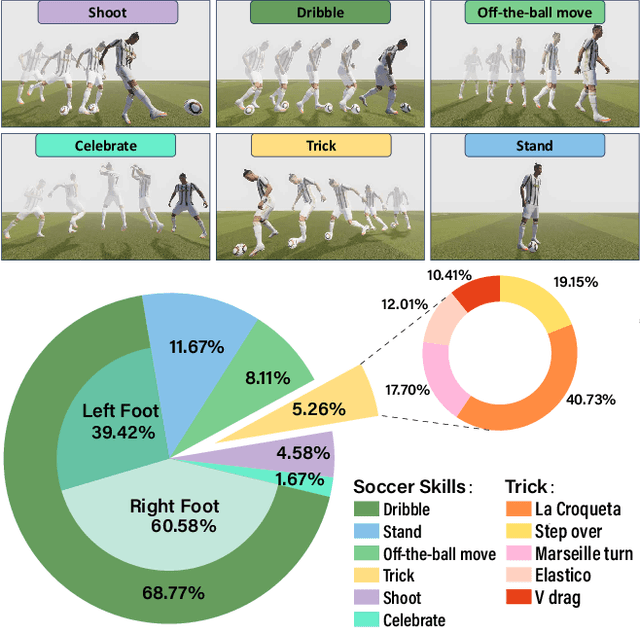

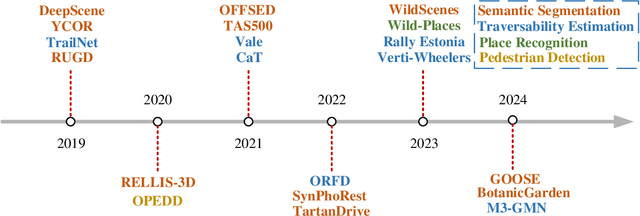

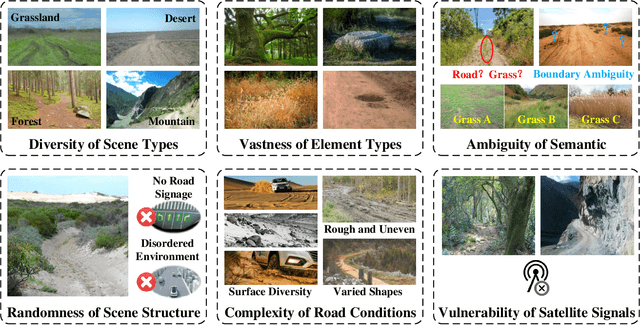

Autonomous Driving in Unstructured Environments: How Far Have We Come?

Oct 10, 2024

Abstract: Research on autonomous driving in unstructured outdoor environments is less advanced than in structured urban settings due to challenges like environmental diversity and scene complexity. These environments, such as rural areas and rugged terrains, pose unique obstacles that are not common in structured urban areas. Despite these difficulties, autonomous driving in unstructured outdoor environments is crucial for applications in agriculture, mining, and military operations. Our survey reviews over 250 papers on autonomous driving in unstructured outdoor environments, covering offline mapping, pose estimation, environmental perception, path planning, end-to-end autonomous driving, datasets, and relevant challenges. We also discuss emerging trends and future research directions. This review aims to consolidate knowledge and encourage further research on autonomous driving in unstructured environments. To support ongoing work, we maintain an active repository with up-to-date literature and open-source projects at: https://github.com/chaytonmin/Survey-Autonomous-Driving-in-Unstructured-Environments.

Learn2Talk: 3D Talking Face Learns from 2D Talking Face

Apr 19, 2024

Abstract: Speech-driven facial animation methods usually fall into two main classes, 3D and 2D talking face, both of which have attracted considerable research attention in recent years. However, to the best of our knowledge, research on 3D talking face has not gone as deep as that on 2D talking face in the aspects of lip-synchronization (lip-sync) and speech perception. To mind the gap between the two sub-fields, we propose a learning framework named Learn2Talk, which can construct a better 3D talking face network by exploiting two expertise points from the field of 2D talking face. Firstly, inspired by the audio-video sync network, a 3D sync-lip expert model is devised for the pursuit of lip-sync between audio and 3D facial motion. Secondly, a teacher model selected from 2D talking face methods is used to guide the training of the audio-to-3D motions regression network to yield higher 3D vertex accuracy. Extensive experiments show the advantages of the proposed framework in terms of lip-sync, vertex accuracy and speech perception, compared with state-of-the-art methods. Finally, we show two applications of the proposed framework: audio-visual speech recognition and speech-driven 3D Gaussian Splatting based avatar animation.

OmniColor: A Global Camera Pose Optimization Approach of LiDAR-360Camera Fusion for Colorizing Point Clouds

Apr 06, 2024

Abstract: A colored point cloud, as a simple and efficient 3D representation, has many advantages in various fields, including robotic navigation and scene reconstruction. This representation is now commonly used in 3D reconstruction tasks relying on cameras and LiDARs. However, fusing data from these two types of sensors is poorly performed in many existing frameworks, leading to unsatisfactory mapping results, mainly due to inaccurate camera poses. This paper presents OmniColor, a novel and efficient algorithm to colorize point clouds using an independent 360-degree camera. Given a LiDAR-based point cloud and a sequence of panorama images with initial coarse camera poses, our objective is to jointly optimize the poses of all frames for mapping images onto geometric reconstructions. Our pipeline works in an off-the-shelf manner that does not require any feature extraction or matching process. Instead, we find optimal poses by directly maximizing the photometric consistency of LiDAR maps. In experiments, we show that our method can overcome the severe visual distortion of omnidirectional images and greatly benefit from the wide field of view (FOV) of 360-degree cameras to reconstruct various scenarios with accuracy and stability. The code will be released at https://github.com/liubonan123/OmniColor/.
