Guoyang Zhao

ALORE: Autonomous Large-Object Rearrangement with a Legged Manipulator

Feb 04, 2026

Abstract:Endowing robots with the ability to rearrange various large and heavy objects, such as furniture, can substantially alleviate human workload. However, this task is extremely challenging due to the need to interact with diverse objects and efficiently rearrange multiple objects in complex environments while ensuring collision-free loco-manipulation. In this work, we present ALORE, an autonomous large-object rearrangement system for a legged manipulator that can rearrange various large objects across diverse scenarios. The proposed system is characterized by three main features: (i) a hierarchical reinforcement learning training pipeline for multi-object environment learning, where a high-level object velocity controller is trained on top of a low-level whole-body controller to achieve efficient and stable joint learning across multiple objects; (ii) two key modules, a unified interaction configuration representation and an object velocity estimator, that allow a single policy to regulate planar velocity of diverse objects accurately; and (iii) a task-and-motion planning framework that jointly optimizes object visitation order and object-to-target assignment, improving task efficiency while enabling online replanning. Comparisons against strong baselines show consistent superiority in policy generalization, object-velocity tracking accuracy, and multi-object rearrangement efficiency. Key modules are systematically evaluated, and extensive simulations and real-world experiments are conducted to validate the robustness and effectiveness of the entire system, which successfully completes 8 continuous loops to rearrange 32 chairs over nearly 40 minutes without a single failure, and executes long-distance autonomous rearrangement over an approximately 40 m route. The open-source packages are available at https://zhihaibi.github.io/Alore/.
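
To make the idea of jointly choosing a visitation order and an object-to-target assignment concrete, here is a minimal sketch. It is not the ALORE planner: it is an exhaustive search over a toy 2D instance, and the function name `plan_rearrangement`, the straight-line distance cost, and the point-robot model are all assumptions made for illustration. The search is factorial in the number of objects, so it only suits very small instances.

```python
from itertools import permutations
from math import dist


def plan_rearrangement(robot, objects, targets):
    """Exhaustively search visitation order and object-to-target assignment.

    Hypothetical toy model: the robot walks in a straight line to an object,
    moves it in a straight line to its target, and continues from there.
    Returns (total_distance, order, assignment), where assignment[i] is the
    target index chosen for object i.
    """
    n = len(objects)
    best = None
    for assignment in permutations(range(n)):   # object i -> targets[assignment[i]]
        for order in permutations(range(n)):    # sequence in which objects are moved
            pos, cost = robot, 0.0
            for i in order:
                goal = targets[assignment[i]]
                cost += dist(pos, objects[i])   # approach the object
                cost += dist(objects[i], goal)  # move it to its target
                pos = goal                      # robot ends up at the target
            if best is None or cost < best[0]:
                best = (cost, order, assignment)
    return best


if __name__ == "__main__":
    cost, order, assignment = plan_rearrangement(
        robot=(0, 0),
        objects=[(1, 0), (5, 0)],
        targets=[(6, 0), (2, 0)],
    )
    print(cost, order, assignment)
```

In this toy instance, sending each object to its nearest target and visiting the closer object first gives the shortest route, which illustrates why order and assignment are worth optimizing together rather than one after the other.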

Vision-Language-Action Models for Autonomous Driving: Past, Present, and Future

Dec 18, 2025

Abstract:Autonomous driving has long relied on modular "Perception-Decision-Action" pipelines, where hand-crafted interfaces and rule-based components often break down in complex or long-tailed scenarios. Their cascaded design further propagates perception errors, degrading downstream planning and control. Vision-Action (VA) models address some limitations by learning direct mappings from visual inputs to actions, but they remain opaque, sensitive to distribution shifts, and lack structured reasoning or instruction-following capabilities. Recent progress in Large Language Models (LLMs) and multimodal learning has motivated the emergence of Vision-Language-Action (VLA) frameworks, which integrate perception with language-grounded decision making. By unifying visual understanding, linguistic reasoning, and actionable outputs, VLAs offer a pathway toward more interpretable, generalizable, and human-aligned driving policies. This work provides a structured characterization of the emerging VLA landscape for autonomous driving. We trace the evolution from early VA approaches to modern VLA frameworks and organize existing methods into two principal paradigms: End-to-End VLA, which integrates perception, reasoning, and planning within a single model, and Dual-System VLA, which separates slow deliberation (via VLMs) from fast, safety-critical execution (via planners). Within these paradigms, we further distinguish subclasses such as textual vs. numerical action generators and explicit vs. implicit guidance mechanisms. We also summarize representative datasets and benchmarks for evaluating VLA-based driving systems and highlight key challenges and open directions, including robustness, interpretability, and instruction fidelity. Overall, this work aims to establish a coherent foundation for advancing human-compatible autonomous driving systems.

GP3: A 3D Geometry-Aware Policy with Multi-View Images for Robotic Manipulation

Sep 19, 2025

Abstract:Effective robotic manipulation relies on a precise understanding of 3D scene geometry, and one of the most straightforward ways to acquire such geometry is through multi-view observations. Motivated by this, we present GP3 -- a 3D geometry-aware robotic manipulation policy that leverages multi-view input. GP3 employs a spatial encoder to infer dense spatial features from RGB observations, which enable the estimation of depth and camera parameters, leading to a compact yet expressive 3D scene representation tailored for manipulation. This representation is fused with language instructions and translated into continuous actions via a lightweight policy head. Comprehensive experiments demonstrate that GP3 consistently outperforms state-of-the-art methods on simulated benchmarks. Furthermore, GP3 transfers effectively to real-world robots without depth sensors or pre-mapped environments, requiring only minimal fine-tuning. These results highlight GP3 as a practical, sensor-agnostic solution for geometry-aware robotic manipulation.

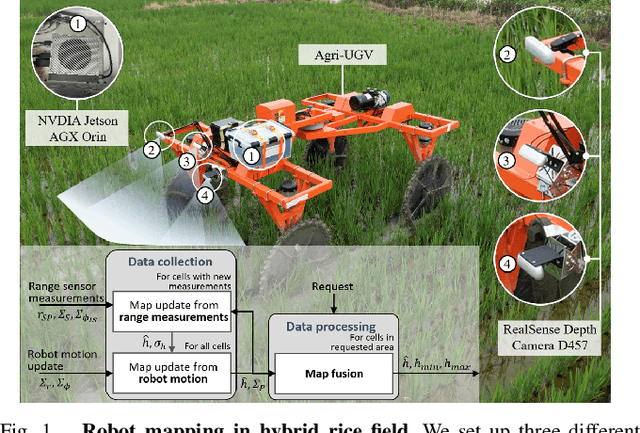

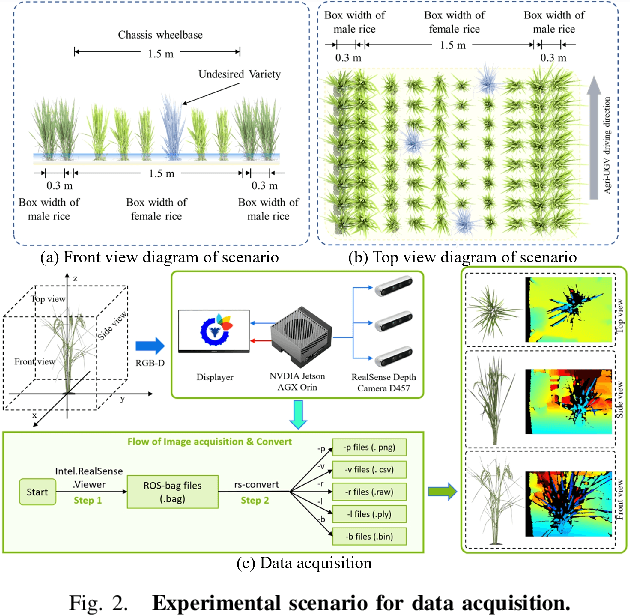

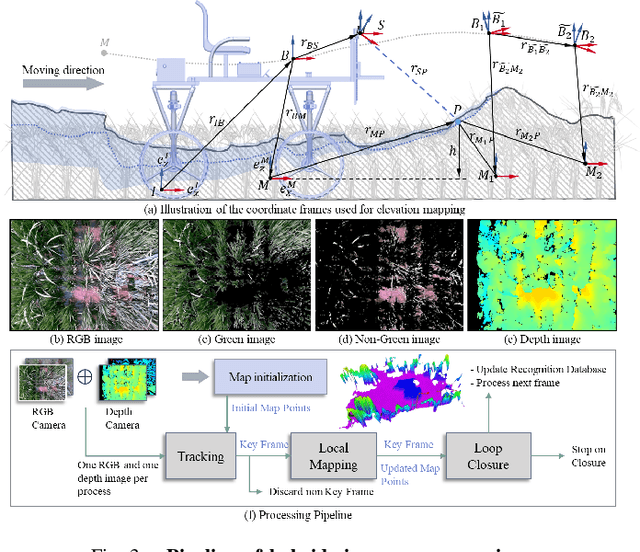

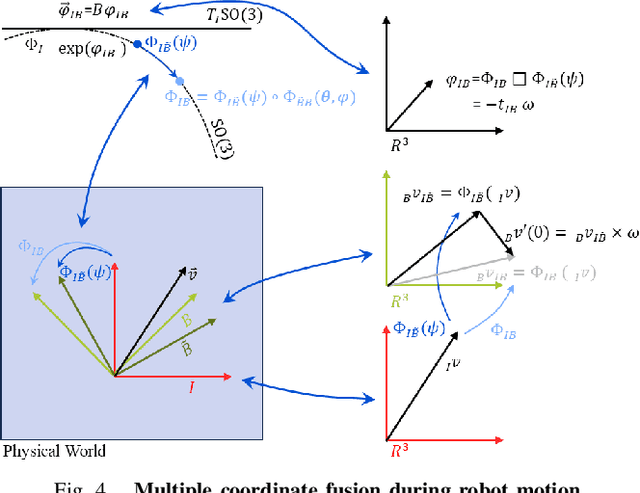

Motion-Coupled Mapping Algorithm for Hybrid Rice Canopy

Feb 22, 2025

Abstract:This paper presents a motion-coupled mapping algorithm for contour mapping of hybrid rice canopies, specifically designed for Agricultural Unmanned Ground Vehicles (Agri-UGV) navigating complex and unknown rice fields. Precise canopy mapping is essential for Agri-UGVs to plan efficient routes and avoid protected zones. The motion control of Agri-UGVs, tasked with impurity removal and other operations, depends heavily on accurate estimation of rice canopy height and structure. To achieve this, the proposed algorithm integrates real-time RGB-D sensor data with kinematic and inertial measurements, enabling efficient mapping and proprioceptive localization. The algorithm produces grid-based elevation maps that reflect the probabilistic distribution of canopy contours, accounting for motion-induced uncertainties. It is implemented on a high-clearance Agri-UGV platform and tested in various environments, including both controlled and dynamic rice field settings. This approach significantly enhances the mapping accuracy and operational reliability of Agri-UGVs, contributing to more efficient autonomous agricultural operations.

* Best Paper Award First Place - IROS 2024 Workshop on AI and Robotics For Future Farming
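
The abstract above describes grid-based elevation maps that carry a probabilistic height estimate and account for motion-induced uncertainty. A minimal sketch of that general idea follows. It is not the paper's algorithm: it uses a standard one-dimensional Kalman-style fusion per cell, and the class name `ElevationGrid`, the method names, and the constant `motion_var` inflation are hypothetical choices made for illustration.

```python
class ElevationGrid:
    """Toy elevation map: each cell stores a height estimate and its variance."""

    def __init__(self, resolution):
        self.resolution = resolution  # cell edge length in meters
        self.cells = {}               # (ix, iy) -> (height, variance)

    def _index(self, x, y):
        # Map metric coordinates to integer cell indices.
        return (int(x // self.resolution), int(y // self.resolution))

    def update(self, x, y, z, meas_var):
        """Fuse one height measurement z (variance meas_var) into its cell."""
        key = self._index(x, y)
        if key not in self.cells:
            self.cells[key] = (z, meas_var)
            return
        h, var = self.cells[key]
        gain = var / (var + meas_var)  # scalar Kalman gain
        self.cells[key] = (h + gain * (z - h), (1.0 - gain) * var)

    def inflate(self, motion_var):
        """Grow every cell's variance to reflect pose uncertainty after motion."""
        for key, (h, var) in list(self.cells.items()):
            self.cells[key] = (h, var + motion_var)


if __name__ == "__main__":
    grid = ElevationGrid(resolution=0.5)
    grid.update(0.6, 0.2, 1.0, meas_var=0.04)
    grid.update(0.9, 0.4, 1.2, meas_var=0.04)  # falls in the same cell
    grid.inflate(motion_var=0.01)
    print(grid.cells)
```

Repeated measurements shrink a cell's variance, while `inflate` grows it again as the vehicle moves, so cells that have not been observed recently are trusted less.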

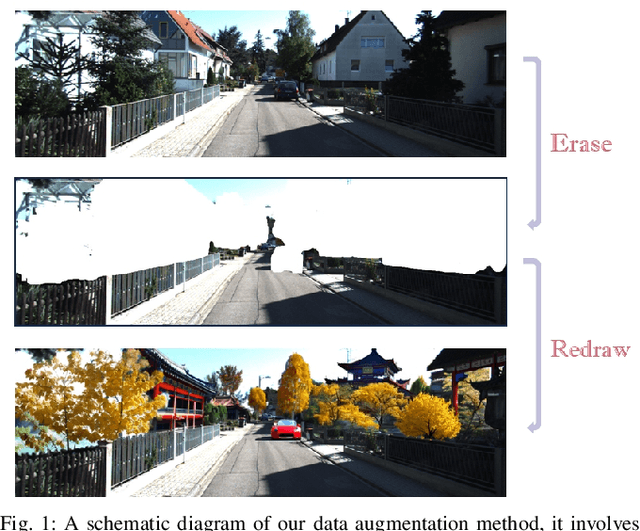

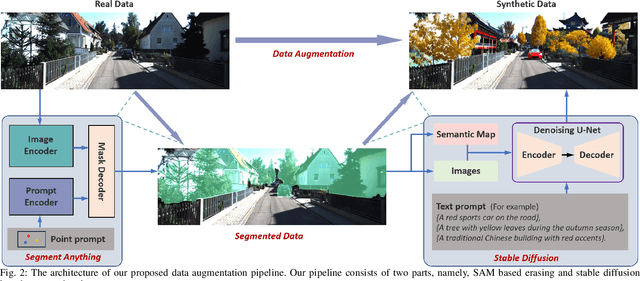

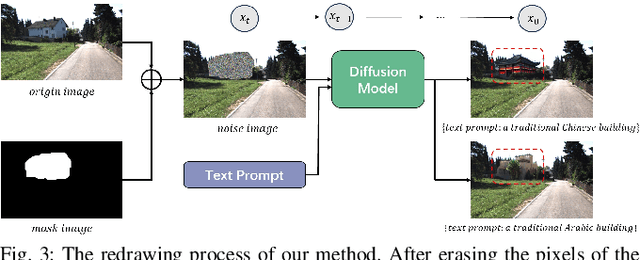

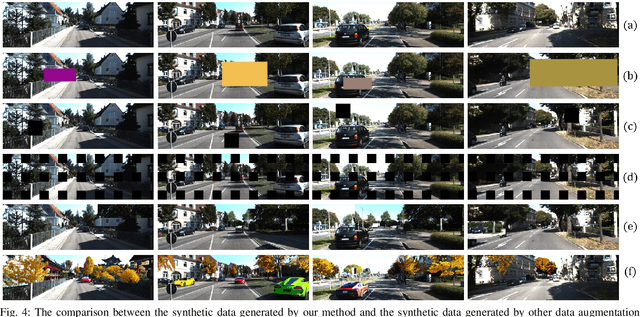

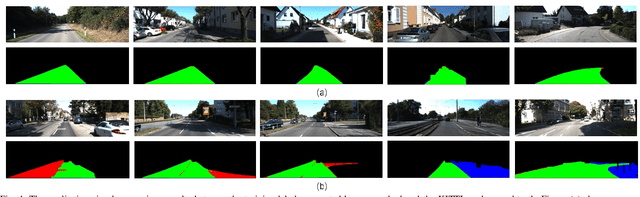

Erase, then Redraw: A Novel Data Augmentation Approach for Free Space Detection Using Diffusion Model

Sep 30, 2024

Abstract:Data augmentation is one of the most common tools in deep learning, underpinning many recent advances including tasks such as classification, detection, and semantic segmentation. The standard approach to data augmentation involves simple transformations like rotation and flipping to generate new images. However, these new images often lack diversity along the main semantic dimensions within the data. Traditional data augmentation methods cannot alter high-level semantic attributes such as the presence of vehicles, trees, and buildings in a scene to enhance data diversity. In recent years, the rapid development of generative models has injected new vitality into the field of data augmentation. In this paper, we address the lack of diversity in data augmentation for the road detection task by using a pre-trained text-to-image diffusion model to parameterize image-to-image transformations. Our method involves editing images using these diffusion models to change their semantics. In essence, we achieve this goal by erasing instances of real objects from the original dataset and generating new instances with similar semantics in the erased regions using the diffusion model, thereby expanding the original dataset. We evaluate our approach on the KITTI road dataset and achieve the best results compared to other data augmentation methods, which demonstrates the effectiveness of our proposed method.

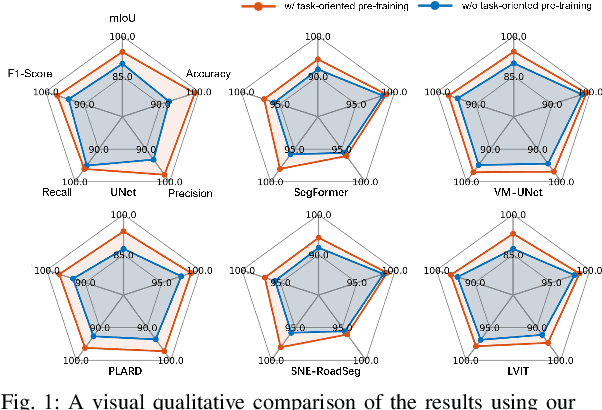

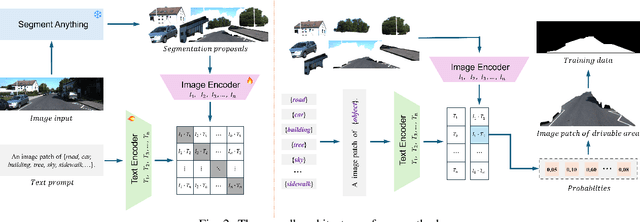

Task-Oriented Pre-Training for Drivable Area Detection

Sep 30, 2024

Abstract:Pre-training techniques play a crucial role in deep learning, enhancing models' performance across a variety of tasks. By initially training on large datasets and subsequently fine-tuning on task-specific data, pre-training provides a solid foundation for models, improving generalization abilities and accelerating convergence rates. This approach has seen significant success in the fields of natural language processing and computer vision. However, traditional pre-training methods necessitate large datasets and substantial computational resources; they learn shared features only through prolonged training and struggle to capture deeper, task-specific features. In this paper, we propose a task-oriented pre-training method that begins with generating redundant segmentation proposals using the Segment Anything (SAM) model. We then introduce a Specific Category Enhancement Fine-tuning (SCEF) strategy for fine-tuning the Contrastive Language-Image Pre-training (CLIP) model to select proposals most closely related to the drivable area from those generated by SAM. This approach can generate a large amount of coarse training data for pre-training models, which are further fine-tuned using manually annotated data, thereby improving the model's performance. Comprehensive experiments conducted on the KITTI road dataset demonstrate that our task-oriented pre-training method achieves an all-around performance improvement compared to models without pre-training. Moreover, our pre-training method not only surpasses traditional pre-training approaches but also achieves the best performance compared to state-of-the-art self-training methods.

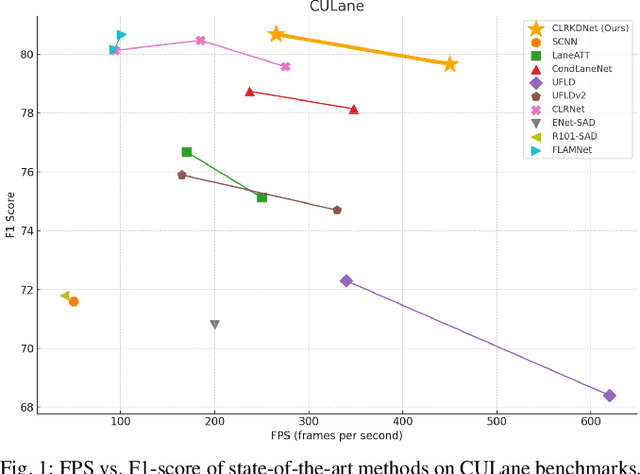

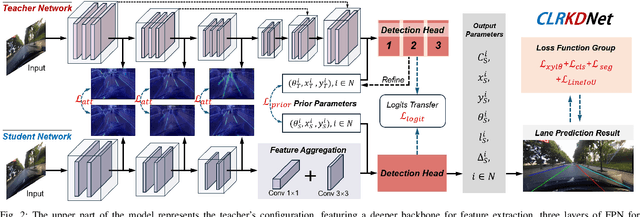

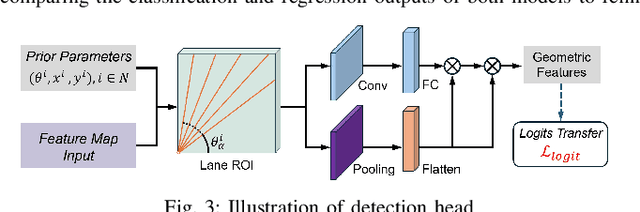

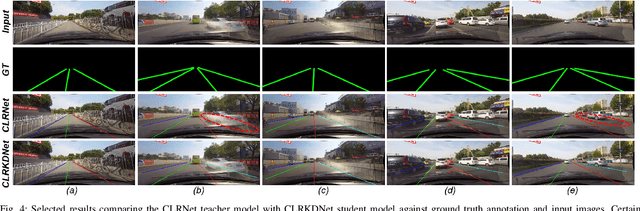

CLRKDNet: Speeding up Lane Detection with Knowledge Distillation

May 21, 2024

Abstract:Road lanes are integral components of the visual perception systems in intelligent vehicles, playing a pivotal role in safe navigation. In lane detection tasks, balancing accuracy with real-time performance is essential, yet existing methods often sacrifice one for the other. To address this trade-off, we introduce CLRKDNet, a streamlined model that balances detection accuracy with real-time performance. The state-of-the-art model CLRNet has demonstrated exceptional performance across various datasets, yet its computational overhead is substantial due to its Feature Pyramid Network (FPN) and multi-layer detection head architecture. Our method simplifies both the FPN structure and detection heads, redesigning them to incorporate a novel teacher-student distillation process alongside a newly introduced series of distillation losses. This combination reduces inference time by up to 60% while maintaining detection accuracy comparable to CLRNet. This strategic balance of accuracy and speed makes CLRKDNet a viable solution for real-time lane detection tasks in autonomous driving applications.
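
For readers unfamiliar with teacher-student distillation, the sketch below shows the generic temperature-scaled soft-label loss commonly used for it. This is not one of the distillation losses introduced for CLRKDNet, which the abstract does not specify; the function names and the default temperature are assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    The T^2 factor is the usual scaling that keeps gradient magnitudes
    comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [2.0, 0.5, -1.0]
    print(distillation_loss([2.0, 0.5, -1.0], teacher))  # student matches teacher
    print(distillation_loss([0.0, 0.0, 0.0], teacher))   # student is uninformed
```

The loss is zero when the student reproduces the teacher's softened distribution and grows as the two diverge, which is what lets a smaller student network inherit the behavior of a larger teacher.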

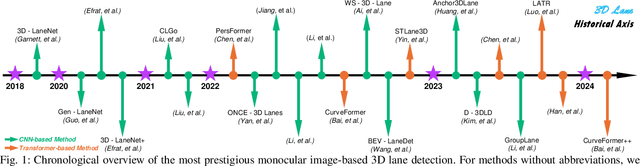

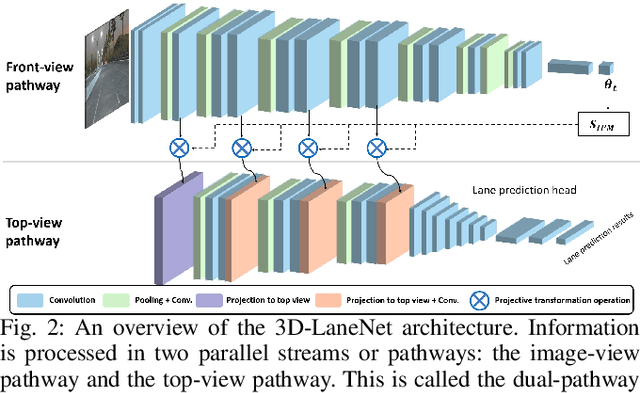

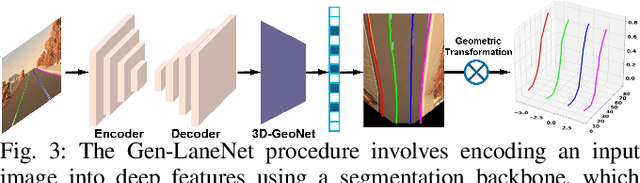

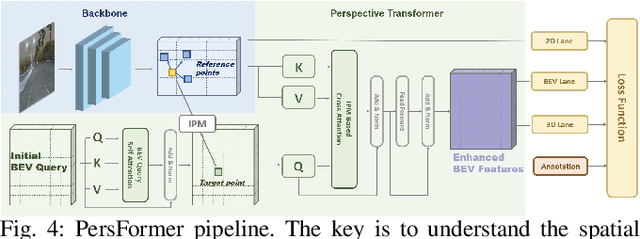

Monocular 3D lane detection for Autonomous Driving: Recent Achievements, Challenges, and Outlooks

Apr 10, 2024

Abstract:3D lane detection plays a crucial role in autonomous driving by extracting structural and traffic information from the road in 3D space to assist the self-driving car in rational, safe, and comfortable path planning and motion control. Given sensor costs and the color information that visual data provides, 3D lane detection based on monocular vision is one of the important research directions in autonomous driving and has attracted growing attention in both industry and academia. Unfortunately, recent progress in visual perception seems insufficient to develop completely reliable 3D lane detection algorithms, which also hinders the development of vision-based fully autonomous self-driving cars, i.e., achieving level 5 autonomous driving, driving like human-controlled cars. This is one of the conclusions drawn from this review paper: 3D lane detection algorithms for autonomous vehicles using visual sensors still have substantial room for improvement. Motivated by this, this review defines, analyzes, and reviews the current achievements in the field of 3D lane detection research, noting that the vast majority of current progress relies heavily on computationally complex deep learning models. In addition, this review covers the 3D lane detection pipeline, investigates the performance of state-of-the-art algorithms, analyzes the time complexity of cutting-edge modeling choices, and highlights the main achievements and limitations of current research efforts. The survey also includes a comprehensive discussion of available 3D lane detection datasets and the challenges that researchers have faced but have not yet resolved. Finally, our work outlines future research directions and welcomes researchers and practitioners to enter this exciting field.

OmniColor: A Global Camera Pose Optimization Approach of LiDAR-360Camera Fusion for Colorizing Point Clouds

Apr 06, 2024

Abstract:A colored point cloud, as a simple and efficient 3D representation, has many advantages in various fields, including robotic navigation and scene reconstruction. This representation is now commonly used in 3D reconstruction tasks relying on cameras and LiDARs. However, many existing frameworks fuse data from these two types of sensors poorly, leading to unsatisfactory mapping results, mainly due to inaccurate camera poses. This paper presents OmniColor, a novel and efficient algorithm to colorize point clouds using an independent 360-degree camera. Given a LiDAR-based point cloud and a sequence of panorama images with initial coarse camera poses, our objective is to jointly optimize the poses of all frames for mapping images onto geometric reconstructions. Our pipeline works in an off-the-shelf manner that does not require any feature extraction or matching process. Instead, we find optimal poses by directly maximizing the photometric consistency of LiDAR maps. In experiments, we show that our method can overcome the severe visual distortion of omnidirectional images and greatly benefit from the wide field of view (FOV) of 360-degree cameras to reconstruct various scenarios with accuracy and stability. The code will be released at https://github.com/liubonan123/OmniColor/.

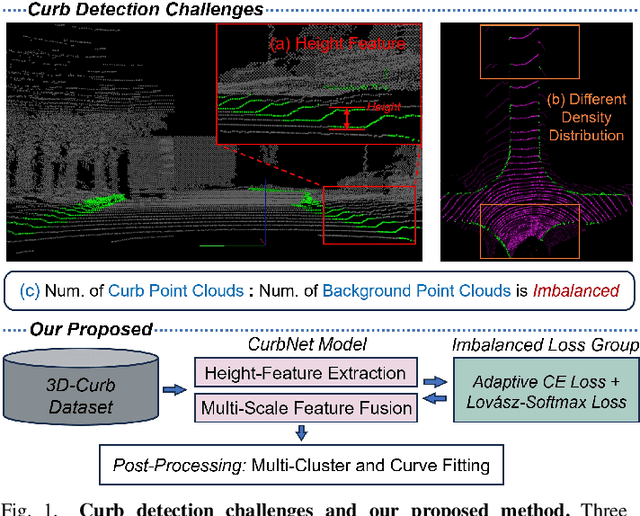

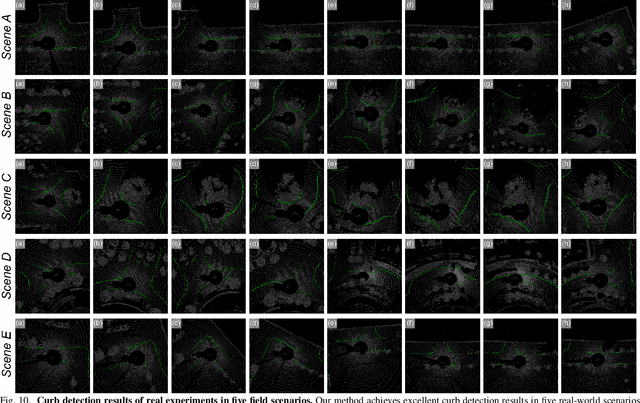

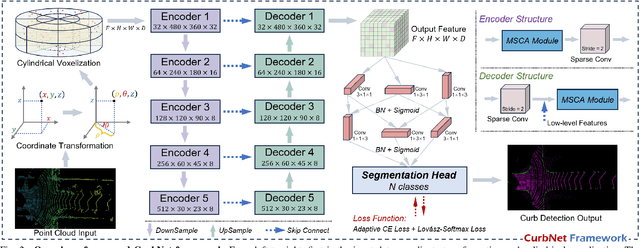

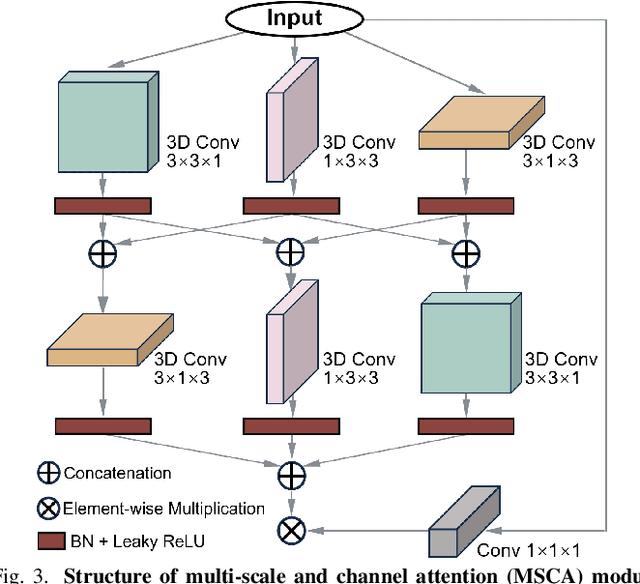

CurbNet: Curb Detection Framework Based on LiDAR Point Cloud Segmentation

Mar 25, 2024

Abstract:Curb detection is an important function in intelligent driving and can be used to determine drivable areas of the road. However, curbs are difficult to detect due to the complex road environment. This paper introduces CurbNet, a novel framework for curb detection, leveraging point cloud segmentation. Addressing the dearth of comprehensive curb datasets and the absence of 3D annotations, we have developed the 3D-Curb dataset, encompassing 7,100 frames, which represents the largest and most categorically diverse collection of curb point clouds currently available. Recognizing that curbs are primarily characterized by height variations, our approach harnesses spatially-rich 3D point clouds for training. To tackle the challenges presented by the uneven distribution of curb features on the xy-plane and their reliance on z-axis high-frequency features, we introduce the multi-scale and channel attention (MSCA) module, a bespoke solution designed to optimize detection performance. Moreover, we propose an adaptive weighted loss function group, specifically formulated to counteract the imbalance in the distribution of curb point clouds relative to other categories. Our extensive experimentation on 2 major datasets has yielded results that surpass existing benchmarks set by leading curb detection and point cloud segmentation models. By integrating multi-clustering and curve fitting techniques in our post-processing stage, we have substantially reduced noise in curb detection, thereby enhancing precision to 0.8744. Notably, CurbNet has achieved exceptional average metrics of over 0.95 at a tolerance of just 0.15 m, thereby establishing a new benchmark. Furthermore, corroborative real-world experiments and dataset analyses mutually validate each other, solidifying CurbNet's superior detection proficiency and its robust generalizability.
