Fulong Ma

LHPF: Look back the History and Plan for the Future in Autonomous Driving

Nov 26, 2024

Abstract:Decision-making and planning in autonomous driving critically reflect the safety of the system, making effective planning imperative. Current imitation learning-based planning algorithms often merge historical trajectories with present observations to predict future candidate paths. However, these algorithms typically assess the current and historical plans independently, leading to discontinuities in driving intentions and an accumulation of errors with each step in a discontinuous plan. To tackle this challenge, this paper introduces LHPF, an imitation learning planner that integrates historical planning information. Our approach employs a historical intention aggregation module that pools historical planning intentions, which are then combined with a spatial query vector to decode the final planning trajectory. Furthermore, we incorporate a comfort auxiliary task to enhance the human-like quality of the driving behavior. Extensive experiments using both real-world and synthetic data demonstrate that LHPF not only surpasses existing advanced learning-based planners in planning performance but also marks the first instance of a purely learning-based planner outperforming the expert. Additionally, the application of the historical intention aggregation module across various backbones highlights the considerable potential of the proposed method. The code will be made publicly available.

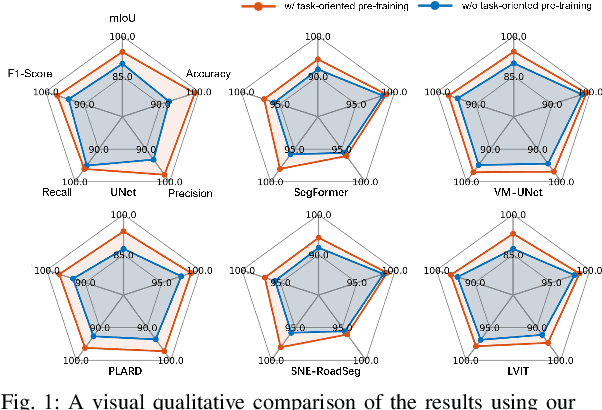

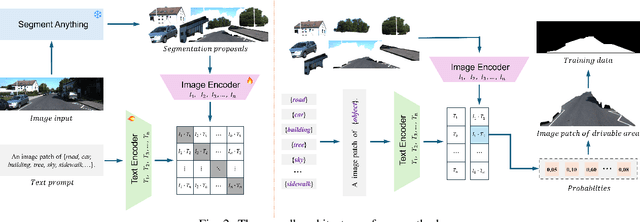

Task-Oriented Pre-Training for Drivable Area Detection

Sep 30, 2024

Abstract:Pre-training techniques play a crucial role in deep learning, enhancing models' performance across a variety of tasks. By initially training on large datasets and subsequently fine-tuning on task-specific data, pre-training provides a solid foundation for models, improving generalization abilities and accelerating convergence rates. This approach has seen significant success in the fields of natural language processing and computer vision. However, traditional pre-training methods necessitate large datasets and substantial computational resources, and they can only learn shared features through prolonged training and struggle to capture deeper, task-specific features. In this paper, we propose a task-oriented pre-training method that begins with generating redundant segmentation proposals using the Segment Anything (SAM) model. We then introduce a Specific Category Enhancement Fine-tuning (SCEF) strategy for fine-tuning the Contrastive Language-Image Pre-training (CLIP) model to select proposals most closely related to the drivable area from those generated by SAM. This approach can generate a large amount of coarse training data for pre-training models, which are further fine-tuned using manually annotated data, thereby improving the model's performance. Comprehensive experiments conducted on the KITTI road dataset demonstrate that our task-oriented pre-training method achieves an all-around performance improvement compared to models without pre-training. Moreover, our pre-training method not only surpasses the traditional pre-training approach but also achieves the best performance compared to state-of-the-art self-training methods.

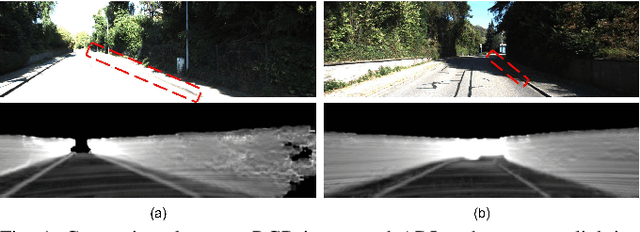

Annotation-Free Curb Detection Leveraging Altitude Difference Image

Sep 30, 2024

Abstract:Road curbs are crucial and ubiquitous traffic features, and detecting them is essential for ensuring the safety of autonomous vehicles. Current methods for detecting curbs primarily rely on camera imagery or LiDAR point clouds. Image-based methods are vulnerable to fluctuations in lighting conditions and exhibit poor robustness, while methods based on point clouds circumvent the issues associated with lighting variations. However, point cloud methods typically incur significant processing delays because of the large number of 3D points contained in each frame. Furthermore, the inherently unstructured characteristics of point clouds pose challenges for integrating the latest deep learning advancements into point cloud data applications. To address these issues, this work proposes an annotation-free curb detection method leveraging Altitude Difference Image (ADI), which effectively mitigates the aforementioned challenges. Given that methods based on deep learning generally demand extensive, manually annotated datasets, which are both expensive and labor-intensive to create, we present an Automatic Curb Annotator (ACA) module. This module utilizes a deterministic curb detection algorithm to automatically generate a vast quantity of training data. Consequently, it facilitates the training of the curb detection model without necessitating any manual annotation of data. Finally, by incorporating a post-processing module, we manage to achieve state-of-the-art results on the KITTI 3D curb dataset with considerably reduced processing delays compared to existing methods, which underscores the effectiveness of our approach in curb detection tasks.

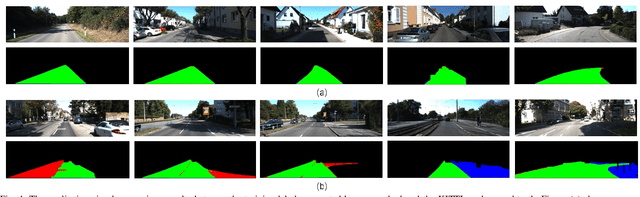

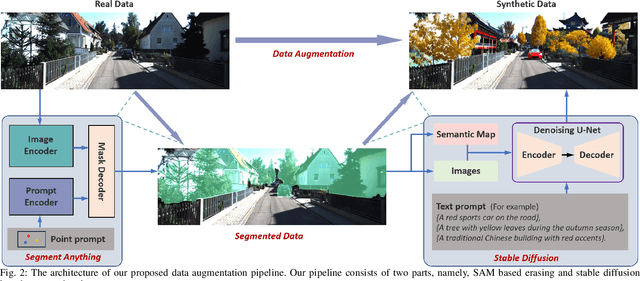

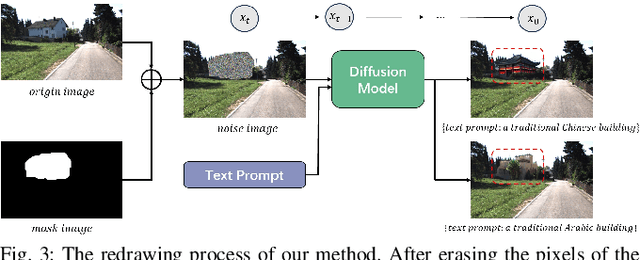

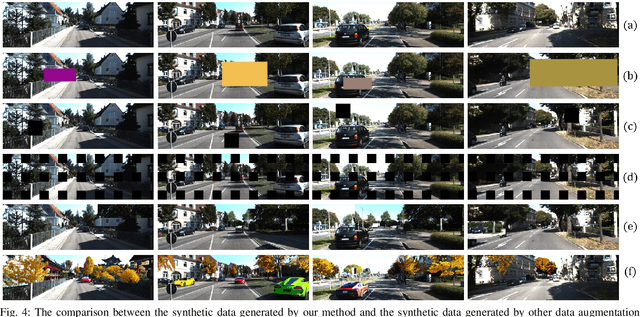

Erase, then Redraw: A Novel Data Augmentation Approach for Free Space Detection Using Diffusion Model

Sep 30, 2024

Abstract:Data augmentation is one of the most common tools in deep learning, underpinning many recent advances including tasks such as classification, detection, and semantic segmentation. The standard approach to data augmentation involves simple transformations like rotation and flipping to generate new images. However, these new images often lack diversity along the main semantic dimensions within the data. Traditional data augmentation methods cannot alter high-level semantic attributes such as the presence of vehicles, trees, and buildings in a scene to enhance data diversity. In recent years, the rapid development of generative models has injected new vitality into the field of data augmentation. In this paper, we address the lack of diversity in data augmentation for the road detection task by using a pre-trained text-to-image diffusion model to parameterize image-to-image transformations. Our method involves editing images using these diffusion models to change their semantics. In essence, we achieve this goal by erasing instances of real objects from the original dataset and generating new instances with similar semantics in the erased regions using the diffusion model, thereby expanding the original dataset. We evaluate our approach on the KITTI road dataset and achieve the best results compared to other data augmentation methods, which demonstrates the effectiveness of our proposed approach.

From Bird's-Eye to Street View: Crafting Diverse and Condition-Aligned Images with Latent Diffusion Model

Sep 02, 2024

Abstract:We explore Bird's-Eye View (BEV) generation, converting a BEV map into its corresponding multi-view street images. Valued for its unified spatial representation aiding multi-sensor fusion, BEV is pivotal for various autonomous driving applications. Creating accurate street-view images from BEV maps is essential for portraying complex traffic scenarios and enhancing driving algorithms. Concurrently, diffusion-based conditional image generation models have demonstrated remarkable outcomes, adept at producing diverse, high-quality, and condition-aligned results. Nonetheless, the training of these models demands substantial data and computational resources. Hence, exploring methods to fine-tune these advanced models, like Stable Diffusion, for specific conditional generation tasks emerges as a promising avenue. In this paper, we introduce a practical framework for generating images from a BEV layout. Our approach comprises two main components: the Neural View Transformation and the Street Image Generation. The Neural View Transformation phase converts the BEV map into aligned multi-view semantic segmentation maps by learning the shape correspondence between the BEV and perspective views. Subsequently, the Street Image Generation phase utilizes these segmentations as a condition to guide a fine-tuned latent diffusion model. This finetuning process ensures both view and style consistency. Our model leverages the generative capacity of large pretrained diffusion models within traffic contexts, effectively yielding diverse and condition-coherent street view images.

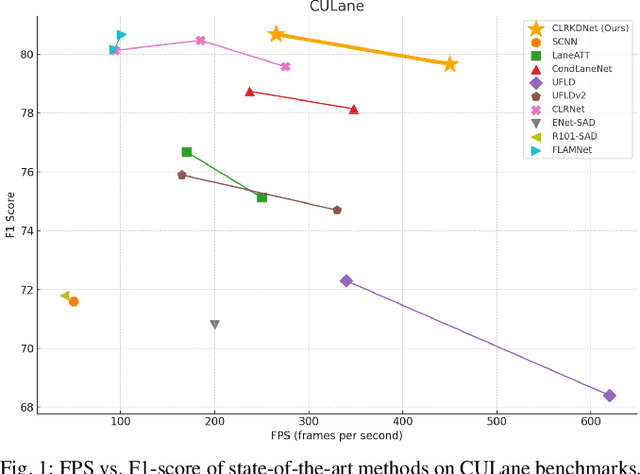

CLRKDNet: Speeding up Lane Detection with Knowledge Distillation

May 21, 2024

Abstract:Road lanes are integral components of the visual perception systems in intelligent vehicles, playing a pivotal role in safe navigation. In lane detection tasks, balancing accuracy with real-time performance is essential, yet existing methods often sacrifice one for the other. To address this trade-off, we introduce CLRKDNet, a streamlined model that balances detection accuracy with real-time performance. The state-of-the-art model CLRNet has demonstrated exceptional performance across various datasets, yet its computational overhead is substantial due to its Feature Pyramid Network (FPN) and multi-layer detection head architecture. Our method simplifies both the FPN structure and detection heads, redesigning them to incorporate a novel teacher-student distillation process alongside a newly introduced series of distillation losses. This combination reduces inference time by up to 60% while maintaining detection accuracy comparable to CLRNet. This strategic balance of accuracy and speed makes CLRKDNet a viable solution for real-time lane detection tasks in autonomous driving applications.
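For readers unfamiliar with teacher-student distillation, the following is a minimal sketch of the generic temperature-scaled logit distillation loss (soft-label KL divergence). It is not the set of distillation losses introduced in CLRKDNet, which the abstract does not specify; the function names `softmax` and `distillation_loss` and the default temperature are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions.

    Generic logit distillation, used here only as an illustration;
    the T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [2.0, 0.5, -1.0]   # hypothetical teacher logits
    student = [1.0, 1.0, 0.0]    # hypothetical student logits
    print(distillation_loss(student, teacher))
```

The loss is zero when the student reproduces the teacher's logits and grows as the two softened distributions diverge; in practice it is usually added to the ordinary task loss with a weighting factor.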

Dragtraffic: A Non-Expert Interactive and Point-Based Controllable Traffic Scene Generation Framework

Apr 19, 2024

Abstract:The evaluation and training of autonomous driving systems require diverse and scalable corner cases. However, most existing scene generation methods lack controllability, accuracy, and versatility, resulting in unsatisfactory generation results. To address this problem, we propose Dragtraffic, a generalized, point-based, and controllable traffic scene generation framework based on conditional diffusion. Dragtraffic enables non-experts to generate a variety of realistic driving scenarios for different types of traffic agents through an adaptive mixture expert architecture. We use a regression model to provide a general initial solution and a refinement process based on the conditional diffusion model to ensure diversity. User-customized context is introduced through cross-attention to ensure high controllability. Experiments on a real-world driving dataset show that Dragtraffic outperforms existing methods in terms of authenticity, diversity, and freedom.

Monocular 3D lane detection for Autonomous Driving: Recent Achievements, Challenges, and Outlooks

Apr 10, 2024

Abstract:3D lane detection plays a crucial role in autonomous driving by extracting structural and traffic information from the road in 3D space to assist the self-driving car in rational, safe, and comfortable path planning and motion control. Due to the consideration of sensor costs and the advantages of visual data in color information, in practical applications, 3D lane detection based on monocular vision is one of the important research directions in the field of autonomous driving, which has attracted more and more attention in both industry and academia. Unfortunately, recent progress in visual perception seems insufficient to develop completely reliable 3D lane detection algorithms, which also hinders the development of vision-based fully autonomous self-driving cars, i.e., achieving level 5 autonomous driving, driving like human-controlled cars. This is one of the conclusions drawn from this review paper: there is still a lot of room for improvement and significant improvements are still needed in the 3D lane detection algorithm for autonomous driving cars using visual sensors. Motivated by this, this review defines, analyzes, and reviews the current achievements in the field of 3D lane detection research, and the vast majority of the current progress relies heavily on computationally complex deep learning models. In addition, this review covers the 3D lane detection pipeline, investigates the performance of state-of-the-art algorithms, analyzes the time complexity of cutting-edge modeling choices, and highlights the main achievements and limitations of current research efforts. The survey also includes a comprehensive discussion of available 3D lane detection datasets and the challenges that researchers have faced but have not yet resolved. Finally, our work outlines future research directions and welcomes researchers and practitioners to enter this exciting field.

CurbNet: Curb Detection Framework Based on LiDAR Point Cloud Segmentation

Mar 25, 2024

Abstract:Curb detection is an important function in intelligent driving and can be used to determine drivable areas of the road. However, curbs are difficult to detect due to the complex road environment. This paper introduces CurbNet, a novel framework for curb detection, leveraging point cloud segmentation. Addressing the dearth of comprehensive curb datasets and the absence of 3D annotations, we have developed the 3D-Curb dataset, encompassing 7,100 frames, which represents the largest and most categorically diverse collection of curb point clouds currently available. Recognizing that curbs are primarily characterized by height variations, our approach harnesses spatially-rich 3D point clouds for training. To tackle the challenges presented by the uneven distribution of curb features on the xy-plane and their reliance on z-axis high-frequency features, we introduce the multi-scale and channel attention (MSCA) module, a bespoke solution designed to optimize detection performance. Moreover, we propose an adaptive weighted loss function group, specifically formulated to counteract the imbalance in the distribution of curb point clouds relative to other categories. Our extensive experimentation on two major datasets has yielded results that surpass existing benchmarks set by leading curb detection and point cloud segmentation models. By integrating multi-clustering and curve fitting techniques in our post-processing stage, we have substantially reduced noise in curb detection, thereby enhancing precision to 0.8744. Notably, CurbNet has achieved exceptional average metrics of over 0.95 at a tolerance of just 0.15m, thereby establishing a new benchmark. Furthermore, corroborative real-world experiments and dataset analyses mutually validate each other, solidifying CurbNet's superior detection proficiency and its robust generalizability.

Every Dataset Counts: Scaling up Monocular 3D Object Detection with Joint Datasets Training

Oct 02, 2023

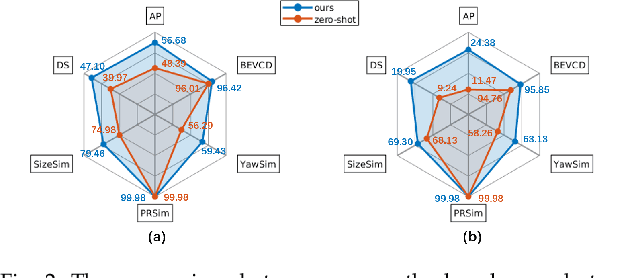

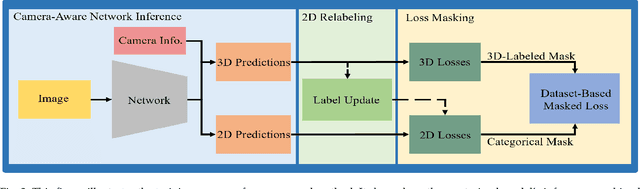

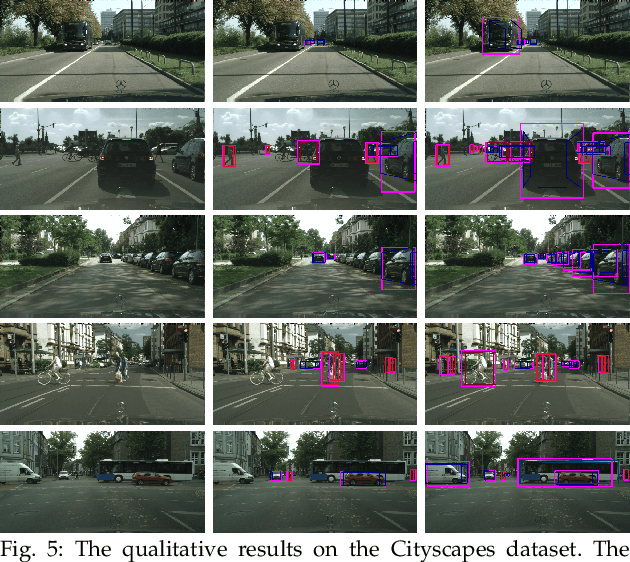

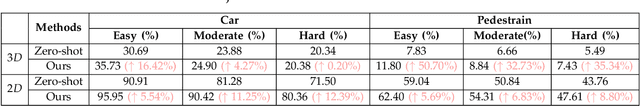

Abstract:Monocular 3D object detection plays a crucial role in autonomous driving. However, existing monocular 3D detection algorithms depend on 3D labels derived from LiDAR measurements, which are costly to acquire for new datasets and challenging to deploy in novel environments. Specifically, this study investigates the pipeline for training a monocular 3D object detection model on a diverse collection of 3D and 2D datasets. The proposed framework comprises three components: (1) a robust monocular 3D model capable of functioning across various camera settings, (2) a selective-training strategy to accommodate datasets with differing class annotations, and (3) a pseudo 3D training approach using 2D labels to enhance detection performance in scenes containing only 2D labels. With this framework, we can train models on a joint set of various open 3D/2D datasets to obtain models with significantly stronger generalization capability and enhanced performance on new datasets with only 2D labels. We conduct extensive experiments on KITTI/nuScenes/ONCE/Cityscapes/BDD100K datasets to demonstrate the scaling ability of the proposed method.
