Peng Hou

Annotation-Free Curb Detection Leveraging Altitude Difference Image

Sep 30, 2024

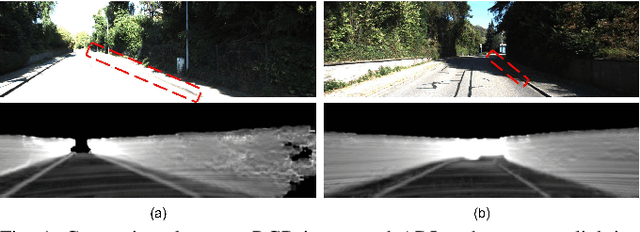

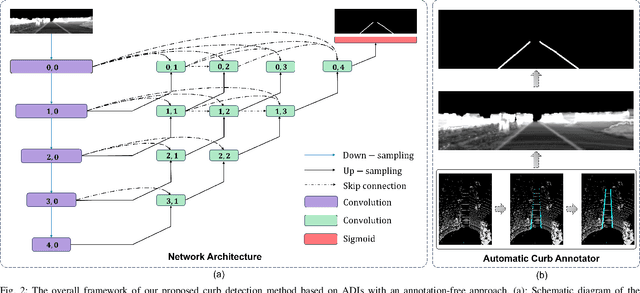

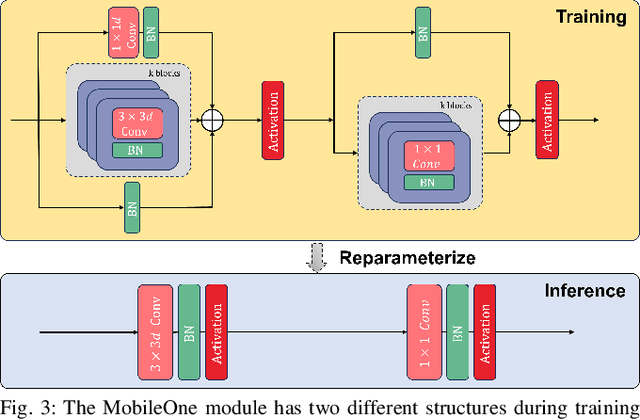

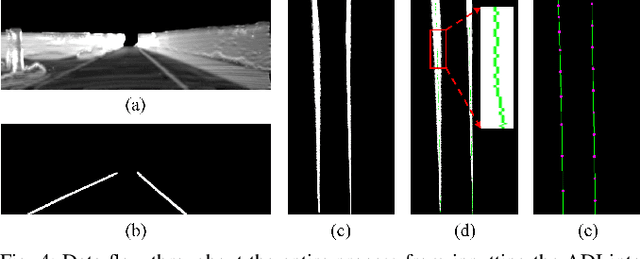

Abstract: Road curbs are crucial and ubiquitous traffic features that are essential for the safety of autonomous vehicles. Current curb detection methods rely primarily on camera imagery or LiDAR point clouds. Image-based methods are vulnerable to changes in lighting and exhibit poor robustness. Point-cloud-based methods avoid the lighting problem, but they typically incur significant processing delays because each frame contains a large number of 3D points. Furthermore, the inherently unstructured nature of point clouds makes it difficult to apply recent deep learning advances to point cloud data. To address these issues, this work proposes an annotation-free curb detection method that leverages the Altitude Difference Image (ADI). Because deep learning methods generally demand extensive manually annotated datasets, which are expensive and labor-intensive to create, we present an Automatic Curb Annotator (ACA) module. This module uses a deterministic curb detection algorithm to automatically generate a large quantity of training data, so the curb detection model can be trained without any manual annotation. Finally, by incorporating a post-processing module, we achieve state-of-the-art results on the KITTI 3D curb dataset with considerably lower processing delays than existing methods, which underscores the effectiveness of our approach.

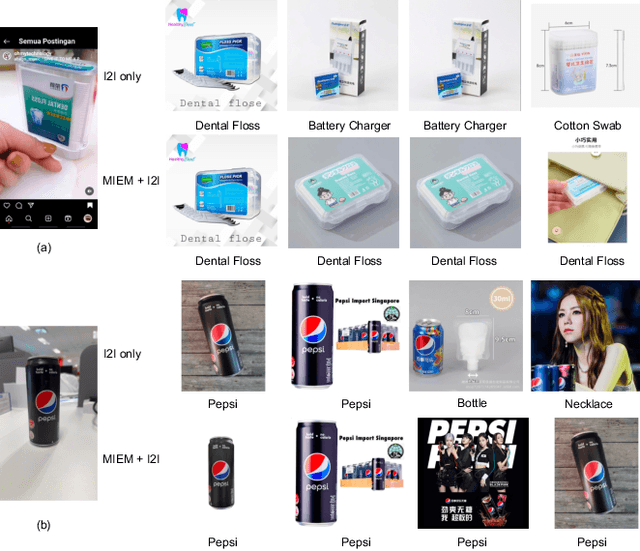

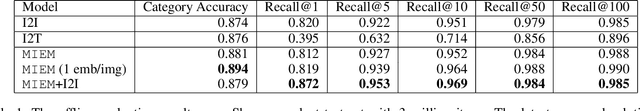

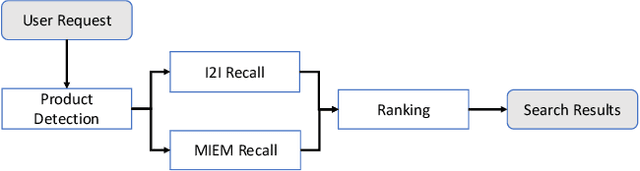

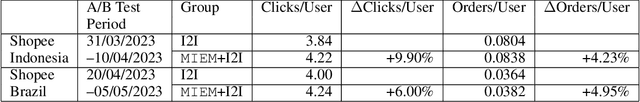

Transformer-empowered Multi-modal Item Embedding for Enhanced Image Search in E-Commerce

Nov 29, 2023

Abstract: Over the past decade, significant advances have been made in image search for e-commerce applications. Traditional image-to-image retrieval models, which focus solely on image details such as texture, tend to overlook useful semantic information contained within the images. As a result, the retrieved products may share similar image details yet fail to fulfil the user's search goals. Moreover, using image-to-image retrieval models for products with multiple images incurs significant online storage overhead for product features and requires complex mapping implementations. In this paper, we report the design and deployment of the Multi-modal Item Embedding Model (MIEM), which addresses these limitations. It uses both textual information and multiple images of a product to construct meaningful product features. By leveraging semantic information from images, MIEM effectively supplements the image search process and improves the overall accuracy of retrieval results. MIEM has become an integral part of the Shopee image search platform. Since its deployment in March 2023, it has achieved a 9.90% increase in clicks per user and a 4.23% increase in orders per user for the image search feature on the Shopee e-commerce platform.

Hearing Lips: Improving Lip Reading by Distilling Speech Recognizers

Nov 26, 2019

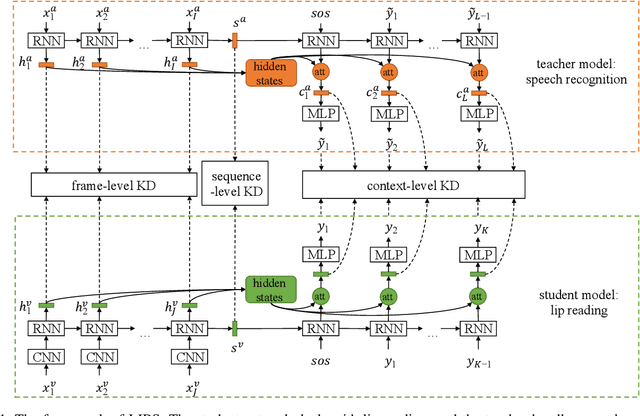

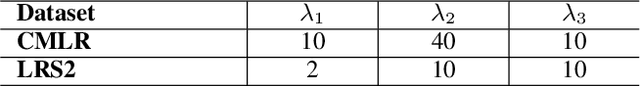

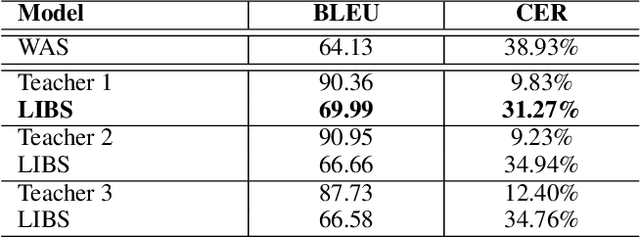

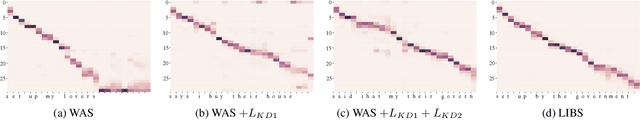

Abstract: Lip reading has witnessed unparalleled development in recent years thanks to deep learning and the availability of large-scale datasets. Despite the encouraging results achieved, the performance of lip reading unfortunately remains inferior to that of its counterpart, speech recognition, because the ambiguous nature of lip actuations makes it challenging to extract discriminant features from lip movement videos. In this paper, we propose a new method, termed Lip by Speech (LIBS), whose goal is to strengthen lip reading by learning from speech recognizers. The rationale behind our approach is that the features extracted from speech recognizers may provide complementary and discriminant clues that are difficult to obtain from the subtle movements of the lips, and consequently facilitate the training of lip readers. Specifically, this is achieved by distilling multi-granularity knowledge from speech recognizers to lip readers. To conduct this cross-modal knowledge distillation, we use an efficient alignment scheme to handle the inconsistent lengths of the audio and video sequences, as well as an innovative filtering strategy to refine the speech recognizer's predictions. The proposed method achieves new state-of-the-art performance on the CMLR and LRS2 datasets, outperforming the baseline by margins of 7.66% and 2.75% in character error rate, respectively.
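
For readers unfamiliar with the metric cited above, character error rate (CER) is conventionally the character-level edit distance between a predicted transcript and its reference, divided by the reference length. The following is a minimal sketch of that conventional definition. The function names are illustrative, and whether the paper normalizes in exactly this way is an assumption rather than something the abstract states.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance between two strings, counted in characters."""
    # prev[j] holds the distance between the processed prefix of ref and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]  # turning ref[:i] into an empty string takes i deletions
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # delete a character from ref
                curr[j - 1] + 1,     # insert a character from hyp
                prev[j - 1] + cost,  # substitute, or match at no cost
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """Conventional CER: edit distance normalized by the reference length."""
    if not ref:
        raise ValueError("reference transcript must be non-empty")
    return edit_distance(ref, hyp) / len(ref)
```

Lower values are better, and a value above 1.0 is possible when the prediction is much longer than the reference.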
