Ge Sun

MGTraj: Multi-Granularity Goal-Guided Human Trajectory Prediction with Recursive Refinement Network

Sep 11, 2025

Abstract:Accurate human trajectory prediction is crucial for robotics navigation and autonomous driving. Recent research has demonstrated that incorporating goal guidance significantly enhances prediction accuracy by reducing uncertainty and leveraging prior knowledge. Most goal-guided approaches decouple the prediction task into two stages: goal prediction and subsequent trajectory completion based on the predicted goal, which operate at extreme granularities: coarse-grained goal prediction forecasts the overall intention, while fine-grained trajectory completion needs to generate the positions for all future timesteps. The potential utility of intermediate temporal granularity remains largely unexplored, which motivates multi-granularity trajectory modeling. While prior work has shown that multi-granularity representations capture diverse scales of human dynamics and motion patterns, effectively integrating this concept into goal-guided frameworks remains challenging. In this paper, we propose MGTraj, a novel Multi-Granularity goal-guided model for human Trajectory prediction. MGTraj recursively encodes trajectory proposals from coarse to fine granularity levels. At each level, a transformer-based recursive refinement network (RRN) captures features and predicts progressive refinements. Features across different granularities are integrated using a weight-sharing strategy, and velocity prediction is employed as an auxiliary task to further enhance performance. Comprehensive experimental results on ETH/UCY and the Stanford Drone Dataset indicate that MGTraj outperforms baseline methods and achieves state-of-the-art performance among goal-guided methods.
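To make the idea of temporal granularity concrete, the following is a minimal sketch, not the authors' recursive refinement network. It assumes a straight-line proposal from the last observed position to a predicted goal and views that proposal at several strides. The function names, the 12-step horizon, and the stride set (12, 4, 2, 1) are hypothetical choices for illustration only.

```python
def linear_proposal(start, goal, horizon):
    """Straight-line proposal: `horizon` future points, the last one at the goal."""
    return [
        (start[0] + (goal[0] - start[0]) * t / horizon,
         start[1] + (goal[1] - start[1]) * t / horizon)
        for t in range(1, horizon + 1)
    ]


def at_granularity(trajectory, stride):
    """Keep every `stride`-th future point, always ending on the final step."""
    return trajectory[stride - 1::stride]


# Hypothetical setup: 12 future steps, goal predicted at (12, 6).
proposal = linear_proposal(start=(0.0, 0.0), goal=(12.0, 6.0), horizon=12)

# Coarse to fine: stride 12 keeps only the goal, stride 1 keeps every timestep.
levels = {stride: at_granularity(proposal, stride) for stride in (12, 4, 2, 1)}
```

In this sketch the coarsest level corresponds to goal prediction alone and the finest to full trajectory completion; the intermediate strides are the granularities that the abstract describes as largely unexplored.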

The Athenian Academy: A Seven-Layer Architecture Model for Multi-Agent Systems

Apr 18, 2025

Abstract:This paper proposes the "Academy of Athens" multi-agent seven-layer framework, aimed at systematically addressing challenges in multi-agent systems (MAS) within artificial intelligence (AI) art creation, such as collaboration efficiency, role allocation, environmental adaptation, and task parallelism. The framework divides MAS into seven layers: multi-agent collaboration, single-agent multi-role playing, single-agent multi-scene traversal, single-agent multi-capability incarnation, different single agents using the same large model to achieve the same target agent, single-agent using different large models to achieve the same target agent, and multi-agent synthesis of the same target agent. Through experimental validation in art creation, the framework demonstrates its unique advantages in task collaboration, cross-scene adaptation, and model fusion. This paper further discusses current challenges such as collaboration mechanism optimization, model stability, and system security, proposing future exploration through technologies like meta-learning and federated learning. The framework provides a structured methodology for multi-agent collaboration in AI art creation and promotes innovative applications in the art field.

SATA: Safe and Adaptive Torque-Based Locomotion Policies Inspired by Animal Learning

Feb 18, 2025

Abstract:Despite recent advances in learning-based controllers for legged robots, deployments in human-centric environments remain limited by safety concerns. Most of these approaches use position-based control, where policies output target joint angles that must be processed by a low-level controller (e.g., PD or impedance controllers) to compute joint torques. Although impressive results have been achieved in controlled real-world scenarios, these methods often struggle with compliance and adaptability when encountering environments or disturbances unseen during training, potentially resulting in extreme or unsafe behaviors. Inspired by how animals achieve smooth and adaptive movements by controlling muscle extension and contraction, torque-based policies offer a promising alternative by enabling precise and direct control of the actuators in torque space. In principle, this approach facilitates more effective interactions with the environment, resulting in safer and more adaptable behaviors. However, challenges such as a highly nonlinear state space and inefficient exploration during training have hindered their broader adoption. To address these limitations, we propose SATA, a bio-inspired framework that mimics key biomechanical principles and adaptive learning mechanisms observed in animal locomotion. Our approach effectively addresses the inherent challenges of learning torque-based policies by significantly improving early-stage exploration, leading to high-performance final policies. Remarkably, our method achieves zero-shot sim-to-real transfer. Our experimental results indicate that SATA demonstrates remarkable compliance and safety, even in challenging environments such as soft/slippery terrain or narrow passages, and under significant external disturbances, highlighting its potential for practical deployments in human-centric and safety-critical scenarios.
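The difference between the two control interfaces mentioned above can be sketched as follows. This is an illustrative example only, not code from SATA: the PD law is the standard textbook form, and the gains `kp` and `kd` and all numeric values are hypothetical.

```python
def pd_torque(q_target, q, qd, kp=20.0, kd=0.5):
    """PD law: stiffness times position error, minus damping times joint velocity."""
    return kp * (q_target - q) - kd * qd


# Position-based policy: the network outputs a target joint angle, and a
# low-level PD controller turns it into a torque.
tau_position_based = pd_torque(q_target=0.3, q=0.1, qd=0.2)

# Torque-based policy: the network output is applied as the joint torque
# directly, with no intermediate PD loop (the value here is made up).
policy_output = 1.5
tau_torque_based = policy_output
```

In the position-based case the fixed gains shape how the joint reacts to disturbances, which is one reason compliance can be hard to adapt at run time; in the torque-based case the policy itself is responsible for that behavior.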

LHPF: Look back the History and Plan for the Future in Autonomous Driving

Nov 26, 2024

Abstract:Decision-making and planning in autonomous driving critically reflect the safety of the system, making effective planning imperative. Current imitation learning-based planning algorithms often merge historical trajectories with present observations to predict future candidate paths. However, these algorithms typically assess the current and historical plans independently, leading to discontinuities in driving intentions and an accumulation of errors with each step in a discontinuous plan. To tackle this challenge, this paper introduces LHPF, an imitation learning planner that integrates historical planning information. Our approach employs a historical intention aggregation module that pools historical planning intentions, which are then combined with a spatial query vector to decode the final planning trajectory. Furthermore, we incorporate a comfort auxiliary task to enhance the human-like quality of the driving behavior. Extensive experiments using both real-world and synthetic data demonstrate that LHPF not only surpasses existing advanced learning-based planners in planning performance but also marks the first instance of a purely learning-based planner outperforming the expert. Additionally, the application of the historical intention aggregation module across various backbones highlights the considerable potential of the proposed method. The code will be made publicly available.

Learning-based Hierarchical Control: Emulating the Central Nervous System for Bio-Inspired Legged Robot Locomotion

Apr 27, 2024

Abstract:Animals possess a remarkable ability to navigate challenging terrains, achieved through the interplay of various pathways between the brain, central pattern generators (CPGs) in the spinal cord, and musculoskeletal system. Traditional bioinspired control frameworks often rely on a singular control policy that models both higher (supraspinal) and spinal cord functions. In this work, we build upon our previous research by introducing two distinct neural networks: one tasked with modulating the frequency and amplitude of CPGs to generate the basic locomotor rhythm (referred to as the spinal policy, SCP), and the other responsible for receiving environmental perception data and directly modulating the rhythmic output from the SCP to execute precise movements on challenging terrains (referred to as the descending modulation policy). This division of labor more closely mimics the hierarchical locomotor control systems observed in legged animals, thereby enhancing the robot's ability to navigate various uneven surfaces, including steps, high obstacles, and terrains with gaps. Additionally, we investigate the impact of sensorimotor delays within our framework, validating several biological assumptions about animal locomotion systems. Specifically, we demonstrate that spinal circuits play a crucial role in generating the basic locomotor rhythm, while descending pathways are essential for enabling appropriate gait modifications to accommodate uneven terrain. Notably, our findings also reveal that the multi-layered control inherent in animals exhibits remarkable robustness against time delays. Through these investigations, this paper contributes to a deeper understanding of the fundamental principles of interplay between spinal and supraspinal mechanisms in biological locomotion. It also supports the development of locomotion controllers in parallel to biological structures which are ...

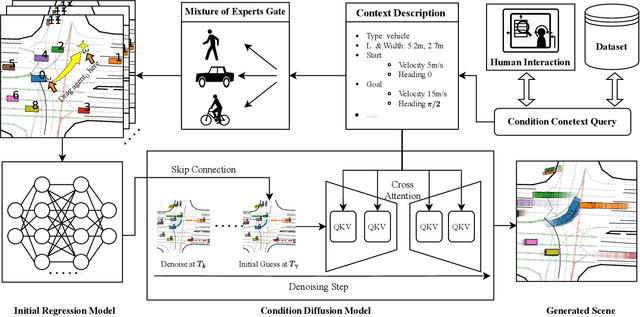

Dragtraffic: A Non-Expert Interactive and Point-Based Controllable Traffic Scene Generation Framework

Apr 19, 2024

Abstract:The evaluation and training of autonomous driving systems require diverse and scalable corner cases. However, most existing scene generation methods lack controllability, accuracy, and versatility, resulting in unsatisfactory generation results. To address this problem, we propose Dragtraffic, a generalized, point-based, and controllable traffic scene generation framework based on conditional diffusion. Dragtraffic enables non-experts to generate a variety of realistic driving scenarios for different types of traffic agents through an adaptive mixture expert architecture. We use a regression model to provide a general initial solution and a refinement process based on the conditional diffusion model to ensure diversity. User-customized context is introduced through cross-attention to ensure high controllability. Experiments on a real-world driving dataset show that Dragtraffic outperforms existing methods in terms of authenticity, diversity, and freedom.

Enhancing Campus Mobility: Achievements and Challenges of Autonomous Shuttle "Snow Lion"

Jan 17, 2024

Abstract:The rapid evolution of autonomous vehicles (AVs) has significantly influenced global transportation systems. In this context, we present "Snow Lion", an autonomous shuttle meticulously designed to revolutionize on-campus transportation, offering a safer and more efficient mobility solution for students, faculty, and visitors. The primary objective of this research is to enhance campus mobility by providing a reliable, efficient, and eco-friendly transportation solution that seamlessly integrates with existing infrastructure and meets the diverse needs of a university setting. To achieve this goal, we delve into the intricacies of the system design, encompassing sensing, perception, localization, planning, and control aspects. We evaluate the autonomous shuttle's performance in real-world scenarios, involving a 1146-kilometer road haul and the transportation of 442 passengers over a two-month period. These experiments demonstrate the effectiveness of our system and offer valuable insights into the intricate process of integrating an autonomous vehicle within campus shuttle operations. Furthermore, a thorough analysis of the lessons derived from this experience furnishes a valuable real-world case study, accompanied by recommendations for future research and development in the field of autonomous driving.

GBD-TS: Goal-based Pedestrian Trajectory Prediction with Diffusion using Tree Sampling Algorithm

Nov 25, 2023

Abstract:Predicting pedestrian trajectories is crucial for improving the safety and effectiveness of autonomous driving and mobile robots. However, this task is nontrivial due to the inherent stochasticity of human motion, which naturally requires the predictor to generate multi-modal predictions. Previous works have used various generative methods, such as GAN and VAE, for pedestrian trajectory prediction. Nevertheless, these methods may suffer from problems, including mode collapse and relatively low-quality results. The denoising diffusion probabilistic model (DDPM) has recently been applied to trajectory prediction due to its simple training process and powerful reconstruction ability. However, current diffusion-based methods are straightforward, do not fully leverage input information, and usually require either many denoising iterations, leading to a long inference time, or an additional network for initialization. To address these challenges and promote the application of diffusion models in trajectory prediction, we propose a novel scene-aware multi-modal pedestrian trajectory prediction framework called GBD. GBD combines goal prediction with the diffusion network. First, the goal predictor produces multiple goals, and then the diffusion network generates multi-modal trajectories conditioned on these goals. Furthermore, we introduce a new diffusion sampling algorithm named tree sampling (TS), which leverages common features to reduce the inference time and improve accuracy for multi-modal prediction. Experimental results demonstrate that our GBD-TS method achieves state-of-the-art performance with real-time inference speed.

DecAP: Decaying Action Priors for Accelerated Learning of Torque-Based Legged Locomotion Policies

Oct 09, 2023

Abstract:Optimal Control for legged robots has gone through a paradigm shift from position-based to torque-based control, owing to the latter's compliant and robust nature. In parallel to this shift, the community has also turned to Deep Reinforcement Learning (DRL) as a promising approach to directly learn locomotion policies for complex real-life tasks. However, most end-to-end DRL approaches still operate in position space, mainly because learning in torque space is often sample-inefficient and does not consistently converge to natural gaits. To address these challenges, we introduce Decaying Action Priors (DecAP), a novel three-stage framework to learn and deploy torque policies for legged locomotion. In the first stage, we generate our own imitation data by training a position policy, eliminating the need for expert knowledge in designing optimal controllers. The second stage incorporates decaying action priors to enhance the exploration of torque-based policies aided by imitation rewards. We show that our approach consistently outperforms imitation learning alone and is significantly robust to the scaling of these rewards. Finally, our third stage facilitates safe sim-to-real transfer by directly deploying our learned torques, alongside low-gain PID control from our trained position policy. We demonstrate the generality of our approach by training torque-based locomotion policies for a biped, a quadruped, and a hexapod robot in simulation, and experimentally demonstrate our learned policies on a quadruped (Unitree Go1).
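One way to picture a decaying action prior is sketched below. This is an assumption-laden illustration, not the DecAP implementation: it assumes the prior is a low-gain PD torque toward the position policy's target angle, added to the torque policy's output with a weight that decays exponentially over training steps. The function names, the gains, and the decay rate are all hypothetical.

```python
def prior_weight(step, decay_rate=0.999):
    """Exponentially decaying weight: 1.0 at step 0, approaching 0 as training goes on."""
    return decay_rate ** step


def blended_torque(policy_torque, q_target_prior, q, qd, step,
                   kp=5.0, kd=0.2, decay_rate=0.999):
    """Torque policy output plus a decaying PD prior from a position policy.

    q_target_prior is the joint angle suggested by the (assumed) pretrained
    position policy; kp and kd are hypothetical low gains.
    """
    prior_torque = kp * (q_target_prior - q) - kd * qd
    return policy_torque + prior_weight(step, decay_rate) * prior_torque


# Early in training the prior dominates exploration; later it fades out.
early = blended_torque(policy_torque=0.0, q_target_prior=0.4, q=0.0, qd=0.0, step=0)
late = blended_torque(policy_torque=0.0, q_target_prior=0.4, q=0.0, qd=0.0, step=10000)
```

Under these assumptions, the torque policy starts out guided toward the position policy's behavior and is gradually left to act on its own as the weight shrinks.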

Prediction of Diblock Copolymer Morphology via Machine Learning

Aug 31, 2023

Abstract:A machine learning approach is presented to accelerate the computation of block polymer morphology evolution for large domains over long timescales. The strategy exploits the separation of characteristic times between coarse-grained particle evolution on the monomer scale and slow morphological evolution over mesoscopic scales. In contrast to empirical continuum models, the proposed approach learns stochastically driven defect annihilation processes directly from particle-based simulations. A UNet architecture that respects different boundary conditions is adopted, thereby allowing periodic and fixed substrate boundary conditions of arbitrary shape. Physical concepts are also introduced via the loss function and symmetries are incorporated via data augmentation. The model is validated using three different use cases. Explainable artificial intelligence methods are applied to visualize the morphology evolution over time. This approach enables the generation of large system sizes and long trajectories to investigate defect densities and their evolution under different types of confinement. As an application, we demonstrate the importance of accessing late-stage morphologies for understanding particle diffusion inside a single block. This work has implications for directed self-assembly and materials design in micro-electronics, battery materials, and membranes.
