Tianshuo Xu

A Mechanistic View on Video Generation as World Models: State and Dynamics

Jan 22, 2026

Abstract: Large-scale video generation models have demonstrated emergent physical coherence, positioning them as potential world models. However, a gap remains between contemporary "stateless" video architectures and classic state-centric world model theories. This work bridges this gap by proposing a novel taxonomy centered on two pillars: State Construction and Dynamics Modeling. We categorize state construction into implicit paradigms (context management) and explicit paradigms (latent compression), while dynamics modeling is analyzed through knowledge integration and architectural reformulation. Furthermore, we advocate for a transition in evaluation from visual fidelity to functional benchmarks, testing physical persistence and causal reasoning. We conclude by identifying two critical frontiers: enhancing persistence via data-driven memory and compressed fidelity, and advancing causality through latent factor decoupling and reasoning-prior integration. By addressing these challenges, the field can evolve from generating visually plausible videos to building robust, general-purpose world simulators.

Occ-LLM: Enhancing Autonomous Driving with Occupancy-Based Large Language Models

Feb 10, 2025

Abstract: Large Language Models (LLMs) have made substantial advancements in the fields of robotics and autonomous driving. This study presents the first Occupancy-based Large Language Model (Occ-LLM), which represents a pioneering effort to integrate LLMs with occupancy, an important representation. To effectively encode occupancy as input for the LLM and address the category imbalances associated with occupancy, we propose the Motion Separation Variational Autoencoder (MS-VAE). This approach utilizes prior knowledge to distinguish dynamic objects from static scenes before inputting them into a tailored Variational Autoencoder (VAE). This separation enhances the model's capacity to concentrate on dynamic trajectories while effectively reconstructing static scenes. The efficacy of Occ-LLM has been validated across key tasks, including 4D occupancy forecasting, self-ego planning, and occupancy-based scene question answering. Comprehensive evaluations demonstrate that Occ-LLM significantly surpasses existing state-of-the-art methodologies, achieving gains of about 6% in Intersection over Union (IoU) and 4% in mean Intersection over Union (mIoU) for the task of 4D occupancy forecasting. These findings highlight the transformative potential of Occ-LLM in reshaping current paradigms within robotics and autonomous driving.
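
For readers unfamiliar with the two metrics quoted in the Occ-LLM abstract, the sketch below shows one common way to compute them on a flattened voxel grid. It assumes that label 0 means free space, that IoU is the binary occupied-versus-free score, and that mIoU averages per-class IoU over the semantic classes. The abstract does not define either metric, so these conventions, the function names, and the toy labels are illustrative assumptions rather than the paper's evaluation code.

```python
def occupancy_iou(pred, target, free_label=0):
    """Binary IoU: a voxel counts as occupied if its label is not free_label."""
    inter = sum(1 for p, t in zip(pred, target)
                if p != free_label and t != free_label)
    union = sum(1 for p, t in zip(pred, target)
                if p != free_label or t != free_label)
    # If neither grid has an occupied voxel, treat the match as perfect.
    return inter / union if union else 1.0


def mean_iou(pred, target, classes):
    """Average of per-class IoU, skipping classes absent from both grids."""
    ious = []
    for c in classes:
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy flattened grids (hypothetical labels: 0 = free, 1 and 2 = semantic classes).
pred = [0, 1, 1, 2, 2, 0]
target = [0, 1, 2, 2, 2, 1]

iou = occupancy_iou(pred, target)
miou = mean_iou(pred, target, classes=[1, 2])
```

On these toy labels the binary IoU is 4/5 (four voxels occupied in both grids, five occupied in either), and the mIoU is 1/2 (the average of 1/3 for class 1 and 2/3 for class 2).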

Uni-Renderer: Unifying Rendering and Inverse Rendering Via Dual Stream Diffusion

Dec 19, 2024

Abstract: Rendering and inverse rendering are pivotal tasks in both computer vision and graphics. The rendering equation is the core of the two tasks, as an ideal conditional distribution transfer function from intrinsic properties to RGB images. Although existing rendering methods achieve promising results, they merely approximate the ideal estimation for a specific scene and come with a high computational cost. Additionally, the inverse conditional distribution transfer is intractable due to the inherent ambiguity. To address these challenges, we propose a data-driven method that jointly models rendering and inverse rendering as two conditional generation tasks within a single diffusion framework. Inspired by UniDiffuser, we utilize two distinct time schedules to model both tasks, and with a tailored dual streaming module, we achieve cross-conditioning of two pre-trained diffusion models. This unified approach, named Uni-Renderer, allows the two processes to facilitate each other through a cycle-consistent constraint, mitigating ambiguity by enforcing consistency between intrinsic properties and rendered images. Combined with a meticulously prepared dataset, our method effectively decomposes intrinsic properties and demonstrates a strong capability to recognize changes during rendering. We will open-source our training and inference code to the public, fostering further research and development in this area.

DrivingRecon: Large 4D Gaussian Reconstruction Model For Autonomous Driving

Dec 12, 2024

Abstract: Photorealistic 4D reconstruction of street scenes is essential for developing real-world simulators in autonomous driving. However, most existing methods perform this task offline and rely on time-consuming iterative processes, limiting their practical applications. To this end, we introduce the Large 4D Gaussian Reconstruction Model (DrivingRecon), a generalizable driving scene reconstruction model that directly predicts 4D Gaussians from surround-view videos. To better integrate the surround-view images, the Prune and Dilate Block (PD-Block) is proposed to eliminate overlapping Gaussian points between adjacent views and remove redundant background points. To enhance cross-temporal information, dynamic and static decoupling is tailored to better learn geometry and motion features. Experimental results demonstrate that DrivingRecon significantly improves scene reconstruction quality and novel view synthesis compared to existing methods. Furthermore, we explore applications of DrivingRecon in model pre-training, vehicle adaptation, and scene editing. Our code is available at https://github.com/EnVision-Research/DriveRecon.

Motion Dreamer: Realizing Physically Coherent Video Generation through Scene-Aware Motion Reasoning

Nov 30, 2024

Abstract: Numerous recent video generation models, also known as world models, have demonstrated the ability to generate plausible real-world videos. However, many studies have shown that these models often produce motion results lacking logical or physical coherence. In this paper, we revisit video generation models and find that single-stage approaches struggle to produce high-quality results while maintaining coherent motion reasoning. To address this issue, we propose Motion Dreamer, a two-stage video generation framework. In Stage I, the model generates an intermediate motion representation, such as a segmentation map or depth map, based on the input image and motion conditions, focusing solely on the motion itself. In Stage II, the model uses this intermediate motion representation as a condition to generate a high-detail video. By decoupling motion reasoning from high-fidelity video synthesis, our approach allows for more accurate and physically plausible motion generation. We validate the effectiveness of our approach on the Physion dataset and in autonomous driving scenarios. For example, given a single push, our model can synthesize the sequential toppling of a set of dominoes. Similarly, by varying the movements of ego-cars, our model can produce different effects on other vehicles. Our work opens new avenues in creating models that can reason about physical interactions in a more coherent and realistic manner.

From Bird's-Eye to Street View: Crafting Diverse and Condition-Aligned Images with Latent Diffusion Model

Sep 02, 2024

Abstract: We explore Bird's-Eye View (BEV) generation, converting a BEV map into its corresponding multi-view street images. Valued for its unified spatial representation aiding multi-sensor fusion, BEV is pivotal for various autonomous driving applications. Creating accurate street-view images from BEV maps is essential for portraying complex traffic scenarios and enhancing driving algorithms. Concurrently, diffusion-based conditional image generation models have demonstrated remarkable outcomes, adept at producing diverse, high-quality, and condition-aligned results. Nonetheless, the training of these models demands substantial data and computational resources. Hence, exploring methods to fine-tune these advanced models, like Stable Diffusion, for specific conditional generation tasks emerges as a promising avenue. In this paper, we introduce a practical framework for generating images from a BEV layout. Our approach comprises two main components: the Neural View Transformation and the Street Image Generation. The Neural View Transformation phase converts the BEV map into aligned multi-view semantic segmentation maps by learning the shape correspondence between the BEV and perspective views. Subsequently, the Street Image Generation phase utilizes these segmentations as a condition to guide a fine-tuned latent diffusion model. This fine-tuning process ensures both view and style consistency. Our model leverages the generative capacity of large pretrained diffusion models within traffic contexts, effectively yielding diverse and condition-coherent street-view images.

Systematic Investigation of Sparse Perturbed Sharpness-Aware Minimization Optimizer

Jun 30, 2023

Abstract: Deep neural networks often suffer from poor generalization due to complex and non-convex loss landscapes. Sharpness-Aware Minimization (SAM) is a popular solution that smooths the loss landscape by minimizing the maximized change of training loss when adding a perturbation to the weight. However, the indiscriminate perturbation of SAM on all parameters is suboptimal and results in excessive computation, i.e., double the overhead of common optimizers like Stochastic Gradient Descent (SGD). In this paper, we propose Sparse SAM (SSAM), an efficient and effective training scheme that achieves sparse perturbation by a binary mask. To obtain the sparse mask, we provide two solutions based on Fisher information and dynamic sparse training, respectively. We investigate the impact of different masks, including unstructured, structured, and $N$:$M$ structured patterns, as well as explicit and implicit forms of implementing sparse perturbation. We theoretically prove that SSAM can converge at the same rate as SAM, i.e., $O(\log T/\sqrt{T})$. Sparse SAM has the potential to accelerate training and smooth the loss landscape effectively. Extensive experimental results on CIFAR and ImageNet-1K confirm that our method is superior to SAM in terms of efficiency, and the performance is preserved or even improved with a perturbation of merely 50% sparsity. Code is available at https://github.com/Mi-Peng/Systematic-Investigation-of-Sparse-Perturbed-Sharpness-Aware-Minimization-Optimizer.
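
To make the idea of a masked perturbation concrete, here is a minimal sketch of one sparse SAM-style update on a toy quadratic loss. It assumes the perturbation is the usual normalized-gradient SAM step multiplied elementwise by a binary mask; the mask is hand-picked here, whereas the abstract derives it from Fisher information or dynamic sparse training. The function names, the toy loss, and the values of `rho` and `lr` are hypothetical and are not taken from the authors' repository.

```python
import math


def loss(w):
    # Toy quadratic loss standing in for a network's training loss.
    return 0.5 * sum((i + 1) * x * x for i, x in enumerate(w))


def grad(w):
    # Analytic gradient of the toy loss above.
    return [(i + 1) * x for i, x in enumerate(w)]


def sparse_sam_step(w, mask, rho=0.05, lr=0.1):
    """One update: perturb only the masked weights, then descend."""
    g = grad(w)
    norm = math.sqrt(sum(x * x for x in g)) or 1.0  # guard against a zero gradient
    # SAM-style ascent direction, zeroed wherever mask == 0 (assumed form).
    eps = [rho * gi / norm * mi for gi, mi in zip(g, mask)]
    # Gradient evaluated at the sparsely perturbed point.
    g_perturbed = grad([wi + ei for wi, ei in zip(w, eps)])
    # Descent step applied to the original (unperturbed) weights.
    return [wi - lr * gi for wi, gi in zip(w, g_perturbed)]


w0 = [1.0, -2.0, 0.5, 3.0]
mask = [1, 0, 1, 0]  # hypothetical 50%-sparse mask, chosen by hand

first = sparse_sam_step(w0, mask)

w = list(w0)
for _ in range(50):
    w = sparse_sam_step(w, mask)
```

Coordinates with a zero mask entry receive a plain gradient step, while masked-in coordinates are updated with the gradient taken at the perturbed point. The second gradient evaluation is still present in this toy version; any speed-up from sparsity depends on how the masked perturbation is implemented in practice.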

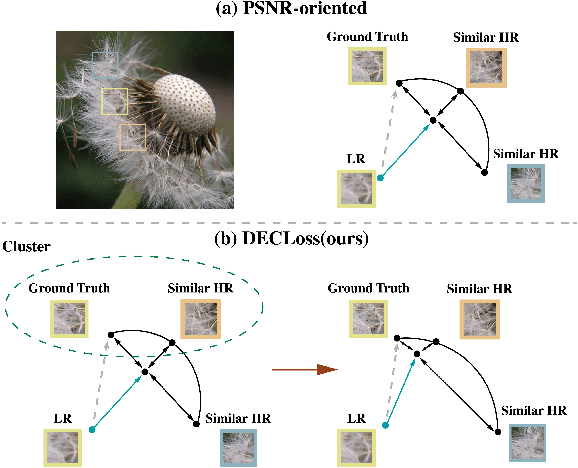

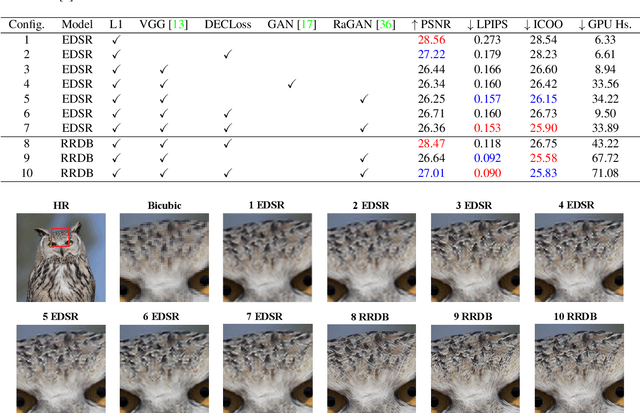

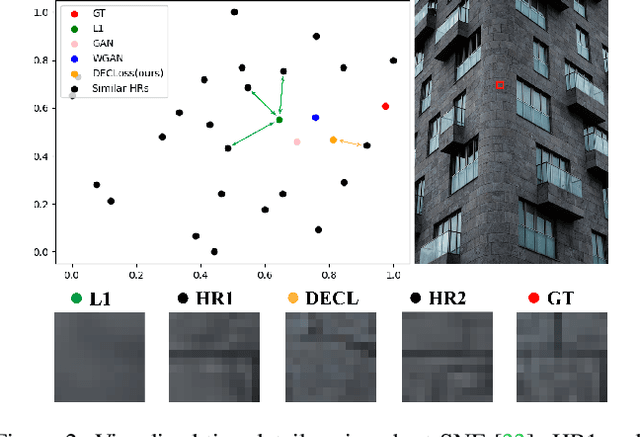

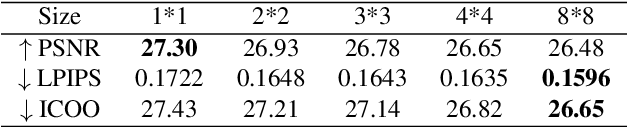

What Hinders Perceptual Quality of PSNR-oriented Methods?

Jan 04, 2022

Abstract: In this paper, we discover two factors that inhibit PSNR-oriented methods (POMs) from achieving high perceptual quality: 1) the center-oriented optimization (COO) problem and 2) the model's low-frequency tendency. First, POMs tend to generate a super-resolution (SR) image whose position in the feature space is closest to the distribution center of all potential high-resolution (HR) images, resulting in such POMs losing high-frequency details. Second, 90% of an image's area consists of low-frequency signals; in contrast, human perception relies on an image's high-frequency details. However, POMs apply the same calculation to process different-frequency areas, so that POMs tend to restore the low-frequency regions. Based on these two factors, we propose a Detail Enhanced Contrastive Loss (DECLoss), which combines a high-frequency enhancement module and a spatial contrastive learning module, to reduce the influence of the COO problem and the low-frequency tendency. Experimental results show the efficiency and effectiveness of applying DECLoss to several regular SR models. For example, with EDSR, our proposed method achieves 3.60$\times$ faster learning speed compared to a GAN-based method, with a subtle degradation in visual quality. In addition, our final results show that an SR network equipped with our DECLoss generates more realistic and visually pleasing textures compared to state-of-the-art methods.
