Ping Yang

Multi-Granularity Distribution Modeling for Video Watch Time Prediction via Exponential-Gaussian Mixture Network

Aug 18, 2025

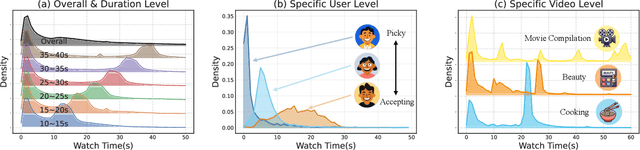

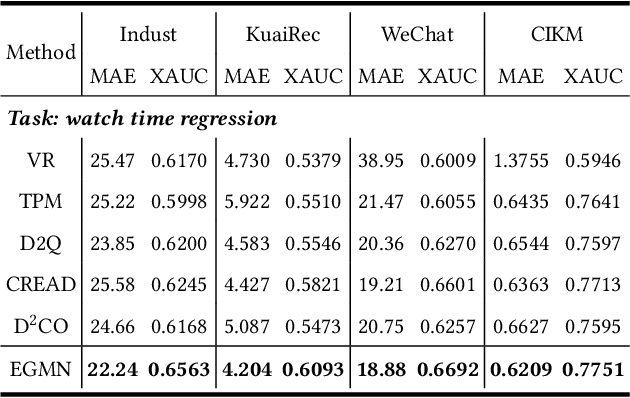

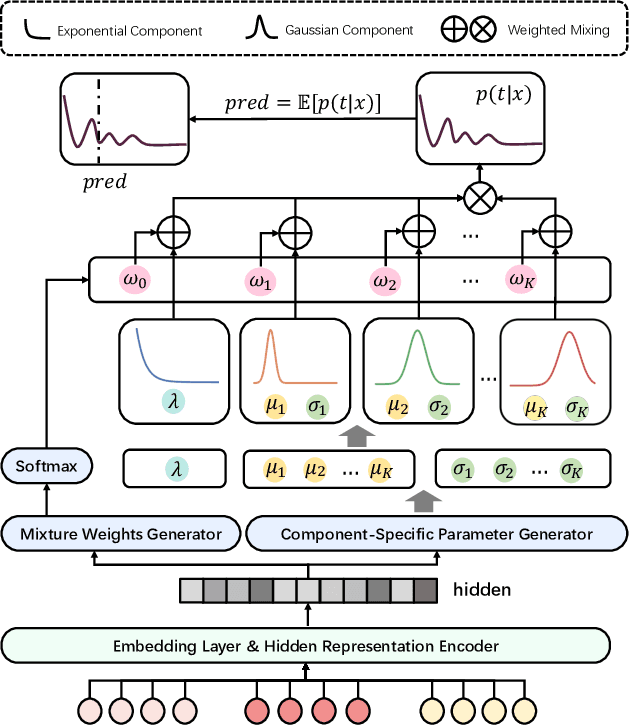

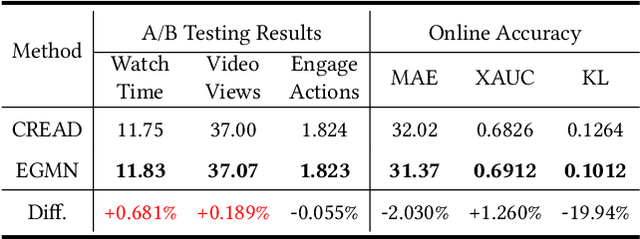

Abstract: Accurate watch time prediction is crucial for enhancing user engagement in streaming short-video platforms, although it is challenged by complex distribution characteristics across multi-granularity levels. Through systematic analysis of real-world industrial data, we uncover two critical challenges in watch time prediction from a distribution aspect: (1) coarse-grained skewness induced by a significant concentration of quick-skips, (2) fine-grained diversity arising from various user-video interaction patterns. Consequently, we assume that the watch time follows the Exponential-Gaussian Mixture (EGM) distribution, where the exponential and Gaussian components respectively characterize the skewness and diversity. Accordingly, an Exponential-Gaussian Mixture Network (EGMN) is proposed for the parameterization of EGM distribution, which consists of two key modules: a hidden representation encoder and a mixture parameter generator. We conducted extensive offline experiments on public datasets and online A/B tests on the industrial short-video feeding scenario of Xiaohongshu App to validate the superiority of EGMN compared with existing state-of-the-art methods. Remarkably, comprehensive experimental results have proven that EGMN exhibits excellent distribution fitting ability across coarse-to-fine-grained levels. We open source related code on GitHub: https://github.com/BestActionNow/EGMN.
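As a rough illustration of the mixture described in this abstract, the sketch below evaluates a density made of one exponential component (for quick-skips) and several Gaussian components (for diverse viewing patterns), plus the average negative log-likelihood that such a model could be fitted with. The abstract does not give the exact parameterization, so the function names, the single-exponential form, and the parameter layout are assumptions for illustration only, not the authors' implementation. The Gaussian components are also not truncated at zero here, so the density is only approximately normalized over non-negative watch times.

```python
import math


def egm_pdf(t, weights, rate, means, stds):
    """Density of a hypothetical Exponential-Gaussian mixture at watch time t.

    weights[0] is the weight of the exponential component and weights[1:]
    are the weights of the Gaussian components (assumed to sum to 1).
    """
    if t < 0:
        return 0.0
    # Exponential component: concentrates mass near zero (quick-skips).
    density = weights[0] * rate * math.exp(-rate * t)
    # Gaussian components: one bump per assumed interaction pattern.
    for w, mu, sigma in zip(weights[1:], means, stds):
        z = (t - mu) / sigma
        density += w * math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
    return density


def egm_nll(times, weights, rate, means, stds):
    """Average negative log-likelihood of observed watch times."""
    total = sum(math.log(egm_pdf(t, weights, rate, means, stds)) for t in times)
    return -total / len(times)
```

In a network such as the one the abstract describes, the weights, rate, means, and standard deviations would be produced per sample by a learned module rather than passed in as constants.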

Go-Oracle: Automated Test Oracle for Go Concurrency Bugs

Dec 11, 2024

Abstract: The Go programming language has gained significant traction for developing software, especially in various infrastructure systems. Nonetheless, concurrency bugs have become a prevalent issue within Go, presenting a unique challenge due to the language's dual concurrency mechanisms: communicating sequential processes and shared memory. Detecting concurrency bugs and accurately classifying program executions as pass or fail present an immense challenge, even for domain experts. We conducted a survey with expert developers at Bytedance that confirmed this challenge. Our work seeks to address the test oracle problem for Go programs, to automatically classify test executions as pass or fail. This problem has not been investigated in the literature for Go programs owing to its distinctive programming model. Our approach involves collecting both passing and failing execution traces from various subject Go programs. We capture a comprehensive array of execution events using the native Go execution tracer. Subsequently, we preprocess and encode these traces before training a transformer-based neural network to effectively classify the traces as either passing or failing. The evaluation of our approach encompasses 8 subject programs sourced from the GoBench repository. These subject programs are routinely used as benchmarks in an industry setting. Encouragingly, our test oracle, Go-Oracle, demonstrates high accuracies even when operating with a limited dataset, showcasing the efficacy and potential of our methodology. Developers at Bytedance strongly agreed that they would use the Go-Oracle tool over the current practice of manual inspections to classify tests for Go programs as pass or fail.

RepoMasterEval: Evaluating Code Completion via Real-World Repositories

Aug 07, 2024

Abstract: With the growing reliance on automated code completion tools in software development, the need for robust evaluation benchmarks has become critical. However, existing benchmarks focus more on code generation tasks at the function and class level and provide rich text descriptions to prompt the model. By contrast, such descriptive prompts are commonly unavailable in real development, and code completion can occur in a wider range of situations, such as in the middle of a function or a code block. These limitations make the evaluation align poorly with the practical scenarios of code completion tools. In this paper, we propose RepoMasterEval, a novel benchmark for evaluating code completion models constructed from real-world Python and TypeScript repositories. Each benchmark datum is generated by masking a code snippet (ground truth) from one source code file with existing test suites. To improve test accuracy of model generated code, we employ mutation testing to measure the effectiveness of the test cases and we manually crafted new test cases for those test suites with low mutation score. Our empirical evaluation on 6 state-of-the-art models shows that test augmentation is critical in improving the accuracy of the benchmark and RepoMasterEval is able to report differences in model performance in real-world scenarios. The deployment of RepoMasterEval in a collaborating company for one month also revealed that the benchmark is useful to give accurate feedback during model training and the score is in high correlation with the model's performance in practice. Based on our findings, we call for the software engineering community to build more LLM benchmarks tailored for code generation tools taking the practical and complex development environment into consideration.
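The mutation-testing step mentioned in this abstract can be pictured with a small sketch. It computes the standard mutation score (the fraction of mutants that at least one test detects) and flags test suites whose score falls below a cutoff, which is where new test cases would be written. The function names, the input layout, and the 0.5 threshold are hypothetical; the abstract does not state how RepoMasterEval computes or thresholds the score.

```python
def mutation_score(killed_by_mutant):
    """Fraction of mutants killed (detected) by the test suite.

    killed_by_mutant maps a mutant id to True if any test failed on it.
    """
    if not killed_by_mutant:
        raise ValueError("no mutants were generated")
    killed = sum(1 for was_killed in killed_by_mutant.values() if was_killed)
    return killed / len(killed_by_mutant)


def suites_needing_tests(scores, threshold=0.5):
    """Names of test suites whose mutation score is below the (assumed) threshold."""
    return sorted(name for name, score in scores.items() if score < threshold)
```

For example, a suite that detects three of four mutants scores 0.75 and would not be flagged under the assumed cutoff.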

VersiCode: Towards Version-controllable Code Generation

Jun 11, 2024

Abstract: Significant research has focused on improving the performance of large language models on code-related tasks due to their practical importance. Although performance is typically evaluated using public benchmark datasets, the existing datasets do not account for the concept of version, which is crucial in professional software development. In this paper, we introduce VersiCode, the first comprehensive dataset designed to assess the ability of large language models to generate verifiable code for specific library versions. VersiCode encompasses 300 libraries across more than 2,000 versions spanning 9 years. We design two dedicated evaluation tasks: version-specific code completion (VSCC) and version-aware code editing (VACE). Comprehensive experiments are conducted to benchmark the performance of LLMs, revealing that these tasks and VersiCode are challenging: even state-of-the-art LLMs struggle to generate version-correct code. This dataset, together with the proposed tasks, sheds light on LLMs' capabilities and limitations in handling version-specific code generation, and opens up an important new area of research for further investigation. The resources can be found at https://github.com/wutong8023/VersiCode.

Ray-driven Spectral CT Reconstruction Based on Neural Base-Material Fields

Apr 10, 2024

Abstract: In spectral CT reconstruction, basis material decomposition involves solving a large-scale nonlinear system of integral equations, which is highly ill-posed mathematically. This paper proposes a model that parameterizes the attenuation coefficients of the object using a neural field representation, thereby avoiding the complex calculations of pixel-driven projection coefficient matrices during the discretization process of line integrals. It introduces a lightweight discretization method for line integrals based on a ray-driven neural field, enhancing the accuracy of the integral approximation during the discretization process. The basis materials are represented as continuous vector-valued implicit functions to establish a neural field parameterization model for the basis materials. The auto-differentiation framework of deep learning is then used to solve the implicit continuous function of the neural base-material fields. This method is not limited by the spatial resolution of reconstructed images, and the network has compact and regular properties. Experimental validation shows that our method performs exceptionally well on spectral CT reconstruction. Additionally, it fulfils the requirements for the generation of high-resolution reconstruction images.

Self-Evolving Wireless Communications: A Novel Intelligence Trend for 6G and Beyond

Apr 07, 2024

Abstract: Wireless communication is rapidly evolving, and future wireless communications (6G and beyond) will be more heterogeneous, multi-layered, and complex, which poses challenges to traditional communications. Adaptive technologies in traditional communication systems respond to environmental changes by modifying system parameters and structures on their own and are not flexible and agile enough to satisfy requirements in future communications. To tackle these challenges, we propose a novel self-evolving communication framework, which consists of three layers: data layer, information layer, and knowledge layer. The first two layers allow communication systems to sense environments, fuse data, and generate a knowledge base for the knowledge layer. When dealing with a variety of application scenarios and environments, the generated knowledge is subsequently fed back to the first two layers for communication in practical application scenarios to obtain self-evolving ability and enhance the robustness of the system. In this paper, we first highlight the limitations of current adaptive communication systems and the need for intelligence, automation, and self-evolution in future wireless communications. We overview the development of self-evolving technologies and conceive the concept of self-evolving communications with its hypothetical architecture. To demonstrate the power of self-evolving modules, we compare the performances of a communication system with and without evolution. We then provide some potential techniques that enable self-evolving communications and challenges in implementing them.

DevBench: A Comprehensive Benchmark for Software Development

Mar 15, 2024

Abstract: Recent advancements in large language models (LLMs) have significantly enhanced their coding capabilities. However, existing benchmarks predominantly focus on simplified or isolated aspects of programming, such as single-file code generation or repository issue debugging, falling short of measuring the full spectrum of challenges raised by real-world programming activities. To this end, we propose DevBench, a comprehensive benchmark that evaluates LLMs across various stages of the software development lifecycle, including software design, environment setup, implementation, acceptance testing, and unit testing. DevBench features a wide range of programming languages and domains, high-quality data collection, and carefully designed and verified metrics for each task. Empirical studies show that current LLMs, including GPT-4-Turbo, fail to solve the challenges presented within DevBench. Analyses reveal that models struggle with understanding the complex structures in the repository, managing the compilation process, and grasping advanced programming concepts. Our findings offer actionable insights for the future development of LLMs toward real-world programming applications. Our benchmark is available at https://github.com/open-compass/DevBench

Ziya2: Data-centric Learning is All LLMs Need

Nov 06, 2023

Abstract: Various large language models (LLMs) have been proposed in recent years, including closed- and open-source ones, continually setting new records on multiple benchmarks. However, the development of LLMs still faces several issues, such as the high cost of training models from scratch and continual pre-training leading to catastrophic forgetting. Although many such issues are addressed along the line of research on LLMs, an important yet practical limitation is that many studies overly pursue enlarging model sizes without comprehensively analyzing and optimizing the use of pre-training data in their learning process, as well as appropriate organization and leveraging of such data in training LLMs under cost-effective settings. In this work, we propose Ziya2, a model with 13 billion parameters adopting LLaMA2 as the foundation model, and further pre-trained on 700 billion tokens, where we focus on pre-training techniques and use data-centric optimization to enhance the learning process of Ziya2 on different stages. Experiments show that Ziya2 significantly outperforms other models in multiple benchmarks especially with promising results compared to representative open-source ones. Ziya2 (Base) is released at https://huggingface.co/IDEA-CCNL/Ziya2-13B-Base and https://modelscope.cn/models/Fengshenbang/Ziya2-13B-Base/summary.

Hawkeye: Change-targeted Testing for Android Apps based on Deep Reinforcement Learning

Sep 04, 2023

Abstract: Android Apps are frequently updated to keep up with changing user, hardware, and business demands. Ensuring the correctness of App updates through extensive testing is crucial to avoid potential bugs reaching the end user. Existing Android testing tools generate GUI events focussing on improving the test coverage of the entire App rather than prioritising updates and their impacted elements. Recent research has proposed change-focused testing but relies on random exploration to exercise the updates and impacted GUI elements, which is ineffective and slow for large complex Apps with a huge input exploration space. We propose directed testing of App updates with Hawkeye that is able to prioritise executing GUI actions associated with code changes based on deep reinforcement learning from historical exploration data. Our empirical evaluation compares Hawkeye with state-of-the-art model-based and reinforcement learning-based testing tools FastBot2 and ARES using 10 popular open-source and 1 commercial App. We find that Hawkeye is able to generate GUI event sequences targeting changed functions more reliably than FastBot2 and ARES for the open source Apps and the large commercial App. Hawkeye achieves comparable performance on smaller open source Apps with a more tractable exploration space. The industrial deployment of Hawkeye in the development pipeline also shows that Hawkeye is ideal to perform smoke testing for merge requests of a complicated commercial App.

UniEX: An Effective and Efficient Framework for Unified Information Extraction via a Span-extractive Perspective

May 22, 2023

Abstract: We propose a new paradigm for universal information extraction (IE) that is compatible with any schema format and applicable to a list of IE tasks, such as named entity recognition, relation extraction, event extraction and sentiment analysis. Our approach converts the text-based IE tasks into a token-pair problem, which uniformly disassembles all extraction targets into joint span detection, classification and association problems with a unified extractive framework, namely UniEX. UniEX can synchronously encode schema-based prompt and textual information, and collaboratively learn the generalized knowledge from pre-defined information using the auto-encoder language models. We develop a traffine attention mechanism to integrate heterogeneous factors including tasks, labels and inside tokens, and obtain the extraction target via a scoring matrix. Experiment results show that UniEX can outperform generative universal IE models in terms of performance and inference-speed on 14 benchmark IE datasets in the supervised setting. The state-of-the-art performance in low-resource scenarios also verifies the transferability and effectiveness of UniEX.
