Yiming Zhao

Vision-DeepResearch Benchmark: Rethinking Visual and Textual Search for Multimodal Large Language Models

Feb 02, 2026

Abstract:Multimodal Large Language Models (MLLMs) have advanced VQA and now support Vision-DeepResearch systems that use search engines for complex visual-textual fact-finding. However, evaluating these visual and textual search abilities is still difficult, and existing benchmarks have two major limitations. First, existing benchmarks are not visual search-centric: answers that should require visual search are often leaked through cross-textual cues in the text questions or can be inferred from the prior world knowledge in current MLLMs. Second, their evaluation scenarios are overly idealized: on the image-search side, the required information can often be obtained via near-exact matching against the full image, while the text-search side is overly direct and insufficiently challenging. To address these issues, we construct the Vision-DeepResearch benchmark (VDR-Bench) comprising 2,000 VQA instances. All questions are created via a careful, multi-stage curation pipeline and rigorous expert review, designed to assess the behavior of Vision-DeepResearch systems under realistic conditions. Moreover, to address the insufficient visual retrieval capabilities of current MLLMs, we propose a simple multi-round cropped-search workflow. This strategy is shown to effectively improve model performance in realistic visual retrieval scenarios. Overall, our results provide practical guidance for the design of future multimodal deep-research systems. The code will be released at https://github.com/Osilly/Vision-DeepResearch.

UTDesign: A Unified Framework for Stylized Text Editing and Generation in Graphic Design Images

Dec 23, 2025

Abstract:AI-assisted graphic design has emerged as a powerful tool for automating the creation and editing of design elements such as posters, banners, and advertisements. While diffusion-based text-to-image models have demonstrated strong capabilities in visual content generation, their text rendering performance, particularly for small-scale typography and non-Latin scripts, remains limited. In this paper, we propose UTDesign, a unified framework for high-precision stylized text editing and conditional text generation in design images, supporting both English and Chinese scripts. Our framework introduces a novel DiT-based text style transfer model trained from scratch on a synthetic dataset, capable of generating transparent RGBA text foregrounds that preserve the style of reference glyphs. We further extend this model into a conditional text generation framework by training a multi-modal condition encoder on a curated dataset with detailed text annotations, enabling accurate, style-consistent text synthesis conditioned on background images, prompts, and layout specifications. Finally, we integrate our approach into a fully automated text-to-design (T2D) pipeline by incorporating pre-trained text-to-image (T2I) models and an MLLM-based layout planner. Extensive experiments demonstrate that UTDesign achieves state-of-the-art performance among open-source methods in terms of stylistic consistency and text accuracy, and also exhibits unique advantages compared to proprietary commercial approaches. Code and data for this paper are available at https://github.com/ZYM-PKU/UTDesign.

MaP-AVR: A Meta-Action Planner for Agents Leveraging Vision Language Models and Retrieval-Augmented Generation

Dec 22, 2025

Abstract:Embodied robotic AI systems designed to manage complex daily tasks rely on a task planner to understand and decompose high-level tasks. While most research focuses on enhancing the task-understanding abilities of LLMs/VLMs through fine-tuning or chain-of-thought prompting, this paper argues that defining the planned skill set is equally crucial. To handle the complexity of daily environments, the skill set should possess a high degree of generalization ability. Empirically, more abstract expressions tend to be more generalizable. Therefore, we propose to abstract the planned result as a set of meta-actions. Each meta-action comprises three components: {move/rotate, end-effector status change, relationship with the environment}. This abstraction replaces human-centric concepts, such as grasping or pushing, with the robot's intrinsic functionalities. As a result, the planned outcomes align seamlessly with the complete range of actions that the robot is capable of performing. Furthermore, to ensure that the LLM/VLM accurately produces the desired meta-action format, we employ the Retrieval-Augmented Generation (RAG) technique, which leverages a database of human-annotated planning demonstrations to facilitate in-context learning. As the system successfully completes more tasks, the database will self-augment to continue supporting diversity. The meta-action set and its integration with RAG are two novel contributions of our planner, denoted as MaP-AVR, the meta-action planner for agents composed of VLM and RAG. To validate its efficacy, we design experiments using GPT-4o as the pre-trained LLM/VLM model and OmniGibson as our robotic platform. Our approach demonstrates promising performance compared to the current state-of-the-art method. Project page: https://map-avr.github.io/.
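To make the three-component meta-action concrete, here is a minimal Python sketch of how such a tuple might be represented and rendered as text. The class name, field names, and example values (`MetaAction`, `end_effector`, "above cup", and so on) are hypothetical illustrations and are not taken from the MaP-AVR paper or code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Motion(Enum):
    """First component: the robot either moves or rotates."""
    MOVE = "move"
    ROTATE = "rotate"


@dataclass(frozen=True)
class MetaAction:
    """Hypothetical container for one {motion, end-effector, relation} triple."""
    motion: Motion      # move or rotate
    end_effector: str   # end-effector status change (assumed free-form label)
    relation: str       # relationship with the environment (assumed free-form label)


def format_plan(plan: List[MetaAction]) -> str:
    """Render a plan as numbered lines in a brace-delimited triple format."""
    return "\n".join(
        f"{i}. {{{a.motion.value}, {a.end_effector}, {a.relation}}}"
        for i, a in enumerate(plan, start=1)
    )


# Illustrative plan; the labels are made up for this example.
plan = [
    MetaAction(Motion.MOVE, "open", "above cup"),
    MetaAction(Motion.MOVE, "close", "contact with cup"),
]
print(format_plan(plan))
```

A fixed, typed structure like this is one way a planner's output could be validated before execution; the actual format used by the authors may differ.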

CoS: Towards Optimal Event Scheduling via Chain-of-Scheduling

Nov 17, 2025

Abstract:Recommending event schedules is a key issue in Event-based Social Networks (EBSNs) in order to maintain user activity. An effective recommendation is required to maximize the user's preference, subject to both time and geographical constraints. Existing methods face an inherent trade-off among efficiency, effectiveness, and generalization, due to the NP-hard nature of the problem. This paper proposes the Chain-of-Scheduling (CoS) framework, which activates the event scheduling capability of Large Language Models (LLMs) through a guided, efficient scheduling process. CoS enhances LLMs by formulating the scheduling task into three atomic stages, i.e., exploration, verification, and integration. Then we enable the LLMs to generate CoS autonomously via Knowledge Distillation (KD). Experimental results show that CoS achieves near-theoretical optimal effectiveness with high efficiency on three real-world datasets in an interpretable manner. Moreover, it demonstrates strong zero-shot learning ability on out-of-domain data.

Comparison Research of Millimeter-Wave/Infrared Co-aperture Reflector Antenna Systems Based on a Specialized Film

Apr 27, 2025

Abstract:This paper presents a novel co-aperture reflector antenna operating in millimeter-wave (MMW) and infrared (IR) for cloud detection radar. The proposed design combines a back-fed dual-reflector antenna, an IR optical reflection system, and a specialized thin film with IR-reflective/MMW-transmissive properties. Simulations demonstrate a gain exceeding 50 dBi within a 94 GHz ± 500 MHz bandwidth, with 0.46° beamwidth in both azimuth (E-plane) and elevation (H-plane) and sidelobe levels below -25 dB. This co-aperture architecture addresses the limitations of standalone MMW and IR radars, enabling high-resolution cloud microphysical parameter retrieval while minimizing system footprint. The design lays a foundation for airborne/spaceborne multi-mode detection systems.
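As a rough plausibility check on the reported figures, the sketch below applies the common pencil-beam rule of thumb D ≈ 41253 / (θ_E · θ_H), with beamwidths in degrees, to the stated 0.46° beamwidth. This is a textbook approximation for ideal directivity, not the authors' simulation method, and it ignores aperture efficiency and losses; the function name is an assumption made for illustration.

```python
import math


def approx_directivity_dbi(beamwidth_e_deg: float, beamwidth_h_deg: float) -> float:
    """Rule-of-thumb directivity of a narrow pencil beam, in dBi.

    41253 is the number of square degrees in a full sphere; dividing it by
    the product of the two half-power beamwidths estimates the directivity.
    """
    directivity = 41253.0 / (beamwidth_e_deg * beamwidth_h_deg)
    return 10.0 * math.log10(directivity)


# Beamwidth of 0.46 degrees in both planes, as stated in the abstract.
estimate = approx_directivity_dbi(0.46, 0.46)
print(f"approximate directivity: {estimate:.1f} dBi")
```

The estimate comes out a few dB above 50 dBi, which is in line with the abstract's claim of a gain exceeding 50 dBi once realistic efficiency losses are allowed for.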

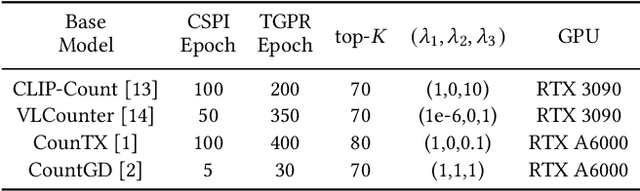

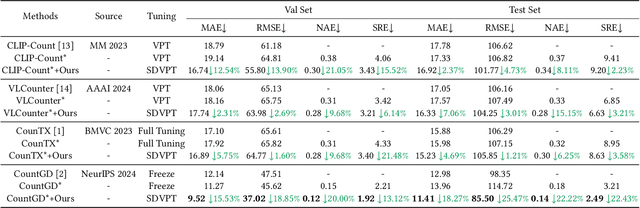

SDVPT: Semantic-Driven Visual Prompt Tuning for Open-World Object Counting

Apr 24, 2025

Abstract:Open-world object counting leverages the robust text-image alignment of pre-trained vision-language models (VLMs) to enable counting of arbitrary categories in images specified by textual queries. However, widely adopted naive fine-tuning strategies concentrate exclusively on text-image consistency for categories contained in training, which leads to limited generalizability for unseen categories. In this work, we propose a plug-and-play Semantic-Driven Visual Prompt Tuning framework (SDVPT) that transfers knowledge from the training set to unseen categories with minimal overhead in parameters and inference time. First, we introduce a two-stage visual prompt learning strategy composed of Category-Specific Prompt Initialization (CSPI) and Topology-Guided Prompt Refinement (TGPR). The CSPI generates category-specific visual prompts, and then TGPR distills latent structural patterns from the VLM's text encoder to refine these prompts. During inference, we dynamically synthesize the visual prompts for unseen categories based on the semantic correlation between unseen and training categories, facilitating robust text-image alignment for unseen categories. Extensive experiments integrating SDVPT with all available open-world object counting models demonstrate its effectiveness and adaptability across three widely used datasets: FSC-147, CARPK, and PUCPR+.

VCR-Bench: A Comprehensive Evaluation Framework for Video Chain-of-Thought Reasoning

Apr 10, 2025

Abstract:The advancement of Chain-of-Thought (CoT) reasoning has significantly enhanced the capabilities of large language models (LLMs) and large vision-language models (LVLMs). However, a rigorous evaluation framework for video CoT reasoning remains absent. Current video benchmarks fail to adequately assess the reasoning process and expose whether failures stem from deficiencies in perception or reasoning capabilities. Therefore, we introduce VCR-Bench, a novel benchmark designed to comprehensively evaluate LVLMs' Video Chain-of-Thought Reasoning capabilities. VCR-Bench comprises 859 videos spanning a variety of video content and durations, along with 1,034 high-quality question-answer pairs. Each pair is manually annotated with a stepwise CoT rationale, where every step is tagged to indicate its association with the perception or reasoning capabilities. Furthermore, we design seven distinct task dimensions and propose the CoT score to assess the entire CoT process based on the stepwise tagged CoT rationales. Extensive experiments on VCR-Bench highlight substantial limitations in current LVLMs. Even the top-performing model, o1, only achieves a 62.8% CoT score and a 56.7% accuracy, while most models score below 40%. Experiments show most models score lower on perception than reasoning steps, revealing LVLMs' key bottleneck in temporal-spatial information processing for complex video reasoning. A robust positive correlation between the CoT score and accuracy confirms the validity of our evaluation framework and underscores the critical role of CoT reasoning in solving complex video reasoning tasks. We hope VCR-Bench will serve as a standardized evaluation framework and expose the actual drawbacks in complex video reasoning tasks.

ART: Anonymous Region Transformer for Variable Multi-Layer Transparent Image Generation

Feb 25, 2025

Abstract:Multi-layer image generation is a fundamental task that enables users to isolate, select, and edit specific image layers, thereby revolutionizing interactions with generative models. In this paper, we introduce the Anonymous Region Transformer (ART), which facilitates the direct generation of variable multi-layer transparent images based on a global text prompt and an anonymous region layout. Inspired by Schema theory, which suggests that knowledge is organized in frameworks (schemas) that enable people to interpret and learn from new information by linking it to prior knowledge, this anonymous region layout allows the generative model to autonomously determine which set of visual tokens should align with which text tokens, in contrast to the previously dominant semantic layout for the image generation task. In addition, the layer-wise region crop mechanism, which only selects the visual tokens belonging to each anonymous region, significantly reduces attention computation costs and enables the efficient generation of images with numerous distinct layers (e.g., 50+). When compared to the full attention approach, our method is over 12 times faster and exhibits fewer layer conflicts. Furthermore, we propose a high-quality multi-layer transparent image autoencoder that supports the direct encoding and decoding of the transparency of variable multi-layer images in a joint manner. By enabling precise control and scalable layer generation, ART establishes a new paradigm for interactive content creation.

Optimization-free Smooth Control Barrier Function for Polygonal Collision Avoidance

Feb 22, 2025

Abstract:Polygonal collision avoidance (PCA) refers to the problem of collision avoidance between two polygons (i.e., planar polytopes), each governed by its own dynamic equations. This problem suffers from the inherent difficulty of dealing with non-smooth boundaries, and recently optimization-defined metrics, such as the signed distance field (SDF) and its variants, have been proposed as control barrier functions (CBFs) to tackle PCA problems. In contrast, we propose an optimization-free smooth CBF method in this paper, which is computationally efficient and proven to be nonconservative. It is achieved in three main steps: first, a lower bound of the SDF is expressed as a nested Boolean logic composition; then, its smooth approximation is established by applying the latest log-sum-exp method; finally, a specified CBF-based safety filter is proposed to address this class of problems. To illustrate its wide applications, the optimization-free smooth CBF method is extended to solve distributed collision avoidance of two underactuated nonholonomic vehicles and to drive an underactuated container crane to avoid a moving obstacle, for both of which numerical simulations are also performed.
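The log-sum-exp step can be illustrated with a generic Python sketch of smooth surrogates for `max` and `min`, the two operators a nested Boolean composition is typically built from. This shows the standard log-sum-exp technique only; the function names, the sharpness parameter `k`, and the sample values are assumptions, and the paper's specific composition and safety filter are not reproduced here.

```python
import math
from typing import Sequence


def smooth_max(values: Sequence[float], k: float = 10.0) -> float:
    """Log-sum-exp over-approximation of max(values).

    Satisfies max(values) <= result <= max(values) + log(n) / k,
    so a larger k gives a tighter (but less smooth) approximation.
    """
    m = max(values)  # subtract the maximum for numerical stability
    return m + math.log(sum(math.exp(k * (v - m)) for v in values)) / k


def smooth_min(values: Sequence[float], k: float = 10.0) -> float:
    """Log-sum-exp under-approximation of min(values).

    Satisfies min(values) - log(n) / k <= result <= min(values).
    """
    return -smooth_max([-v for v in values], k)


# Made-up sample values standing in for per-edge signed distances.
distances = [0.3, -1.2, 0.8]
print(smooth_max(distances, k=50.0), max(distances))
print(smooth_min(distances, k=50.0), min(distances))
```

Because `smooth_min` never exceeds the true minimum, using it in place of a hard `min` errs on the safe side when the value is treated as a barrier; how the over- and under-approximations combine in a nested expression depends on the composition used.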

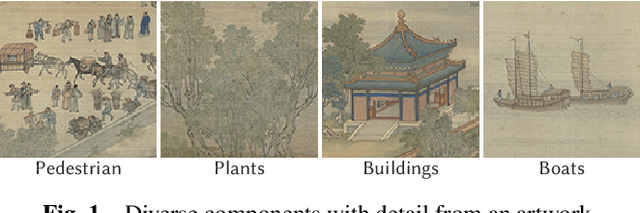

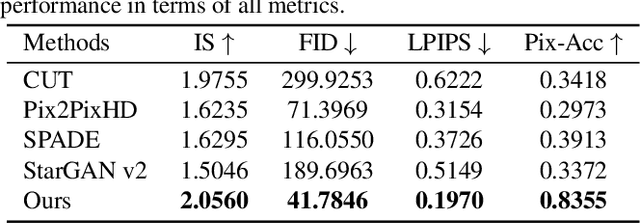

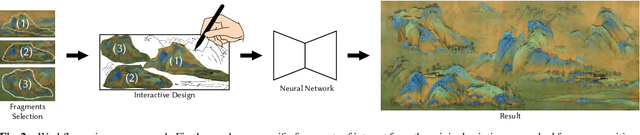

Neural-Polyptych: Content Controllable Painting Recreation for Diverse Genres

Sep 29, 2024

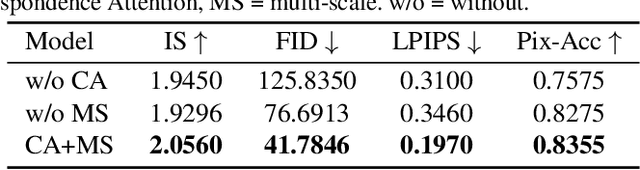

Abstract:To bridge the gap between artists and non-specialists, we present a unified framework, Neural-Polyptych, to facilitate the creation of expansive, high-resolution paintings by seamlessly incorporating interactive hand-drawn sketches with fragments from original paintings. We have designed a multi-scale GAN-based architecture to decompose the generation process into two parts, each responsible for identifying global and local features. To enhance the fidelity of semantic details generated from users' sketched outlines, we introduce a Correspondence Attention module utilizing our Reference Bank strategy. This ensures the creation of high-quality, intricately detailed elements within the artwork. The final result is achieved by carefully blending these local elements while preserving coherent global consistency. Consequently, this methodology enables the production of digital paintings at megapixel scale, accommodating diverse artistic expressions and enabling users to recreate content in a controlled manner. We validate our approach on diverse genres of both Eastern and Western paintings. Applications such as large painting extension, texture shuffling, genre switching, mural art restoration, and recomposition can be successfully built on our framework.