Hai Lin

Victor

MA-enhanced Mixed Near-field and Far-field Covert Communications

Nov 11, 2025

Abstract: In this paper, we propose to employ a modular movable extremely large-scale array (XL-array) at Alice to enhance covert communication performance. Compared with existing work that mostly considered either far-field or near-field covert communications, we consider a more general and practical mixed-field scenario, where multiple Bobs are located in either the near-field or the far-field of Alice, in the presence of multiple near-field Willies. Specifically, we first consider a two-Bob-one-Willie system and show that conventional fixed-position XL-arrays suffer from degraded sum-rate performance due to the energy-spread effect in mixed-field systems, which can, however, be greatly improved by subarray movement. On the other hand, for transmission covertness, we reveal that a sufficient angle difference between far-field Bob and Willie, as well as an adequate range difference between near-field Bob and Willie, is necessary for ensuring covertness in fixed-position XL-array systems, whereas this requirement can be relaxed in movable XL-array systems thanks to flexible control of the channel correlation between Bobs and Willie. Next, for general system setups, we formulate an optimization problem to maximize the achievable sum-rate under a covertness constraint. To solve this non-convex optimization problem, we decompose it into two subproblems: an inner problem that optimizes beamforming for given subarray positions and an outer problem that optimizes subarray movement. Although both subproblems are still non-convex, we obtain high-quality solutions by using the successive convex approximation technique and devising a customized differential evolution algorithm, respectively. Finally, numerical results demonstrate the effectiveness of the proposed movable XL-array in balancing sum-rate and covert communication requirements.
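The energy-spread effect can be illustrated with a minimal sketch (not the authors' system model) that compares a far-field (planar-wavefront) beam with a near-field (spherical-wavefront) user channel for a uniform linear array. The 30 GHz carrier, the 256-element half-wavelength array, and the helper names `element_positions`, `far_field_steering`, `near_field_steering`, and `correlation` are illustrative assumptions. A beam steered with the far-field model toward a user's angle captures almost all of the channel energy when the user is very far away, but only a small fraction when the user sits a few metres from the array.

```python
import numpy as np


def element_positions(num_elements: int, spacing: float) -> np.ndarray:
    """Element coordinates (m) of a uniform linear array centred at the origin."""
    n = np.arange(num_elements)
    return (n - (num_elements - 1) / 2) * spacing


def far_field_steering(theta: float, pos: np.ndarray, wavelength: float) -> np.ndarray:
    """Planar-wavefront model: the path difference of each element is -pos * sin(theta)."""
    path_diff = -pos * np.sin(theta)
    return np.exp(-2j * np.pi / wavelength * path_diff) / np.sqrt(pos.size)


def near_field_steering(theta: float, r: float, pos: np.ndarray, wavelength: float) -> np.ndarray:
    """Spherical-wavefront model: exact element-to-user distance minus the reference range r."""
    dist = np.sqrt(r**2 + pos**2 - 2 * r * pos * np.sin(theta))
    return np.exp(-2j * np.pi / wavelength * (dist - r)) / np.sqrt(pos.size)


def correlation(a: np.ndarray, b: np.ndarray) -> float:
    """|a^H b| for unit-norm vectors; 1 means the beam fully captures the user's channel."""
    return float(np.abs(np.vdot(a, b)))


wavelength = 3e8 / 30e9                        # assumed 30 GHz carrier (1 cm wavelength)
pos = element_positions(256, wavelength / 2)   # assumed 256 half-wavelength-spaced elements
theta = np.deg2rad(0.0)
beam = far_field_steering(theta, pos, wavelength)
near = correlation(beam, near_field_steering(theta, 5.0, pos, wavelength))   # user 5 m away
far = correlation(beam, near_field_steering(theta, 1e5, pos, wavelength))    # user 100 km away
print(f"correlation at 5 m: {near:.3f}, at 100 km: {far:.3f}")
```

The same correlation measure, evaluated between a Bob channel and a Willie channel, is the kind of quantity that subarray movement can reshape; this sketch only covers fixed positions.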

LexSemBridge: Fine-Grained Dense Representation Enhancement through Token-Aware Embedding Augmentation

Aug 25, 2025

Abstract: As queries in retrieval-augmented generation (RAG) pipelines powered by large language models (LLMs) become increasingly complex and diverse, dense retrieval models have demonstrated strong performance in semantic matching. Nevertheless, they often struggle with fine-grained retrieval tasks, where precise keyword alignment and span-level localization are required, even in cases with high lexical overlap that would intuitively suggest easier retrieval. To systematically evaluate this limitation, we introduce two targeted tasks, keyword retrieval and part-of-passage retrieval, designed to simulate practical fine-grained scenarios. Motivated by these observations, we propose LexSemBridge, a unified framework that enhances dense query representations through fine-grained, input-aware vector modulation. LexSemBridge constructs latent enhancement vectors from input tokens using three paradigms: Statistical (SLR), Learned (LLR), and Contextual (CLR), and integrates them with dense embeddings via element-wise interaction. Theoretically, we show that this modulation preserves the semantic direction while selectively amplifying discriminative dimensions. LexSemBridge operates as a plug-in without modifying the backbone encoder and naturally extends to both text and vision modalities. Extensive experiments across semantic and fine-grained retrieval tasks validate the effectiveness and generality of our approach. All code and models are publicly available at https://github.com/Jasaxion/LexSemBridge/
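The claim that element-wise modulation keeps the semantic direction while amplifying selected dimensions can be checked numerically. The sketch below is a hypothetical stand-in, not LexSemBridge's SLR, LLR, or CLR: `lexical_enhancement` deterministically maps each token id to a few coordinates, and `modulate` scales the embedding by `1 + alpha * v`. The token ids, the 768-dimensional random query vector, and `alpha = 0.1` are all assumptions for illustration.

```python
import numpy as np


def lexical_enhancement(token_ids, dim, dims_per_token=8, seed=0):
    """Hypothetical statistical enhancement vector in [0, 1]^dim.

    Each token id deterministically selects `dims_per_token` coordinates;
    coordinates hit by more query tokens receive larger weights.
    """
    counts = np.zeros(dim)
    for tid in token_ids:
        rng = np.random.default_rng([seed, tid])      # fixed per-token "hash"
        counts[rng.choice(dim, size=dims_per_token, replace=False)] += 1.0
    peak = counts.max()
    return counts / peak if peak > 0 else counts


def modulate(embedding, enhancement, alpha=0.1):
    """Element-wise modulation e * (1 + alpha * v), then re-normalisation.

    Every scale factor lies in [1, 1 + alpha], so no coordinate flips sign and
    the direction changes only slightly while the selected dimensions grow.
    """
    out = embedding * (1.0 + alpha * enhancement)
    return out / np.linalg.norm(out)


rng = np.random.default_rng(42)
query = rng.standard_normal(768)
query /= np.linalg.norm(query)                    # stand-in dense query embedding
v = lexical_enhancement([101, 2054, 2003, 1037], dim=768)
enhanced = modulate(query, v, alpha=0.1)
cosine = float(query @ enhanced)
print(f"cosine(original, enhanced) = {cosine:.4f}")
```

Because every scale factor is at least 1 and at most `1 + alpha`, the cosine similarity to the original vector stays close to 1 for small `alpha`, which is the intuition behind the direction-preservation argument.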

Traffic-Aware Pedestrian Intention Prediction

Jul 16, 2025

Abstract: Accurate pedestrian intention estimation is crucial for the safe navigation of autonomous vehicles (AVs) and has therefore attracted considerable research attention. However, current models often fail to adequately consider dynamic traffic signals and contextual scene information, which are critical for real-world applications. This paper presents a Traffic-Aware Spatio-Temporal Graph Convolutional Network (TA-STGCN) that integrates traffic signs and their states (Red, Yellow, Green) into pedestrian intention prediction. Our approach uses dynamic traffic signal states and bounding box size as key features, allowing the model to capture both spatial and temporal dependencies in complex urban environments. The model surpasses existing methods in accuracy: TA-STGCN achieves 4.75% higher accuracy than the baseline model on the PIE dataset, demonstrating its effectiveness in improving pedestrian intention prediction.
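One plausible way to feed signal state and box size into a skeleton graph network is sketched below, assuming (our assumption, not the paper's stated design) that the context features are broadcast to every joint node and followed by a standard symmetric-normalised graph convolution. Only the spatial step is shown; the temporal convolution is omitted. The 3-joint chain, the 1920×1080 frame size used to normalise box area, and the helper names are hypothetical.

```python
import numpy as np

SIGNAL_STATES = ("Red", "Yellow", "Green")


def signal_one_hot(state: str) -> np.ndarray:
    """One-hot encoding of the traffic-light state."""
    vec = np.zeros(len(SIGNAL_STATES))
    vec[SIGNAL_STATES.index(state)] = 1.0
    return vec


def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetric GCN normalisation D^{-1/2} (A + I) D^{-1/2}."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def frame_features(keypoints, bbox, state, image_area=1920 * 1080):
    """Append scene context (signal one-hot + normalised box area) to every joint.

    keypoints: (V, 2) joint coordinates; bbox: (x1, y1, x2, y2) in pixels.
    image_area is an assumed frame size used only to normalise the box area.
    """
    area = (bbox[2] - bbox[0]) * (bbox[3] - bbox[1]) / image_area
    context = np.concatenate([signal_one_hot(state), [area]])
    return np.hstack([keypoints, np.tile(context, (keypoints.shape[0], 1))])


def graph_conv(x, a_norm, weight):
    """One spatial graph-convolution layer with ReLU."""
    return np.maximum(a_norm @ x @ weight, 0.0)


# Toy 3-joint chain (e.g. hip-knee-ankle); all values are illustrative.
adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
keypoints = np.array([[0.50, 0.40], [0.52, 0.60], [0.53, 0.80]])
x = frame_features(keypoints, bbox=(100, 50, 140, 170), state="Red")
a_norm = normalized_adjacency(adj)
weight = np.random.default_rng(0).standard_normal((x.shape[1], 8))
h = graph_conv(x, a_norm, weight)
print(h.shape)
```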

Advancing Generalizable Tumor Segmentation with Anomaly-Aware Open-Vocabulary Attention Maps and Frozen Foundation Diffusion Models

May 05, 2025

Abstract: We explore Generalizable Tumor Segmentation, aiming to train a single model for zero-shot tumor segmentation across diverse anatomical regions. Existing methods face limitations related to segmentation quality, scalability, and the range of applicable imaging modalities. In this paper, we uncover the potential of the internal representations within frozen medical foundation diffusion models as highly efficient zero-shot learners for tumor segmentation by introducing a novel framework named DiffuGTS. DiffuGTS creates anomaly-aware open-vocabulary attention maps based on text prompts to enable generalizable anomaly segmentation without being restricted by a predefined training category list. To further improve and refine anomaly segmentation masks, DiffuGTS leverages the diffusion model to transform pathological regions into high-quality pseudo-healthy counterparts through latent space inpainting, and applies a novel pixel-level and feature-level residual learning approach, resulting in segmentation masks with significantly enhanced quality and generalization. Comprehensive experiments on four datasets and seven tumor categories demonstrate the superior performance of our method, surpassing current state-of-the-art models across multiple zero-shot settings. Code is available at https://github.com/Yankai96/DiffuGTS.
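The residual idea (compare an image with its pseudo-healthy reconstruction, in both pixel space and feature space) can be sketched as a simple fusion of two normalised difference maps. This is an untrained, hand-written stand-in, not DiffuGTS's residual learning approach: the equal weighting, the 0.5 threshold, the synthetic 32×32 slice, and the two-channel `toy_features` extractor are assumptions.

```python
import numpy as np


def minmax(x: np.ndarray) -> np.ndarray:
    """Rescale to [0, 1]; a constant map becomes all zeros."""
    span = x.max() - x.min()
    return (x - x.min()) / span if span > 0 else np.zeros_like(x)


def residual_anomaly_mask(image, pseudo_healthy, feat, feat_healthy,
                          weight=0.5, threshold=0.5):
    """Fuse pixel-level and feature-level residuals into an anomaly score and mask.

    image, pseudo_healthy: (H, W); feat, feat_healthy: (H, W, C).
    """
    pixel_res = np.abs(image - pseudo_healthy)
    feat_res = np.linalg.norm(feat - feat_healthy, axis=-1)
    score = weight * minmax(pixel_res) + (1.0 - weight) * minmax(feat_res)
    return score, score >= threshold


def toy_features(img: np.ndarray) -> np.ndarray:
    """Hypothetical two-channel per-pixel features standing in for diffusion features."""
    return np.stack([img, img**2], axis=-1)


healthy = np.full((32, 32), 0.2)     # synthetic "pseudo-healthy" slice
image = healthy.copy()
image[10:16, 10:16] = 0.9            # synthetic bright lesion
score, mask = residual_anomaly_mask(image, healthy, toy_features(image), toy_features(healthy))
print(int(mask.sum()))
```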

A Primer on Orthogonal Delay-Doppler Division Multiplexing (ODDM)

Apr 15, 2025

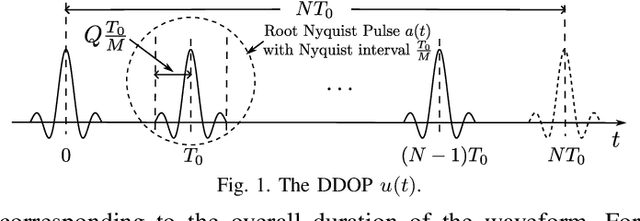

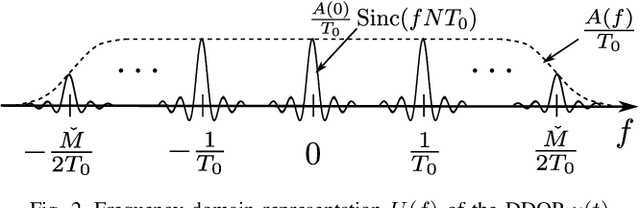

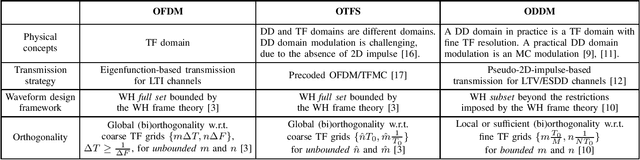

Abstract: As a new type of multicarrier (MC) scheme built upon the recently discovered delay-Doppler domain orthogonal pulse (DDOP), orthogonal delay-Doppler division multiplexing (ODDM) aims to address the challenges of waveform design in linear time-varying channels. In this paper, we explore the design principles of ODDM and clarify the key ideas underlying the DDOP. We then derive an alternative representation of the DDOP and highlight the fundamental differences between ODDM and conventional MC schemes. Finally, we discuss and compare two implementation methods for ODDM.
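A highly simplified discrete view of ODDM modulation is sketched below. The DDOP is built from a train of N sub-pulses spaced T apart; here each sub-pulse is idealised as a single sample (our simplification, which ignores the actual sub-pulse shape), so the modulator reduces to a unitary inverse DFT along the Doppler axis for each delay index, with sample index m + k·M. The grid sizes M = 16 and N = 8 and the function names are assumptions.

```python
import numpy as np


def oddm_modulate(X: np.ndarray) -> np.ndarray:
    """Map an (M, N) delay-Doppler grid to M*N time samples.

    Idealised sub-pulse: s[m + k*M] = (1/sqrt(N)) * sum_n X[m, n] * exp(j*2*pi*n*k/N).
    """
    M, N = X.shape
    S = np.fft.ifft(X, axis=1) * np.sqrt(N)   # unitary IDFT along the Doppler axis
    return S.reshape(-1, order="F")           # column-major: sample index m + k*M


def oddm_demodulate(s: np.ndarray, M: int, N: int) -> np.ndarray:
    """Inverse of oddm_modulate for an ideal (distortion-free) channel."""
    S = s.reshape((M, N), order="F")
    return np.fft.fft(S, axis=1) / np.sqrt(N)


M, N = 16, 8                                  # assumed numbers of delay and Doppler bins
rng = np.random.default_rng(1)
qpsk = (rng.choice([-1, 1], (M, N)) + 1j * rng.choice([-1, 1], (M, N))) / np.sqrt(2)
s = oddm_modulate(qpsk)

# A single DD symbol at (m0, n0) occupies N samples spaced M apart across the whole frame.
m0, n0 = 3, 2
impulse = np.zeros((M, N), dtype=complex)
impulse[m0, n0] = 1.0
support = np.flatnonzero(np.abs(oddm_modulate(impulse)) > 1e-12)
print(support)
```

The printed support shows each DD symbol spread as a pulse train over the full frame, which is the structural feature that distinguishes the DDOP from the single, time-localised pulse of a conventional MC symbol.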

Graph Neural Network-Based Distributed Optimal Control for Linear Networked Systems: An Online Distributed Training Approach

Apr 08, 2025

Abstract: In this paper, we consider the distributed optimal control problem for linear networked systems. In particular, we are interested in learning distributed optimal controllers using graph recurrent neural networks (GRNNs). Most existing approaches result in centralized optimal controllers with offline training processes. However, with the increasing demand for network resilience, optimal controllers are further expected to be distributed and, desirably, trained in an online distributed fashion; achieving both is the main contribution of our work. To solve this problem, we first propose a GRNN-based distributed optimal control method and cast the problem as a self-supervised learning problem. Distributed online training is then achieved via distributed gradient computation, and, inspired by the (consensus-based) distributed optimization idea, we design a distributed online training optimizer. Furthermore, we establish local closed-loop stability of the linear networked system under the proposed GRNN-based controller, assuming that the nonlinear activation function of the controller is both locally sector-bounded and slope-restricted. The effectiveness of the proposed method is illustrated by numerical simulations using a specifically developed simulator.
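The consensus-based idea behind distributed training can be illustrated with generic decentralized gradient descent (DGD), which is not the authors' optimizer: each agent mixes its parameters with its neighbours' through a doubly stochastic matrix W and then takes a step along its own local gradient. In this sketch the four-agent ring, the scalar parameter per agent (standing in for GRNN weights), the quadratic local losses, and the step size 0.01 are all assumptions.

```python
import numpy as np


def consensus_gradient_step(theta, W, grads, step):
    """Decentralized gradient descent: average with neighbours via W, then a local gradient step."""
    return W @ theta - step * grads


n_agents = 4
W = np.zeros((n_agents, n_agents))     # ring graph: each agent weights itself and two neighbours by 1/3
for i in range(n_agents):
    for j in (i - 1, i, i + 1):
        W[i, j % n_agents] = 1.0 / 3.0

targets = np.array([1.0, 2.0, 3.0, 4.0])  # local losses f_i(theta) = (theta - c_i)^2
theta = np.zeros(n_agents)                # one scalar parameter per agent
for _ in range(2000):
    grads = 2.0 * (theta - targets)       # each agent only uses its own gradient
    theta = consensus_gradient_step(theta, W, grads, step=0.01)

print(theta.round(3), theta.mean())
```

The global objective, the sum of the local losses, is minimised at the mean of the targets (2.5). Because W is doubly stochastic, the network average converges to that minimiser, while with a constant step size the individual agents agree only up to a small residual disagreement.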

An Advantage-based Optimization Method for Reinforcement Learning in Large Action Space

Dec 17, 2024

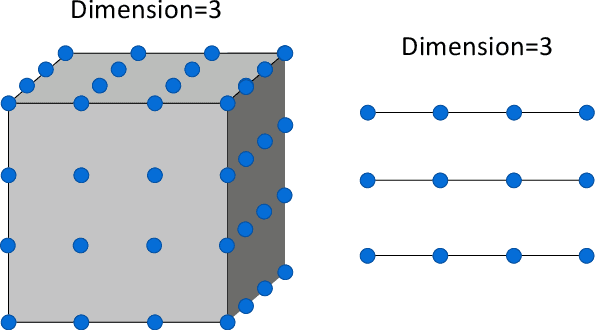

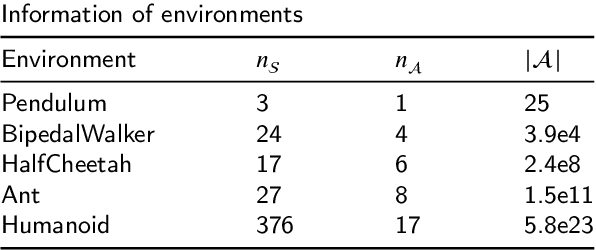

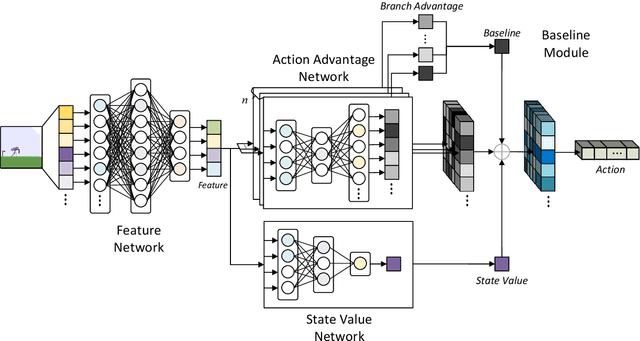

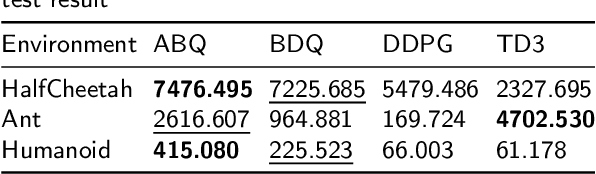

Abstract: Reinforcement learning tasks in real-world scenarios often involve large, high-dimensional action spaces, leading to challenges such as convergence difficulties, instability, and high computational complexity. It is widely acknowledged that traditional value-based reinforcement learning algorithms struggle to address these issues effectively. A prevalent approach involves generating independent sub-actions within each dimension of the action space. However, this method introduces bias, hindering the learning of optimal policies. In this paper, we propose an advantage-based optimization method and an algorithm named Advantage Branching Dueling Q-network (ABQ). ABQ incorporates a baseline mechanism to tune the action value of each dimension, leveraging the advantage relationship across different sub-actions. With this approach, the learned policy can be optimized for each dimension. Empirical results demonstrate that ABQ outperforms BDQ, achieving 3%, 171%, and 84% more cumulative rewards in HalfCheetah, Ant, and Humanoid environments, respectively. Furthermore, ABQ exhibits competitive performance when compared against two continuous action benchmark algorithms, DDPG and TD3.
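The "independent sub-actions per dimension" approach that ABQ is compared against can be sketched with the BDQ-style aggregation Q_d(s, a_d) = V(s) + A_d(s, a_d) - mean_a A_d(s, a). This shows the baseline that ABQ refines, not ABQ's own baseline mechanism. The 6 dimensions, 11 bins per dimension, and the random stand-ins for the network heads are assumptions; the point is that the network needs only 6 × 11 outputs instead of one per joint action.

```python
import numpy as np


def branching_dueling_q(value, advantages):
    """BDQ-style aggregation: Q_d(s, a_d) = V(s) + A_d(s, a_d) - mean_a A_d(s, a)."""
    return [value + adv - adv.mean() for adv in advantages]


def greedy_action(q_branches):
    """Pick the best sub-action independently in every action dimension."""
    return tuple(int(np.argmax(q)) for q in q_branches)


num_dims, num_bins = 6, 11            # assumed discretisation of a 6-D continuous action
rng = np.random.default_rng(0)
value = 1.5                           # stand-in for the shared state-value head V(s)
advantages = [rng.standard_normal(num_bins) for _ in range(num_dims)]   # stand-in branch heads

q = branching_dueling_q(value, advantages)
action = greedy_action(q)
print("network outputs:", num_dims * num_bins, "vs joint actions:", num_bins ** num_dims)
print("greedy sub-actions:", action)
```

Each branch is maximised on its own, which is exactly where the bias mentioned above can enter: the per-dimension greedy choices ignore how sub-actions interact.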

On the Time-Frequency Localization Characteristics of the Delay-Doppler Plane Orthogonal Pulse

Dec 14, 2024

Abstract: In this work, we study the time-frequency (TF) localization characteristics of the prototype pulse of orthogonal delay-Doppler (DD) division multiplexing modulation, namely, the DD plane orthogonal pulse (DDOP). The TF localization characteristics describe how concentrated or spread out the energy of a pulse is in the joint TF domain, the time domain (TD), and the frequency domain (FD). We first derive the TF localization metrics of the DDOP, including its TF area, its time and frequency dispersions, and its direction parameter. Based on these results, we demonstrate that the DDOP exhibits a high energy spread in the TD, the FD, and the joint TF domain, while adhering to the Heisenberg uncertainty principle. Thereafter, we discuss the potential advantages brought by the energy spread of the DDOP, especially with regard to harnessing both time and frequency diversities and enabling fine-resolution sensing. Subsequently, we examine the relationships between the time and frequency dispersions of the DDOP and those of the envelope functions of the DDOP's TD and FD representations, paving the way for a simplified determination of the TF localization metrics for more generalized variants of the DDOP and for the pulses used in other DD domain modulation schemes. Finally, we validate our analysis with numerical results and provide further insights.
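The time and frequency dispersions can be computed numerically as the standard deviations of |g(t)|² and |G(f)|², and their product is bounded below by 1/(4π) (Heisenberg uncertainty, with f in Hz). The sketch compares a Gaussian, which meets the bound with equality, against a train of 8 narrow Gaussian sub-pulses spaced T = 2 apart. The pulse train is only a DDOP-like stand-in chosen for illustration, not the exact DDOP, and the sampling grid and sub-pulse width are assumptions; the TF area and direction parameter are not computed here.

```python
import numpy as np


def tf_dispersions(t: np.ndarray, g: np.ndarray):
    """Time and frequency dispersions (standard deviations of |g|^2 and |G|^2)."""
    dt = t[1] - t[0]
    p_t = np.abs(g) ** 2
    p_t /= p_t.sum()
    t_mean = np.sum(t * p_t)
    sigma_t = np.sqrt(np.sum((t - t_mean) ** 2 * p_t))

    f = np.fft.fftfreq(g.size, d=dt)          # frequency grid in Hz, FFT ordering
    p_f = np.abs(np.fft.fft(g)) ** 2
    p_f /= p_f.sum()
    f_mean = np.sum(f * p_f)
    sigma_f = np.sqrt(np.sum((f - f_mean) ** 2 * p_f))
    return sigma_t, sigma_f


t = np.arange(-20.0, 20.0, 0.01)
heisenberg_bound = 1.0 / (4.0 * np.pi)

gaussian = np.exp(-np.pi * t**2)              # meets the bound with equality
st_g, sf_g = tf_dispersions(t, gaussian)

# DDOP-like stand-in: N narrow Gaussian sub-pulses spaced T apart (not the exact DDOP).
N, T, width = 8, 2.0, 0.25
centres = (np.arange(N) - (N - 1) / 2) * T
train = sum(np.exp(-np.pi * ((t - c) / width) ** 2) for c in centres)
st_p, sf_p = tf_dispersions(t, train)

print(f"Gaussian: {st_g * sf_g:.4f}, pulse train: {st_p * sf_p:.4f}, bound: {heisenberg_bound:.4f}")
```

The pulse train is spread in time by its N sub-pulses and in frequency by the narrowness of each sub-pulse, so its dispersion product lies well above the bound. This mirrors, qualitatively, the high energy spread described above.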

Assessing data-driven predictions of band gap and electrical conductivity for transparent conducting materials

Nov 21, 2024

Abstract: Machine learning (ML) has offered innovative perspectives for accelerating the discovery of new functional materials, leveraging the increasing availability of material databases. Despite promising advances, data-driven methods face constraints imposed by the quantity and quality of available data. Moreover, ML is often employed in tandem with simulated datasets originating from density functional theory (DFT) and assessed through in-sample evaluation schemes. This scenario raises questions about the practical utility of ML in uncovering new and significant material classes for industrial applications. Here, we propose a data-driven framework aimed at accelerating the discovery of new transparent conducting materials (TCMs), an important category of semiconductors with a wide range of applications. To mitigate the shortage of available data, we create and validate unique experimental databases comprising several examples of existing TCMs. We assess state-of-the-art (SOTA) ML models for property prediction from stoichiometry alone. We propose a bespoke evaluation scheme to provide empirical evidence on the ability of ML to uncover new, previously unseen materials of interest. We test our approach on a list of 55 compositions containing typical elements of known TCMs. Although our study indicates that ML tends to identify new TCMs compositionally similar to those in the training data, we empirically demonstrate that it can highlight material candidates that may have been previously overlooked, offering a systematic approach to identifying materials that are likely to display TCM characteristics.
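Two ingredients mentioned above, stoichiometry-only inputs and an evaluation that goes beyond in-sample testing, can be sketched as follows. The paper does not spell out its bespoke scheme here, so the leave-one-element-out split is an assumed, commonly used example of an extrapolation-style evaluation. The regex parser handles only simple formulas without parentheses, and the oxide list is illustrative, not the paper's dataset.

```python
import re
from collections import defaultdict

ELEMENT_TOKEN = re.compile(r"([A-Z][a-z]?)(\d*\.?\d*)")


def fractional_composition(formula: str) -> dict:
    """Parse a simple formula without parentheses (e.g. 'Zn2SnO4') into element fractions."""
    counts = defaultdict(float)
    for element, amount in ELEMENT_TOKEN.findall(formula):
        counts[element] += float(amount) if amount else 1.0
    total = sum(counts.values())
    return {element: n / total for element, n in counts.items()}


def leave_element_out(formulas, held_out: str):
    """Hold out every composition containing `held_out`, so the test set is chemically unseen."""
    train = [f for f in formulas if held_out not in fractional_composition(f)]
    test = [f for f in formulas if held_out in fractional_composition(f)]
    return train, test


oxides = ["ZnO", "In2O3", "SnO2", "Ga2O3", "CdO", "Zn2SnO4", "InGaZnO4"]   # illustrative list
train, test = leave_element_out(oxides, "In")
print(fractional_composition("In2O3"))
print("train:", train, "test:", test)
```

A model trained on `train` and scored on `test` must predict properties for compositions containing an element it never saw, which is a harder and more realistic test than random in-sample splitting.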

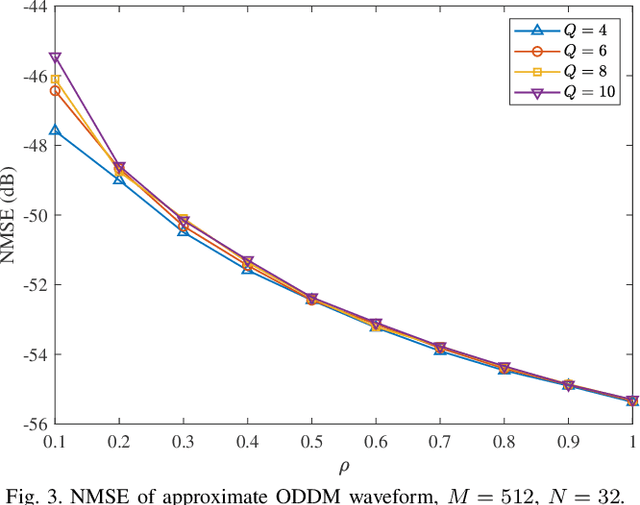

Performance of orthogonal delay-Doppler division multiplexing modulation with imperfect channel estimation

Oct 23, 2024

Abstract: The orthogonal delay-Doppler division multiplexing (ODDM) modulation is a recently proposed multi-carrier modulation that features a realizable pulse orthogonal with respect to the delay-Doppler (DD) plane's fine resolutions. In this paper, we investigate the performance of ODDM systems with imperfect channel estimation considering three detectors, namely the message passing algorithm (MPA) detector, the iterative maximum-ratio combining (MRC) detector, and the successive interference cancellation with minimum mean square error (SIC-MMSE) detector. We derive the post-equalization signal-to-interference-plus-noise ratio (SINR) for MRC and SIC-MMSE and analyze their bit error rate (BER) performance. Based on this analysis, we propose the MRC with subtractive dither (MRC-SD) and the soft SIC-MMSE initialized MRC (SSMI-MRC) detectors to improve the BER of iterative MRC. Our results demonstrate that soft SIC-MMSE consistently outperforms the other detectors in BER performance under both perfect and imperfect CSI. While MRC exhibits a BER floor above $10^{-5}$, MRC-SD effectively lowers the BER with a negligible increase in detection complexity. SSMI-MRC achieves better BER than hard SIC-MMSE with the same detection complexity order. Additionally, we show that MPA has an error floor and is sensitive to imperfect CSI.
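As a rough illustration of what a post-equalization SINR looks like for an MMSE-type detector, the sketch below uses the standard linear MMSE identity SINR_k = 1 / [(I + H^H H / σ²)^{-1}]_kk − 1 for unit-energy symbols. It is a plain linear MMSE without interference cancellation, not the paper's SIC-MMSE derivation, and `H` is a generic random effective channel rather than an ODDM DD-domain channel. Imperfect CSI is modelled, as an assumption, by treating i.i.d. estimation errors on `num_paths` taps as extra Gaussian noise.

```python
import numpy as np


def lmmse_sinr(H: np.ndarray, noise_var: float) -> np.ndarray:
    """Per-symbol SINR after an unbiased linear MMSE equaliser (unit-energy symbols).

    SINR_k = 1 / [(I + H^H H / noise_var)^{-1}]_{kk} - 1
    """
    K = H.shape[1]
    error_cov = np.linalg.inv(np.eye(K) + H.conj().T @ H / noise_var)
    return 1.0 / np.real(np.diag(error_cov)) - 1.0


def effective_noise_var(noise_var: float, est_error_var: float, num_paths: int) -> float:
    """Assumed imperfect-CSI model: i.i.d. errors of variance est_error_var on each of
    num_paths channel taps act as extra Gaussian noise for unit-energy symbols."""
    return noise_var + num_paths * est_error_var


rng = np.random.default_rng(7)
H = (rng.standard_normal((16, 16)) + 1j * rng.standard_normal((16, 16))) / np.sqrt(2 * 16)
perfect = lmmse_sinr(H, noise_var=0.1)
imperfect = lmmse_sinr(H, effective_noise_var(0.1, est_error_var=0.01, num_paths=4))
print(f"mean SINR (dB): perfect {10 * np.log10(perfect.mean()):.2f}, "
      f"imperfect {10 * np.log10(imperfect.mean()):.2f}")
```

For a sanity check, an identity channel with noise variance 0.1 gives an SINR of exactly 10 per symbol, and every symbol's SINR drops once the estimation-error term is added.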
