Xin Su

LexSemBridge: Fine-Grained Dense Representation Enhancement through Token-Aware Embedding Augmentation

Aug 25, 2025

Abstract:As queries in retrieval-augmented generation (RAG) pipelines powered by large language models (LLMs) become increasingly complex and diverse, dense retrieval models have demonstrated strong performance in semantic matching. Nevertheless, they often struggle with fine-grained retrieval tasks, where precise keyword alignment and span-level localization are required, even in cases with high lexical overlap that would intuitively suggest easier retrieval. To systematically evaluate this limitation, we introduce two targeted tasks, keyword retrieval and part-of-passage retrieval, designed to simulate practical fine-grained scenarios. Motivated by these observations, we propose LexSemBridge, a unified framework that enhances dense query representations through fine-grained, input-aware vector modulation. LexSemBridge constructs latent enhancement vectors from input tokens using three paradigms: Statistical (SLR), Learned (LLR), and Contextual (CLR), and integrates them with dense embeddings via element-wise interaction. Theoretically, we show that this modulation preserves the semantic direction while selectively amplifying discriminative dimensions. LexSemBridge operates as a plug-in without modifying the backbone encoder and naturally extends to both text and vision modalities. Extensive experiments across semantic and fine-grained retrieval tasks validate the effectiveness and generality of our approach. All code and models are publicly available at https://github.com/Jasaxion/LexSemBridge/

Investigating the Robustness of Retrieval-Augmented Generation at the Query Level

Jul 09, 2025

Abstract:Large language models (LLMs) are very costly and inefficient to update with new information. To address this limitation, retrieval-augmented generation (RAG) has been proposed as a solution that dynamically incorporates external knowledge during inference, improving factual consistency and reducing hallucinations. Despite its promise, RAG systems face practical challenges, most notably a strong dependence on the quality of the input query for accurate retrieval. In this paper, we investigate the sensitivity of different components in the RAG pipeline to various types of query perturbations. Our analysis reveals that the performance of commonly used retrievers can degrade significantly even under minor query variations. We study each module in isolation as well as their combined effect in an end-to-end question answering setting, using both general-domain and domain-specific datasets. Additionally, we propose an evaluation framework to systematically assess the query-level robustness of RAG pipelines and offer actionable recommendations for practitioners based on the results of more than 1092 experiments we performed.

A Semantic Parsing Framework for End-to-End Time Normalization

Jul 08, 2025

Abstract:Time normalization is the task of converting natural language temporal expressions into machine-readable representations. It underpins many downstream applications in information retrieval, question answering, and clinical decision-making. Traditional systems based on the ISO-TimeML schema limit expressivity and struggle with complex constructs such as compositional, event-relative, and multi-span time expressions. In this work, we introduce a novel formulation of time normalization as a code generation task grounded in the SCATE framework, which defines temporal semantics through symbolic and compositional operators. We implement a fully executable SCATE Python library and demonstrate that large language models (LLMs) can generate executable SCATE code. Leveraging this capability, we develop an automatic data augmentation pipeline using LLMs to synthesize large-scale annotated data with code-level validation. Our experiments show that small, locally deployable models trained on this augmented data can achieve strong performance, outperforming even their LLM parents and enabling practical, accurate, and interpretable time normalization.

TiMo: Spatiotemporal Foundation Model for Satellite Image Time Series

May 13, 2025

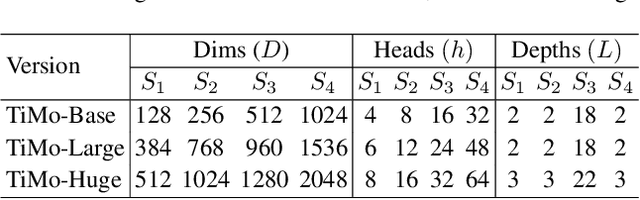

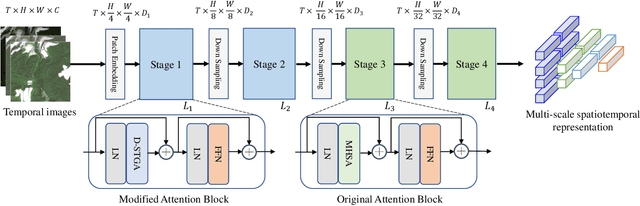

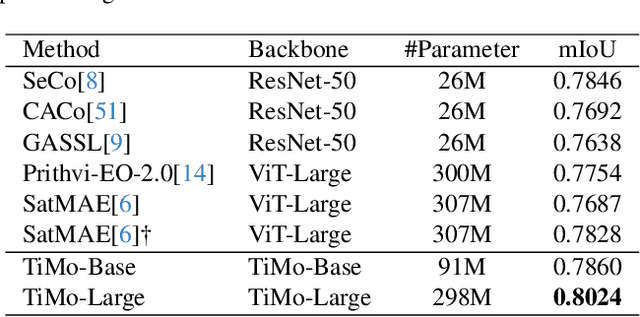

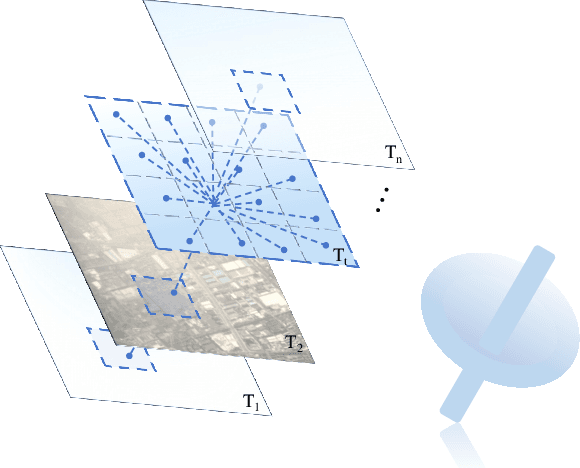

Abstract:Satellite image time series (SITS) provide continuous observations of the Earth's surface, making them essential for applications such as environmental management and disaster assessment. However, existing spatiotemporal foundation models rely on plain vision transformers, which encode entire temporal sequences without explicitly capturing multiscale spatiotemporal relationships between land objects. This limitation hinders their effectiveness in downstream tasks. To overcome this challenge, we propose TiMo, a novel hierarchical vision transformer foundation model tailored for SITS analysis. At its core, we introduce a spatiotemporal gyroscope attention mechanism that dynamically captures evolving multiscale patterns across both time and space. For pre-training, we curate MillionST, a large-scale dataset of one million images from 100,000 geographic locations, each captured across 10 temporal phases over five years, encompassing diverse geospatial changes and seasonal variations. Leveraging this dataset, we adapt masked image modeling to pre-train TiMo, enabling it to effectively learn and encode generalizable spatiotemporal representations. Extensive experiments across multiple spatiotemporal tasks, including deforestation monitoring, land cover segmentation, crop type classification, and flood detection, demonstrate TiMo's superiority over state-of-the-art methods. Code, model, and dataset will be released at https://github.com/MiliLab/TiMo.

Distribution-aware Dataset Distillation for Efficient Image Restoration

Apr 21, 2025

Abstract:With the exponential increase in image data, training an image restoration model is laborious. Dataset distillation is a potential solution to this problem, yet current distillation techniques are a blank canvas in the field of image restoration. To fill this gap, we propose the Distribution-aware Dataset Distillation method (TripleD), a new framework that extends the principles of dataset distillation to image restoration. Specifically, TripleD uses a pre-trained vision Transformer to extract features from images for complexity evaluation, and the subset (the number of samples is much smaller than the original training set) is selected based on complexity. The selected subset is then fed through a lightweight CNN that fine-tunes the image distribution to align with the distribution of the original dataset at the feature level. To efficiently condense knowledge, the training is divided into two stages. Early stages focus on simpler, low-complexity samples to build foundational knowledge, while later stages select more complex and uncertain samples as the model matures. Our method achieves promising performance on multiple image restoration tasks, including multi-task image restoration, all-in-one image restoration, and ultra-high-definition image restoration tasks. Note that we can train a state-of-the-art image restoration model on an ultra-high-definition (4K resolution) dataset using only one consumer-grade GPU in less than 8 hours (500 savings in computing resources and immeasurable training time).

AdaQual-Diff: Diffusion-Based Image Restoration via Adaptive Quality Prompting

Apr 17, 2025

Abstract:Restoring images afflicted by complex real-world degradations remains challenging, as conventional methods often fail to adapt to the unique mixture and severity of artifacts present. This stems from a reliance on indirect cues which poorly capture the true perceptual quality deficit. To address this fundamental limitation, we introduce AdaQual-Diff, a diffusion-based framework that integrates perceptual quality assessment directly into the generative restoration process. Our approach establishes a mathematical relationship between regional quality scores from DeQAScore and optimal guidance complexity, implemented through an Adaptive Quality Prompting mechanism. This mechanism systematically modulates prompt structure according to measured degradation severity: regions with lower perceptual quality receive computationally intensive, structurally complex prompts with precise restoration directives, while higher quality regions receive minimal prompts focused on preservation rather than intervention. The technical core of our method lies in the dynamic allocation of computational resources proportional to degradation severity, creating a spatially-varying guidance field that directs the diffusion process with mathematical precision. By combining this quality-guided approach with content-specific conditioning, our framework achieves fine-grained control over regional restoration intensity without requiring additional parameters or inference iterations. Experimental results demonstrate that AdaQual-Diff achieves visually superior restorations across diverse synthetic and real-world datasets.

Transformer-Based Temporal Information Extraction and Application: A Review

Apr 10, 2025

Abstract:Temporal information extraction (IE) aims to extract structured temporal information from unstructured text, thereby uncovering the implicit timelines within. This technique is applied across domains such as healthcare, newswire, and intelligence analysis, aiding models in these areas to perform temporal reasoning and enabling human users to grasp the temporal structure of text. Transformer-based pre-trained language models have produced revolutionary advancements in natural language processing, demonstrating exceptional performance across a multitude of tasks. Despite the achievements garnered by Transformer-based approaches in temporal IE, there is a lack of comprehensive reviews on these endeavors. In this paper, we aim to bridge this gap by systematically summarizing and analyzing the body of work on temporal IE using Transformers while highlighting potential future research directions.

Conformal Robust Beamforming via Generative Channel Models

Apr 09, 2025

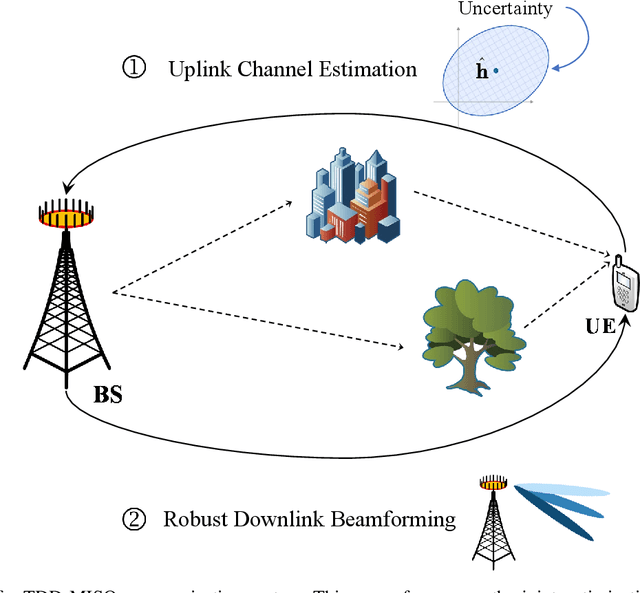

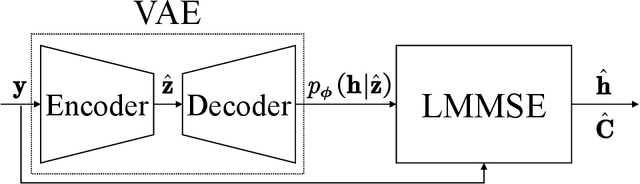

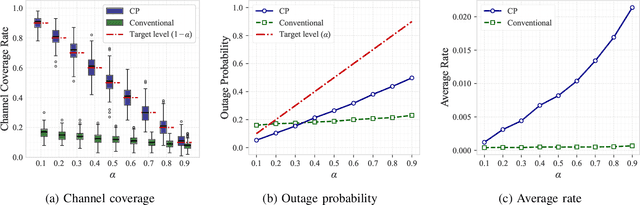

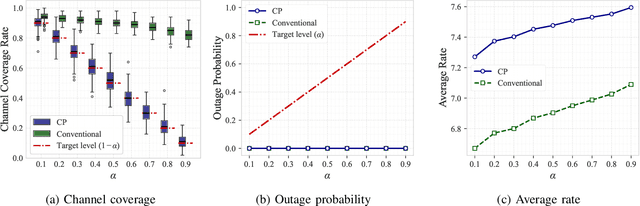

Abstract:Traditional approaches to outage-constrained beamforming optimization rely on statistical assumptions about channel distributions and estimation errors. However, the resulting outage probability guarantees are only valid when these assumptions accurately reflect reality. This paper tackles the fundamental challenge of providing outage probability guarantees that remain robust regardless of specific channel or estimation error models. To achieve this, we propose a two-stage framework: (i) construction of a channel uncertainty set using a generative channel model combined with conformal prediction, and (ii) robust beamforming via the solution of a min-max optimization problem. The proposed method separates the modeling and optimization tasks, enabling principled uncertainty quantification and robust decision-making. Simulation results confirm the effectiveness and reliability of the framework in achieving model-agnostic outage guarantees.
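
As a rough illustration of stage (i), the sketch below computes a split-conformal radius from calibration errors and tests membership in a norm-ball uncertainty set centred on a channel estimate. The ball shape, the function names, and the use of the Euclidean error between the true channel and the generative model's estimate as the nonconformity score are assumptions made for illustration; the abstract does not specify them.

```python
import math

def conformal_radius(scores, alpha):
    """Split-conformal quantile of calibration scores.

    scores: nonconformity scores from held-out calibration data
            (assumed here: norm of channel estimation error).
    alpha:  target outage (miscoverage) level in (0, 1).
    """
    n = len(scores)
    # Rank of the order statistic giving coverage >= 1 - alpha.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: the set is unbounded.
        return float("inf")
    return sorted(scores)[k - 1]

def in_uncertainty_set(h, h_hat, radius):
    """True if channel h lies in the ball of given radius around h_hat."""
    dist = math.sqrt(sum(abs(a - b) ** 2 for a, b in zip(h, h_hat)))
    return dist <= radius
```

Under the usual exchangeability assumption of conformal prediction, a set built this way contains the true channel with probability at least 1 - alpha, which is the kind of model-agnostic guarantee stage (ii) would then optimize against.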

Bridge the Gap between SNN and ANN for Image Restoration

Apr 02, 2025

Abstract:Models of dense prediction based on traditional Artificial Neural Networks (ANNs) require a lot of energy, especially for image restoration tasks. Currently, neural networks based on the SNN (Spiking Neural Network) framework are beginning to make their mark in the field of image restoration, especially as they typically use less than 10% of the energy of ANNs with the same architecture. However, training an SNN is much more expensive than training an ANN, due to the use of the heuristic gradient descent strategy. In other words, the process of SNN's potential membrane signal changing from sparse to dense is very slow, which affects the convergence of the whole model. To tackle this problem, we propose a novel distillation technique, called asymmetric framework (ANN-SNN) distillation, in which the teacher is an ANN and the student is an SNN. Specifically, we leverage the intermediate features (feature maps) learned by the ANN as hints to guide the training process of the SNN. This approach not only accelerates the convergence of the SNN but also improves its final performance, effectively bridging the gap between the efficiency of the SNN and the superior learning capabilities of ANN. Extensive experimental results show that our designed SNN-based image restoration model, which has only 1/300 the number of parameters of the teacher network and 1/50 the energy consumption of the teacher network, is as good as the teacher network in some denoising tasks.

DAST: Context-Aware Compression in LLMs via Dynamic Allocation of Soft Tokens

Feb 17, 2025

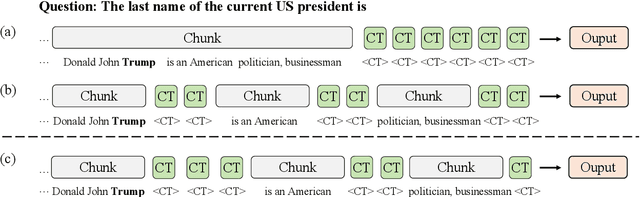

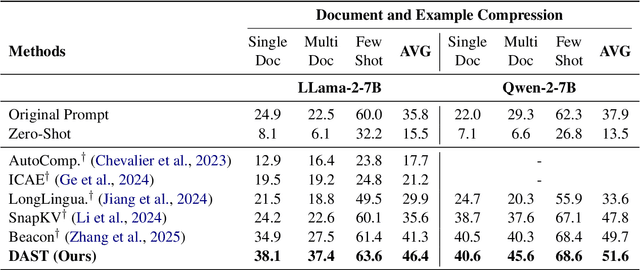

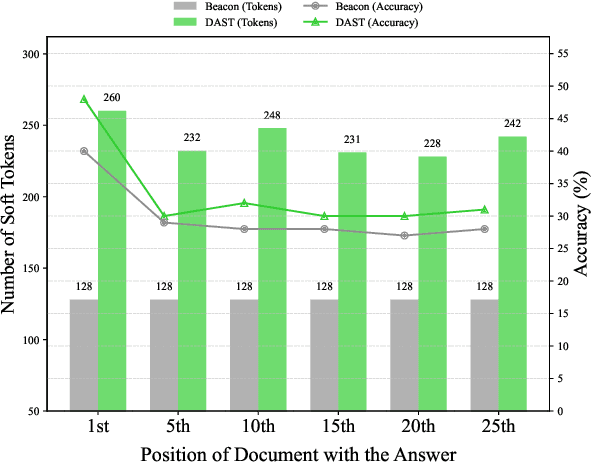

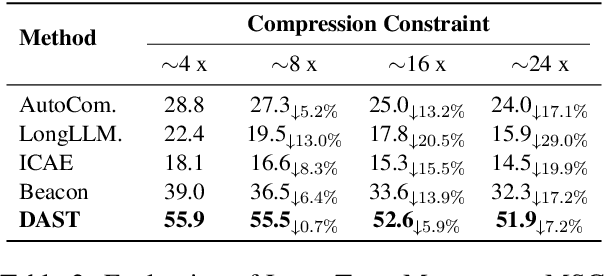

Abstract:Large Language Models (LLMs) face computational inefficiencies and redundant processing when handling long context inputs, prompting a focus on compression techniques. While existing semantic vector-based compression methods achieve promising performance, these methods fail to account for the intrinsic information density variations between context chunks, instead allocating soft tokens uniformly across context chunks. This uniform distribution inevitably diminishes allocation to information-critical regions. To address this, we propose Dynamic Allocation of Soft Tokens (DAST), a simple yet effective method that leverages the LLM's intrinsic understanding of contextual relevance to guide compression. DAST combines perplexity-based local information with attention-driven global information to dynamically allocate soft tokens to the information-rich chunks, enabling effective, context-aware compression. Experimental results across multiple benchmarks demonstrate that DAST surpasses state-of-the-art methods.
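
The allocation idea can be sketched as follows. This is a hypothetical illustration, not the DAST implementation: the function name, the `mix` weight, the linear blend of the two signals, the assumption that higher perplexity means a chunk deserves more soft tokens, and the largest-remainder rounding are all choices made here for the example.

```python
def allocate_soft_tokens(perplexities, attention, budget, mix=0.5):
    """Split a soft-token budget across chunks by blended importance.

    perplexities: per-chunk local scores (assumed: higher = more informative).
    attention:    per-chunk global scores (assumed: higher = more relevant).
    budget:       total number of soft tokens to distribute.
    mix:          hypothetical weight on the local signal, in [0, 1].
    """
    def normalize(xs):
        total = sum(xs)
        return [x / total for x in xs]

    local = normalize(perplexities)
    global_ = normalize(attention)
    weights = [mix * l + (1 - mix) * g for l, g in zip(local, global_)]

    # Largest-remainder rounding so the integer counts sum to the budget.
    raw = [w * budget for w in weights]
    alloc = [int(r) for r in raw]
    leftover = budget - sum(alloc)
    by_remainder = sorted(range(len(raw)),
                          key=lambda i: raw[i] - alloc[i], reverse=True)
    for i in by_remainder[:leftover]:
        alloc[i] += 1
    return alloc
```

A uniform scheme would give every chunk `budget / len(chunks)` tokens; here a chunk that scores higher on both signals receives a proportionally larger share.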
