Osvaldo Simeone

Prediction-Powered Risk Monitoring of Deployed Models for Detecting Harmful Distribution Shifts

Feb 02, 2026

Abstract: We study the problem of monitoring model performance in dynamic environments where labeled data are limited. To this end, we propose prediction-powered risk monitoring (PPRM), a semi-supervised risk-monitoring approach based on prediction-powered inference (PPI). PPRM constructs anytime-valid lower bounds on the running risk by combining synthetic labels with a small set of true labels. Harmful shifts are detected via a threshold-based comparison with an upper bound on the nominal risk, satisfying assumption-free finite-sample guarantees on the probability of false alarm. We demonstrate the effectiveness of PPRM through extensive experiments on image classification, large language model (LLM), and telecommunications monitoring tasks.
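As a rough illustration of the prediction-powered inference (PPI) idea that the abstract builds on, the sketch below computes the basic PPI point estimate of an average loss: the mean of proxy losses computed from synthetic labels, corrected by the average gap between true and proxy losses on the small labeled set. It is not the PPRM procedure itself and does not produce the anytime-valid bounds described above; the function name `ppi_mean` and the toy data are hypothetical.

```python
from statistics import fmean

def ppi_mean(labeled_true, labeled_proxy, unlabeled_proxy):
    """Basic PPI point estimate of a mean loss (illustrative only).

    labeled_true:    losses computed with true labels (small set)
    labeled_proxy:   losses on the same points computed with synthetic labels
    unlabeled_proxy: losses computed with synthetic labels (large set)
    """
    # Rectifier: average bias of the synthetic-label losses on labeled data.
    rectifier = fmean([t - p for t, p in zip(labeled_true, labeled_proxy)])
    # Proxy mean on the large set, debiased by the rectifier.
    return fmean(unlabeled_proxy) + rectifier

# Hypothetical 0-1 losses; the synthetic labels overstate the loss on one point.
labeled_true = [1, 0, 0, 1]
labeled_proxy = [1, 0, 1, 1]
unlabeled_proxy = [1, 1, 0, 0, 1, 0, 1, 0]
estimate = ppi_mean(labeled_true, labeled_proxy, unlabeled_proxy)
```

Here the proxy mean on the unlabeled set is corrected downward because the synthetic labels overestimate the loss on the labeled points.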

Should I Have Expressed a Different Intent? Counterfactual Generation for LLM-Based Autonomous Control

Jan 29, 2026

Abstract: Large language model (LLM)-powered agents can translate high-level user intents into plans and actions in an environment. Yet after observing an outcome, users may wonder: What if I had phrased my intent differently? We introduce a framework that enables such counterfactual reasoning in agentic LLM-driven control scenarios, while providing formal reliability guarantees. Our approach models the closed-loop interaction between a user, an LLM-based agent, and an environment as a structural causal model (SCM), and leverages test-time scaling to generate multiple candidate counterfactual outcomes via probabilistic abduction. Through an offline calibration phase, the proposed conformal counterfactual generation (CCG) yields sets of counterfactual outcomes that are guaranteed to contain the true counterfactual outcome with high probability. We showcase the performance of CCG on a wireless network control use case, demonstrating significant advantages compared to naive re-execution baselines.

Modern Neuromorphic AI: From Intra-Token to Inter-Token Processing

Jan 08, 2026

Abstract: The rapid growth of artificial intelligence (AI) has brought novel data processing and generative capabilities but also escalating energy requirements. This challenge motivates renewed interest in neuromorphic computing principles, which promise brain-like efficiency through discrete and sparse activations, recurrent dynamics, and non-linear feedback. In fact, modern AI architectures increasingly embody neuromorphic principles through heavily quantized activations, state-space dynamics, and sparse attention mechanisms. This paper elaborates on the connections between neuromorphic models, state-space models, and transformer architectures through the lens of the distinction between intra-token processing and inter-token processing. Most early work on neuromorphic AI was based on spiking neural networks (SNNs) for intra-token processing, i.e., for transformations involving multiple channels, or features, of the same vector input, such as the pixels of an image. In contrast, more recent research has explored how neuromorphic principles can be leveraged to design efficient inter-token processing methods, which selectively combine different information elements depending on their contextual relevance. Implementing associative memorization mechanisms, these approaches leverage state-space dynamics or sparse self-attention. Along with a systematic presentation of modern neuromorphic AI models through the lens of intra-token and inter-token processing, training methodologies for neuromorphic AI models are also reviewed. These range from surrogate gradients leveraging parallel convolutional processing to local learning rules based on reinforcement learning mechanisms.

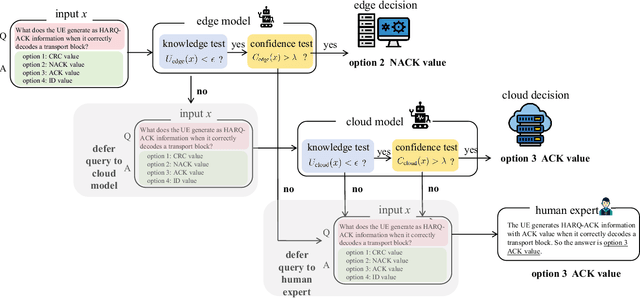

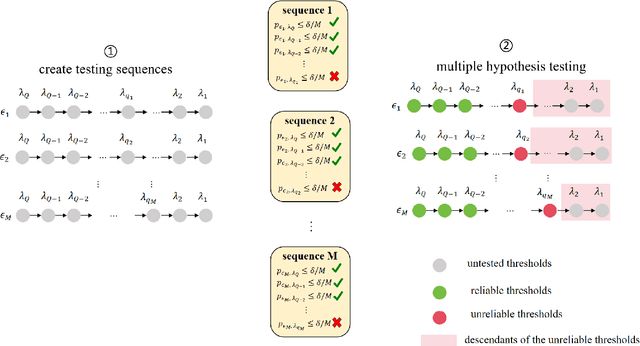

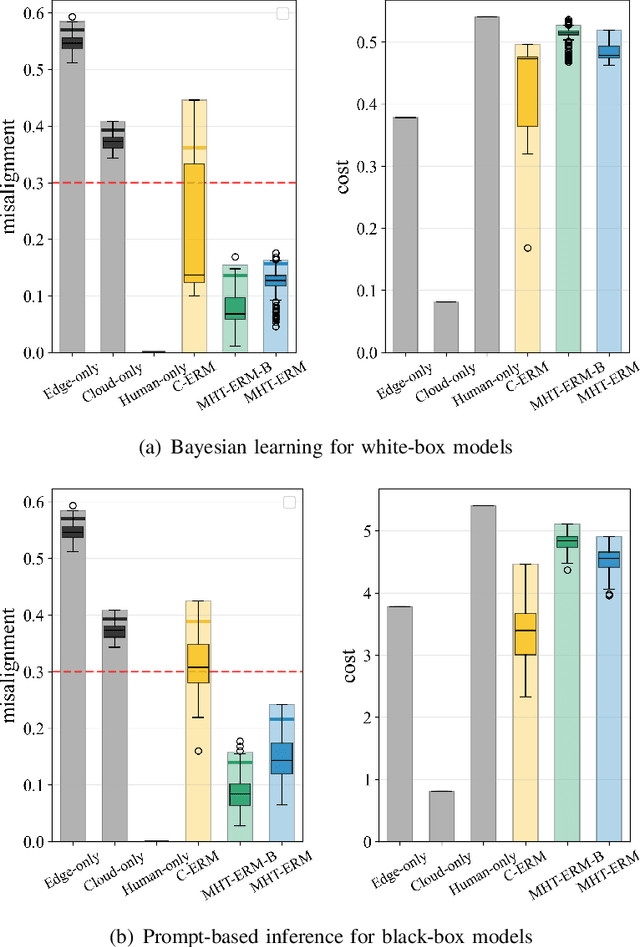

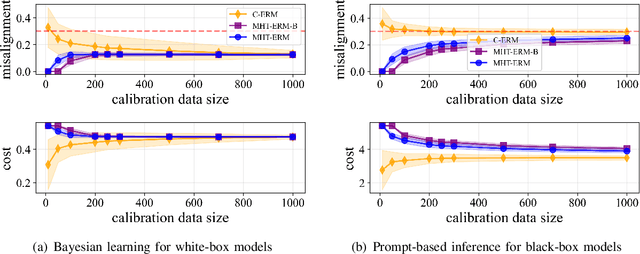

Reliable LLM-Based Edge-Cloud-Expert Cascades for Telecom Knowledge Systems

Dec 23, 2025

Abstract: Large language models (LLMs) are emerging as key enablers of automation in domains such as telecommunications, assisting with tasks including troubleshooting, standards interpretation, and network optimization. However, their deployment in practice must balance inference cost, latency, and reliability. In this work, we study an edge-cloud-expert cascaded LLM-based knowledge system that supports decision-making through a question-and-answer pipeline. In it, an efficient edge model handles routine queries, a more capable cloud model addresses complex cases, and human experts are involved only when necessary. We define a misalignment-cost constrained optimization problem, aiming to minimize average processing cost, while guaranteeing alignment of automated answers with expert judgments. We propose a statistically rigorous threshold selection method based on multiple hypothesis testing (MHT) for a query processing mechanism based on knowledge and confidence tests. The approach provides finite-sample guarantees on misalignment risk. Experiments on the TeleQnA dataset -- a telecom-specific benchmark -- demonstrate that the proposed method achieves superior cost-efficiency compared to conventional cascaded baselines, while ensuring reliability at prescribed confidence levels.

On-Device Fine-Tuning via Backprop-Free Zeroth-Order Optimization

Nov 14, 2025

Abstract: On-device fine-tuning is a critical capability for edge AI systems, which must support adaptation to different agentic tasks under stringent memory constraints. Conventional backpropagation (BP)-based training requires storing layer activations and optimizer states, a demand that can be only partially alleviated through checkpointing. In edge deployments in which the model weights must reside entirely in device memory, this overhead severely limits the maximum model size that can be deployed. Memory-efficient zeroth-order optimization (MeZO) alleviates this bottleneck by estimating gradients using forward evaluations alone, eliminating the need for storing intermediate activations or optimizer states. This enables significantly larger models to fit within on-chip memory, albeit at the cost of potentially longer fine-tuning wall-clock time. This paper first provides a theoretical estimate of the relative model sizes that can be accommodated under BP and MeZO training. We then numerically validate the analysis, demonstrating that MeZO exhibits accuracy advantages under on-device memory constraints, provided sufficient wall-clock time is available for fine-tuning.
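To make "estimating gradients using forward evaluations alone" concrete, the following is a minimal sketch of a two-point zeroth-order update in the style of MeZO, written in plain Python on a toy quadratic loss. It is an illustration under assumptions, not the paper's implementation: the function `mezo_step`, the loss `quadratic_loss`, and all hyperparameter values are hypothetical. The random direction is regenerated from a seed instead of being stored, so the only persistent state is the parameter vector.

```python
import random

def mezo_step(params, loss_fn, lr=0.01, eps=1e-3, seed=0):
    """One in-place zeroth-order step using two forward evaluations."""
    def perturb(scale):
        # Re-seeding regenerates the same direction z without storing it.
        rng = random.Random(seed)
        for i in range(len(params)):
            params[i] += scale * eps * rng.gauss(0.0, 1.0)

    perturb(+1.0)                 # theta + eps * z
    loss_plus = loss_fn(params)
    perturb(-2.0)                 # theta - eps * z
    loss_minus = loss_fn(params)
    perturb(+1.0)                 # restore theta

    # Finite-difference estimate of the directional derivative along z.
    g = (loss_plus - loss_minus) / (2.0 * eps)

    # Update along z, regenerated once more from the same seed.
    rng = random.Random(seed)
    for i in range(len(params)):
        params[i] -= lr * g * rng.gauss(0.0, 1.0)

def quadratic_loss(p):
    # Toy objective with its minimum at the all-ones vector.
    return sum((x - 1.0) ** 2 for x in p)

params = [0.0, 0.0, 0.0, 0.0]
initial_loss = quadratic_loss(params)
for step in range(2000):
    mezo_step(params, quadratic_loss, lr=0.01, seed=step)
final_loss = quadratic_loss(params)
```

Each step costs two forward passes and no activation storage, which is the memory-for-time trade-off the abstract describes.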

Online Learning of Modular Bayesian Deep Receivers: Single-Step Adaptation with Streaming Data

Nov 08, 2025

Abstract: Deep neural network (DNN)-based receivers offer a powerful alternative to classical model-based designs for wireless communication, especially in complex and nonlinear propagation environments. However, their adoption is challenged by the rapid variability of wireless channels, which makes pre-trained static DNN-based receivers ineffective, and by the latency and computational burden of online stochastic gradient descent (SGD)-based learning. In this work, we propose an online learning framework that enables rapid low-complexity adaptation of DNN-based receivers. Our approach is based on two main tenets. First, we cast online learning as Bayesian tracking in parameter space, enabling a single-step adaptation, which deviates from multi-epoch SGD. Second, we focus on modular DNN architectures that enable parallel, online, and localized variational Bayesian updates. Simulations with practical communication channels demonstrate that our proposed online learning framework can maintain a low error rate with markedly reduced update latency and increased robustness to channel dynamics compared to traditional gradient-descent-based methods.

Distributed Associative Memory via Online Convex Optimization

Sep 26, 2025

Abstract: An associative memory (AM) enables cue-response recall, and associative memorization has recently been noted to underlie the operation of modern neural architectures such as Transformers. This work addresses a distributed setting where agents maintain a local AM to recall their own associations as well as selective information from others. Specifically, we introduce a distributed online gradient descent method that optimizes local AMs at different agents through communication over routing trees. Our theoretical analysis establishes sublinear regret guarantees, and experiments demonstrate that the proposed protocol consistently outperforms existing online optimization baselines.

Multi-Fidelity Hybrid Reinforcement Learning via Information Gain Maximization

Sep 18, 2025

Abstract: Optimizing a reinforcement learning (RL) policy typically requires extensive interactions with a high-fidelity simulator of the environment, which are often costly or impractical. Offline RL addresses this problem by allowing training from pre-collected data, but its effectiveness is strongly constrained by the size and quality of the dataset. Hybrid offline-online RL leverages both offline data and interactions with a single simulator of the environment. In many real-world scenarios, however, multiple simulators with varying levels of fidelity and computational cost are available. In this work, we study multi-fidelity hybrid RL for policy optimization under a fixed cost budget. We introduce multi-fidelity hybrid RL via information gain maximization (MF-HRL-IGM), a hybrid offline-online RL algorithm that implements fidelity selection based on information gain maximization through a bootstrapping approach. Theoretical analysis establishes the no-regret property of MF-HRL-IGM, while empirical evaluations demonstrate its superior performance compared to existing benchmarks.

Synthetic Counterfactual Labels for Efficient Conformal Counterfactual Inference

Sep 04, 2025

Abstract: This work addresses the problem of constructing reliable prediction intervals for individual counterfactual outcomes. Existing conformal counterfactual inference (CCI) methods provide marginal coverage guarantees but often produce overly conservative intervals, particularly under treatment imbalance when counterfactual samples are scarce. We introduce synthetic data-powered CCI (SP-CCI), a new framework that augments the calibration set with synthetic counterfactual labels generated by a pre-trained counterfactual model. To ensure validity, SP-CCI incorporates synthetic samples into a conformal calibration procedure based on risk-controlling prediction sets (RCPS) with a debiasing step informed by prediction-powered inference (PPI). We prove that SP-CCI achieves tighter prediction intervals while preserving marginal coverage, with theoretical guarantees under both exact and approximate importance weighting. Empirical results on different datasets confirm that SP-CCI consistently reduces interval width compared to standard CCI across all settings.

Data-Efficient Prediction-Powered Calibration via Cross-Validation

Jul 27, 2025

Abstract: Calibration data are necessary to formally quantify the uncertainty of the decisions produced by an existing artificial intelligence (AI) model. To overcome the common issue of scarce calibration data, a promising approach is to employ synthetic labels produced by a (generally different) predictive model. However, fine-tuning the label-generating predictor on the inference task of interest, as well as estimating the residual bias of the synthetic labels, demand additional data, potentially exacerbating the calibration data scarcity problem. This paper introduces a novel approach that efficiently utilizes limited calibration data to simultaneously fine-tune a predictor and estimate the bias of the synthetic labels. The proposed method yields prediction sets with rigorous coverage guarantees for AI-generated decisions. Experimental results on an indoor localization problem validate the effectiveness and performance gains of our solution.
