Annie E. Paine

Conservative quantum offline model-based optimization

Jun 24, 2025

Abstract: Offline model-based optimization (MBO) refers to the task of optimizing a black-box objective function using only a fixed set of prior input-output data, without any active experimentation. Recent work has introduced quantum extremal learning (QEL), which leverages the expressive power of variational quantum circuits to learn accurate surrogate functions by training on a few data points. However, as widely studied in the classical machine learning literature, predictive models may incorrectly extrapolate objective values in unexplored regions, leading to the selection of overly optimistic solutions. In this paper, we propose integrating QEL with conservative objective models (COM) - a regularization technique aimed at ensuring cautious predictions on out-of-distribution inputs. The resulting hybrid algorithm, COM-QEL, builds on the expressive power of quantum neural networks while safeguarding generalization via conservative modeling. Empirical results on benchmark optimization tasks demonstrate that COM-QEL reliably finds solutions with higher true objective values compared to the original QEL, validating its superiority for offline design problems.
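
The conservative regularization described in this abstract can be illustrated with a small classical sketch. It is not the COM-QEL implementation: the surrogate here is a toy quadratic polynomial standing in for a variational quantum circuit, the weight `alpha` is held fixed, and every name and hyperparameter (`surrogate`, `adversarial_input`, `com_loss`, the step count, the step size, the clipping bounds) is a hypothetical choice made for illustration. The idea shown is only the general shape of a conservative penalty: inputs found by gradient ascent on the surrogate are treated as potentially over-optimistic, and the loss penalizes the gap between their predicted values and the predictions on the dataset.

```python
from statistics import fmean

def surrogate(w, x):
    """Toy surrogate f(x) = w0 + w1*x + w2*x^2 (stand-in for a trained model)."""
    return w[0] + w[1] * x + w[2] * x * x

def surrogate_grad_x(w, x):
    """Derivative of the toy surrogate with respect to the input x."""
    return w[1] + 2.0 * w[2] * x

def adversarial_input(w, x, steps=5, lr=0.1, lo=-2.0, hi=2.0):
    """Gradient ascent on the surrogate, started from a dataset input.

    The result is clipped to [lo, hi], an assumed design domain.
    """
    for _ in range(steps):
        x = min(hi, max(lo, x + lr * surrogate_grad_x(w, x)))
    return x

def com_loss(w, xs, ys, alpha=1.0):
    """Mean-squared error plus a conservative penalty (fixed alpha, assumed)."""
    mse = fmean([(surrogate(w, x) - y) ** 2 for x, y in zip(xs, ys)])
    pred_adv = fmean([surrogate(w, adversarial_input(w, x)) for x in xs])
    pred_data = fmean([surrogate(w, x) for x in xs])
    return mse + alpha * (pred_adv - pred_data)

# Offline data that covers only the left half of the design space.
xs = [-1.0 + i / 19 for i in range(20)]
ys = [-(x - 0.5) ** 2 for x in xs]
w = [0.0, 1.0, -0.5]  # hypothetical surrogate parameters

print(com_loss(w, xs, ys, alpha=0.0))  # plain regression loss
print(com_loss(w, xs, ys, alpha=1.0))  # loss with the conservative penalty
```

With `alpha=0.0` the function reduces to ordinary regression. A positive `alpha` raises the loss whenever the surrogate predicts higher values away from the data than on it, which is the behaviour a conservative model is meant to discourage during training.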

What can we learn from quantum convolutional neural networks?

Aug 31, 2023

Abstract: We can learn from analyzing quantum convolutional neural networks (QCNNs) that: 1) working with quantum data can be perceived as embedding physical system parameters through a hidden feature map; 2) their high performance for quantum phase recognition can be attributed to generation of a very suitable basis set during the ground state embedding, where quantum criticality of spin models leads to basis functions with rapidly changing features; 3) pooling layers of QCNNs are responsible for picking those basis functions that can contribute to forming a high-performing decision boundary, and the learning process corresponds to adapting the measurement such that few-qubit operators are mapped to full-register observables; 4) generalization of QCNN models strongly depends on the embedding type, and that rotation-based feature maps with the Fourier basis require careful feature engineering; 5) accuracy and generalization of QCNNs with readout based on a limited number of shots favor the ground state embeddings and associated physics-informed models. We demonstrate these points in simulation, where our results shed light on classification for physical processes, relevant for applications in sensing. Finally, we show that QCNNs with properly chosen ground state embeddings can be used for fluid dynamics problems, expressing shock wave solutions with good generalization and proven trainability.

Quantum Quantile Mechanics: Solving Stochastic Differential Equations for Generating Time-Series

Aug 10, 2021

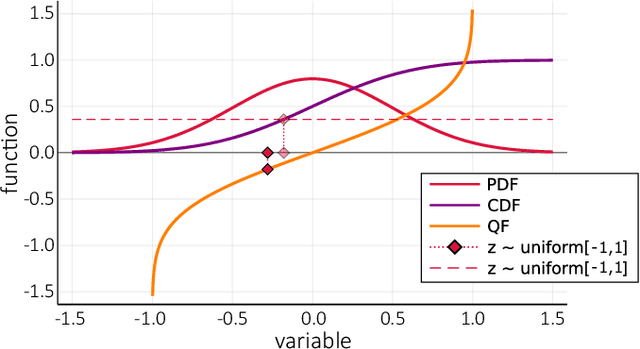

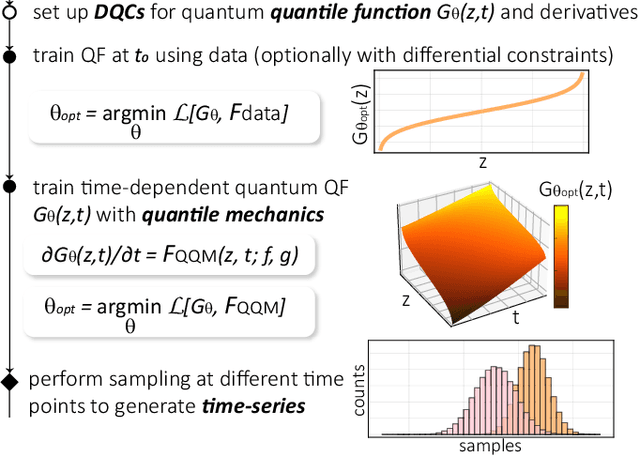

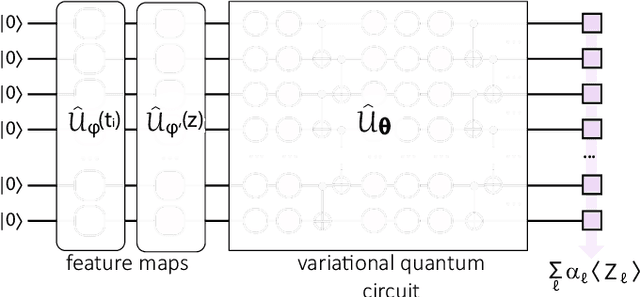

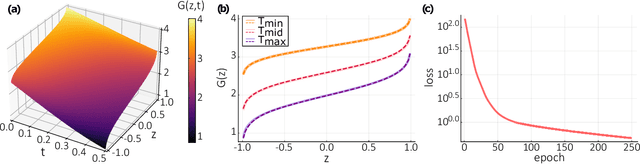

Abstract: We propose a quantum algorithm for sampling from a solution of stochastic differential equations (SDEs). Using differentiable quantum circuits (DQCs) with a feature map encoding of latent variables, we represent the quantile function for an underlying probability distribution and extract samples as DQC expectation values. Using quantile mechanics we propagate the system in time, thereby allowing for time-series generation. We test the method by simulating the Ornstein-Uhlenbeck process and sampling at times different from the initial point, as required in financial analysis and dataset augmentation. Additionally, we analyse continuous quantum generative adversarial networks (qGANs), and show that they represent quantile functions with a modified (reordered) shape that impedes their efficient time-propagation. Our results shed light on the connection between quantum quantile mechanics (QQM) and qGANs for SDE-based distributions, and point to the importance of differential constraints for model training, analogously to the recent success of physics-informed neural networks.
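
The role of the quantile function in sampling can be seen in a purely classical sketch. For the Ornstein-Uhlenbeck process dX = theta (mu - X) dt + sigma dW started at a fixed point x0, the distribution at time t is Gaussian with a known mean and variance, so its quantile function is available in closed form. The code below uses that closed form in place of the trained quantum circuit from the abstract; the function names and the parameter values (`x0`, `theta`, `mu`, `sigma`) are assumptions chosen for illustration. A uniform latent variable z is drawn and mapped through the quantile function to give a sample at time t.

```python
import math
import random
from statistics import NormalDist, fmean

def ou_quantile(z, t, x0=1.0, theta=1.0, mu=0.0, sigma=0.5):
    """Closed-form quantile function of X_t for an OU process with X_0 = x0."""
    mean = mu + (x0 - mu) * math.exp(-theta * t)
    var = sigma**2 * (1.0 - math.exp(-2.0 * theta * t)) / (2.0 * theta)
    return mean + math.sqrt(var) * NormalDist().inv_cdf(z)

def sample_ou(n, t, seed=0):
    """Draw n samples of X_t by pushing uniform latent z through the quantile."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        z = rng.random()
        if z > 0.0:  # inv_cdf is defined only on the open interval (0, 1)
            samples.append(ou_quantile(z, t))
    return samples

samples = sample_ou(20000, t=1.0)
print(fmean(samples), math.exp(-1.0))  # sample mean vs. analytic mean
```

Evaluating the same function at different values of `t` gives samples at different times, which is the classical analogue of propagating the quantile function in time.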
