Vincent E. Elfving

Experimental differentiation and extremization with analog quantum circuits

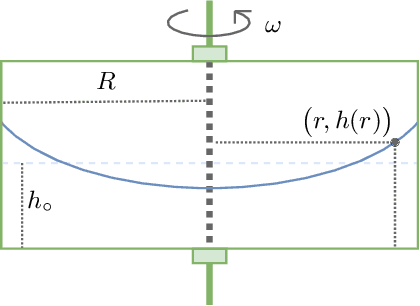

Oct 23, 2025

Abstract:Solving and optimizing differential equations (DEs) is ubiquitous in both engineering and fundamental science. The promise of quantum architectures to accelerate scientific computing has thus naturally raised interest in how efficiently quantum algorithms can solve DEs. Differentiable quantum circuits (DQC) offer a viable route to compute DE solutions using a variational approach amenable to existing quantum computers, by producing a machine-learnable surrogate of the solution. Quantum extremal learning (QEL) complements such an approach by finding extreme points in the output of learnable models of unknown (implicit) functions, offering a powerful tool to bypass a full DE solution in cases where the crux consists in retrieving solution extrema. In this work, we provide the results from the first experimental demonstration of both DQC and QEL, displaying their performance on a synthetic use case. Whilst both DQC and QEL are expected to require digital quantum hardware, we successfully challenge this assumption by running a closed-loop instance on a commercial analog quantum computer, based upon neutral atom technology.

Geometric quantum machine learning of BQP$^A$ protocols and latent graph classifiers

Feb 06, 2024

Abstract:Geometric quantum machine learning (GQML) aims to embed problem symmetries for learning efficient solving protocols. However, the question remains if (G)QML can be routinely used for constructing protocols with an exponential separation from classical analogs. In this Letter we consider Simon's problem for learning properties of Boolean functions, and show that this can be related to an unsupervised circuit classification problem. Using the workflow of geometric QML, we learn from first principles Simon's algorithm, thus discovering an example of BQP$^A\neq$BPP protocol with respect to some dataset (oracle $A$). Our key findings include the development of an equivariant feature map for embedding Boolean functions, based on twirling with respect to identified bitflip and permutational symmetries, and measurement based on invariant observables with a sampling advantage. The proposed workflow points to the importance of data embeddings and classical post-processing, while keeping the variational circuit as a trivial identity operator. Next, developing the intuition for the function learning, we visualize instances as directed computational hypergraphs, and observe that the GQML protocol can access their global topological features for distinguishing bijective and surjective functions. Finally, we discuss the prospects for learning other BQP$^A$-type protocols, and conjecture that this depends on the ability of simplifying embeddings-based oracles $A$ applied as a linear combination of unitaries.

Qadence: a differentiable interface for digital-analog programs

Jan 18, 2024

Abstract:Digital-analog quantum computing (DAQC) is an alternative paradigm for universal quantum computation combining digital single-qubit gates with global analog operations acting on a register of interacting qubits. Currently, no available open-source software is tailored to express, differentiate, and execute programs within the DAQC paradigm. In this work, we address this shortfall by presenting Qadence, a high-level programming interface for building complex digital-analog quantum programs developed at Pasqal. Thanks to its flexible interface, native differentiability, and focus on real-device execution, Qadence aims at advancing research on variational quantum algorithms built for native DAQC platforms such as Rydberg atom arrays.

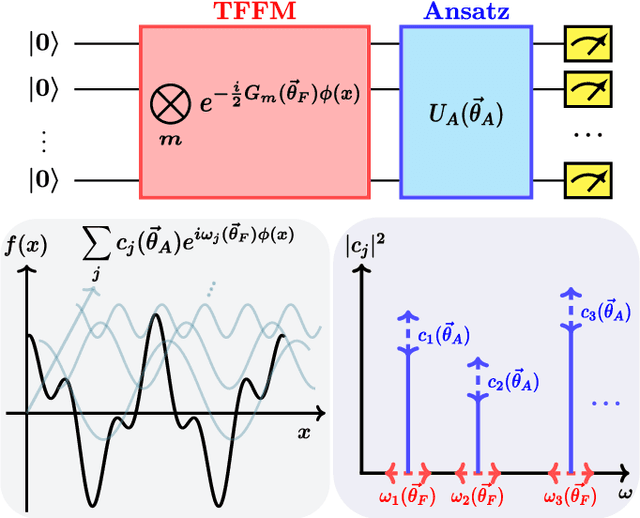

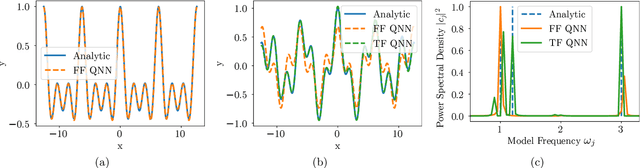

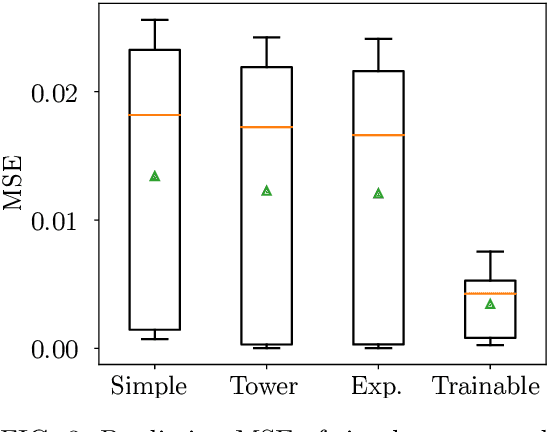

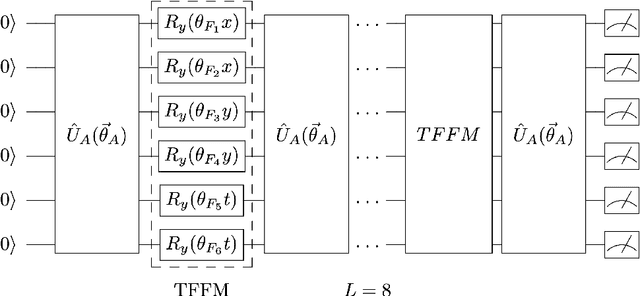

Let Quantum Neural Networks Choose Their Own Frequencies

Sep 06, 2023

Abstract:Parameterized quantum circuits as machine learning models are typically well described by their representation as a partial Fourier series of the input features, with frequencies uniquely determined by the feature map's generator Hamiltonians. Ordinarily, these data-encoding generators are chosen in advance, fixing the space of functions that can be represented. In this work we consider a generalization of quantum models to include a set of trainable parameters in the generator, leading to a trainable frequency (TF) quantum model. We numerically demonstrate how TF models can learn generators with desirable properties for solving the task at hand, including non-regularly spaced frequencies in their spectra and flexible spectral richness. Finally, we showcase the real-world effectiveness of our approach, demonstrating an improved accuracy in solving the Navier-Stokes equations using a TF model with only a single parameter added to each encoding operation. Since TF models encompass conventional fixed frequency models, they may offer a sensible default choice for variational quantum machine learning.

What can we learn from quantum convolutional neural networks?

Aug 31, 2023

Abstract:We can learn from analyzing quantum convolutional neural networks (QCNNs) that: 1) working with quantum data can be perceived as embedding physical system parameters through a hidden feature map; 2) their high performance for quantum phase recognition can be attributed to generation of a very suitable basis set during the ground state embedding, where quantum criticality of spin models leads to basis functions with rapidly changing features; 3) pooling layers of QCNNs are responsible for picking those basis functions that can contribute to forming a high-performing decision boundary, and the learning process corresponds to adapting the measurement such that few-qubit operators are mapped to full-register observables; 4) generalization of QCNN models strongly depends on the embedding type, and that rotation-based feature maps with the Fourier basis require careful feature engineering; 5) accuracy and generalization of QCNNs with readout based on a limited number of shots favor the ground state embeddings and associated physics-informed models. We demonstrate these points in simulation, where our results shed light on classification for physical processes, relevant for applications in sensing. Finally, we show that QCNNs with properly chosen ground state embeddings can be used for fluid dynamics problems, expressing shock wave solutions with good generalization and proven trainability.

Harmonic (Quantum) Neural Networks

Dec 14, 2022

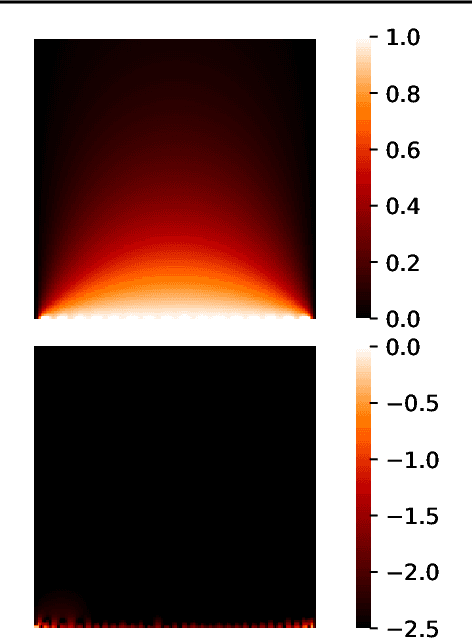

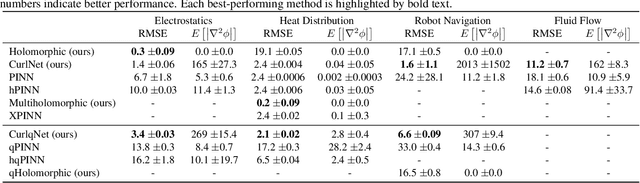

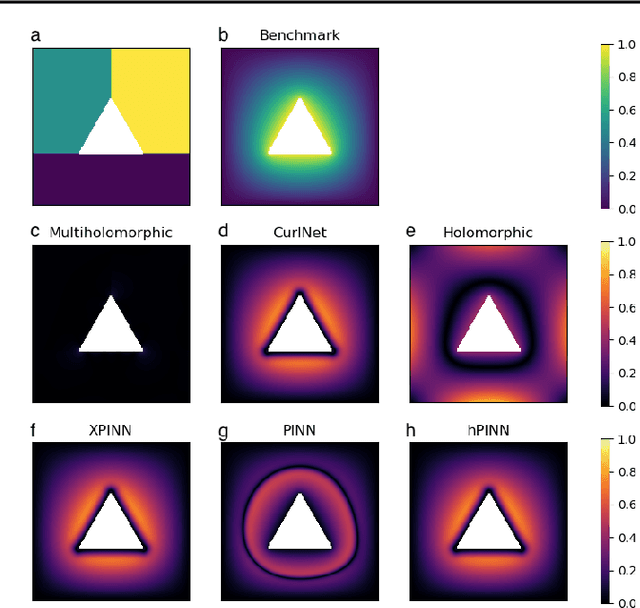

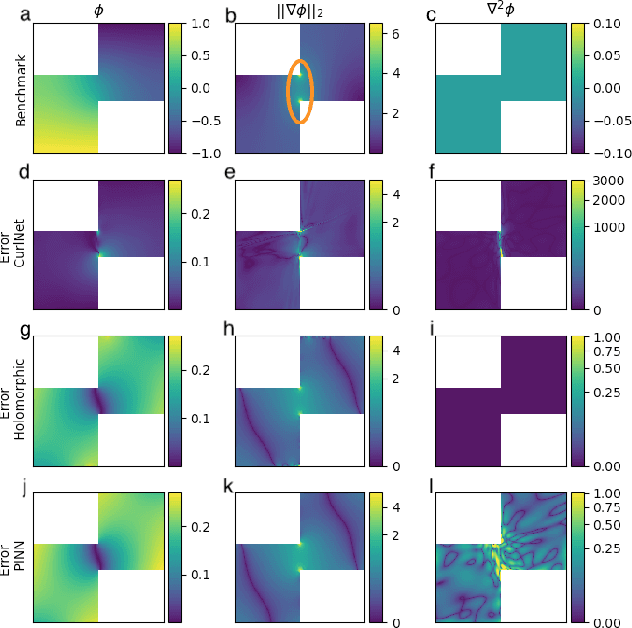

Abstract:Harmonic functions are abundant in nature, appearing in limiting cases of Maxwell's equations, the Navier-Stokes equations, the heat equation and the wave equation. Consequently, harmonic functions have many applications, ranging from industrial process optimisation to robotic path planning and the calculation of first exit times of random walks. Despite their ubiquity and relevance, there have been few attempts to develop effective means of representing harmonic functions in the context of machine learning architectures, either in machine learning on classical computers, or in the nascent field of quantum machine learning. Architectures which impose or encourage an inductive bias towards harmonic functions would facilitate data-driven modelling and the solution of inverse problems in a range of applications. For classical neural networks, it has already been established how leveraging inductive biases can in general lead to improved performance of learning algorithms. The introduction of such inductive biases within a quantum machine learning setting is instead still in its nascent stages. In this work, we derive exactly-harmonic (conventional- and quantum-) neural networks in two dimensions for simply-connected domains by leveraging the characteristics of holomorphic complex functions. We then demonstrate how these can be approximately extended to multiply-connected two-dimensional domains using techniques inspired by domain decomposition in physics-informed neural networks. We further provide architectures and training protocols to effectively impose approximately harmonic constraints in three dimensions and higher, and as a corollary we report divergence-free network architectures in arbitrary dimensions. Our approaches are demonstrated with applications to heat transfer, electrostatics and robot navigation, with comparisons to physics-informed neural networks included.

Financial Risk Management on a Neutral Atom Quantum Processor

Dec 06, 2022

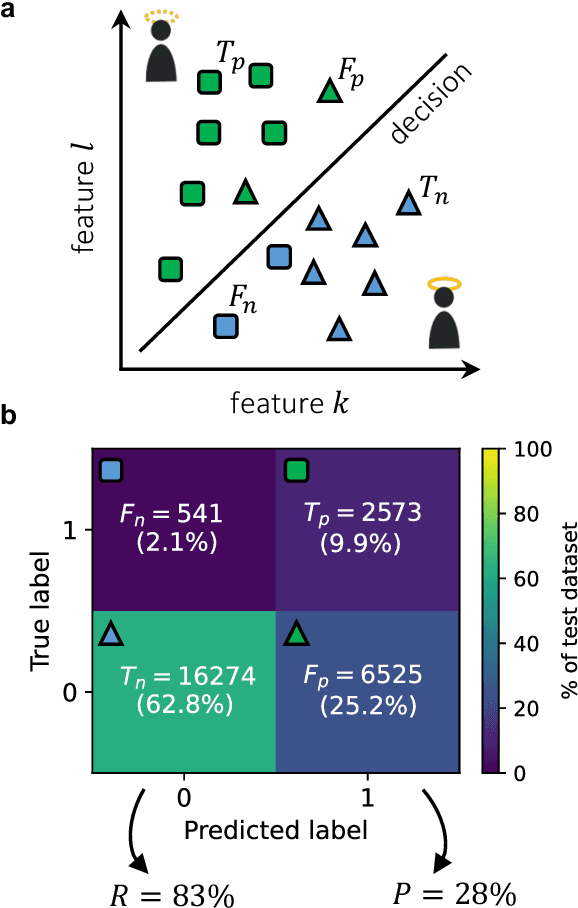

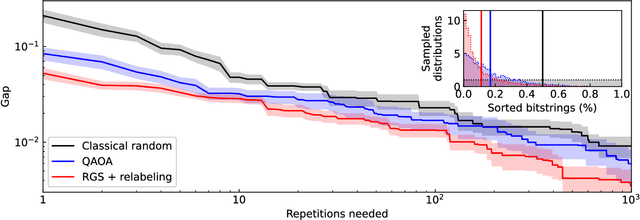

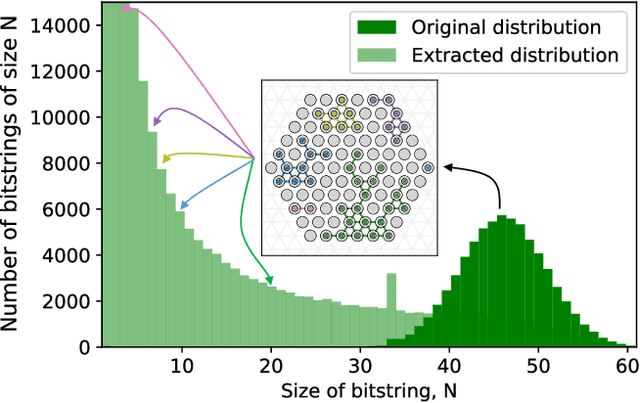

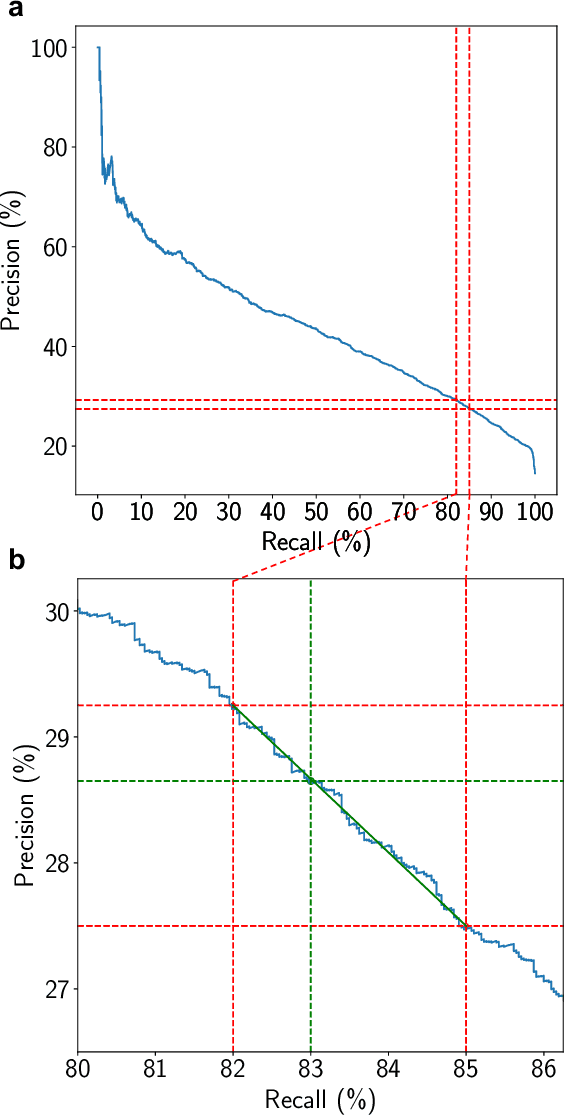

Abstract:Machine Learning models capable of handling the large datasets collected in the financial world can often become black boxes that are expensive to run. The quantum computing paradigm suggests new optimization techniques that, combined with classical algorithms, may deliver competitive, faster and more interpretable models. In this work we propose a quantum-enhanced machine learning solution for the prediction of credit rating downgrades, also known as fallen-angels forecasting in the financial risk management field. We implement this solution on a neutral atom Quantum Processing Unit with up to 60 qubits on a real-life dataset. We report competitive performances against the state-of-the-art Random Forest benchmark whilst our model achieves better interpretability and comparable training times. We examine how to improve performance in the near term, validating our ideas with Tensor Networks-based numerical simulations.

Protocols for classically training quantum generative models on probability distributions

Oct 24, 2022

Abstract:Quantum Generative Modelling (QGM) relies on preparing quantum states and generating samples from these states as hidden - or known - probability distributions. As distributions from some classes of quantum states (circuits) are inherently hard to sample classically, QGM represents an excellent testbed for quantum supremacy experiments. Furthermore, generative tasks are increasingly relevant for industrial machine learning applications, and thus QGM is a strong candidate for demonstrating a practical quantum advantage. However, this requires that quantum circuits are trained to represent industrially relevant distributions, and the corresponding training stage has an extensive training cost for current quantum hardware in practice. In this work, we propose protocols for classical training of QGMs based on circuits of the specific type that admit an efficient gradient computation, while remaining hard to sample. In particular, we consider Instantaneous Quantum Polynomial (IQP) circuits and their extensions. Showing their classical simulability in terms of the time complexity, sparsity and anti-concentration properties, we develop a classically tractable way of simulating their output probability distributions, allowing classical training to a target probability distribution. The corresponding quantum sampling from IQPs can be performed efficiently, unlike when using classical sampling. We numerically demonstrate the end-to-end training of IQP circuits using probability distributions for up to 30 qubits on a regular desktop computer. When applied to industrially relevant distributions this combination of classical training with quantum sampling represents an avenue for reaching advantage in the NISQ era.

Integral Transforms in a Physics-Informed (Quantum) Neural Network setting: Applications & Use-Cases

Jun 28, 2022

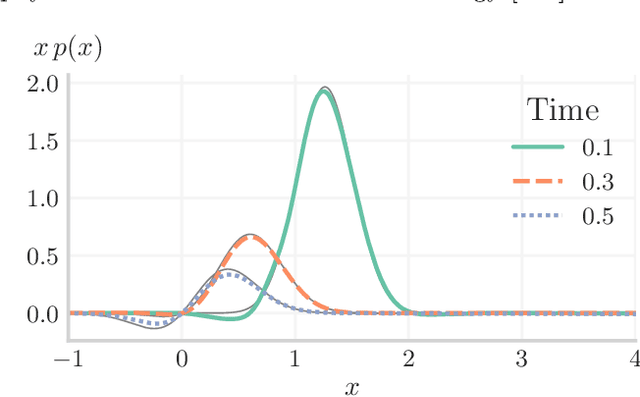

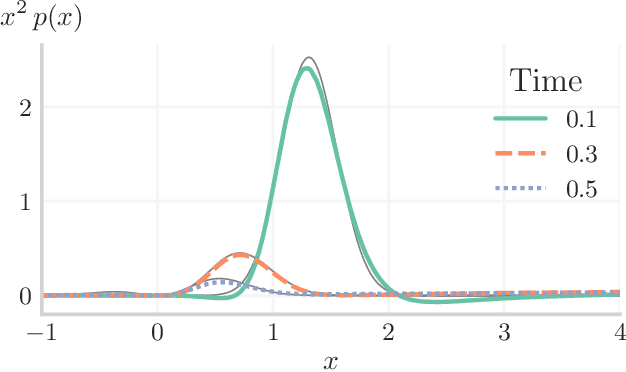

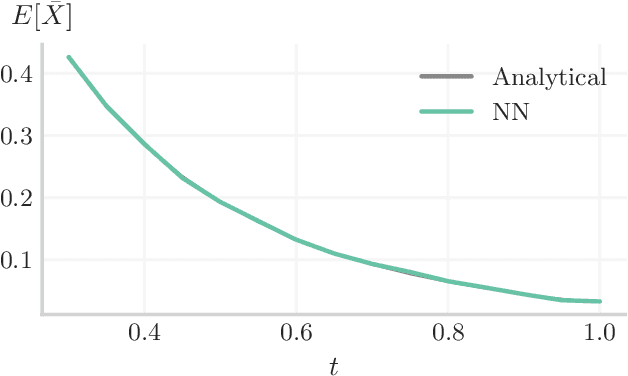

Abstract:In many computational problems in engineering and science, function or model differentiation is essential, but integration is also needed. An important class of computational problems includes so-called integro-differential equations, which involve both integrals and derivatives of a function. In another example, stochastic differential equations can be written in terms of a partial differential equation of a probability density function of the stochastic variable. To learn characteristics of the stochastic variable based on the density function, specific integral transforms, namely moments, of the density function need to be calculated. Recently, the machine learning paradigm of Physics-Informed Neural Networks emerged with increasing popularity as a method to solve differential equations by leveraging automatic differentiation. In this work, we propose to augment the paradigm of Physics-Informed Neural Networks with automatic integration in order to compute complex integral transforms on trained solutions, and to solve integro-differential equations where integrals are computed on-the-fly during training. Furthermore, we showcase the techniques in various application settings, numerically simulating quantum computer-based neural networks as well as classical neural networks.

Quantum Extremal Learning

May 05, 2022

Abstract:We propose a quantum algorithm for "extremal learning", which is the process of finding the input to a hidden function that extremizes the function output, without having direct access to the hidden function, given only partial input-output (training) data. The algorithm, called quantum extremal learning (QEL), consists of a parametric quantum circuit that is variationally trained to model data input-output relationships and where a trainable quantum feature map, that encodes the input data, is analytically differentiated in order to find the coordinate that extremizes the model. This enables the combination of established quantum machine learning modelling with established quantum optimization, on a single circuit/quantum computer. We have tested our algorithm on a range of classical datasets based on either discrete or continuous input variables, both of which are compatible with the algorithm. In the case of discrete variables, we test our algorithm on synthetic problems formulated based on Max-Cut problem generators and also considering higher order correlations in the input-output relationships. In the case of continuous variables, we test our algorithm on synthetic datasets in 1D and simple ordinary differential functions. We find that the algorithm is able to successfully find the extremal value of such problems, even when the training dataset is sparse or a small fraction of the input configuration space. We additionally show how the algorithm can be used for much more general cases of higher dimensionality, complex differential equations, and with full flexibility in the choice of both modeling and optimization ansatz. We envision that due to its general framework and simple construction, the QEL algorithm will be able to solve a wide variety of applications in different fields, opening up areas of further research.
