Oleksandr Kyriienko

Photonics-Enhanced Graph Convolutional Networks

Dec 17, 2025

Abstract:Photonics can offer a hardware-native route for machine learning (ML). However, efficient deployment of photonics-enhanced ML requires hybrid workflows that integrate optical processing with conventional CPU/GPU based neural network architectures. Here, we propose such a workflow that combines photonic positional embeddings (PEs) with advanced graph ML models. We introduce a photonics-based method that augments graph convolutional networks (GCNs) with PEs derived from light propagation on synthetic frequency lattices whose couplings match the input graph. We simulate propagation and readout to obtain internode intensity correlation matrices, which are used as PEs in GCNs to provide global structural information. Evaluated on Long Range Graph Benchmark molecular datasets, the method outperforms baseline GCNs with Laplacian based PEs, achieving $6.3\%$ lower mean absolute error for regression and $2.3\%$ higher average precision for classification tasks using a two-layer GCN as a baseline. When implemented in high repetition rate photonic hardware, correlation measurements can enable fast feature generation by bypassing digital simulation of PEs. Our results show that photonic PEs improve GCN performance and support optical acceleration of graph ML.

Can Geometric Quantum Machine Learning Lead to Advantage in Barcode Classification?

Sep 02, 2024

Abstract:We consider the problem of distinguishing two vectors (visualized as images or barcodes) and learning if they are related to one another. For this, we develop a geometric quantum machine learning (GQML) approach with embedded symmetries that allows for the classification of similar and dissimilar pairs based on global correlations, and enables generalization from just a few samples. Unlike GQML algorithms developed to date, we propose to focus on symmetry-aware measurement adaptation that outperforms unitary parametrizations. We compare GQML for similarity testing against classical deep neural networks and convolutional neural networks with Siamese architectures. We show that quantum networks largely outperform their classical counterparts. We explain this difference in performance by analyzing correlated distributions used for composing our dataset. We relate the similarity testing with problems that showcase a proven maximal separation between the BQP complexity class and the polynomial hierarchy. While the ability to achieve advantage largely depends on how data are loaded, we discuss how similar problems can benefit from quantum machine learning.

Geometric quantum machine learning of BQP$^A$ protocols and latent graph classifiers

Feb 06, 2024

Abstract:Geometric quantum machine learning (GQML) aims to embed problem symmetries for learning efficient solving protocols. However, the question remains if (G)QML can be routinely used for constructing protocols with an exponential separation from classical analogs. In this Letter we consider Simon's problem for learning properties of Boolean functions, and show that this can be related to an unsupervised circuit classification problem. Using the workflow of geometric QML, we learn from first principles Simon's algorithm, thus discovering an example of BQP$^A\neq$BPP protocol with respect to some dataset (oracle $A$). Our key findings include the development of an equivariant feature map for embedding Boolean functions, based on twirling with respect to identified bitflip and permutational symmetries, and measurement based on invariant observables with a sampling advantage. The proposed workflow points to the importance of data embeddings and classical post-processing, while keeping the variational circuit as a trivial identity operator. Next, developing the intuition for the function learning, we visualize instances as directed computational hypergraphs, and observe that the GQML protocol can access their global topological features for distinguishing bijective and surjective functions. Finally, we discuss the prospects for learning other BQP$^A$-type protocols, and conjecture that this depends on the ability of simplifying embeddings-based oracles $A$ applied as a linear combination of unitaries.
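
For readers unfamiliar with Simon's problem, the classical post-processing step can be illustrated with a small sketch. It assumes the textbook setting, in which each run of Simon's circuit returns a bitstring $y$ drawn uniformly from those satisfying $y \cdot s = 0 \pmod 2$ for a hidden nonzero string $s$. The sampling is emulated classically here, and the function names (`dot2`, `sample_outcome`, `recover_secret`) are hypothetical; this is not the GQML workflow of the paper.

```python
import random

def dot2(a, b):
    """Bitwise inner product of two integers, modulo 2."""
    return bin(a & b).count("1") % 2

def sample_outcome(s, n, rng):
    """Emulate one measurement: y uniform over {y : y.s = 0 mod 2}."""
    while True:
        y = rng.randrange(2 ** n)
        if dot2(y, s) == 0:
            return y

def recover_secret(s, n, seed=0):
    """Collect outcomes until exactly one nonzero candidate for s remains.

    The candidate search is brute force (exponential in n) to keep the
    sketch short; Gaussian elimination over GF(2) would be used in practice.
    """
    if s == 0:
        raise ValueError("the hidden string s must be nonzero")
    rng = random.Random(seed)
    ys = []
    while True:
        ys.append(sample_outcome(s, n, rng))
        candidates = [c for c in range(1, 2 ** n)
                      if all(dot2(y, c) == 0 for y in ys)]
        if len(candidates) == 1:
            return candidates[0], len(ys)
```

Calling `recover_secret(0b101, 3)` returns the hidden string together with the number of emulated measurements that were needed to pin it down.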

What can we learn from quantum convolutional neural networks?

Aug 31, 2023

Abstract:We can learn from analyzing quantum convolutional neural networks (QCNNs) that: 1) working with quantum data can be perceived as embedding physical system parameters through a hidden feature map; 2) their high performance for quantum phase recognition can be attributed to generation of a very suitable basis set during the ground state embedding, where quantum criticality of spin models leads to basis functions with rapidly changing features; 3) pooling layers of QCNNs are responsible for picking those basis functions that can contribute to forming a high-performing decision boundary, and the learning process corresponds to adapting the measurement such that few-qubit operators are mapped to full-register observables; 4) generalization of QCNN models strongly depends on the embedding type, and that rotation-based feature maps with the Fourier basis require careful feature engineering; 5) accuracy and generalization of QCNNs with readout based on a limited number of shots favor the ground state embeddings and associated physics-informed models. We demonstrate these points in simulation, where our results shed light on classification for physical processes, relevant for applications in sensing. Finally, we show that QCNNs with properly chosen ground state embeddings can be used for fluid dynamics problems, expressing shock wave solutions with good generalization and proven trainability.

Protocols for classically training quantum generative models on probability distributions

Oct 24, 2022

Abstract:Quantum Generative Modelling (QGM) relies on preparing quantum states and generating samples from these states as hidden - or known - probability distributions. As distributions from some classes of quantum states (circuits) are inherently hard to sample classically, QGM represents an excellent testbed for quantum supremacy experiments. Furthermore, generative tasks are increasingly relevant for industrial machine learning applications, and thus QGM is a strong candidate for demonstrating a practical quantum advantage. However, this requires that quantum circuits are trained to represent industrially relevant distributions, and the corresponding training stage has an extensive training cost for current quantum hardware in practice. In this work, we propose protocols for classical training of QGMs based on circuits of the specific type that admit an efficient gradient computation, while remaining hard to sample. In particular, we consider Instantaneous Quantum Polynomial (IQP) circuits and their extensions. Showing their classical simulability in terms of the time complexity, sparsity and anti-concentration properties, we develop a classically tractable way of simulating their output probability distributions, allowing classical training to a target probability distribution. The corresponding quantum sampling from IQPs can be performed efficiently, unlike when using classical sampling. We numerically demonstrate the end-to-end training of IQP circuits using probability distributions for up to 30 qubits on a regular desktop computer. When applied to industrially relevant distributions this combination of classical training with quantum sampling represents an avenue for reaching advantage in the NISQ era.
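
To make the structure of an IQP circuit concrete, the sketch below computes its output distribution by brute force for a few qubits. It assumes the common form $H^{\otimes n} D H^{\otimes n}|0\rangle$, where $D$ is diagonal with phases $e^{i\phi(z)}$ and $\phi(z) = \sum_j \theta_j z_j + \sum_{j<k} \theta_{jk} z_j z_k$. The function name and parametrization are illustrative assumptions, and the exponential-cost enumeration shown here is not the tractable training protocol described in the abstract.

```python
import cmath
import itertools

def iqp_probabilities(n, single, pair):
    """Output probabilities of an n-qubit IQP circuit, by direct enumeration.

    single: list of n single-qubit angles theta_j.
    pair:   dict mapping (j, k) with j < k to two-qubit angles theta_jk.
    The amplitude of bitstring x is 2^{-n} * sum_z (-1)^{x.z} exp(i phi(z)).
    """
    bitstrings = list(itertools.product((0, 1), repeat=n))
    # Diagonal phase applied to each computational basis state z.
    phases = []
    for z in bitstrings:
        phi = sum(single[j] * z[j] for j in range(n))
        phi += sum(angle * z[j] * z[k] for (j, k), angle in pair.items())
        phases.append(cmath.exp(1j * phi))
    probs = {}
    for x in bitstrings:
        amp = sum(
            (-1) ** sum(xi * zi for xi, zi in zip(x, z)) * ph
            for z, ph in zip(bitstrings, phases)
        ) / 2 ** n
        probs[x] = abs(amp) ** 2
    return probs
```

With all angles set to zero the circuit reduces to the identity, so all probability sits on the all-zeros bitstring; nonzero two-qubit angles spread the distribution over many bitstrings.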

Quantum Model-Discovery

Nov 11, 2021

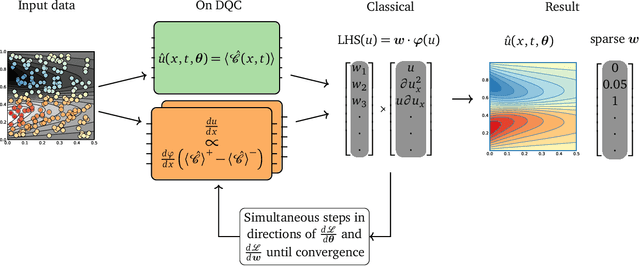

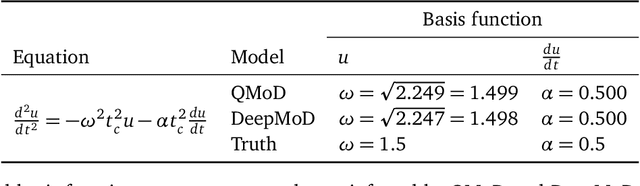

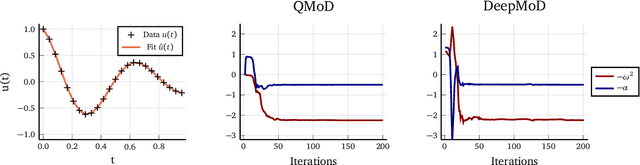

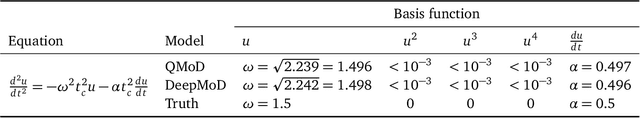

Abstract:Quantum computing promises to speed up some of the most challenging problems in science and engineering. Quantum algorithms have been proposed showing theoretical advantages in applications ranging from chemistry to logistics optimization. Many problems appearing in science and engineering can be rewritten as a set of differential equations. Quantum algorithms for solving differential equations have shown a provable advantage in the fault-tolerant quantum computing regime, where deep and wide quantum circuits can be used to solve large linear systems like partial differential equations (PDEs) efficiently. Recently, variational approaches to solving non-linear PDEs also with near-term quantum devices were proposed. One of the most promising general approaches is based on recent developments in the field of scientific machine learning for solving PDEs. We extend the applicability of near-term quantum computers to more general scientific machine learning tasks, including the discovery of differential equations from a dataset of measurements. We use differentiable quantum circuits (DQCs) to solve equations parameterized by a library of operators, and perform regression on a combination of data and equations. Our results show a promising path to Quantum Model Discovery (QMoD), on the interface between classical and quantum machine learning approaches. We demonstrate successful parameter inference and equation discovery using QMoD on different systems including a second-order, ordinary differential equation and a non-linear, partial differential equation.

Quantum Quantile Mechanics: Solving Stochastic Differential Equations for Generating Time-Series

Aug 10, 2021

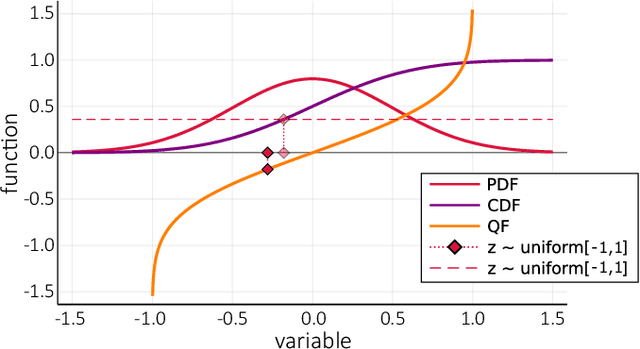

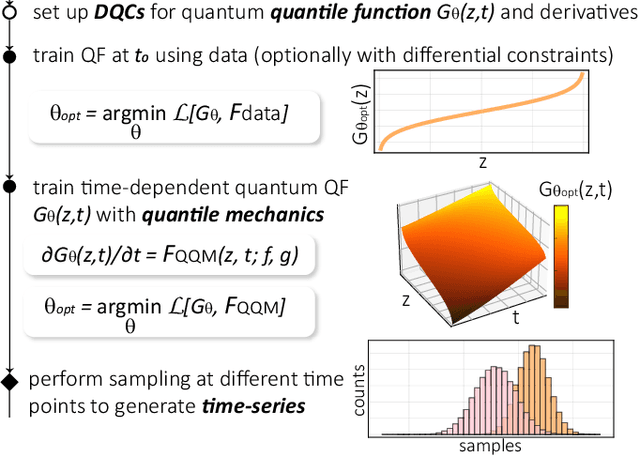

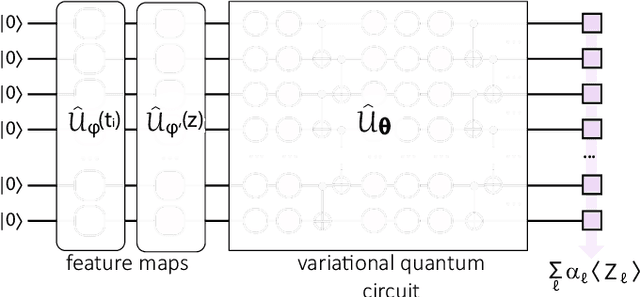

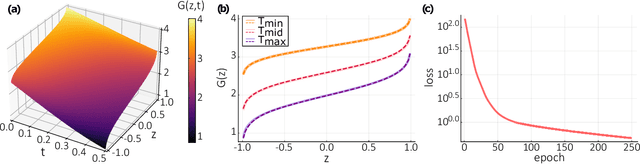

Abstract:We propose a quantum algorithm for sampling from a solution of stochastic differential equations (SDEs). Using differentiable quantum circuits (DQCs) with a feature map encoding of latent variables, we represent the quantile function for an underlying probability distribution and extract samples as DQC expectation values. Using quantile mechanics we propagate the system in time, thereby allowing for time-series generation. We test the method by simulating the Ornstein-Uhlenbeck process and sampling at times different from the initial point, as required in financial analysis and dataset augmentation. Additionally, we analyse continuous quantum generative adversarial networks (qGANs), and show that they represent quantile functions with a modified (reordered) shape that impedes their efficient time-propagation. Our results shed light on the connection between quantum quantile mechanics (QQM) and qGANs for SDE-based distributions, and point to the importance of differential constraints for model training, analogously with the recent success of physics-informed neural networks.
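
The idea of sampling through a quantile function can be illustrated classically. The sketch below assumes the standard Ornstein-Uhlenbeck process $dX_t = \theta(\mu - X_t)\,dt + \sigma\,dW_t$ started at a fixed point $x_0$, for which the quantile function is known in closed form; samples are obtained by feeding uniform latent variables $z \in (0, 1)$ into it. The parameter values and function names are illustrative assumptions, and the closed form stands in for the quantum circuit representation that the paper trains.

```python
import math
import random
from statistics import NormalDist

def ou_quantile(z, t, x0=1.0, theta=1.0, mu=0.0, sigma=0.5):
    """Quantile function Q(z, t) of an OU process started at x0.

    X_t is Gaussian with mean mu + (x0 - mu) exp(-theta t) and
    variance sigma^2 / (2 theta) * (1 - exp(-2 theta t)).
    """
    mean = mu + (x0 - mu) * math.exp(-theta * t)
    var = sigma ** 2 / (2 * theta) * (1 - math.exp(-2 * theta * t))
    return mean + math.sqrt(var) * NormalDist().inv_cdf(z)

def sample_ou(t, n, seed=0, **params):
    """Draw n samples of X_t by pushing uniform latents through Q(z, t)."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        # Clip away from 0 and 1, where the normal quantile diverges.
        z = min(max(rng.random(), 1e-12), 1 - 1e-12)
        samples.append(ou_quantile(z, t, **params))
    return samples
```

Evaluating `sample_ou` at several values of `t` gives snapshots of the distribution as it relaxes from the initial point towards its stationary form, which is the time propagation that quantile mechanics describes.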
