Niklas Heim

Surrogate-Based Differentiable Pipeline for Shape Optimization

Nov 13, 2025

Abstract:Gradient-based optimization of engineering designs is limited by non-differentiable components in the typical computer-aided engineering (CAE) workflow, which calculates performance metrics from design parameters. While gradient-based methods could provide noticeable speed-ups in high-dimensional design spaces, codes for meshing, physical simulations, and other common components are not differentiable even if the math or physics underneath them is. We propose replacing non-differentiable pipeline components with surrogate models which are inherently differentiable. Using a toy example of aerodynamic shape optimization, we demonstrate an end-to-end differentiable pipeline where a 3D U-Net full-field surrogate replaces both meshing and simulation steps by training it on the mapping between the signed distance field (SDF) of the shape and the fields of interest. This approach enables gradient-based shape optimization without the need for differentiable solvers, which can be useful in situations where adjoint methods are unavailable and/or hard to implement.

Qadence: a differentiable interface for digital-analog programs

Jan 18, 2024

Abstract:Digital-analog quantum computing (DAQC) is an alternative paradigm for universal quantum computation combining digital single-qubit gates with global analog operations acting on a register of interacting qubits. Currently, no available open-source software is tailored to express, differentiate, and execute programs within the DAQC paradigm. In this work, we address this shortfall by presenting Qadence, a high-level programming interface for building complex digital-analog quantum programs developed at Pasqal. Thanks to its flexible interface, native differentiability, and focus on real-device execution, Qadence aims at advancing research on variational quantum algorithms built for native DAQC platforms such as Rydberg atom arrays.

Quantum Model-Discovery

Nov 11, 2021

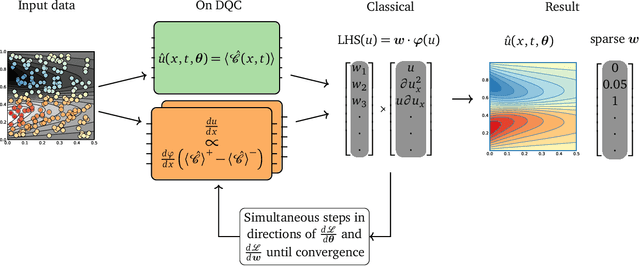

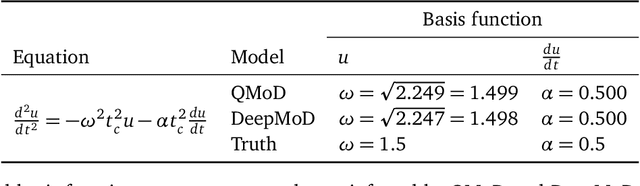

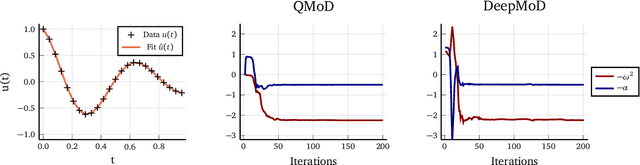

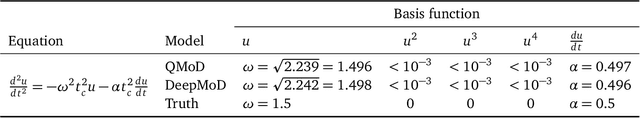

Abstract:Quantum computing promises to speed up some of the most challenging problems in science and engineering. Quantum algorithms have been proposed showing theoretical advantages in applications ranging from chemistry to logistics optimization. Many problems appearing in science and engineering can be rewritten as a set of differential equations. Quantum algorithms for solving differential equations have shown a provable advantage in the fault-tolerant quantum computing regime, where deep and wide quantum circuits can be used to solve large linear systems like partial differential equations (PDEs) efficiently. Recently, variational approaches to solving non-linear PDEs with near-term quantum devices have also been proposed. One of the most promising general approaches is based on recent developments in the field of scientific machine learning for solving PDEs. We extend the applicability of near-term quantum computers to more general scientific machine learning tasks, including the discovery of differential equations from a dataset of measurements. We use differentiable quantum circuits (DQCs) to solve equations parameterized by a library of operators, and perform regression on a combination of data and equations. Our results show a promising path to Quantum Model Discovery (QMoD), at the interface between classical and quantum machine learning approaches. We demonstrate successful parameter inference and equation discovery using QMoD on different systems, including a second-order ordinary differential equation and a non-linear partial differential equation.

Neural Power Units

Jun 05, 2020

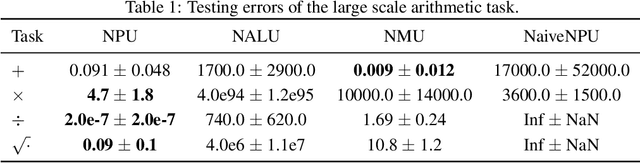

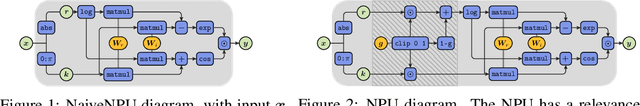

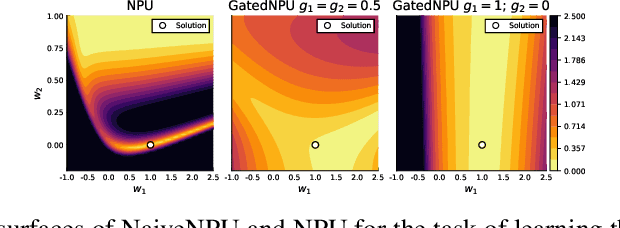

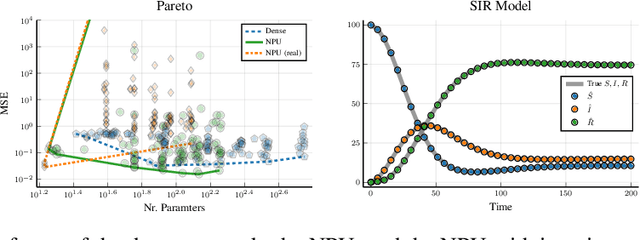

Abstract:Conventional Neural Networks can approximate simple arithmetic operations, but fail to generalize beyond the range of numbers that were seen during training. Neural Arithmetic Units aim to overcome this difficulty, but current arithmetic units are either limited to operating on positive numbers or can only represent a subset of arithmetic operations. We introduce the Neural Power Unit (NPU) that operates on the full domain of real numbers and is capable of learning arbitrary power functions in a single layer. The NPU thus fixes the shortcomings of existing arithmetic units and extends their expressivity. We achieve this by using complex arithmetic without requiring a conversion of the network to complex numbers. A simplification of the unit to the RealNPU yields a highly interpretable model. We show that the NPUs outperform their competitors in terms of accuracy and sparsity on artificial arithmetic datasets, and that the RealNPU can discover the governing equations of a dynamical system from data alone.
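As a rough illustration of how a product of powers can be evaluated for negative inputs without complex-valued weights, the sketch below uses the identity x^w = exp(w log x) with log x = log|x| + iπ for x < 0 and keeps only the real part. The function name `real_npu` and the list-based layout are assumptions made for this example; this is not the authors' implementation, and it assumes all inputs are non-zero.

```python
import math

def real_npu(x, W):
    """Sketch of a RealNPU-style layer: out[j] = Re(prod_i x[i] ** W[j][i]).

    x is a list of non-zero real inputs, W a list of weight rows.
    Hypothetical helper for illustration only.
    """
    out = []
    for row in W:
        # Magnitude part: exp(sum_i w_i * log|x_i|)
        log_mag = sum(w * math.log(abs(xi)) for w, xi in zip(row, x))
        # Sign part: every negative input adds a phase of pi * w_i
        phase = math.pi * sum(w for w, xi in zip(row, x) if xi < 0)
        out.append(math.exp(log_mag) * math.cos(phase))
    return out

# Rows encode x0^2 * x1, x0 * x1, and x0 / x1 for x = (-2, 3)
print(real_npu([-2.0, 3.0], [[2.0, 1.0], [1.0, 1.0], [1.0, -1.0]]))
```

Up to floating-point error, the three outputs are 12, -6, and -2/3. For fractional exponents of negative inputs the true power is complex, and this sketch returns only its real part.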

Rodent: Relevance determination in ODE

Dec 02, 2019

Abstract:From a set of observed trajectories of a partially observed system, we aim to learn its underlying (physical) process without having to make too many assumptions about the generating model. We start with a very general, over-parameterized ordinary differential equation (ODE) of order N and learn the minimal complexity of the model, by which we mean both the order of the ODE and the minimum number of non-zero parameters that are needed to solve the problem. The minimal complexity is found by applying a combination of the Variational Auto-Encoder (VAE) and Automatic Relevance Determination (ARD) to the problem of learning the parameters of an ODE; we call the resulting method Rodent. We show that it is possible to learn not only one specific model for a single process, but a manifold of models representing harmonic signals in general.

Adaptive Anomaly Detection in Chaotic Time Series with a Spatially Aware Echo State Network

Sep 02, 2019

Abstract:This work builds an automated anomaly detection method for chaotic time series, and more concretely for turbulent, high-dimensional ocean simulations. We solve this task by extending the Echo State Network with spatially aware input maps, such as convolutions, gradients, and cosine transforms, as well as a spatially aware loss function. The spatial ESN is used to create predictions which reduce the detection problem to thresholding of the prediction error. We benchmark our detection framework on different tasks of increasing difficulty to show the generality of the framework before applying it to raw climate model output in the region of the Japanese ocean current Kuroshio, which exhibits a bimodality that is not easily detected by the naked eye. The code is available as an open-source Python package, Torsk, at https://github.com/nmheim/torsk, where we also provide supplementary material and programs that reproduce the results shown in this paper.
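The idea of reducing detection to thresholding of the prediction error can be sketched on a scalar series as follows. The function `detect_anomalies`, the trailing-window statistics, and the parameters `window` and `k` are assumptions chosen for illustration; they are not taken from Torsk, and the paper works with spatial fields rather than scalars.

```python
import statistics

def detect_anomalies(observed, predicted, window=50, k=3.0):
    """Flag time steps whose prediction error is unusually large.

    A step is flagged when its absolute error exceeds the mean plus
    k standard deviations of the errors in the preceding window.
    Hypothetical helper for illustration only.
    """
    errors = [abs(o - p) for o, p in zip(observed, predicted)]
    flags = []
    for t, err in enumerate(errors):
        history = errors[max(0, t - window):t]
        if len(history) < 2:
            # Not enough past errors to estimate a threshold yet
            flags.append(False)
            continue
        mu = statistics.mean(history)
        sigma = statistics.pstdev(history)
        flags.append(err > mu + k * sigma)
    return flags

# A flat series with one spike that the (perfectly flat) prediction misses
observed = [0.0] * 20
observed[15] = 5.0
predicted = [0.0] * 20
flags = detect_anomalies(observed, predicted)
print([t for t, flagged in enumerate(flags) if flagged])
```

In this toy case only the step containing the spike is flagged. In practice the predictions would come from a trained model such as an ESN, and the threshold would need tuning to the noise level of the data.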
