Zihang Song

Turbo-ICL: In-Context Learning-Based Turbo Equalization

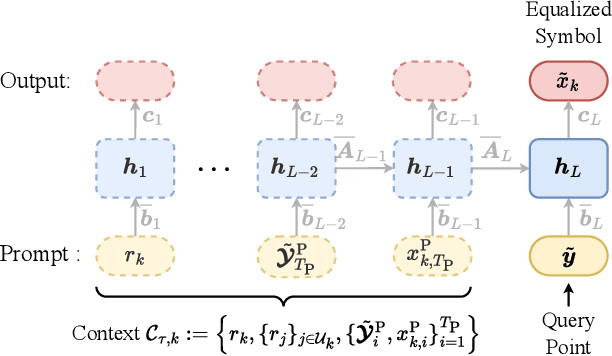

May 09, 2025

Abstract:This paper introduces a novel in-context learning (ICL) framework, inspired by large language models (LLMs), for soft-input soft-output channel equalization in coded multiple-input multiple-output (MIMO) systems. The proposed approach learns to infer posterior symbol distributions directly from a prompt of pilot signals and decoder feedback. A key innovation is the use of prompt augmentation to incorporate extrinsic information from the decoder output as additional context, enabling the ICL model to refine its symbol estimates iteratively across turbo decoding iterations. Two model variants, based on Transformer and state-space architectures, are developed and evaluated. Extensive simulations demonstrate that, when traditional linear assumptions break down, e.g., in the presence of low-resolution quantization, ICL equalizers consistently outperform conventional model-based baselines, even when the latter are provided with perfect channel state information. Results also highlight the advantage of Transformer-based models under limited training diversity, as well as the efficiency of state-space models in resource-constrained scenarios.

Compressive Spectrum Sensing with 1-bit ADCs

Nov 07, 2024

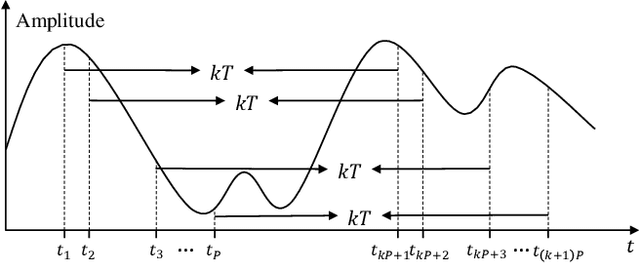

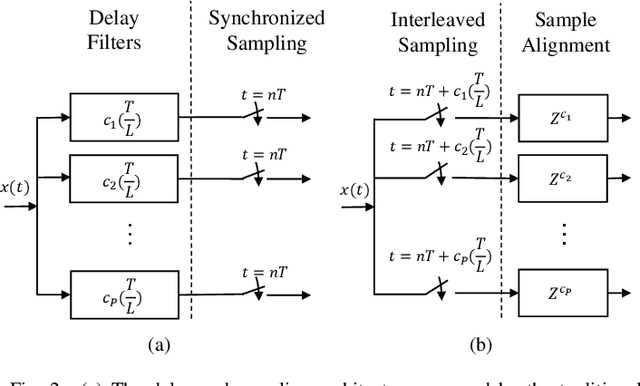

Abstract:Efficient wideband spectrum sensing (WSS) is essential for managing spectrum scarcity in wireless communications. However, existing compressed sensing (CS)-based WSS methods require high sampling rates and power consumption, particularly with high-precision analog-to-digital converters (ADCs). Although 1-bit CS with low-precision ADCs can mitigate these demands, most approaches still depend on multi-user cooperation and prior sparsity information, which are often unavailable in WSS scenarios. This paper introduces a non-cooperative WSS method using multicoset sampling with 1-bit ADCs to achieve sub-Nyquist sampling without requiring sparsity knowledge. We analyze the impact of 1-bit quantization on multiband signals, then apply eigenvalue decomposition to isolate the signal subspace from noise, enabling spectrum support estimation without signal reconstruction. This approach provides a power-efficient solution for WSS that eliminates the need for cooperation and prior information.

In-Context Learned Equalization in Cell-Free Massive MIMO via State-Space Models

Oct 31, 2024

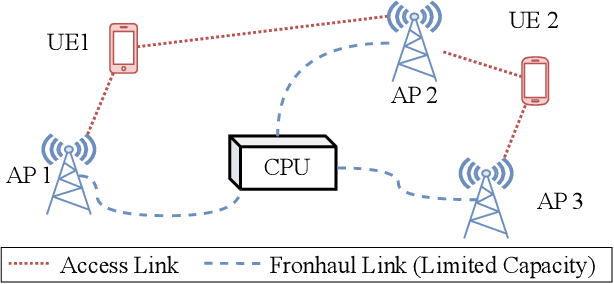

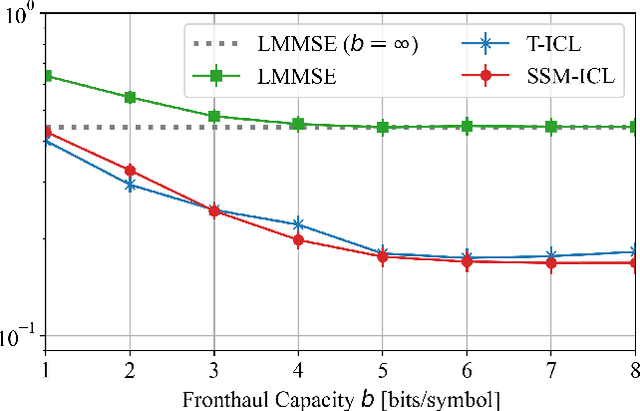

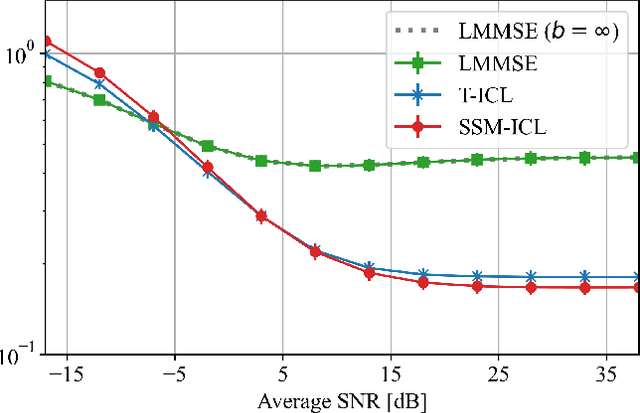

Abstract:Sequence models have demonstrated the ability to perform tasks like channel equalization and symbol detection by automatically adapting to current channel conditions. This is done without requiring any explicit optimization and by leveraging not only short pilot sequences but also contextual information such as long-term channel statistics. The operating principle underlying automatic adaptation is in-context learning (ICL), an emerging property of sequence models. Prior art adopted transformer-based sequence models, which, however, have a computational complexity scaling quadratically with the context length due to batch processing. Recently, state-space models (SSMs) have emerged as a more efficient alternative, affording a linear inference complexity in the context size. This work explores the potential of SSMs for ICL-based equalization in cell-free massive MIMO systems. Results show that selective SSMs achieve comparable performance to transformer-based models while requiring approximately eight times fewer parameters and five times fewer floating-point operations.
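To make the linear-versus-quadratic scaling mentioned above concrete, here is a minimal sketch of a diagonal, time-invariant state-space recurrence. It is not the selective SSM evaluated in the paper (selective SSMs make the parameters input-dependent); the function names `ssm_scan` and `ssm_direct` and all parameter values are hypothetical and chosen only for illustration. The recurrent form touches each input once, while the explicit sum over history revisits every earlier input for every output.

```python
import numpy as np

def ssm_scan(a, b, c, x):
    """Diagonal linear SSM run as a recurrence: O(T) in the context length T.

    h_t = a * h_{t-1} + b * x_t   (elementwise, since the state matrix is diagonal)
    y_t = c . h_t
    """
    h = np.zeros_like(a)
    y = np.empty(len(x))
    for t, x_t in enumerate(x):
        h = a * h + b * x_t
        y[t] = c @ h
    return y

def ssm_direct(a, b, c, x):
    """Same outputs computed as an explicit sum over the history: O(T^2)."""
    T = len(x)
    y = np.zeros(T)
    for t in range(T):
        for s in range(t + 1):
            y[t] += np.sum(c * a ** (t - s) * b) * x[s]
    return y

rng = np.random.default_rng(0)
a = rng.uniform(0.1, 0.9, size=4)   # assumed stable diagonal dynamics
b = rng.normal(size=4)
c = rng.normal(size=4)
x = rng.normal(size=32)             # toy scalar input sequence

print(np.allclose(ssm_scan(a, b, c, x), ssm_direct(a, b, c, x)))
```

Both functions produce the same sequence; only the amount of work per output differs, which is the property the abstract attributes to SSM inference.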

GBSense: A GHz-Bandwidth Compressed Spectrum Sensing System

Oct 15, 2024

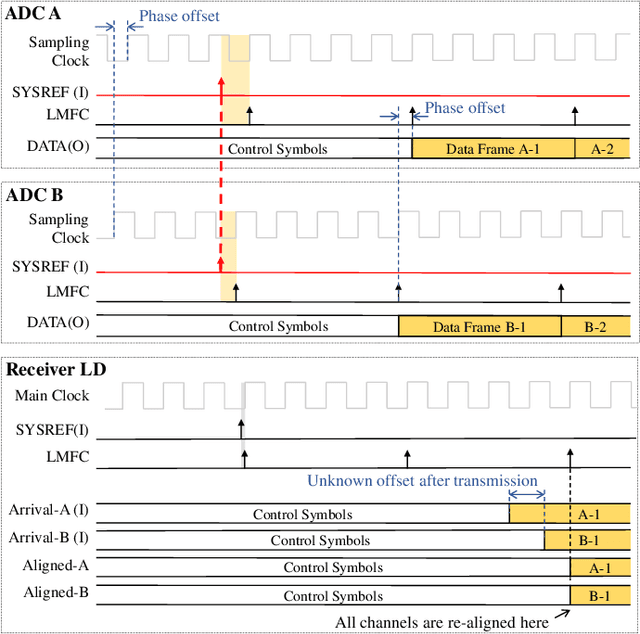

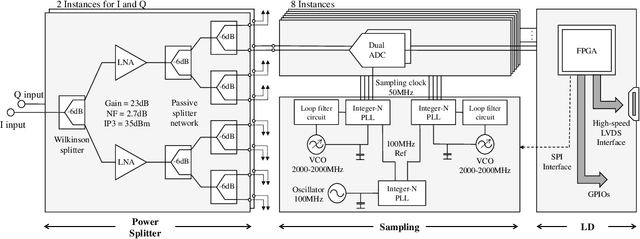

Abstract:This paper presents GBSense, an innovative compressed spectrum sensing system designed for GHz-bandwidth signals. GBSense introduces a novel approach to realize periodic nonuniform sampling that efficiently captures wideband signals using significantly lower sampling rates compared to traditional Nyquist sampling. The system incorporates time-interleaved analog-to-digital conversion, which eliminates the need for the complex analog delays typically required in multicoset sampling architectures, and offers real-time adjustable sampling patterns. The hardware design includes a dedicated clock distribution circuit and the implementation of a standard protocol to ensure precise synchronization of nonuniform samples. GBSense can process signals with a 2 GHz radio frequency bandwidth using only a 400 MHz average sampling rate. Lab tests demonstrate 100\% accurate spectrum reconstruction when the spectrum occupancy is below 100 MHz and over 80\% accuracy for occupancy up to 200 MHz. Additionally, an integrated system built around the GBSense core and a low-power Raspberry Pi processor achieves a low processing latency of around 30 ms per frame, showcasing strong real-time performance. This work highlights the potential of GBSense as a high-efficiency solution for dynamic spectrum access in future wireless communication systems.
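As a rough illustration of how periodic nonuniform (multicoset) sampling lowers the average rate, the sketch below keeps a fixed subset of positions in every block of a uniform grid. The grid rate, block length, and offsets are assumptions picked so that the arithmetic lands on a 400 MHz average; the abstract does not state the actual sampling pattern, and treating 2 GHz as the grid rate is itself an assumption. The helper name `multicoset_indices` is hypothetical.

```python
import numpy as np

def multicoset_indices(n_samples, period, offsets):
    """Return the indices of a uniform grid kept by a multicoset pattern.

    In every block of `period` consecutive grid points, only the positions
    listed in `offsets` are sampled.
    """
    n = np.arange(n_samples)
    return n[np.isin(n % period, offsets)]

grid_rate_hz = 2e9           # assumed uniform grid rate
period = 20                  # assumed block length L
offsets = [0, 3, 7, 12]      # assumed pattern, p = 4 samples kept per block

idx = multicoset_indices(200, period, offsets)
avg_rate_hz = grid_rate_hz * len(offsets) / period

print(len(idx), avg_rate_hz / 1e6)  # samples kept, average rate in MHz
```

With these assumed values, one sample in five is retained, so the average rate is one fifth of the grid rate.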

SATSense: Multi-Satellite Collaborative Framework for Spectrum Sensing

May 24, 2024

Abstract:Low Earth Orbit satellite Internet has recently been deployed, providing worldwide service with non-terrestrial networks. With the large-scale deployment of both non-terrestrial and terrestrial networks, the limited spectrum resources cannot be adequately allocated. Consequently, dynamic spectrum sharing is crucial for their coexistence in the same spectrum, where accurate spectrum sensing is essential. However, spectrum sensing in space is more challenging than in terrestrial networks due to variable channel conditions, making single-satellite sensing unstable. Therefore, we first attempt to design a collaborative sensing scheme utilizing diverse data from multiple satellites. However, it is non-trivial to achieve this collaboration due to heterogeneous channel quality, considerable raw sampling data, and packet loss. To address the above challenges, we first establish connections between the satellites by modeling their sensing data as a graph and devise a graph neural network-based algorithm to achieve effective spectrum sensing. Meanwhile, we establish a joint sub-Nyquist sampling and autoencoder data compression framework to reduce the amount of transmitted sensing data. Finally, we propose a contrastive learning-based mechanism that compensates for missing packets. Extensive experiments demonstrate that our proposed strategy can achieve efficient spectrum sensing performance and outperform the conventional deep learning algorithm in spectrum sensing accuracy.

Neuromorphic In-Context Learning for Energy-Efficient MIMO Symbol Detection

Apr 09, 2024

Abstract:In-context learning (ICL), a property demonstrated by transformer-based sequence models, refers to the automatic inference of an input-output mapping based on examples of the mapping provided as context. ICL requires no explicit learning, i.e., no explicit updates of model weights, directly mapping context and new input to the new output. Prior work has proved the usefulness of ICL for detection in MIMO channels. In this setting, the context is given by pilot symbols, and ICL automatically adapts a detector, or equalizer, to apply to newly received signals. However, the implementation tested in prior art was based on conventional artificial neural networks (ANNs), which may prove too energy-demanding to be run on mobile devices. This paper evaluates a neuromorphic implementation of the transformer for ICL-based MIMO detection. This approach replaces ANNs with spiking neural networks (SNNs), and implements the attention mechanism via stochastic computing, requiring no multiplications, but only logical AND operations and counting. When using conventional digital CMOS hardware, the proposed implementation is shown to preserve accuracy, with a reduction in power consumption ranging from $5.4\times$ to $26.8\times$, depending on the model sizes, as compared to ANN-based implementations.
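The idea of replacing multiplications with logical AND operations and counting can be sketched with the textbook form of stochastic computing: two values in [0, 1] are encoded as independent random bitstreams, and the fraction of ones in their bitwise AND estimates the product. This is a generic illustration, not the paper's attention circuit; the function name `sc_multiply`, the stream length, and the seed are hypothetical choices.

```python
import numpy as np

def sc_multiply(p, q, length=4096, seed=0):
    """Estimate p * q for p, q in [0, 1] using only AND and counting.

    Each operand is encoded as a Bernoulli bitstream whose probability of a 1
    equals the operand. For independent streams, P(a AND b) = p * q.
    """
    rng = np.random.default_rng(seed)
    a = rng.random(length) < p      # bitstream encoding p
    b = rng.random(length) < q      # bitstream encoding q
    ones = np.count_nonzero(a & b)  # logical AND, then count the ones
    return ones / length

est = sc_multiply(0.6, 0.5)
print(est)  # an estimate of 0.6 * 0.5; the error shrinks with longer streams
```

The estimate is noisy by construction, so accuracy trades off against stream length, which is one reason the number of time steps matters in such designs.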

Stochastic Spiking Attention: Accelerating Attention with Stochastic Computing in Spiking Networks

Feb 14, 2024

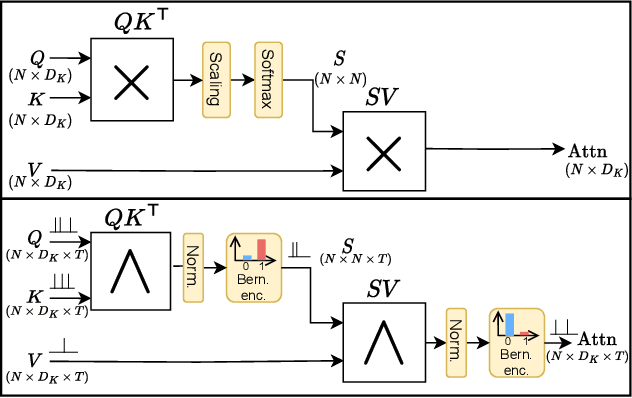

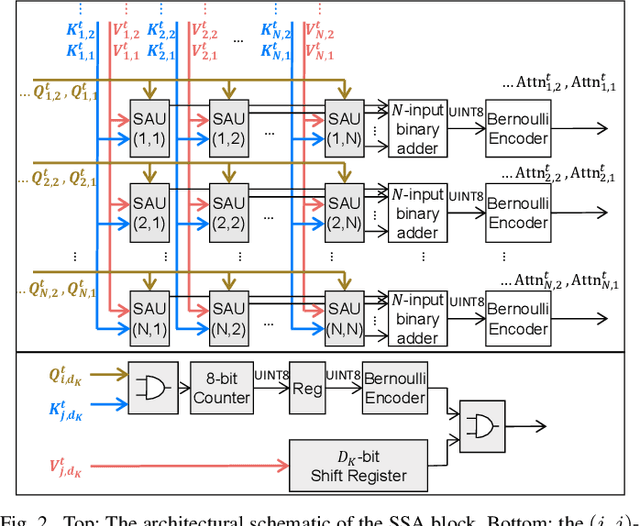

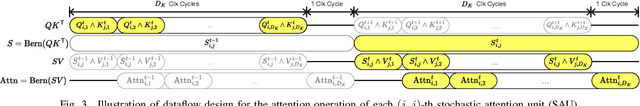

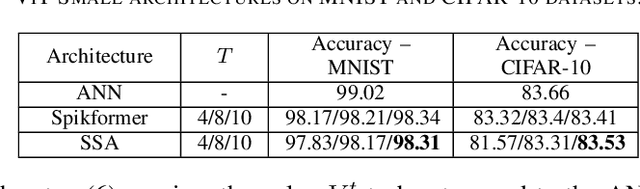

Abstract:Spiking Neural Networks (SNNs) have been recently integrated into Transformer architectures due to their potential to reduce computational demands and to improve power efficiency. Yet, the implementation of the attention mechanism using spiking signals on general-purpose computing platforms remains inefficient. In this paper, we propose a novel framework leveraging stochastic computing (SC) to effectively execute the dot-product attention for SNN-based Transformers. We demonstrate that our approach can achieve high classification accuracy ($83.53\%$) on CIFAR-10 within 10 time steps, which is comparable to the performance of a baseline artificial neural network implementation ($83.66\%$). We estimate that the proposed SC approach can lead to over $6.3\times$ reduction in computing energy and $1.7\times$ reduction in memory access costs for a digital CMOS-based ASIC design. We experimentally validate our stochastic attention block design through an FPGA implementation, which is shown to achieve $48\times$ lower latency as compared to a GPU implementation, while consuming $15\times$ less power.
