Shlomo Shamai (Shitz)

SIM-Enabled Hybrid Digital-Wave Beamforming for Fronthaul-Constrained Cell-Free Massive MIMO Systems

Jun 23, 2025

Abstract:As the dense deployment of access points (APs) in cell-free massive multiple-input multiple-output (CF-mMIMO) systems presents significant challenges, per-AP coverage can be expanded using large-scale antenna arrays (LAAs). However, this approach incurs high implementation costs and substantial fronthaul demands due to the need for dedicated RF chains for all antennas. To address these challenges, we propose a hybrid beamforming framework that integrates wave-domain beamforming via stacked intelligent metasurfaces (SIM) with conventional digital processing. By dynamically manipulating electromagnetic waves, SIM-equipped APs enhance beamforming gains while significantly reducing RF chain requirements. We formulate a joint optimization problem for digital and wave-domain beamforming along with fronthaul compression to maximize the weighted sum-rate for both uplink and downlink transmission under finite-capacity fronthaul constraints. Given the high dimensionality and non-convexity of the problem, we develop alternating optimization-based algorithms that iteratively optimize digital and wave-domain variables. Numerical results demonstrate that the proposed hybrid schemes outperform conventional hybrid schemes that rely on randomly set wave-domain beamformers or restrict digital beamforming to simple power control. Moreover, the proposed scheme employing sufficiently deep SIMs achieves near fully-digital performance with fewer RF chains in most simulated cases, except in the downlink at low signal-to-noise ratios.

A Memory-Based Reinforcement Learning Approach to Integrated Sensing and Communication

Dec 02, 2024

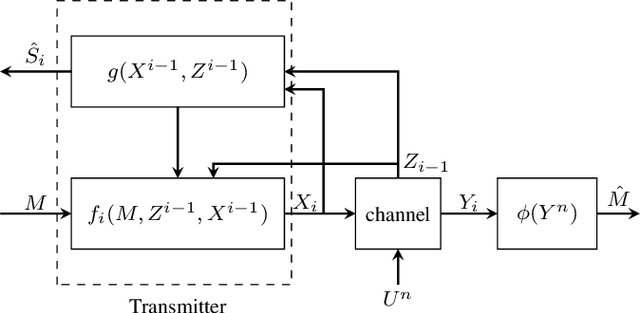

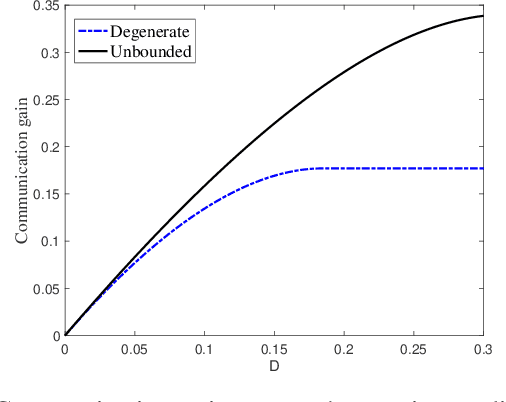

Abstract:In this paper, we consider a point-to-point integrated sensing and communication (ISAC) system, where a transmitter conveys a message to a receiver over a channel with memory and simultaneously estimates the state of the channel through the backscattered signals from the emitted waveform. Using Massey's concept of directed information for channels with memory, we formulate the capacity-distortion tradeoff for the ISAC problem when sensing is performed in an online fashion. Optimizing the transmit waveform for this system to simultaneously achieve good communication and sensing performance is a complicated task, and thus we propose a deep reinforcement learning (RL) approach to find a solution. The proposed approach enables the agent to optimize the ISAC performance by learning a reward that reflects the difference between the communication gain and the sensing loss. Since the state-space in our RL model is a priori unbounded, we employ the deep deterministic policy gradient (DDPG) algorithm. Our numerical results suggest a significant performance improvement when one considers an unbounded state-space as opposed to a simpler RL problem with a reduced state-space. In the extreme case of a degenerate state-space, only memoryless signaling strategies are possible. Our results thus emphasize the necessity of effectively exploiting the memory inherent in ISAC systems.

Accelerating Multi-UAV Collaborative Sensing Data Collection: A Hybrid TDMA-NOMA-Cooperative Transmission in Cell-Free MIMO Networks

Nov 04, 2024

Abstract:This work investigates a collaborative sensing and data collection system in which multiple unmanned aerial vehicles (UAVs) sense an area of interest and transmit images to a cloud server (CS) for processing. To accelerate the completion of sensing missions, including data transmission, the sensing task is divided into individual private sensing tasks for each UAV and a common sensing task that is executed by all UAVs to enable cooperative transmission. Unlike existing studies, we explore the use of an advanced cell-free multiple-input multiple-output (MIMO) network, which effectively manages inter-UAV interference. To further optimize wireless channel utilization, we propose a hybrid transmission strategy that combines time-division multiple access (TDMA), non-orthogonal multiple access (NOMA), and cooperative transmission. The problem of jointly optimizing task splitting ratios and the hybrid TDMA-NOMA-cooperative transmission strategy is formulated with the objective of minimizing mission completion time. Extensive numerical results demonstrate the effectiveness of the proposed task allocation and hybrid transmission scheme in accelerating the completion of sensing missions.

Cell-Free MIMO Perceptive Mobile Networks: Cloud vs. Edge Processing

Mar 28, 2024

Abstract:Perceptive mobile networks (PMNs) implement sensing and communication by reusing existing cellular infrastructure. Cell-free multiple-input multiple-output, thanks to the cooperation among distributed access points, supports the deployment of multistatic radar sensing, while providing high spectral efficiency for data communication services. To this end, the distributed access points communicate over fronthaul links with a central processing unit acting as a cloud processor. This work explores four different types of PMN uplink solutions based on cell-free multiple-input multiple-output, in which the sensing and decoding functionalities are carried out at either cloud or edge. Accordingly, we investigate and compare joint cloud-based decoding and sensing (CDCS), hybrid cloud-based decoding and edge-based sensing (CDES), hybrid edge-based decoding and cloud-based sensing (EDCS) and edge-based decoding and sensing (EDES). In all cases, we target a unified design problem formulation whereby the fronthaul quantization of signals received in the training and data phases is jointly designed to maximize the achievable rate under sensing requirements and fronthaul capacity constraints. Via numerical results, the four implementation scenarios are compared as a function of the available fronthaul resources by highlighting the relative merits of edge- and cloud-based sensing and communications. This study provides guidelines on the optimal functional allocation in fronthaul-constrained networks implementing integrated sensing and communications.

Successive Refinement in Large-Scale Computation: Advancing Model Inference Applications

Feb 11, 2024

Abstract:Modern computationally-intensive applications often operate under time constraints, necessitating acceleration methods and distribution of computational workloads across multiple entities. However, the outcome is either achieved within the desired timeline or not, and in the latter case, valuable resources are wasted. In this paper, we introduce solutions for layered-resolution computation. These solutions allow lower-resolution results to be obtained at an earlier stage than the final result. This innovation notably enhances deadline-based systems: if a computational job is terminated due to time constraints, an approximate version of the final result can still be generated. Moreover, in certain operational regimes, a high-resolution result might be unnecessary, because the low-resolution result may already deviate significantly from the decision threshold, for example in AI-based decision-making systems. Therefore, operators can decide whether higher resolution is needed or not based on intermediate results, enabling computations with adaptive resolution. We present our framework for two critical and computationally demanding jobs: distributed matrix multiplication (linear) and model inference in machine learning (nonlinear). Our theoretical and empirical results demonstrate that the execution delay for the first resolution is significantly shorter than that for the final resolution, while maintaining overall complexity comparable to the conventional one-shot approach. Our experiments further illustrate how the layering feature increases the likelihood of meeting deadlines and enables adaptability and transparency in massive, large-scale computations.
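The layered-resolution idea for matrix multiplication can be illustrated with a minimal sketch. This is not the paper's scheme: it assumes a simple two-layer split in which each matrix is written as a coarse (quantized) part plus a fine residual, and the names `split_resolution`, `layered_matmul`, and `step` are hypothetical. It also makes no attempt to reproduce the complexity or delay results stated in the abstract.

```python
import numpy as np

def split_resolution(M, step):
    """Split M into a coarse part (multiples of `step`) and a fine residual."""
    coarse = np.round(M / step) * step
    return coarse, M - coarse

def layered_matmul(A, B, step):
    """Yield a low-resolution product first, then the exact product.

    Layer 1 uses only the coarse parts of A and B.
    Layer 2 adds the correction terms that involve the residuals.
    """
    A_c, A_f = split_resolution(A, step)
    B_c, B_f = split_resolution(B, step)
    low = A_c @ B_c
    yield low                       # early, approximate result
    yield low + A_c @ B_f + A_f @ B  # refined result, equal to A @ B
```

A caller can consume the generator one layer at a time and stop after the first layer if the deadline expires or if the approximate result is already far from the decision threshold.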

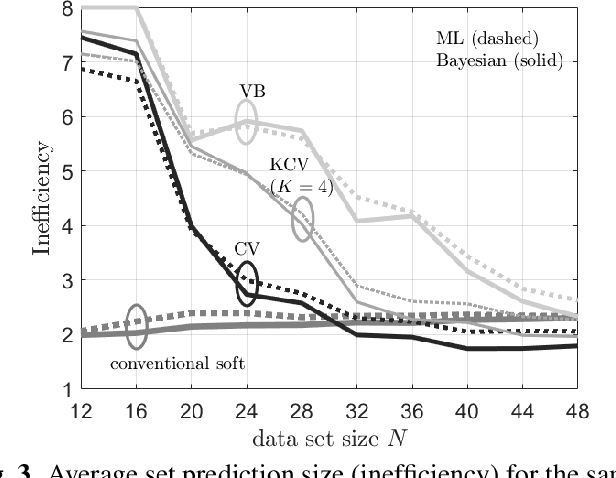

Cross-Validation Conformal Risk Control

Jan 22, 2024

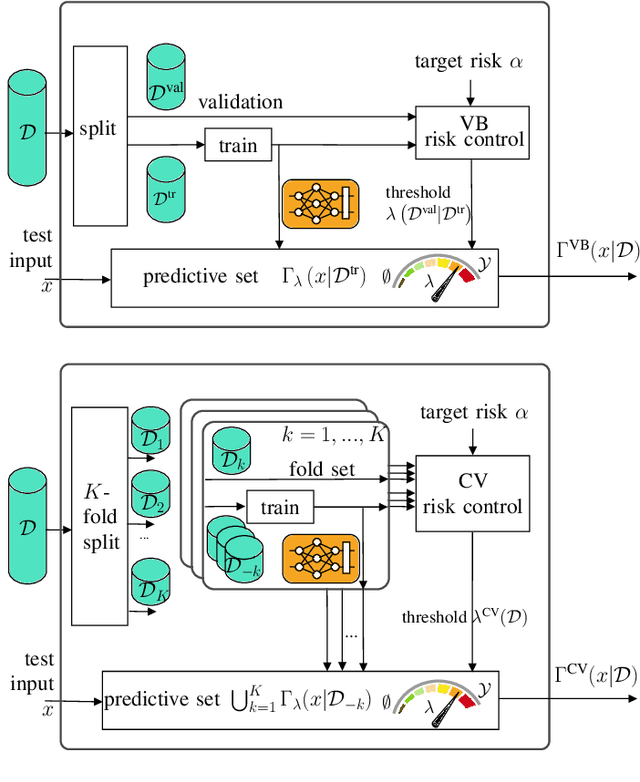

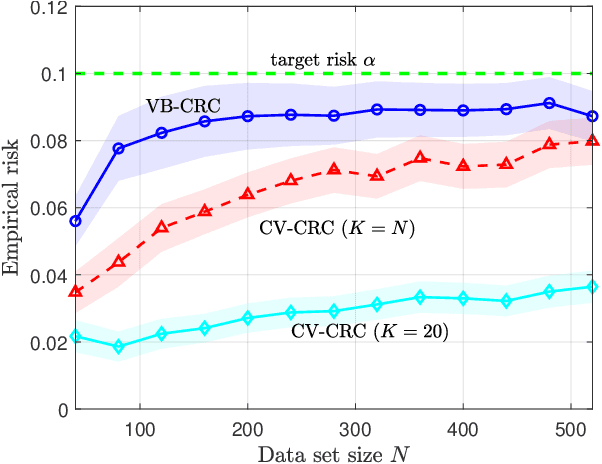

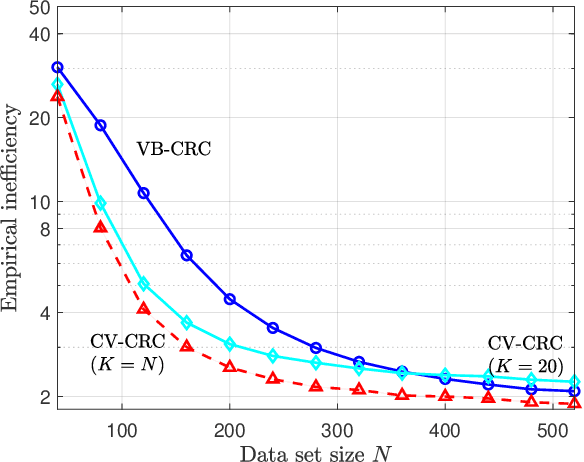

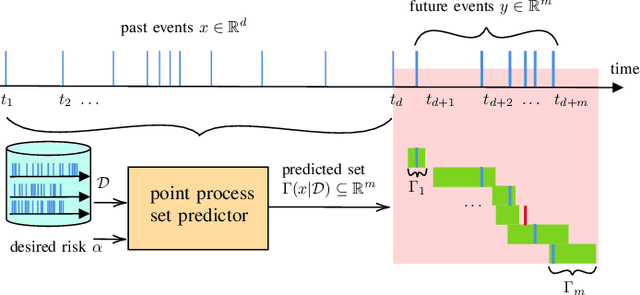

Abstract:Conformal risk control (CRC) is a recently proposed technique that applies post-hoc to a conventional point predictor to provide calibration guarantees. Generalizing conformal prediction (CP), with CRC, calibration is ensured for a set predictor that is extracted from the point predictor to control a risk function such as the probability of miscoverage or the false negative rate. The original CRC requires the available data set to be split between training and validation data sets. This can be problematic when data availability is limited, resulting in inefficient set predictors. In this paper, a novel CRC method is introduced that is based on cross-validation, rather than on validation as in the original CRC. The proposed cross-validation CRC (CV-CRC) extends a version of the jackknife-minmax from CP to CRC, allowing for the control of a broader range of risk functions. CV-CRC is proved to offer theoretical guarantees on the average risk of the set predictor. Furthermore, numerical experiments show that CV-CRC can reduce the average set size with respect to CRC when the available data are limited.

Deep Learning Assisted Multiuser MIMO Load Modulated Systems for Enhanced Downlink mmWave Communications

Nov 08, 2023

Abstract:This paper is focused on multiuser load modulation arrays (MU-LMAs) which are attractive due to their low system complexity and reduced cost for millimeter wave (mmWave) multi-input multi-output (MIMO) systems. The existing precoding algorithm for downlink MU-LMA relies on a sub-array structured (SAS) transmitter which may suffer from decreased degrees of freedom and complex system configuration. Furthermore, a conventional LMA codebook with codewords uniformly distributed on a hypersphere may not be channel-adaptive and may lead to increased signal detection complexity. In this paper, we conceive an MU-LMA system employing a full-array structured (FAS) transmitter and propose two algorithms accordingly. The proposed FAS-based system addresses the SAS structural problems and can support larger numbers of users. For LMA-imposed constant-power downlink precoding, we propose an FAS-based normalized block diagonalization (FAS-NBD) algorithm. However, the forced normalization may result in performance degradation. This degradation, together with the aforementioned codebook design problems, is difficult to solve analytically. This motivates us to propose a Deep Learning-enhanced (FAS-DL-NBD) algorithm for adaptive codebook design and codebook-independent decoding. It is shown that the proposed algorithms are robust to imperfect knowledge of channel state information and yield excellent error performance. Moreover, the FAS-DL-NBD algorithm enables signal detection with low complexity as the number of bits per codeword increases.

Guaranteed Dynamic Scheduling of Ultra-Reliable Low-Latency Traffic via Conformal Prediction

Feb 15, 2023

Abstract:The dynamic scheduling of ultra-reliable and low-latency traffic (URLLC) in the uplink can significantly enhance the efficiency of coexisting services, such as enhanced mobile broadband (eMBB) devices, by only allocating resources when necessary. The main challenge is posed by the uncertainty in the process of URLLC packet generation, which mandates the use of predictors for URLLC traffic in the coming frames. In practice, such prediction may overestimate or underestimate the amount of URLLC data to be generated, yielding either an excessive or an insufficient amount of resources to be pre-emptively allocated for URLLC packets. In this paper, we introduce a novel scheduler for URLLC packets that provides formal guarantees on reliability and latency irrespective of the quality of the URLLC traffic predictor. The proposed method leverages recent advances in online conformal prediction (CP), and follows the principle of dynamically adjusting the amount of allocated resources so as to meet reliability and latency requirements set by the designer.

Calibrating AI Models for Wireless Communications via Conformal Prediction

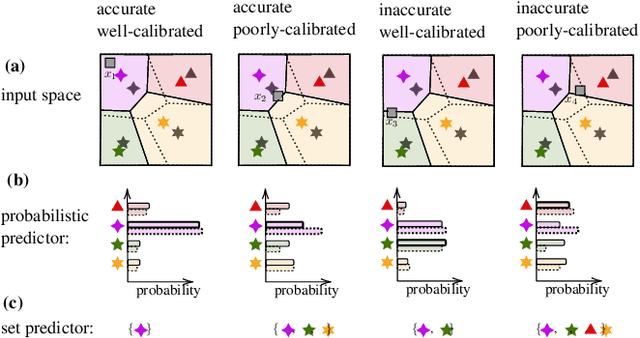

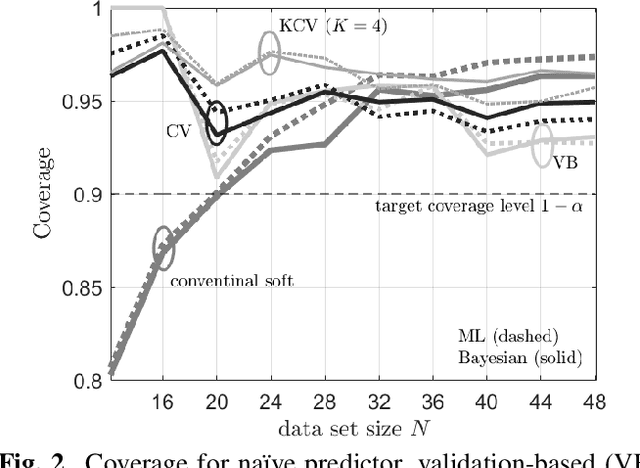

Dec 15, 2022

Abstract:When used in complex engineered systems, such as communication networks, artificial intelligence (AI) models should be not only as accurate as possible, but also well calibrated. A well-calibrated AI model is one that can reliably quantify the uncertainty of its decisions, assigning high confidence levels to decisions that are likely to be correct and low confidence levels to decisions that are likely to be erroneous. This paper investigates the application of conformal prediction as a general framework to obtain AI models that produce decisions with formal calibration guarantees. Conformal prediction transforms probabilistic predictors into set predictors that are guaranteed to contain the correct answer with a probability chosen by the designer. Such formal calibration guarantees hold irrespective of the true, unknown, distribution underlying the generation of the variables of interest, and can be defined in terms of ensemble or time-averaged probabilities. In this paper, conformal prediction is applied for the first time to the design of AI for communication systems in conjunction with both frequentist and Bayesian learning, focusing on demodulation, modulation classification, and channel prediction.
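The step from a probabilistic predictor to a set predictor can be sketched with standard split (validation-based) conformal prediction. This is a generic illustration, not the paper's code: the score `1 - p(label)` is one common choice assumed here, and the function names `conformal_threshold` and `prediction_set` are hypothetical.

```python
import numpy as np

def conformal_threshold(cal_scores, alpha):
    """Threshold from calibration scores for target coverage 1 - alpha.

    cal_scores[i] is the nonconformity score of the true label of the
    i-th calibration example, here assumed to be 1 - p(true label).
    Uses the finite-sample rank ceil((n + 1) * (1 - alpha)).
    """
    scores = np.sort(np.asarray(cal_scores, dtype=float))
    n = len(scores)
    k = int(np.ceil((n + 1) * (1 - alpha)))
    if k > n:
        return np.inf  # too few calibration points: the set must contain all labels
    return scores[k - 1]

def prediction_set(probs, threshold):
    """Return the indices of all labels whose score 1 - p is within the threshold."""
    probs = np.asarray(probs, dtype=float)
    return np.flatnonzero(1.0 - probs <= threshold)
```

For a demodulator, `probs` would be the model's output probabilities over constellation points for one received sample, and the returned set would contain every symbol that cannot be ruled out at the chosen coverage level.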

Calibrating AI Models for Few-Shot Demodulation via Conformal Prediction

Oct 10, 2022

Abstract:AI tools can be useful to address model deficits in the design of communication systems. However, conventional learning-based AI algorithms yield poorly calibrated decisions and are unable to quantify the uncertainty of their outputs. While Bayesian learning can enhance calibration by capturing epistemic uncertainty caused by limited data availability, formal calibration guarantees only hold under strong assumptions about the ground-truth, unknown, data generation mechanism. We propose to leverage the conformal prediction framework to obtain data-driven set predictions whose calibration properties hold irrespective of the data distribution. Specifically, we investigate the design of baseband demodulators in the presence of hard-to-model nonlinearities such as hardware imperfections, and propose set-based demodulators based on conformal prediction. Numerical results confirm the theoretical validity of the proposed demodulators, and bring insights into their average prediction set size efficiency.
