Alejandro Cohen

Shitz

Adaptive Deadline and Batch Layered Synchronized Federated Learning

May 29, 2025

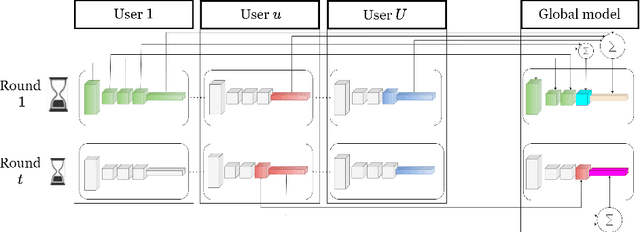

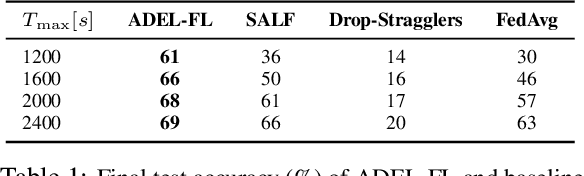

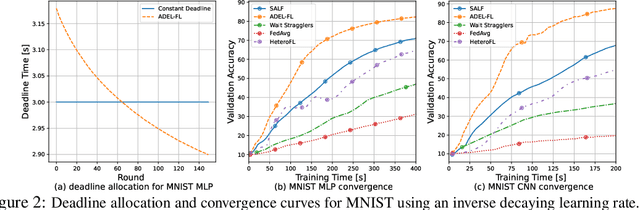

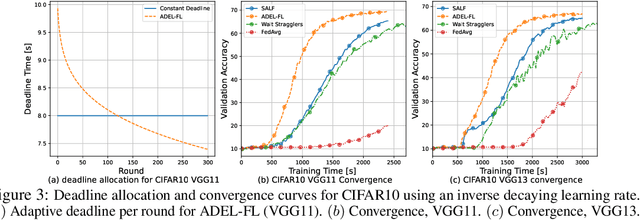

Abstract: Federated learning (FL) enables collaborative model training across distributed edge devices while preserving data privacy, and typically operates in a round-based synchronous manner. However, synchronous FL suffers from latency bottlenecks due to device heterogeneity, where slower clients (stragglers) delay or degrade global updates. Prior solutions, such as fixed deadlines, client selection, and layer-wise partial aggregation, alleviate the effect of stragglers, but treat round timing and local workload as static parameters, limiting their effectiveness under strict time constraints. We propose ADEL-FL, a novel framework that jointly optimizes per-round deadlines and user-specific batch sizes for layer-wise aggregation. Our approach formulates a constrained optimization problem that minimizes the expected L2 distance to the global optimum subject to constraints on the total training time and the number of global rounds. We provide a convergence analysis under exponential compute models and prove that ADEL-FL yields unbiased updates with bounded variance. Extensive experiments demonstrate that ADEL-FL outperforms alternative methods in both convergence rate and final accuracy under heterogeneous conditions.

Cryptanalysis via Machine Learning Based Information Theoretic Metrics

Jan 25, 2025

Abstract: The fields of machine learning (ML) and cryptanalysis share an interesting common objective: creating a function based on a given set of inputs and outputs. However, the approaches and methods for doing so vary vastly between the two fields. In this paper, we explore integrating knowledge from the ML domain to provide empirical evaluations of cryptosystems. In particular, we utilize information theoretic metrics to perform ML-based distribution estimation. We propose two novel applications of ML algorithms that can be applied in a known plaintext setting to perform cryptanalysis on any cryptosystem. We use mutual information neural estimation to calculate a cryptosystem's mutual information leakage, and a binary cross entropy classification to model indistinguishability under a chosen plaintext attack (CPA). These algorithms can be readily applied in an audit setting to evaluate the robustness of a cryptosystem, and the results can provide a useful empirical bound. We evaluate the efficacy of our methodologies by empirically analyzing several encryption schemes. Furthermore, we extend the analysis to novel network coding-based cryptosystems and provide other use cases for our algorithms. We show that our classification model correctly identifies the encryption schemes that are not IND-CPA secure, such as DES, RSA, and AES ECB, with high accuracy. It also identifies the faults in CPA-secure cryptosystems with faulty parameters, such as a reduced-counter version of AES-CTR. We also conclude that, with our algorithms, in most cases a smaller neural network using less computing power can identify vulnerabilities in cryptosystems, providing a quick sanity check of the cryptosystem and helping to decide whether to spend more resources on deploying larger networks that are able to break it.

Stragglers-Aware Low-Latency Synchronous Federated Learning via Layer-Wise Model Updates

Mar 27, 2024

Abstract: Synchronous federated learning (FL) is a popular paradigm for collaborative edge learning. It typically involves a set of heterogeneous devices locally training neural network (NN) models in parallel with periodic centralized aggregations. As some of the devices may have limited computational resources and varying availability, FL latency is highly sensitive to stragglers. Conventional approaches discard incomplete intra-model updates done by stragglers, alter the amount of local workload and architecture, or resort to asynchronous settings, all of which affect the trained model performance under tight training latency constraints. In this work, we propose straggler-aware layer-wise federated learning (SALF), which leverages the optimization procedure of NNs via backpropagation to update the global model in a layer-wise fashion. SALF allows stragglers to synchronously convey partial gradients, so that each layer of the global model is updated independently with a different contributing set of users. We provide a theoretical analysis, establishing convergence guarantees for the global model under mild assumptions on the distribution of the participating devices, revealing that SALF converges at the same asymptotic rate as FL with no timing limitations. This insight is matched by empirical observations, demonstrating the performance gains of SALF compared to alternative mechanisms for mitigating the device heterogeneity gap in FL.
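The layer-wise update described in this abstract can be illustrated with a minimal sketch. It assumes that each user reports only the layers it finished before the deadline (backpropagation reaches the output-side layers first), that the server takes a plain average over the users that delivered a given layer, and that a layer with no contributors keeps its previous value. The function name `layerwise_aggregate` and the data layout are hypothetical, and any weighting or correction terms used in the paper are omitted.

```python
import numpy as np

def layerwise_aggregate(global_layers, user_updates):
    """Aggregate each layer over the users that delivered it.

    global_layers: list of np.ndarray, one entry per layer (hypothetical layout).
    user_updates:  list of dicts {layer_index: np.ndarray}; a straggler's dict
                   contains only the layers it finished before the deadline.
    """
    new_layers = []
    for idx, current in enumerate(global_layers):
        contributions = [u[idx] for u in user_updates if idx in u]
        if contributions:
            # Plain average over the contributing set for this layer.
            new_layers.append(np.mean(contributions, axis=0))
        else:
            # Nobody reached this layer in time: keep the old value.
            new_layers.append(current.copy())
    return new_layers

# Three layers; layer 2 is the output side, which backpropagation reaches first.
global_model = [np.zeros(2), np.zeros(2), np.zeros(2)]
updates = [
    {0: np.full(2, 4.0), 1: np.full(2, 2.0), 2: np.full(2, 3.0)},  # finished all layers
    {1: np.full(2, 4.0), 2: np.full(2, 6.0)},                      # straggler: two layers
    {2: np.full(2, 0.0)},                                          # straggler: one layer
]
aggregated = layerwise_aggregate(global_model, updates)
```

In this toy round, layer 0 is updated by one user, layer 1 by two, and layer 2 by all three, which is the "different contributing set of users" per layer that the abstract refers to.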

Adaptive Integrate-and-Fire Time Encoding Machine

Mar 05, 2024

Abstract: An integrate-and-fire time-encoding machine (IF-TEM) is an effective asynchronous sampler that translates amplitude information into non-uniform time sequences. In this work, we propose a novel Adaptive IF-TEM (AIF-TEM) approach. This design dynamically adjusts the TEM's sensitivity to changes in the input signal's amplitude and frequency in real time. We provide a comprehensive analysis of AIF-TEM's oversampling and distortion properties. We show that, through these adaptive adjustments, AIF-TEM can achieve significant performance improvements in the practical finite regime in terms of the trade-off between sampling rate and distortion. We demonstrate empirically that, in the scenarios tested, AIF-TEM outperforms classical IF-TEM and traditional Nyquist (i.e., periodic) sampling methods for band-limited signals. In terms of Mean Square Error (MSE), the reduction reaches at least 12 dB (fixing the oversampling rate).
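For readers unfamiliar with time encoding, the following is a minimal discrete-time sketch of a classical (non-adaptive) IF-TEM, not the AIF-TEM design proposed in the paper. It assumes the common formulation in which a bias larger than the signal's peak amplitude is added to the input, the sum is integrated with gain `1/kappa`, and a firing time is recorded each time the integrator reaches the threshold `delta`. The function name and parameter names are illustrative.

```python
import numpy as np

def if_tem_encode(x, dt, bias, kappa, delta):
    """Simulate a classical IF-TEM on samples x spaced dt seconds apart.

    Assumes bias > max|x| so the integrand stays positive.
    Returns the array of firing times.
    """
    times = []
    integral = 0.0
    for n, sample in enumerate(x):
        integral += (sample + bias) * dt / kappa
        if integral >= delta:
            times.append((n + 1) * dt)
            integral -= delta  # reset, carrying over the overshoot
    return np.array(times)

dt = 1e-3
t = np.arange(0.0, 1.0, dt)
# A larger input amplitude makes the integrator reach the threshold sooner,
# so amplitude information ends up in the spacing of the firing times.
times_low = if_tem_encode(np.zeros_like(t), dt, bias=1.0, kappa=1.0, delta=0.1)
times_high = if_tem_encode(np.full_like(t, 0.5), dt, bias=1.0, kappa=1.0, delta=0.1)
```

With a zero input the firing times are spaced roughly `kappa * delta / bias` apart; raising the input level shortens the gaps, which is how the encoder maps amplitude into time.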

Successive Refinement in Large-Scale Computation: Advancing Model Inference Applications

Feb 11, 2024

Abstract: Modern computationally intensive applications often operate under time constraints, necessitating acceleration methods and distribution of computational workloads across multiple entities. However, the outcome is either achieved within the desired timeline or not, and in the latter case, valuable resources are wasted. In this paper, we introduce solutions for layered-resolution computation. These solutions allow lower-resolution results to be obtained at an earlier stage than the final result. This notably benefits deadline-based systems: if a computational job is terminated due to time constraints, an approximate version of the final result can still be generated. Moreover, in certain operational regimes, a high-resolution result might be unnecessary, because the low-resolution result may already deviate significantly from the decision threshold, for example in AI-based decision-making systems. Therefore, operators can decide whether higher resolution is needed based on intermediate results, enabling computations with adaptive resolution. We present our framework for two critical and computationally demanding jobs: distributed matrix multiplication (linear) and model inference in machine learning (nonlinear). Our theoretical and empirical results demonstrate that the execution delay for the first resolution is significantly shorter than that for the final resolution, while maintaining overall complexity comparable to the conventional one-shot approach. Our experiments further illustrate how the layering feature increases the likelihood of meeting deadlines and enables adaptability and transparency in massive, large-scale computations.
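The idea of obtaining a usable low-resolution result before the final one can be illustrated for matrix multiplication with a toy sketch. This is not the scheme from the paper: it simply assumes that one operand is split into a coarsely quantized part and a residual, so that the coarse product is available first and adding the residual product recovers the exact result. The names `split_resolution` and `layered_matmul` and the quantization step are illustrative.

```python
import numpy as np

def split_resolution(a, step):
    """Split a into a coarse part (multiples of step) and a small residual."""
    coarse = np.round(a / step) * step
    return coarse, a - coarse

def layered_matmul(a, b, step=0.5):
    """Yield a low-resolution product first, then the exact product."""
    coarse, residual = split_resolution(a, step)
    low_res = coarse @ b
    yield low_res                   # usable if the job is cut off here
    yield low_res + residual @ b    # refinement layer completes the result

rng = np.random.default_rng(0)
a = rng.normal(size=(4, 3))
b = rng.normal(size=(3, 5))
low, full = list(layered_matmul(a, b))
```

Because each residual entry is at most `step / 2` in magnitude, the error of the first layer is bounded column by column by `(step / 2)` times the sum of absolute values in the corresponding column of `b`. A deadline-driven caller could stop after the first yielded value when that accuracy is enough for its decision.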

CRYPTO-MINE: Cryptanalysis via Mutual Information Neural Estimation

Sep 18, 2023

Abstract: The use of Mutual Information (MI) as a measure to evaluate the efficiency of cryptosystems has an extensive history. However, estimating MI between unknown random variables in a high-dimensional space is challenging. Recent advances in machine learning have enabled progress in estimating MI using neural networks. This work presents a novel application of MI estimation in the field of cryptography. We propose applying this methodology directly to estimate the MI between plaintext and ciphertext in a chosen plaintext attack. The leaked information, if any, from the encryption could potentially be exploited by adversaries to compromise the computational security of the cryptosystem. We evaluate the efficiency of our approach by empirically analyzing multiple encryption schemes and baseline approaches. Furthermore, we extend the analysis to novel network coding-based cryptosystems that provide individual secrecy and study the relationship between information leakage and input distribution.
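For context, neural MI estimators of the MINE type are usually built on the Donsker-Varadhan lower bound on mutual information. Assuming the estimator here follows that standard formulation, and writing $X$ for the plaintext, $C$ for the ciphertext, and $T_\theta$ for a neural network with parameters $\theta$ (notation introduced here for illustration, not taken from the abstract), the estimated quantity is:

$$
I(X;C) \;=\; D_{\mathrm{KL}}\!\left(P_{XC}\,\|\,P_X \otimes P_C\right) \;\ge\; \sup_{\theta}\; \mathbb{E}_{P_{XC}}\!\left[T_\theta(X,C)\right] \;-\; \log \mathbb{E}_{P_X \otimes P_C}\!\left[e^{T_\theta(X,C)}\right].
$$

The first expectation is taken over matched plaintext-ciphertext pairs and the second over pairs drawn independently from the two marginals. Under this formulation, an estimate close to zero is consistent with no detectable leakage, while a clearly positive estimate indicates that the ciphertext carries information about the plaintext.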

Time-Based Quantization for FRI and Bandlimited signals

Oct 05, 2021

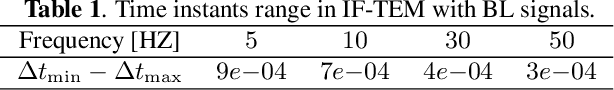

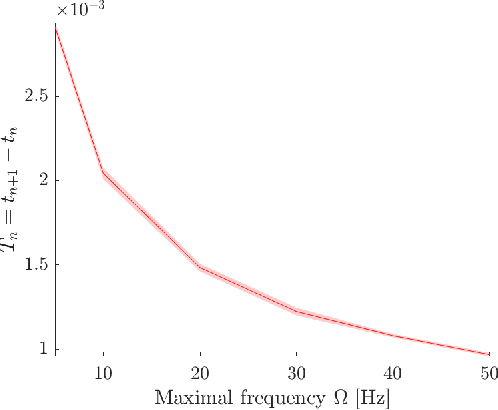

Abstract: We consider the problem of quantizing samples of finite-rate-of-innovation (FRI) and bandlimited (BL) signals by using an integrate-and-fire time encoding machine (IF-TEM). We propose a uniform design of the quantization levels and show by numerical simulations that quantization using IF-TEM improves the recovery of FRI and BL signals in comparison with classical uniform sampling in the Fourier-domain and Nyquist methods, respectively. In terms of mean square error (MSE), the reduction reaches at least 5 dB for both classes of signals. Our numerical evaluations also demonstrate that the MSE further decreases when the number of pulses comprising the FRI signal increases. A similar observation is demonstrated for BL signals. In particular, we show that, in contrast to the classical method, increasing the frequency of the IF-TEM input decreases the quantization step size, which can reduce the MSE.
