Thomas Stahlbuhk

Cryptanalysis via Machine Learning Based Information Theoretic Metrics

Jan 25, 2025

Abstract: The fields of machine learning (ML) and cryptanalysis share an interesting common objective: constructing a function from a given set of inputs and outputs. However, the approaches and methods for doing so differ greatly between the two fields. In this paper, we explore integrating knowledge from the ML domain to provide empirical evaluations of cryptosystems. In particular, we utilize information theoretic metrics to perform ML-based distribution estimation. We propose two novel applications of ML algorithms that can be applied in a known plaintext setting to perform cryptanalysis on any cryptosystem. We use mutual information neural estimation to calculate a cryptosystem's mutual information leakage, and a binary cross entropy classification to model indistinguishability under chosen plaintext attack (IND-CPA). These algorithms can be readily applied in an audit setting to evaluate the robustness of a cryptosystem, and the results can provide a useful empirical bound. We evaluate the efficacy of our methodologies by empirically analyzing several encryption schemes. Furthermore, we extend the analysis to novel network coding-based cryptosystems and provide other use cases for our algorithms. We show that our classification model correctly identifies, with high accuracy, the encryption schemes that are not IND-CPA secure, such as DES, RSA, and AES ECB. It also identifies the faults in CPA-secure cryptosystems with faulty parameters, such as a reduced-counter version of AES-CTR. We also conclude that, with our algorithms, in most cases a smaller neural network using less computing power can identify vulnerabilities in cryptosystems. This provides a quick sanity check of the cryptosystem and helps to decide whether to spend more resources deploying larger networks that are able to break it.
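The classification idea in this abstract can be illustrated with a very small experiment. The sketch below is not the paper's model: it swaps the neural network for a plain logistic regression trained with binary cross entropy, and it uses two toy ciphers built from truncated SHA-256 (`enc_deterministic` and `enc_randomized`, both hypothetical names and constructions chosen only for illustration). The classifier sees a ciphertext and guesses which of two chosen plaintexts was encrypted. Under these assumptions one would expect accuracy near 1.0 for the deterministic toy cipher and near 0.5 (chance) for the randomized one.

```python
import hashlib
import math
import random

BLOCK = 8                                # toy block size in bytes (assumption)
M0, M1 = bytes(BLOCK), b"\xff" * BLOCK   # the two chosen plaintexts

def pad(key, data):
    """Toy keystream: truncated SHA-256 of key || data (illustrative only)."""
    return hashlib.sha256(key + data).digest()[:BLOCK]

def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))

def enc_deterministic(key, msg, rng):
    # Same plaintext always gives the same ciphertext, so it cannot be IND-CPA.
    return xor(msg, pad(key, b"fixed"))

def enc_randomized(key, msg, rng):
    # A fresh nonce per message changes the keystream on every encryption.
    nonce = bytes(rng.getrandbits(8) for _ in range(BLOCK))
    return nonce + xor(msg, pad(key, nonce))

def bits(data):
    return [(byte >> i) & 1 for byte in data for i in range(8)]

def sample(encrypt, key, n, rng):
    """Play the game n times: flip a bit b, encrypt M_b, record (bits, b)."""
    rows = []
    for _ in range(n):
        b = rng.randrange(2)
        rows.append((bits(encrypt(key, (M0, M1)[b], rng)), b))
    return rows

def logit(w, bias, x):
    return bias + sum(wi * xi for wi, xi in zip(w, x))

def sigmoid(z):
    z = max(-30.0, min(30.0, z))         # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def distinguisher_accuracy(encrypt, n_train=1000, n_test=500,
                           epochs=5, lr=0.05, seed=0):
    """Train logistic regression with BCE loss; return held-out accuracy."""
    rng = random.Random(seed)
    key = bytes(rng.getrandbits(8) for _ in range(16))
    train = sample(encrypt, key, n_train, rng)
    test = sample(encrypt, key, n_test, rng)
    w, bias = [0.0] * len(train[0][0]), 0.0
    for _ in range(epochs):
        for x, y in train:
            g = sigmoid(logit(w, bias, x)) - y   # dBCE/dlogit
            bias -= lr * g
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
    hits = sum((logit(w, bias, x) > 0) == (y == 1) for x, y in test)
    return hits / len(test)

print("deterministic:", distinguisher_accuracy(enc_deterministic))
print("randomized:   ", distinguisher_accuracy(enc_randomized))
```

An accuracy well above 0.5 is evidence that the scheme is distinguishable in this game; an accuracy near 0.5 only says that this particular small classifier found nothing, which matches the abstract's framing of the result as an empirical check rather than a proof.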

CRYPTO-MINE: Cryptanalysis via Mutual Information Neural Estimation

Sep 18, 2023

Abstract: The use of Mutual Information (MI) as a measure to evaluate the efficiency of cryptosystems has an extensive history. However, estimating MI between unknown random variables in a high-dimensional space is challenging. Recent advances in machine learning have enabled progress in estimating MI using neural networks. This work presents a novel application of MI estimation in the field of cryptography. We propose applying this methodology directly to estimate the MI between plaintext and ciphertext in a chosen plaintext attack. The leaked information, if any, from the encryption could potentially be exploited by adversaries to compromise the computational security of the cryptosystem. We evaluate the efficiency of our approach by empirically analyzing multiple encryption schemes and baseline approaches. Furthermore, we extend the analysis to novel network coding-based cryptosystems that provide individual secrecy and study the relationship between information leakage and input distribution.
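The abstract does not spell out the estimator. As background, mutual information neural estimation is commonly presented through the Donsker-Varadhan lower bound, which a neural network $T_\theta$ is trained to maximize. The symbols below are assumptions introduced for illustration: $X$ is the plaintext, $Z$ is the ciphertext, $P_{XZ}$ is their joint distribution, and $P_X \otimes P_Z$ is the product of the marginals (in practice approximated by shuffling ciphertexts across samples).

$$
I(X;Z) \;\ge\; \sup_{\theta}\; \mathbb{E}_{P_{XZ}}\!\left[T_\theta(x,z)\right] \;-\; \log \mathbb{E}_{P_X \otimes P_Z}\!\left[e^{T_\theta(x,z)}\right]
$$

Read this way, an estimate close to zero suggests that the network found no statistical dependence between plaintext and ciphertext, while a clearly positive estimate indicates leakage that the network was able to detect.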

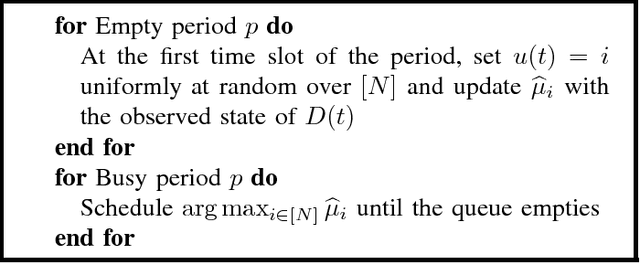

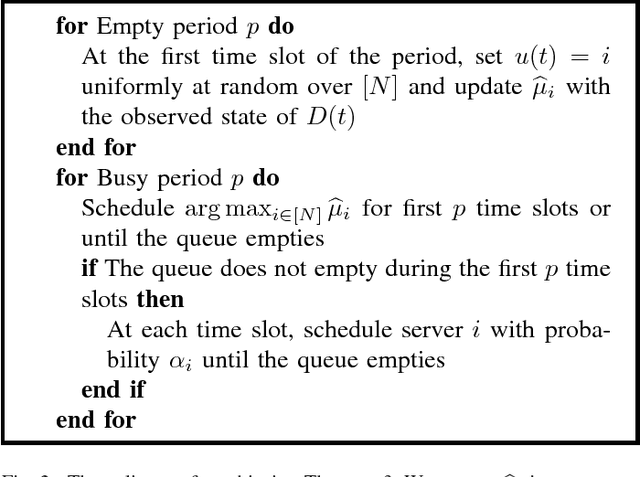

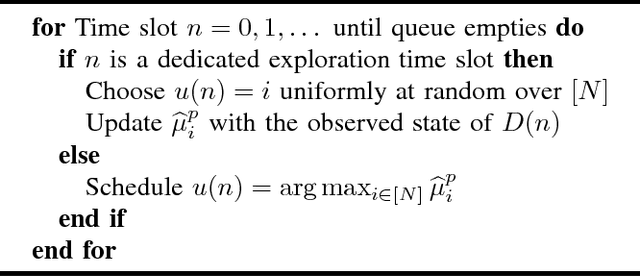

Learning Algorithms for Minimizing Queue Length Regret

May 14, 2020

Abstract: We consider a system consisting of a single transmitter/receiver pair and $N$ channels over which they may communicate. Packets randomly arrive to the transmitter's queue and wait to be successfully sent to the receiver. The transmitter may attempt a frame transmission on one channel at a time, where each frame includes a packet if one is in the queue. For each channel, an attempted transmission is successful with an unknown probability. The transmitter's objective is to quickly identify the best channel to minimize the number of packets in the queue over $T$ time slots. To analyze system performance, we introduce queue length regret, which is the expected difference between the total queue length of a learning policy and that of a controller that knows the rates a priori. One approach to designing a transmission policy would be to apply algorithms from the literature that solve the closely related stochastic multi-armed bandit problem. These policies would focus on maximizing the number of successful frame transmissions over time. However, we show that these methods have $\Omega(\log{T})$ queue length regret. On the other hand, we show that there exists a set of queue-length-based policies that can obtain order-optimal $O(1)$ queue length regret. We use our theoretical analysis to devise heuristic methods that are shown to perform well in simulation.
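The queue length regret defined in this abstract can be estimated by simulation. The sketch below is a minimal illustration, not the paper's experimental setup. It assumes Bernoulli arrivals, that an arrival in a slot can be served in the same slot, and that the UCB1 index stands in for "algorithms from the literature"; all function names and parameter values are hypothetical. Both policies are run on the same random draws so that their total queue lengths can be compared slot for slot.

```python
import math
import random

def total_queue_length(choose, rates, arrival_prob, horizon, seed):
    """Sum of queue lengths over `horizon` slots under the rule `choose`."""
    rng = random.Random(seed)
    pulls = [0] * len(rates)   # frames attempted on each channel
    wins = [0] * len(rates)    # successful frames on each channel
    queue, total = 0, 0
    for t in range(1, horizon + 1):
        # Draw all randomness first so runs with the same seed are coupled
        # regardless of which channel the policy picks.
        arrival = rng.random() < arrival_prob
        success = [rng.random() < r for r in rates]
        if arrival:
            queue += 1
        k = choose(t, pulls, wins)
        pulls[k] += 1          # a frame is sent even when the queue is empty
        if success[k]:
            wins[k] += 1
            if queue > 0:
                queue -= 1     # the frame carried a packet
        total += queue
    return total

def make_oracle(rates):
    """Controller that knows the success rates a priori."""
    best = max(range(len(rates)), key=lambda k: rates[k])
    return lambda t, pulls, wins: best

def ucb1(t, pulls, wins):
    """Standard UCB1 index policy, used here as the bandit baseline."""
    for k, n_k in enumerate(pulls):
        if n_k == 0:
            return k           # try every channel once
    return max(range(len(pulls)),
               key=lambda k: wins[k] / pulls[k]
               + math.sqrt(2 * math.log(t) / pulls[k]))

def queue_length_regret(choose, rates, arrival_prob, horizon, runs=20):
    """Monte Carlo estimate: mean total queue of `choose` minus the oracle's."""
    oracle = make_oracle(rates)
    diffs = [total_queue_length(choose, rates, arrival_prob, horizon, seed)
             - total_queue_length(oracle, rates, arrival_prob, horizon, seed)
             for seed in range(runs)]
    return sum(diffs) / runs

rates = [0.4, 0.6, 0.8]        # hypothetical channel success probabilities
print(queue_length_regret(ucb1, rates, arrival_prob=0.5, horizon=2000))
```

Because a frame is sent in every slot, the policy keeps learning about channels while the queue is empty, which costs nothing in queue length. The abstract's queue-length-based policies are built on this kind of distinction; the sketch only provides the measurement harness and does not implement them.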
