Ruud van Sloun

Dynamic Estimation Loss Control in Variational Quantum Sensing via Online Conformal Inference

May 29, 2025

Abstract: Quantum sensing exploits non-classical effects to overcome limitations of classical sensors, with applications ranging from gravitational-wave detection to nanoscale imaging. However, practical quantum sensors built on noisy intermediate-scale quantum (NISQ) devices face significant noise and sampling constraints, and current variational quantum sensing (VQS) methods lack rigorous performance guarantees. This paper proposes an online control framework for VQS that dynamically updates the variational parameters while providing deterministic error bars on the estimates. By leveraging online conformal inference techniques, the approach produces sequential estimation sets with a guaranteed long-term risk level. Experiments on a quantum magnetometry task confirm that the proposed dynamic VQS approach maintains the required reliability over time, while still yielding precise estimates. The results demonstrate the practical benefits of combining variational quantum algorithms with online conformal inference to achieve reliable quantum sensing on NISQ devices.
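To illustrate what a "guaranteed long-term risk level" can look like in practice, here is a minimal sketch of a generic online conformal update. It is not the paper's algorithm: the feedback model (the absolute estimation error is revealed after each round), the function name `online_conformal_radius`, and the parameters `alpha`, `gamma`, and `r0` are all assumptions made for illustration. The radius of the estimation set widens after a miss and shrinks slightly otherwise, which drives the long-run miss rate toward `alpha` whenever the errors are bounded.

```python
import numpy as np

def online_conformal_radius(errors, alpha=0.1, gamma=0.05, r0=0.0):
    """Track an interval radius so the long-run miss rate approaches alpha.

    errors: absolute estimation errors |theta_t - theta_hat_t|, assumed to be
            revealed after each round (hypothetical feedback model).
    """
    r = r0
    radii, misses = [], []
    for e in errors:
        radii.append(r)
        # 1.0 if the set [theta_hat - r, theta_hat + r] failed to cover theta_t
        miss = float(e > r)
        misses.append(miss)
        # Widen after a miss, shrink slightly after a cover.
        r = r + gamma * (miss - alpha)
    return np.array(radii), np.array(misses)

# Synthetic errors stand in for a real sensing loop (assumption).
rng = np.random.default_rng(0)
errors = np.abs(rng.normal(0.0, 1.0, size=5000))
radii, misses = online_conformal_radius(errors, alpha=0.1)
miss_rate = misses.mean()
```

Because the radius changes by `gamma * (miss - alpha)` each round and stays bounded, the gap between the empirical miss rate and `alpha` shrinks at a rate of order 1/T, regardless of how the errors are distributed.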

Learning Structured Compressed Sensing with Automatic Resource Allocation

Oct 24, 2024

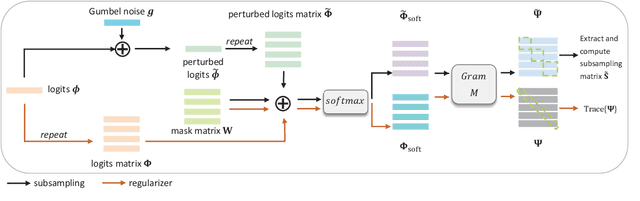

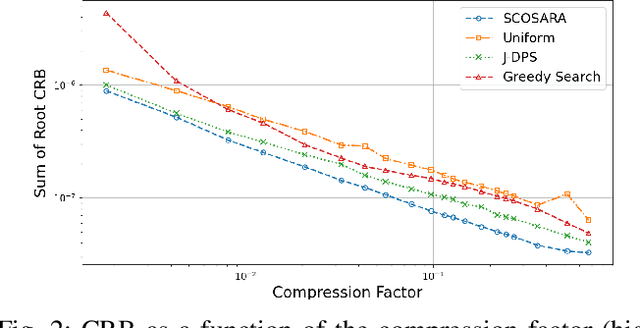

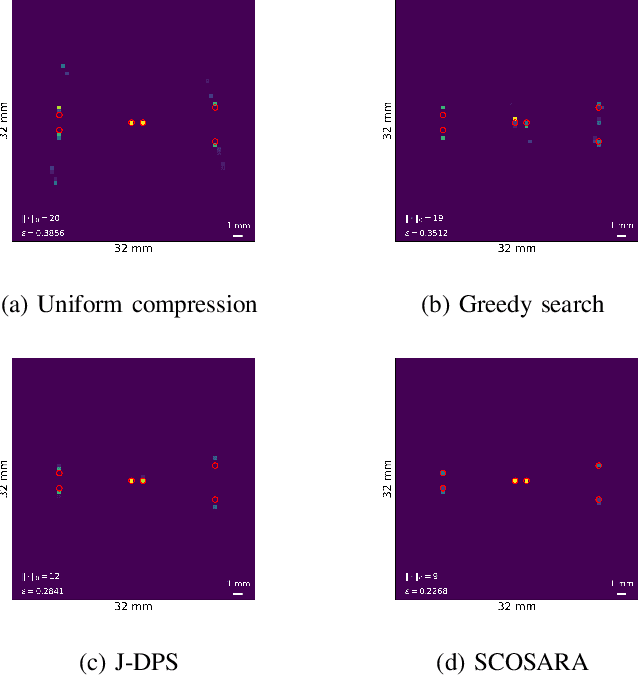

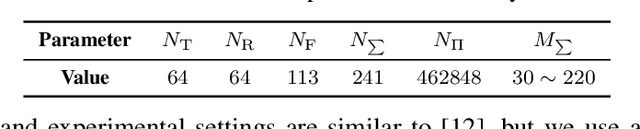

Abstract: Multidimensional data acquisition often requires extensive time and poses significant challenges for hardware and software regarding data storage and processing. Rather than designing a single compression matrix as in conventional compressed sensing, structured compressed sensing yields dimension-specific compression matrices, reducing the number of optimizable parameters. Recent advances in machine learning (ML) have enabled task-based supervised learning of subsampling matrices, albeit at the expense of complex downstream models. Additionally, the sampling resource allocation across dimensions is often determined in advance through heuristics. To address these challenges, we introduce Structured COmpressed Sensing with Automatic Resource Allocation (SCOSARA) with an information theory-based unsupervised learning strategy. SCOSARA adaptively distributes samples across sampling dimensions while maximizing Fisher information content. Using ultrasound localization as a case study, we compare SCOSARA to state-of-the-art ML-based and greedy search algorithms. Simulation results demonstrate that SCOSARA can produce high-quality subsampling matrices that achieve lower Cramér-Rao bound values than the baselines. In addition, SCOSARA outperforms other ML-based algorithms in terms of the number of trainable parameters, computational complexity, and memory requirements while automatically choosing the number of samples per axis.

Residual Quantization with Implicit Neural Codebooks

Jan 26, 2024

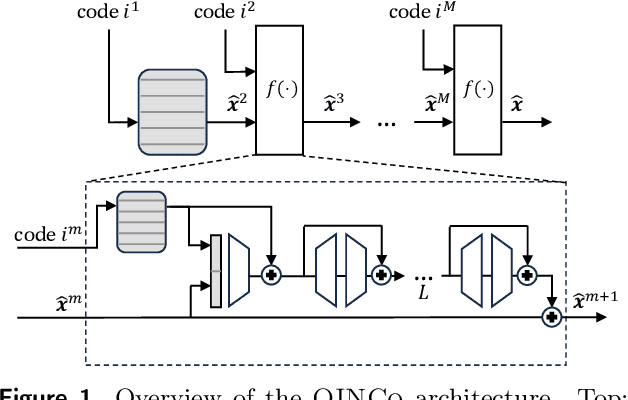

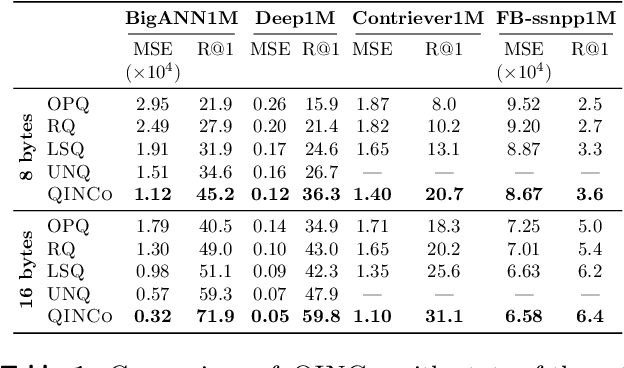

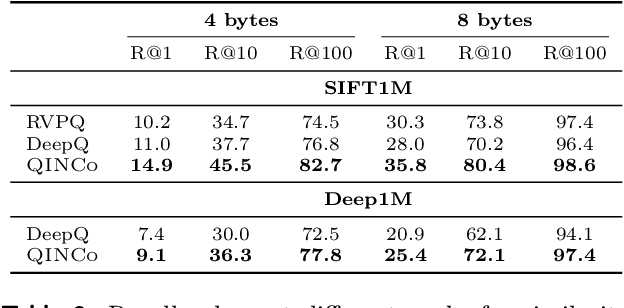

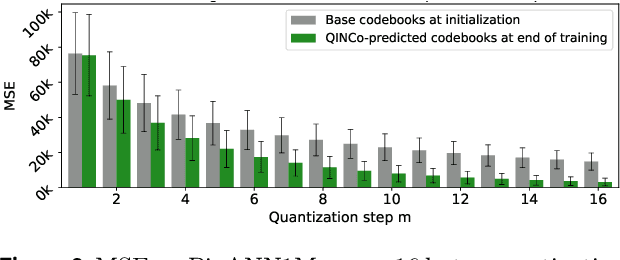

Abstract: Vector quantization is a fundamental operation for data compression and vector search. To obtain high accuracy, multi-codebook methods increase the rate by representing each vector using codewords across multiple codebooks. Residual quantization (RQ) is one such method, which increases accuracy by iteratively quantizing the error of the previous step. The error distribution is dependent on previously selected codewords. This dependency is, however, not accounted for in conventional RQ as it uses a generic codebook per quantization step. In this paper, we propose QINCo, a neural RQ variant which predicts specialized codebooks per vector using a neural network that is conditioned on the approximation of the vector from previous steps. Experiments show that QINCo outperforms state-of-the-art methods by a large margin on several datasets and code sizes. For example, QINCo achieves better nearest-neighbor search accuracy using 12-byte codes than other methods using 16 bytes on the BigANN and Deep1B datasets.
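The conventional RQ baseline described in the abstract (one fixed codebook per step, each step quantizing the residual of the previous one) can be sketched as follows. This is a toy illustration, not QINCo and not any library's implementation: the codebooks are simply sampled from the current residuals, and a zero codeword is placed in each codebook only so that the residual norm provably never grows in this example. The names `rq_encode` and `rq_decode` are hypothetical.

```python
import numpy as np

def rq_encode(x, codebooks):
    """Greedy residual quantization: pick one codeword index per step."""
    residual = x.copy()
    codes = np.empty((x.shape[0], len(codebooks)), dtype=np.int64)
    for m, cb in enumerate(codebooks):
        # Squared distance from every residual to every codeword: shape (n, K)
        d2 = ((residual[:, None, :] - cb[None, :, :]) ** 2).sum(axis=-1)
        codes[:, m] = d2.argmin(axis=1)
        # The next step quantizes what this step failed to explain.
        residual = residual - cb[codes[:, m]]
    return codes

def rq_decode(codes, codebooks):
    """Reconstruct by summing the selected codeword from each step."""
    return sum(cb[codes[:, m]] for m, cb in enumerate(codebooks))

rng = np.random.default_rng(0)
x = rng.normal(size=(200, 8))

# Toy codebook construction (assumption): sample 16 residuals per step.
codebooks, residual = [], x.copy()
for _ in range(4):
    cb = residual[rng.choice(len(residual), size=16, replace=False)].copy()
    cb[0] = 0.0  # zero codeword: illustrative choice, keeps the error non-increasing
    codebooks.append(cb)
    residual = residual - cb[rq_encode(residual, [cb])[:, 0]]

codes = rq_encode(x, codebooks)    # shape (200, 4), 4 bits per step
x_hat = rq_decode(codes, codebooks)
```

Each step's codebook here is the same for every vector, which is exactly the limitation the abstract points out: the residual distribution depends on the codewords already chosen, but the codebook does not.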

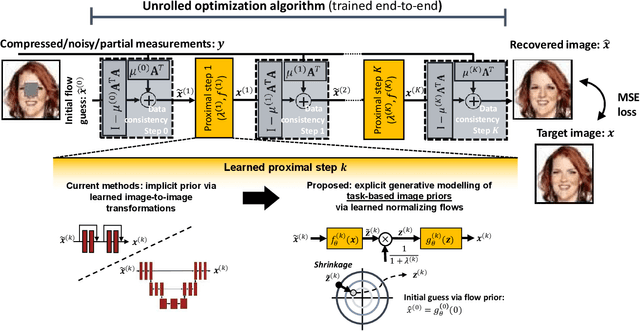

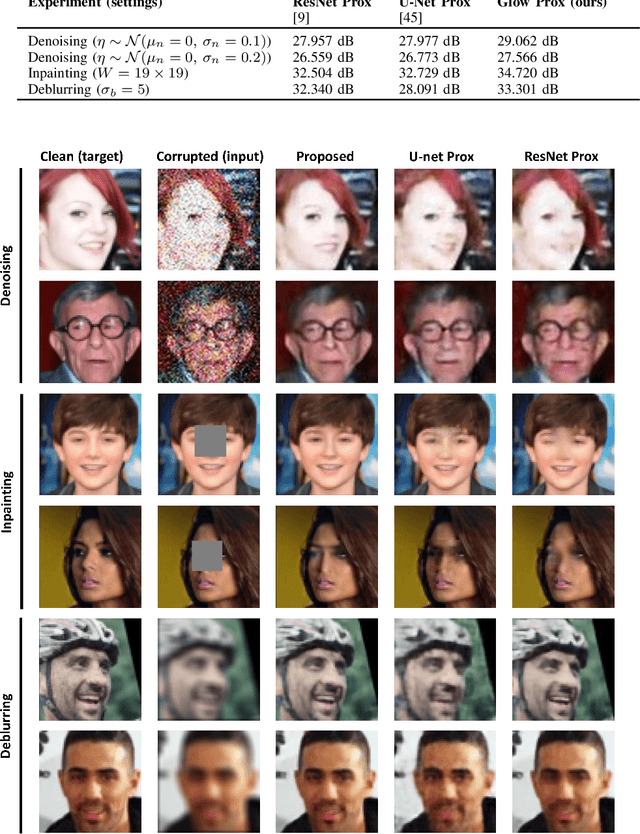

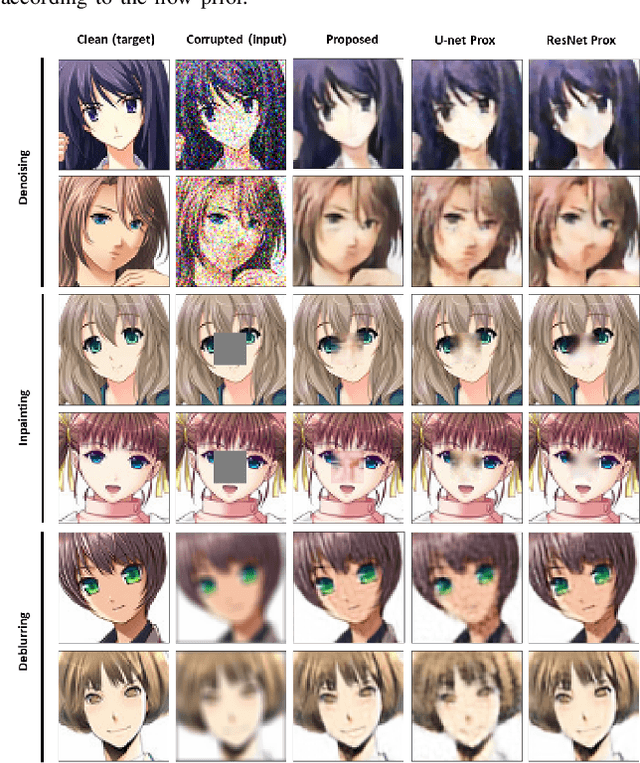

Deep Unfolding with Normalizing Flow Priors for Inverse Problems

Jul 06, 2021

Abstract: Many application domains, spanning from computational photography to medical imaging, require recovery of high-fidelity images from noisy, incomplete or partial/compressed measurements. State-of-the-art methods for solving these inverse problems combine deep learning with iterative model-based solvers, a concept known as deep algorithm unfolding. By combining a priori knowledge of the forward measurement model with learned (proximal) mappings based on deep networks, these methods yield solutions that are both physically feasible (data-consistent) and perceptually plausible. However, current proximal mappings only implicitly learn such image priors. In this paper, we propose to make these image priors fully explicit by embedding deep generative models in the form of normalizing flows within the unfolded proximal gradient algorithm. We demonstrate that the proposed method outperforms competitive baselines on various image recovery tasks, spanning from image denoising to inpainting and deblurring.
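The structure of an unfolded proximal gradient algorithm can be sketched with a fixed number of iterations, each made of a data-consistency gradient step followed by a proximal mapping. In the sketch below the proximal mapping is plain soft-thresholding (the classical ISTA choice for an l1 prior), swapped in as a stand-in for the learned, flow-based mapping the abstract describes. The function names, `n_layers`, `lam`, and the random test problem are assumptions for illustration only.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal mapping of t * ||.||_1 (stand-in for a learned prior)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def unfolded_pgd(y, A, n_layers=50, lam=0.1):
    """Run a fixed number of proximal gradient 'layers' for y = A x + noise."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_layers):
        grad = A.T @ (A @ x - y)             # gradient of 0.5 * ||A x - y||^2
        x = soft_threshold(x - step * grad, step * lam)
    return x

def objective(x, y, A, lam=0.1):
    return 0.5 * np.sum((A @ x - y) ** 2) + lam * np.sum(np.abs(x))

# Small synthetic compressed-measurement problem (assumption).
rng = np.random.default_rng(0)
A = rng.normal(size=(30, 60))
x_true = np.zeros(60)
x_true[rng.choice(60, size=5, replace=False)] = 1.0
y = A @ x_true + 0.01 * rng.normal(size=30)

x_hat = unfolded_pgd(y, A)
```

In a deep unfolding setting, each pass through the loop becomes a network layer, and the hand-crafted `soft_threshold` is replaced by a trainable mapping while the gradient step keeps the known forward model `A`.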
