Han Wang

OmniOCR: Generalist OCR for Ethnic Minority Languages

Feb 24, 2026

Abstract: Optical character recognition (OCR) has advanced rapidly with deep learning and multimodal models, yet most methods focus on well-resourced scripts such as Latin and Chinese. Ethnic minority languages remain underexplored due to complex writing systems, scarce annotations, and diverse historical and modern forms, making generalization in low-resource or zero-shot settings challenging. To address these challenges, we present OmniOCR, a universal framework for ethnic minority scripts. OmniOCR introduces Dynamic Low-Rank Adaptation (Dynamic LoRA) to allocate model capacity across layers and scripts, enabling effective adaptation while preserving knowledge. A sparsity regularization prunes redundant updates, ensuring compact and efficient adaptation without extra inference cost. Evaluations on TibetanMNIST, Shui, ancient Yi, and Dongba show that OmniOCR outperforms zero-shot foundation models and standard post-training, achieving state-of-the-art accuracy with superior parameter efficiency. Compared with state-of-the-art baseline models, it improves accuracy by 39%-66% on these four datasets. Code: https://github.com/AIGeeksGroup/OmniOCR.
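To make the "no extra inference cost" point concrete, here is a minimal sketch of generic low-rank adaptation with per-rank gates. It is not the paper's Dynamic LoRA: the function name `merge_lora`, the `gates` vector, and the pruning `threshold` are all illustrative assumptions. The idea shown is only that rank components whose gate has been driven to (near) zero can be dropped, and the remaining update folded into the base weight matrix.

```python
def merge_lora(W, B, A, gates, threshold=1e-3):
    """Fold a gated low-rank update into W (all names are hypothetical).

    W: d_out x d_in base weights, B: d_out x r, A: r x d_in.
    Returns (W + sum over kept k of gates[k] * outer(B[:, k], A[k, :]), kept).
    """
    # Rank components with a (near-)zero gate are treated as pruned.
    kept = [k for k, g in enumerate(gates) if abs(g) >= threshold]
    merged = [row[:] for row in W]
    for i in range(len(W)):
        for j in range(len(W[0])):
            merged[i][j] += sum(gates[k] * B[i][k] * A[k][j] for k in kept)
    return merged, kept


W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0, 5.0], [2.0, 5.0]]
A = [[0.5, 0.0], [9.0, 9.0]]
gates = [1.0, 0.0]  # second rank component has been pruned

merged, kept = merge_lora(W, B, A, gates)
print(kept)    # [0]
print(merged)  # [[1.5, 0.0], [1.0, 1.0]]
```

After merging, inference uses a single matrix of the original shape, so the adapter adds no extra matrix multiplications at run time.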

City Editing: Hierarchical Agentic Execution for Dependency-Aware Urban Geospatial Modification

Feb 22, 2026

Abstract: As cities evolve over time, challenges such as traffic congestion and functional imbalance increasingly necessitate urban renewal through efficient modification of existing plans, rather than complete re-planning. In practice, even minor urban changes require substantial manual effort to redraw geospatial layouts, slowing the iterative planning and decision-making procedure. Motivated by recent advances in agentic systems and multimodal reasoning, we formulate urban renewal as a machine-executable task that iteratively modifies existing urban plans represented in structured geospatial formats. More specifically, we represent urban layouts using GeoJSON and decompose natural-language editing instructions into hierarchical geometric intents spanning polygon-, line-, and point-level operations. To coordinate interdependent edits across spatial elements and abstraction levels, we propose a hierarchical agentic framework that jointly performs multi-level planning and execution with explicit propagation of intermediate spatial constraints. We further introduce an iterative execution-validation mechanism that mitigates error accumulation and enforces global spatial consistency during multi-step editing. Extensive experiments across diverse urban editing scenarios demonstrate significant improvements in efficiency, robustness, correctness, and spatial validity over existing baselines.
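As a rough illustration of a polygon-level edit followed by a validation step, the sketch below shifts a GeoJSON Polygon feature and accepts the result only if every linear ring is still closed. The helper names (`translate_polygon`, `rings_are_closed`, `apply_with_validation`) and the sample feature are hypothetical and are not taken from the paper; the closed-ring rule follows the GeoJSON specification.

```python
import copy


def translate_polygon(feature, dx, dy):
    """Return a copy of a GeoJSON Polygon feature shifted by (dx, dy)."""
    edited = copy.deepcopy(feature)
    edited["geometry"]["coordinates"] = [
        [[x + dx, y + dy] for x, y in ring]
        for ring in feature["geometry"]["coordinates"]
    ]
    return edited


def rings_are_closed(feature):
    """Each linear ring needs >= 4 positions and must end where it starts."""
    return all(
        len(ring) >= 4 and ring[0] == ring[-1]
        for ring in feature["geometry"]["coordinates"]
    )


def apply_with_validation(feature, edit, validate):
    """Run one edit; keep the original feature if validation fails."""
    candidate = edit(feature)
    return candidate if validate(candidate) else feature


block = {
    "type": "Feature",
    "properties": {"name": "block-A"},  # hypothetical sample data
    "geometry": {
        "type": "Polygon",
        "coordinates": [[[0, 0], [4, 0], [4, 3], [0, 3], [0, 0]]],
    },
}

moved = apply_with_validation(
    block, lambda f: translate_polygon(f, 10, 5), rings_are_closed
)
print(moved["geometry"]["coordinates"][0][0])  # [10, 5]
```

Rejecting an invalid candidate before the next step is one simple way to keep errors from accumulating across a multi-step edit sequence.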

Fusing Pixels and Genes: Spatially-Aware Learning in Computational Pathology

Feb 15, 2026

Abstract: Recent years have witnessed remarkable progress in multimodal learning within computational pathology. Existing models primarily rely on vision and language modalities; however, language alone lacks molecular specificity and offers limited pathological supervision, leading to representational bottlenecks. In this paper, we propose STAMP, a Spatial Transcriptomics-Augmented Multimodal Pathology representation learning framework that integrates spatially-resolved gene expression profiles to enable molecule-guided joint embedding of pathology images and transcriptomic data. Our study shows that self-supervised, gene-guided training provides a robust and task-agnostic signal for learning pathology image representations. Incorporating spatial context and multi-scale information further enhances model performance and generalizability. To support this, we constructed SpaVis-6M, the largest Visium-based spatial transcriptomics dataset to date, and trained a spatially-aware gene encoder on this resource. Leveraging hierarchical multi-scale contrastive alignment and cross-scale patch localization mechanisms, STAMP effectively aligns spatial transcriptomics with pathology images, capturing spatial structure and molecular variation. We validate STAMP across six datasets and four downstream tasks, where it consistently achieves strong performance. These results highlight the value and necessity of integrating spatially resolved molecular supervision for advancing multimodal learning in computational pathology. The code is included in the supplementary materials. The pretrained weights and SpaVis-6M are available at: https://github.com/Hanminghao/STAMP.

Multimodal Fact-Level Attribution for Verifiable Reasoning

Feb 12, 2026

Abstract: Multimodal large language models (MLLMs) are increasingly used for real-world tasks involving multi-step reasoning and long-form generation, where reliability requires grounding model outputs in heterogeneous input sources and verifying individual factual claims. However, existing multimodal grounding benchmarks and evaluation methods focus on simplified, observation-based scenarios or limited modalities and fail to assess attribution in complex multimodal reasoning. We introduce MuRGAt (Multimodal Reasoning with Grounded Attribution), a benchmark for evaluating fact-level multimodal attribution in settings that require reasoning beyond direct observation. Given inputs spanning video, audio, and other modalities, MuRGAt requires models to generate answers with explicit reasoning and precise citations, where each citation specifies both modality and temporal segments. To enable reliable assessment, we introduce an automatic evaluation framework that strongly correlates with human judgments. Benchmarking with human and automated scores reveals that even strong MLLMs frequently hallucinate citations despite correct reasoning. Moreover, we observe a key trade-off: increasing reasoning depth or enforcing structured grounding often degrades accuracy, highlighting a significant gap between internal reasoning and verifiable attribution.

StreamSense: Streaming Social Task Detection with Selective Vision-Language Model Routing

Jan 30, 2026

Abstract: Live streaming platforms require real-time monitoring and reaction to social signals, utilizing partial and asynchronous evidence from video, text, and audio. We propose StreamSense, a streaming detector that couples a lightweight streaming encoder with selective routing to a Vision-Language Model (VLM) expert. StreamSense handles most timestamps with the lightweight streaming encoder, escalates hard/ambiguous cases to the VLM, and defers decisions when context is insufficient. The encoder is trained using (i) a cross-modal contrastive term to align visual/audio cues with textual signals, and (ii) an IoU-weighted loss that down-weights poorly overlapping target segments, mitigating label interference across segment boundaries. We evaluate StreamSense on multiple social streaming detection tasks (e.g., sentiment classification and hate content moderation), and the results show that StreamSense achieves higher accuracy than VLM-only streaming while only occasionally invoking the VLM, thereby reducing average latency and compute. Our results indicate that selective escalation and deferral are effective primitives for understanding streaming social tasks. Code is publicly available on GitHub.
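The handle / escalate / defer behaviour described above can be pictured as a three-way routing rule. The sketch below is only an illustration: the function `route`, its inputs, and the thresholds `min_context` and `accept` are hypothetical and are not taken from the paper, which does not state its routing criteria in the abstract.

```python
def route(confidence, context_seconds, *, min_context=2.0, accept=0.8):
    """Pick a path for one timestamp (all thresholds are assumed values).

    confidence: the lightweight encoder's top-class probability.
    context_seconds: how much stream context has been observed so far.
    """
    if context_seconds < min_context:
        return "defer"            # too little evidence to decide yet
    if confidence >= accept:
        return "encoder"          # easy case: keep the cheap prediction
    return "escalate_to_vlm"      # hard or ambiguous case: ask the expert


print(route(0.95, 5.0))  # encoder
print(route(0.50, 5.0))  # escalate_to_vlm
print(route(0.95, 0.5))  # defer
```

Because only the low-confidence timestamps reach the expensive model, average latency and compute depend on how often the final branch is taken.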

Compressed BC-LISTA via Low-Rank Convolutional Decomposition

Jan 30, 2026

Abstract: We study Sparse Signal Recovery (SSR) methods for multichannel imaging with compressed forward and backward operators that preserve reconstruction accuracy. We propose a Compressed Block-Convolutional (C-BC) measurement model based on a low-rank Convolutional Neural Network (CNN) decomposition that is analytically initialized from a low-rank factorization of physics-derived forward/backward operators in time delay-based measurements. We use Orthogonal Matching Pursuit (OMP) to select a compact set of basis filters from the analytic model and compute linear mixing coefficients to approximate the full model. We consider the Learned Iterative Shrinkage-Thresholding Algorithm (LISTA) network as a representative example for which the C-BC-LISTA extension is presented. In simulated multichannel ultrasound imaging across multiple Signal-to-Noise Ratios (SNRs), C-BC-LISTA requires substantially fewer parameters and smaller model size than other state-of-the-art (SOTA) methods while improving reconstruction accuracy. In ablations over OMP, Singular Value Decomposition (SVD)-based, and random initializations, OMP-initialized structured compression performs best, yielding the most efficient training and the best performance.
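For readers unfamiliar with LISTA, the sketch below shows one unrolled layer of the plain algorithm with small dense matrices, x_next = soft_threshold(W_e y + S x, theta). It does not implement the compressed block-convolutional operators proposed in the abstract; the helper names and the toy values are assumptions chosen so the arithmetic is easy to follow. In LISTA, `W_e`, `S`, and `theta` are learned; here they are fixed by hand.

```python
def soft_threshold(v, theta):
    """Elementwise shrinkage: sign(x) * max(|x| - theta, 0)."""
    return [max(abs(x) - theta, 0.0) * (1.0 if x > 0 else -1.0) for x in v]


def matvec(M, v):
    """Dense matrix-vector product on plain Python lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lista_layer(x, y, W_e, S, theta):
    """One unrolled layer: soft_threshold(W_e @ y + S @ x, theta)."""
    pre = [a + b for a, b in zip(matvec(W_e, y), matvec(S, x))]
    return soft_threshold(pre, theta)


# Toy setting: identity measurement operator with unit step size, for which
# the classical ISTA choice gives W_e = I and S = 0.
W_e = [[1.0, 0.0], [0.0, 1.0]]
S = [[0.0, 0.0], [0.0, 0.0]]
y = [1.0, 0.2]

x1 = lista_layer([0.0, 0.0], y, W_e, S, theta=0.5)
print(x1)  # [0.5, 0.0]
```

The small second entry of `y` is shrunk to zero, which is how the thresholding step promotes sparse solutions.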

How do Visual Attributes Influence Web Agents? A Comprehensive Evaluation of User Interface Design Factors

Jan 29, 2026

Abstract: Web agents have demonstrated strong performance on a wide range of web-based tasks. However, existing research on the effect of environmental variation has mostly focused on robustness to adversarial attacks, with less attention to agents' preferences in benign scenarios. Although early studies have examined how textual attributes influence agent behavior, a systematic understanding of how visual attributes shape agent decision-making remains limited. To address this, we introduce VAF, a controlled evaluation pipeline for quantifying how webpage Visual Attribute Factors influence web-agent decision-making. Specifically, VAF consists of three stages: (i) variant generation, which ensures that the variants share the same semantics as the original item and differ only in visual attributes; (ii) browsing interaction, where agents navigate the page by scrolling and clicking the item of interest, mirroring how human users browse online; (iii) validation through both the click actions and the reasoning of agents, for which we use the Target Click Rate and Target Mention Rate to jointly evaluate the effect of visual attributes. By quantitatively measuring the decision-making difference between the original and the variant, we identify which visual attributes influence agents' behavior most. Extensive experiments across 8 variant families (48 variants total), 5 real-world websites (including shopping, travel, and news browsing), and 4 representative web agents show that background color contrast, item size, position, and card clarity have a strong influence on agents' actions, whereas font styling, text color, and item image clarity exhibit minor effects.

V-Zero: Self-Improving Multimodal Reasoning with Zero Annotation

Jan 15, 2026

Abstract: Recent advances in multimodal learning have significantly enhanced the reasoning capabilities of vision-language models (VLMs). However, state-of-the-art approaches rely heavily on large-scale human-annotated datasets, which are costly and time-consuming to acquire. To overcome this limitation, we introduce V-Zero, a general post-training framework that facilitates self-improvement using exclusively unlabeled images. V-Zero establishes a co-evolutionary loop by instantiating two distinct roles: a Questioner and a Solver. The Questioner learns to synthesize high-quality, challenging questions by leveraging a dual-track reasoning reward that contrasts intuitive guesses with reasoned results. The Solver is optimized using pseudo-labels derived from majority voting over its own sampled responses. Both roles are trained iteratively via Group Relative Policy Optimization (GRPO), driving a cycle of mutual enhancement. Remarkably, without a single human annotation, V-Zero achieves consistent performance gains on Qwen2.5-VL-7B-Instruct, improving visual mathematical reasoning by +1.7 and general vision-centric tasks by +2.6, demonstrating the potential of self-improvement in multimodal systems. Code is available at https://github.com/SatonoDia/V-Zero
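The pseudo-labelling step for the Solver can be sketched in a few lines: sample several answers to the same question, take the most frequent one as the label, and record how strongly the samples agree. The function name `majority_vote` and the sample answers are illustrative assumptions; how the paper normalizes answers or uses the agreement score is not stated in the abstract.

```python
from collections import Counter


def majority_vote(responses):
    """Return (pseudo_label, agreement) for a list of sampled answers."""
    if not responses:
        raise ValueError("need at least one sampled response")
    # most_common(1) yields the answer with the highest count; ties go to
    # the answer that appeared first in the list.
    label, votes = Counter(responses).most_common(1)[0]
    return label, votes / len(responses)


samples = ["12", "12", "15", "12", "9"]  # hypothetical sampled answers
label, agreement = majority_vote(samples)
print(label, agreement)  # 12 0.6
```

The agreement ratio is a natural by-product of the vote and could serve as a rough confidence signal for the pseudo-label.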

You Only Look Omni Gradient Backpropagation for Moving Infrared Small Target Detection

Nov 17, 2025

Abstract: Moving infrared small target detection is a key component of infrared search and tracking systems, yet it remains extremely challenging due to low signal-to-clutter ratios, severe target-background imbalance, and weak discriminative features. Existing deep learning methods primarily focus on spatio-temporal feature aggregation, but their gains are limited, revealing that the fundamental bottleneck lies in ambiguous per-frame feature representations rather than spatio-temporal modeling itself. Motivated by this insight, we propose BP-FPN, a backpropagation-driven feature pyramid architecture that fundamentally rethinks feature learning for small targets. BP-FPN introduces Gradient-Isolated Low-Level Shortcut (GILS) to efficiently incorporate fine-grained target details without inducing shortcut learning, and Directional Gradient Regularization (DGR) to enforce hierarchical feature consistency during backpropagation. The design is theoretically grounded, introduces negligible computational overhead, and can be seamlessly integrated into existing frameworks. Extensive experiments on multiple public datasets show that BP-FPN consistently establishes new state-of-the-art performance. To the best of our knowledge, it is the first FPN designed for this task entirely from the backpropagation perspective.

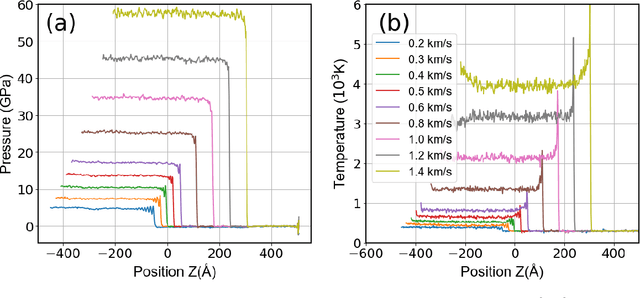

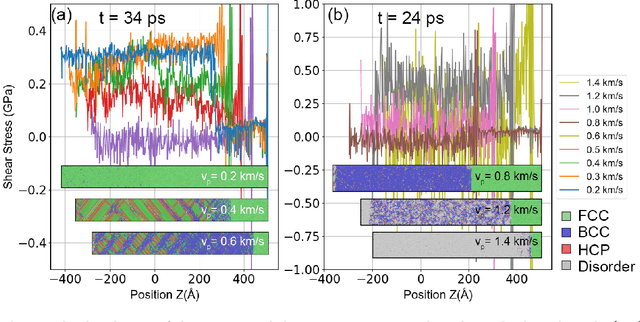

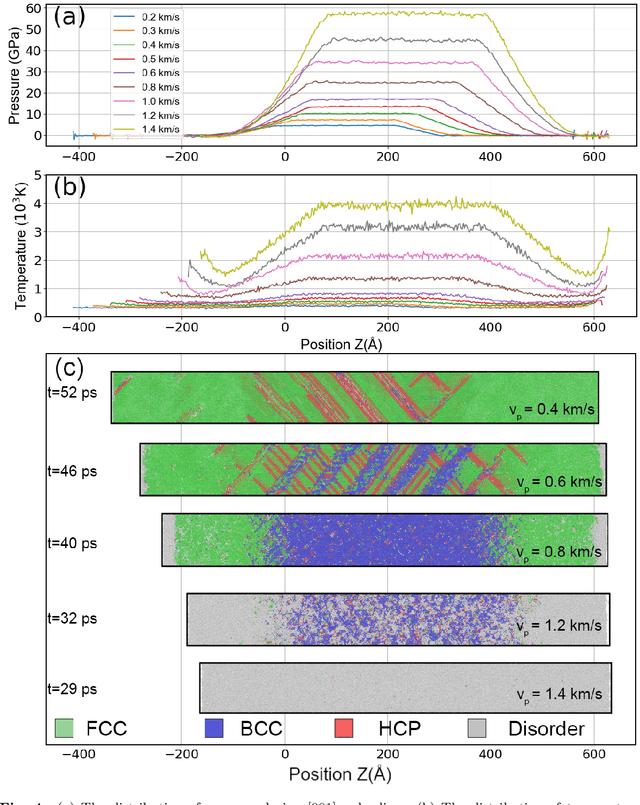

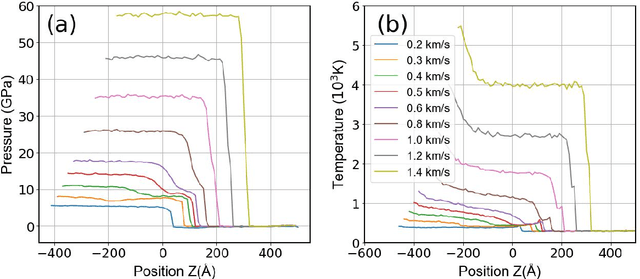

Revealing the dynamic responses of Pb under shock loading based on DFT-accuracy machine learning potential

Nov 17, 2025

Abstract: Lead (Pb) is a typical low-melting-point ductile metal and serves as an important model material in the study of dynamic responses. Under shock-wave loading, its dynamic mechanical behavior comprises two key phenomena: plastic deformation and shock-induced phase transitions. The underlying mechanisms of these processes are still poorly understood, and revealing them remains challenging for experimental approaches. Non-equilibrium molecular dynamics (NEMD) simulations are an alternative theoretical tool for studying dynamic responses, as they capture atomic-scale mechanisms such as defect evolution and deformation pathways. However, due to the limited accuracy of empirical interatomic potentials, the reliability of previous NEMD studies has been questioned. Using our newly developed machine learning potential for Pb-Sn alloys, we revisited the microstructure evolution in response to shock loading under various shock orientations. The results reveal that shock loading along the [001] orientation of Pb exhibits a fast, reversible, and massive phase transition and stacking fault evolution. The behavior of Pb differs from that reported in previous studies in that no twinning occurs during plastic deformation. Loading along the [011] orientation leads to slow, irreversible plastic deformation and a localized FCC-BCC phase transition in the Pitsch orientation relationship. This study provides crucial theoretical insights into the dynamic mechanical response of Pb, offering a theoretical input for understanding the microstructure-performance relationship under extreme conditions.
