Yuhong Zhang

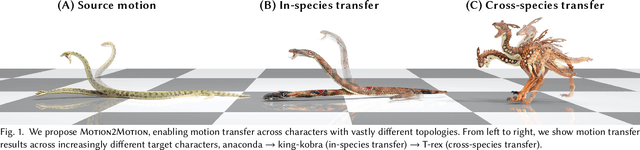

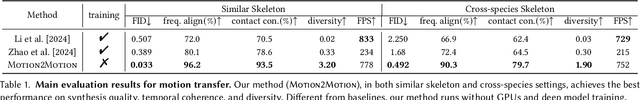

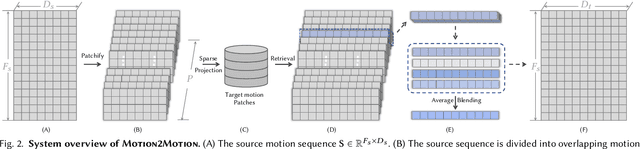

Motion2Motion: Cross-topology Motion Transfer with Sparse Correspondence

Aug 18, 2025

Abstract:This work studies the challenge of transferring animations between characters whose skeletal topologies differ substantially. While many techniques have advanced motion retargeting over the past decades, transferring motions across diverse topologies remains less explored. The primary obstacle lies in the inherent topological inconsistency between source and target skeletons, which restricts the establishment of straightforward one-to-one bone correspondences. Besides, the current lack of large-scale paired motion datasets spanning different topological structures severely constrains the development of data-driven approaches. To address these limitations, we introduce Motion2Motion, a novel, training-free framework. Simple yet effective, Motion2Motion works with only one or a few example motions on the target skeleton, by accessing a sparse set of bone correspondences between the source and target skeletons. Through comprehensive qualitative and quantitative evaluations, we demonstrate that Motion2Motion achieves efficient and reliable performance in both similar-skeleton and cross-species skeleton transfer scenarios. The practical utility of our approach is further evidenced by its successful integration in downstream applications and user interfaces, highlighting its potential for industrial applications. Code and data are available at https://lhchen.top/Motion2Motion.

AnimeColor: Reference-based Animation Colorization with Diffusion Transformers

Jul 27, 2025

Abstract:Animation colorization plays a vital role in animation production, yet existing methods struggle to achieve color accuracy and temporal consistency. To address these challenges, we propose AnimeColor, a novel reference-based animation colorization framework leveraging Diffusion Transformers (DiT). Our approach integrates sketch sequences into a DiT-based video diffusion model, enabling sketch-controlled animation generation. We introduce two key components: a High-level Color Extractor (HCE) to capture semantic color information and a Low-level Color Guider (LCG) to extract fine-grained color details from reference images. These components work synergistically to guide the video diffusion process. Additionally, we employ a multi-stage training strategy to maximize the utilization of reference image color information. Extensive experiments demonstrate that AnimeColor outperforms existing methods in color accuracy, sketch alignment, temporal consistency, and visual quality. Our framework not only advances the state of the art in animation colorization but also provides a practical solution for industrial applications. The code will be made publicly available at https://github.com/IamCreateAI/AnimeColor.

Graph Representations for Reading Comprehension Analysis using Large Language Model and Eye-Tracking Biomarker

Jul 16, 2025

Abstract:Reading comprehension is a fundamental skill in human cognitive development. With the advancement of Large Language Models (LLMs), there is a growing need to compare how humans and LLMs understand language across different contexts and apply this understanding to functional tasks such as inference, emotion interpretation, and information retrieval. Our previous work used LLMs and human biomarkers to study the reading comprehension process. The results showed that the biomarkers corresponding to words with high and low relevance to the inference target, as labeled by the LLMs, exhibited distinct patterns, particularly when validated using eye-tracking data. However, focusing solely on individual words limited the depth of understanding, which made the conclusions somewhat simplistic despite their potential significance. This study uses an LLM-based AI agent to group words from a reading passage into nodes and edges, forming a graph-based text representation based on semantic meaning and question-oriented prompts. We then compare the distribution of eye fixations on important nodes and edges. Our findings indicate that LLMs exhibit high consistency in language understanding at the level of graph topological structure. These results build on our previous findings and offer insights into effective human-AI co-learning strategies.

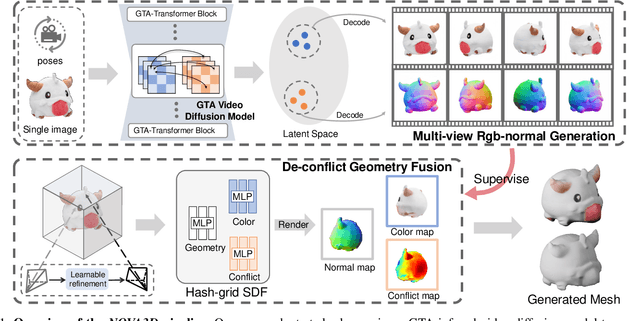

NOVA3D: Normal Aligned Video Diffusion Model for Single Image to 3D Generation

Jun 09, 2025

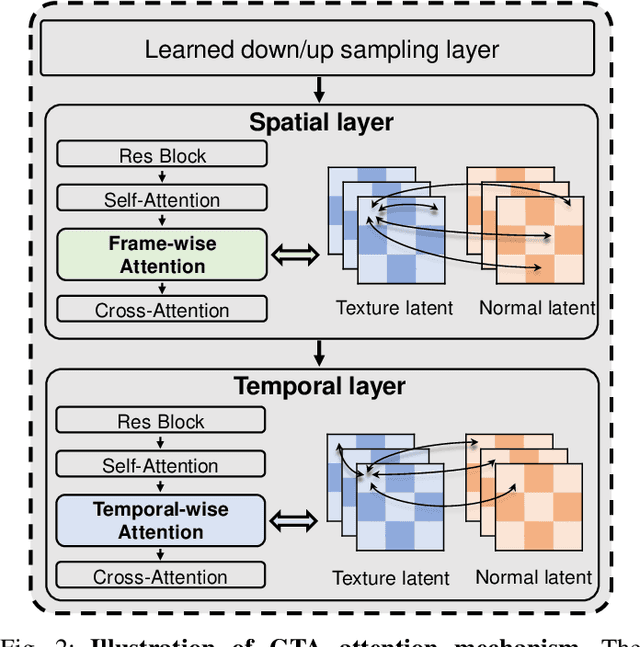

Abstract:3D AI-generated content (AIGC) has made it increasingly accessible for anyone to become a 3D content creator. While recent methods leverage Score Distillation Sampling to distill 3D objects from pretrained image diffusion models, they often suffer from inadequate 3D priors, leading to insufficient multi-view consistency. In this work, we introduce NOVA3D, an innovative single-image-to-3D generation framework. Our key insight lies in leveraging strong 3D priors from a pretrained video diffusion model and integrating geometric information during multi-view video fine-tuning. To facilitate information exchange between color and geometric domains, we propose the Geometry-Temporal Alignment (GTA) attention mechanism, thereby improving generalization and multi-view consistency. Moreover, we introduce the de-conflict geometry fusion algorithm, which improves texture fidelity by addressing multi-view inaccuracies and resolving discrepancies in pose alignment. Extensive experiments validate the superiority of NOVA3D over existing baselines.

HumanMM: Global Human Motion Recovery from Multi-shot Videos

Mar 10, 2025

Abstract:In this paper, we present a novel framework designed to reconstruct long-sequence 3D human motion in world coordinates from in-the-wild videos with multiple shot transitions. Such long-sequence in-the-wild motions are highly valuable to applications such as motion generation and motion understanding, but are very challenging to recover due to the abrupt shot transitions, partial occlusions, and dynamic backgrounds present in such videos. Existing methods primarily focus on single-shot videos, where continuity is maintained within a single camera view, or simplify multi-shot alignment in camera space only. In this work, we tackle these challenges by integrating enhanced camera pose estimation with Human Motion Recovery (HMR), incorporating a shot transition detector and a robust alignment module for accurate pose and orientation continuity across shots. By leveraging a custom motion integrator, we effectively mitigate the problem of foot sliding and ensure temporal consistency in human pose. Extensive evaluations on our created multi-shot dataset from public 3D human datasets demonstrate the robustness of our method in reconstructing realistic human motion in world coordinates.

Frequency-Based Alignment of EEG and Audio Signals Using Contrastive Learning and SincNet for Auditory Attention Detection

Mar 06, 2025

Abstract:Humans exhibit a remarkable ability to focus auditory attention in complex acoustic environments, such as cocktail parties. Auditory attention detection (AAD) aims to identify the attended speaker by analyzing brain signals, such as electroencephalography (EEG) data. Existing AAD algorithms often leverage deep learning's powerful nonlinear modeling capabilities, but few consider the neural mechanisms underlying auditory processing in the brain. In this paper, we propose SincAlignNet, a novel network based on an improved SincNet and contrastive learning, designed to align audio and EEG features for auditory attention detection. The SincNet component simulates the brain's processing of audio during auditory attention, while contrastive learning guides the model to learn the relationship between EEG signals and attended speech. During inference, we calculate the cosine similarity between EEG and audio features and also explore direct inference of the attended speaker using EEG data. Cross-trial evaluation results demonstrate that SincAlignNet outperforms state-of-the-art AAD methods on two publicly available datasets, KUL and DTU, achieving average accuracies of 78.3% and 92.2%, respectively, with a 1-second decision window. The model exhibits strong interpretability, revealing that the left and right temporal lobes are more active during both male and female speaker scenarios. Furthermore, we found that using data from only six electrodes near the temporal lobes maintains similar or even better performance compared to using 64 electrodes. These findings indicate that efficient low-density EEG online decoding is achievable, marking an important step toward the practical implementation of neuro-guided hearing aids in real-world applications. Code is available at: https://github.com/LiaoEuan/SincAlignNet.
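
The cosine-similarity inference step mentioned in the abstract above can be illustrated with a minimal sketch. This is not the SincAlignNet implementation: the function names `cosine_similarity` and `pick_attended_speaker` and the toy feature vectors are hypothetical, and the sketch assumes the EEG and audio encoders have already produced fixed-length embeddings.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def pick_attended_speaker(
    eeg_feat: Sequence[float], audio_feats: Sequence[Sequence[float]]
) -> Tuple[int, List[float]]:
    """Return the index of the candidate speaker whose audio embedding is most
    similar to the EEG embedding, together with all similarity scores."""
    sims = [cosine_similarity(eeg_feat, feat) for feat in audio_feats]
    best = max(range(len(sims)), key=sims.__getitem__)
    return best, sims


# Toy embeddings (hypothetical values, for illustration only).
eeg = [1.0, 0.0]
speakers = [[0.9, 0.1], [0.0, 1.0]]
attended, scores = pick_attended_speaker(eeg, speakers)
```

In this toy case the first speaker's embedding points in nearly the same direction as the EEG embedding, so it is selected as the attended speaker.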

Consistent Video Colorization via Palette Guidance

Jan 31, 2025

Abstract:Colorization is a traditional computer vision task, and it plays an important role in many time-consuming tasks, such as old film restoration. Existing methods suffer from unsaturated color and temporal inconsistency. In this paper, we propose a novel pipeline to overcome these challenges. We regard the colorization task as a generative task and introduce Stable Video Diffusion (SVD) as our base model. We design a palette-based color guider to assist the model in generating vivid and consistent colors. The color context introduced by the palette not only provides guidance for color generation, but also enhances the stability of the generated colors through a unified color context across multiple sequences. Experiments demonstrate that the proposed method can provide vivid and stable colors for videos, surpassing previous methods.

Motion-X++: A Large-Scale Multimodal 3D Whole-body Human Motion Dataset

Jan 09, 2025

Abstract:In this paper, we introduce Motion-X++, a large-scale multimodal 3D expressive whole-body human motion dataset. Existing motion datasets predominantly capture body-only poses, lacking facial expressions, hand gestures, and fine-grained pose descriptions, and are typically limited to lab settings with manually labeled text descriptions, thereby restricting their scalability. To address this issue, we develop a scalable annotation pipeline that can automatically capture 3D whole-body human motion and comprehensive textual labels from RGB videos and build the Motion-X dataset comprising 81.1K text-motion pairs. Furthermore, we extend Motion-X into Motion-X++ by improving the annotation pipeline, introducing more data modalities, and scaling up the data quantities. Motion-X++ provides 19.5M 3D whole-body pose annotations covering 120.5K motion sequences from massive scenes, 80.8K RGB videos, 45.3K audios, 19.5M frame-level whole-body pose descriptions, and 120.5K sequence-level semantic labels. Comprehensive experiments validate the accuracy of our annotation pipeline and highlight Motion-X++'s significant benefits for generating expressive, precise, and natural motion with paired multimodal labels supporting several downstream tasks, including text-driven whole-body motion generation, audio-driven motion generation, 3D whole-body human mesh recovery, and 2D whole-body keypoints estimation.

Towards Effective Graph Rationalization via Boosting Environment Diversity

Dec 17, 2024

Abstract:Graph Neural Networks (GNNs) perform effectively when training and testing graphs are drawn from the same distribution, but struggle to generalize well in the face of distribution shifts. To address this issue, existing mainstream graph rationalization methods first identify rationale and environment subgraphs from input graphs, and then diversify training distributions by augmenting the environment subgraphs. However, these methods merely combine the learned rationale subgraphs with environment subgraphs in the representation space to produce augmentation samples, failing to produce sufficiently diverse distributions. Thus, in this paper, we propose to achieve effective Graph Rationalization by Boosting Environmental diversity, a GRBE approach that generates the augmented samples in the original graph space to improve the diversity of the environment subgraph. Firstly, to ensure the effectiveness of augmentation samples, we propose a precise rationale subgraph extraction strategy in GRBE to refine the rationale subgraph learning process in the original graph space. Secondly, to ensure the diversity of augmented samples, we propose an environment diversity augmentation strategy in GRBE that mixes the environment subgraphs of different graphs in the original graph space and then combines the new environment subgraphs with rationale subgraphs to generate augmented graphs. The average improvements of 7.65% and 6.11% in rationalization and classification performance on benchmark datasets demonstrate the superiority of GRBE over state-of-the-art approaches.

DINO-X: A Unified Vision Model for Open-World Object Detection and Understanding

Nov 21, 2024

Abstract:In this paper, we introduce DINO-X, which is a unified object-centric vision model developed by IDEA Research with the best open-world object detection performance to date. DINO-X employs the same Transformer-based encoder-decoder architecture as Grounding DINO 1.5 to pursue an object-level representation for open-world object understanding. To make long-tailed object detection easy, DINO-X extends its input options to support text prompt, visual prompt, and customized prompt. With such flexible prompt options, we develop a universal object prompt to support prompt-free open-world detection, making it possible to detect anything in an image without requiring users to provide any prompt. To enhance the model's core grounding capability, we have constructed a large-scale dataset with over 100 million high-quality grounding samples, referred to as Grounding-100M, for advancing the model's open-vocabulary detection performance. Pre-training on such a large-scale grounding dataset leads to a foundational object-level representation, which enables DINO-X to integrate multiple perception heads to simultaneously support multiple object perception and understanding tasks, including detection, segmentation, pose estimation, object captioning, object-based QA, etc. Experimental results demonstrate the superior performance of DINO-X. Specifically, the DINO-X Pro model achieves 56.0 AP, 59.8 AP, and 52.4 AP on the COCO, LVIS-minival, and LVIS-val zero-shot object detection benchmarks, respectively. Notably, it scores 63.3 AP and 56.5 AP on the rare classes of LVIS-minival and LVIS-val benchmarks, both improving the previous SOTA performance by 5.8 AP. Such a result underscores its significantly improved capacity for recognizing long-tailed objects.
