Zhuoran Zheng

SSPFormer: Self-Supervised Pretrained Transformer for MRI Images

Jan 19, 2026

Abstract:The pre-trained transformer demonstrates remarkable generalization ability in natural image processing. However, directly transferring it to magnetic resonance images faces two key challenges: the inability to adapt to the specificity of medical anatomical structures and the limitations brought about by the privacy and scarcity of medical data. To address these issues, this paper proposes a Self-Supervised Pretrained Transformer (SSPFormer) for MRI images, which effectively learns domain-specific feature representations of medical images by leveraging unlabeled raw imaging data. To tackle the domain gap and data scarcity, we introduce inverse frequency projection masking, which prioritizes the reconstruction of high-frequency anatomical regions to enforce structure-aware representation learning. Simultaneously, to enhance robustness against real-world MRI artifacts, we employ frequency-weighted FFT noise enhancement that injects physiologically realistic noise into the Fourier domain. Together, these strategies enable the model to learn domain-invariant and artifact-robust features directly from raw scans. Through extensive experiments on segmentation, super-resolution, and denoising tasks, the proposed SSPFormer achieves state-of-the-art performance, fully verifying its ability to capture fine-grained MRI image fidelity and adapt to clinical application requirements.
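As a rough illustration of injecting noise in the Fourier domain, the NumPy sketch below adds complex Gaussian noise to a 2D image spectrum, scaled by a radial frequency weight. The function name `fft_noise_augment`, the linear radial weight, and the `strength` parameter are assumptions made for this example; the abstract does not specify SSPFormer's actual weighting or noise model.

```python
import numpy as np

def fft_noise_augment(image, strength=0.05, seed=0):
    """Add frequency-weighted complex Gaussian noise in the Fourier domain.

    Hypothetical sketch: the linear radial weight is an assumption,
    not the scheme used in the paper.
    """
    rng = np.random.default_rng(seed)
    h, w = image.shape
    spectrum = np.fft.fft2(image)
    # Radial frequency of each FFT bin, normalized to [0, 1].
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    radius = np.sqrt(fx**2 + fy**2)
    weight = radius / radius.max()
    noise = rng.standard_normal((h, w)) + 1j * rng.standard_normal((h, w))
    # Scale by the mean spectral magnitude so `strength` is relative.
    scale = strength * np.abs(spectrum).mean()
    noisy = spectrum + scale * weight * noise
    # The perturbed spectrum is no longer Hermitian, so keep the real part.
    return np.real(np.fft.ifft2(noisy))
```

With `strength=0.0` the function returns the input unchanged (up to floating-point error), which makes it easy to check the round trip before enabling the perturbation.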

DCA-LUT: Deep Chromatic Alignment with 5D LUT for Purple Fringing Removal

Nov 15, 2025

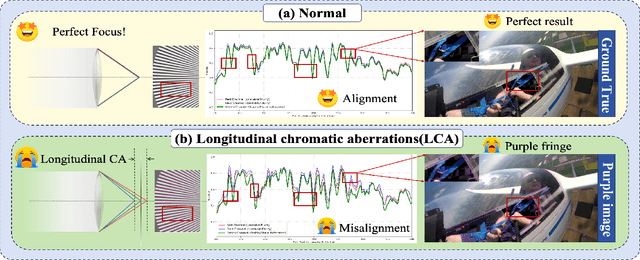

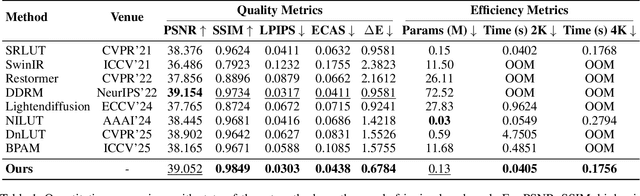

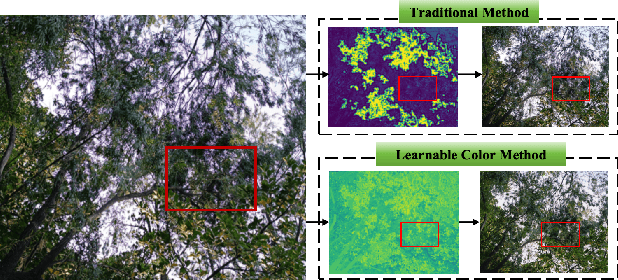

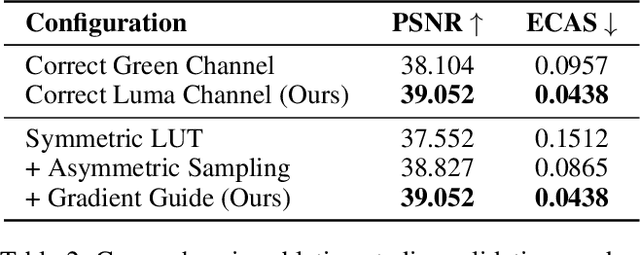

Abstract:Purple fringing, a persistent artifact caused by Longitudinal Chromatic Aberration (LCA) in camera lenses, has long degraded the clarity and realism of digital imaging. Traditional solutions rely on complex and expensive apochromatic (APO) lens hardware and the extraction of handcrafted features, ignoring the data-driven approach. To fill this gap, we introduce DCA-LUT, the first deep learning framework for purple fringing removal. Inspired by the physical root of the problem, the spatial misalignment of RGB color channels due to lens dispersion, we introduce a novel Chromatic-Aware Coordinate Transformation (CA-CT) module, which learns an image-adaptive color space to decouple and isolate fringing into a dedicated dimension. This targeted separation allows the network to learn a precise "purple fringe channel", which then guides the accurate restoration of the luminance channel. The final color correction is performed by a learned 5D Look-Up Table (5D LUT), enabling efficient and powerful non-linear color mapping. To enable robust training and fair evaluation, we constructed a large-scale synthetic purple fringing dataset (PF-Synth). Extensive experiments on synthetic and real-world datasets demonstrate that our method achieves state-of-the-art performance in purple fringing removal.

4KDehazeFlow: Ultra-High-Definition Image Dehazing via Flow Matching

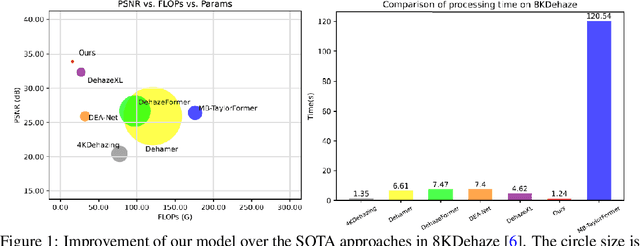

Nov 12, 2025

Abstract:Ultra-High-Definition (UHD) image dehazing faces challenges such as limited scene adaptability in prior-based methods and high computational complexity with color distortion in deep learning approaches. To address these issues, we propose 4KDehazeFlow, a novel method based on Flow Matching and the Haze-Aware vector field. This method models the dehazing process as a progressive optimization of continuous vector field flow, providing efficient data-driven adaptive nonlinear color transformation for high-quality dehazing. Specifically, our method has the following advantages: 1) 4KDehazeFlow is a general method compatible with various deep learning networks, without relying on any specific network architecture. 2) We propose a learnable 3D lookup table (LUT) that encodes haze transformation parameters into a compact 3D mapping matrix, enabling efficient inference through precomputed mappings. 3) We utilize a fourth-order Runge-Kutta (RK4) ordinary differential equation (ODE) solver to stably solve the dehazing flow field through an accurate step-by-step iterative method, effectively suppressing artifacts. Extensive experiments show that 4KDehazeFlow outperforms seven state-of-the-art methods. It delivers a 2 dB PSNR increase and better performance in dense haze and color fidelity.
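The RK4 step mentioned in the abstract can be sketched as a generic integrator for dx/dt = v(x, t) on t in [0, 1]. The vector fields below are toy stand-ins chosen for this example; in the paper the field would be a learned haze-aware network, whose form the abstract does not give. The names `rk4_integrate`, `field`, and `steps` are likewise assumptions.

```python
def rk4_integrate(field, x0, steps=4, t0=0.0, t1=1.0):
    """Integrate dx/dt = field(x, t) from t0 to t1 with classical RK4."""
    x, t = x0, t0
    h = (t1 - t0) / steps
    for _ in range(steps):
        k1 = field(x, t)
        k2 = field(x + 0.5 * h * k1, t + 0.5 * h)
        k3 = field(x + 0.5 * h * k2, t + 0.5 * h)
        k4 = field(x + h * k3, t + h)
        # Weighted average of the four slope estimates.
        x = x + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return x

# Toy example (assumption): a constant velocity field that carries a
# "hazy" intensity of 0.9 to a "clean" intensity of 0.4 over unit time.
hazy, clean = 0.9, 0.4
restored = rk4_integrate(lambda x, t: clean - hazy, hazy)
```

For a constant field any number of steps lands on the target; for curved flows, more steps trade compute for accuracy.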

CAST-LUT: Tokenizer-Guided HSV Look-Up Tables for Purple Flare Removal

Nov 10, 2025

Abstract:Purple flare, a diffuse chromatic aberration artifact commonly found around highlight areas, severely degrades the tone transition and color of the image. Existing traditional methods are based on hand-crafted features, which lack flexibility and rely entirely on fixed priors, while the scarcity of paired training data critically hampers deep learning. To address this issue, we propose a novel network built upon decoupled HSV Look-Up Tables (LUTs). The method aims to simplify color correction by adjusting the Hue (H), Saturation (S), and Value (V) components independently. This approach resolves the inherent color coupling problems in traditional methods. Our model adopts a two-stage architecture: First, a Chroma-Aware Spectral Tokenizer (CAST) converts the input image from RGB space to HSV space and independently encodes the Hue (H) and Value (V) channels into a set of semantic tokens describing the purple flare status; second, the HSV-LUT module takes these tokens as input and dynamically generates independent correction curves (1D-LUTs) for the three channels H, S, and V. To effectively train and validate our model, we built the first large-scale purple flare dataset with diverse scenes. We also proposed new metrics and a loss function specifically designed for this task. Extensive experiments demonstrate that our model not only significantly outperforms existing methods in visual effects but also achieves state-of-the-art performance on all quantitative metrics.
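To make the idea of independent per-channel correction curves concrete, here is a minimal sketch that applies three 1D LUTs to a single pixel in HSV space using Python's standard `colorsys` module. The LUT values are hand-picked for illustration; in the paper they are generated dynamically from tokens, and the function names `apply_lut_1d` and `correct_pixel` are assumptions.

```python
import colorsys

def apply_lut_1d(value, lut):
    """Piecewise-linear lookup of a value in [0, 1] on an evenly spaced LUT."""
    value = min(max(value, 0.0), 1.0)
    pos = value * (len(lut) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(lut) - 1)
    frac = pos - lo
    return (1.0 - frac) * lut[lo] + frac * lut[hi]

def correct_pixel(rgb, lut_h, lut_s, lut_v):
    """Convert RGB to HSV, correct each channel with its own LUT, convert back."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    h = apply_lut_1d(h, lut_h)
    s = apply_lut_1d(s, lut_s)
    v = apply_lut_1d(v, lut_v)
    return colorsys.hsv_to_rgb(h, s, v)

# Illustrative LUTs (assumptions): identity for hue and value,
# and a curve that halves saturation to mute a purple cast.
identity = [0.0, 0.5, 1.0]
half_saturation = [0.0, 0.5]
purple = (0.6, 0.2, 0.8)
muted = correct_pixel(purple, identity, half_saturation, identity)
```

Because each channel has its own curve, changing the saturation LUT leaves hue and value untouched, which is the decoupling the abstract describes.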

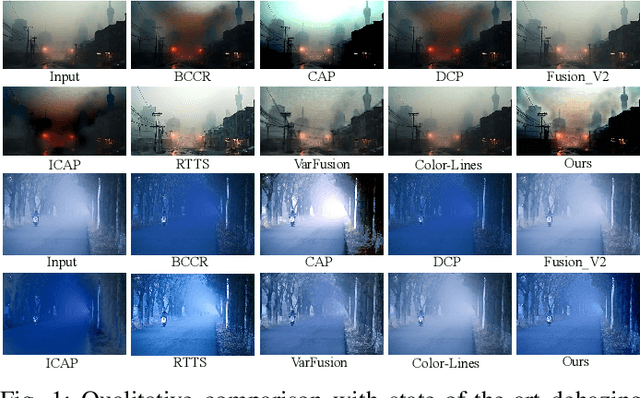

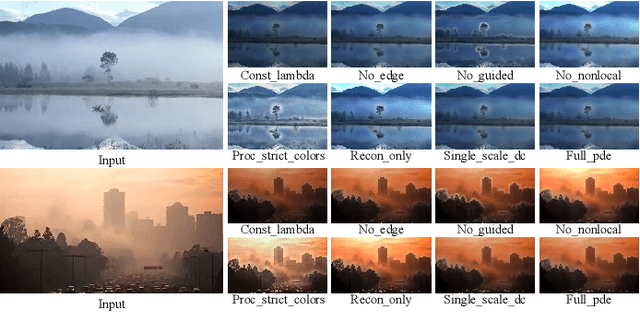

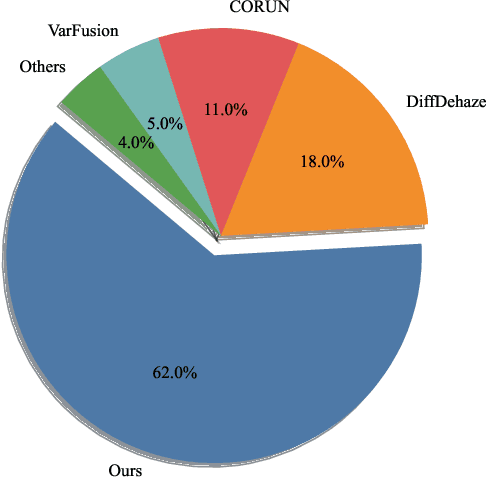

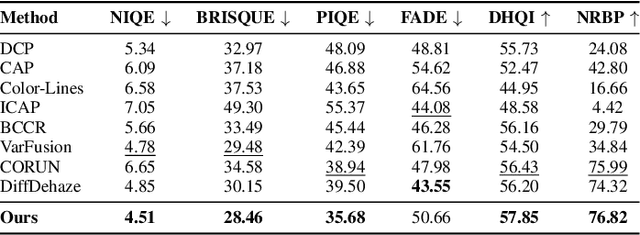

A PDE-Based Image Dehazing Method via Atmospheric Scattering Theory

Jun 10, 2025

Abstract:This paper presents a novel partial differential equation (PDE) framework for single-image dehazing. By integrating the atmospheric scattering model with nonlocal regularization and dark channel prior, we propose the improved PDE: \[ -\text{div}\left(D(\nabla u)\nabla u\right) + \lambda(t) G(u) = \Phi(I,t,A) \] where $D(\nabla u) = (|\nabla u| + \epsilon)^{-1}$ is the edge-preserving diffusion coefficient, $G(u)$ is the Gaussian convolution operator, and $\lambda(t)$ is the adaptive regularization parameter based on transmission map $t$. We prove the existence and uniqueness of weak solutions in $H_0^1(\Omega)$ using the Lax-Milgram theorem, and implement an efficient fixed-point iteration scheme accelerated by PyTorch GPU computation. The experimental results demonstrate that this method is a promising dehazing solution that can be generalized to the deep model paradigm.
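For context on the symbols I, t, and A, the standard atmospheric scattering model writes a hazy pixel as I = J·t + A·(1 − t), where J is the haze-free radiance. The sketch below inverts that model per pixel. It illustrates only the scattering model, not the paper's PDE solver; the function name `recover_radiance` and the clamp `t_min` are assumptions.

```python
def recover_radiance(hazy, transmission, airlight, t_min=0.1):
    """Invert I = J*t + A*(1 - t) per pixel.

    The transmission is clamped at t_min (an assumed safeguard) so that
    very small values do not amplify noise.
    """
    return [
        (i - airlight) / max(t, t_min) + airlight
        for i, t in zip(hazy, transmission)
    ]

# Synthesize hazy pixels from known radiance, then invert the model.
radiance = [0.2, 0.5, 0.9]
transmission = [0.8, 0.5, 0.3]
airlight = 0.95
hazy = [j * t + airlight * (1.0 - t) for j, t in zip(radiance, transmission)]
recovered = recover_radiance(hazy, transmission, airlight)
```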

M2Restore: Mixture-of-Experts-based Mamba-CNN Fusion Framework for All-in-One Image Restoration

Jun 09, 2025

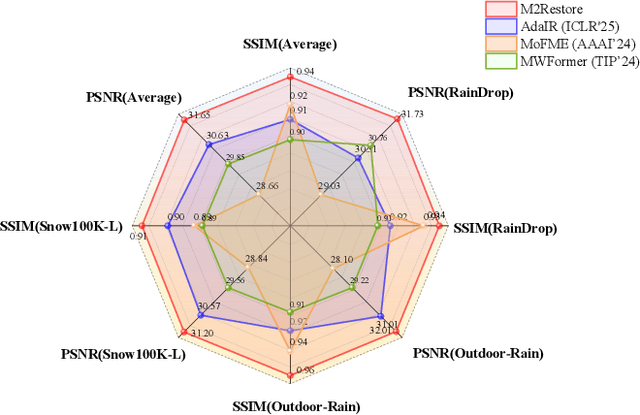

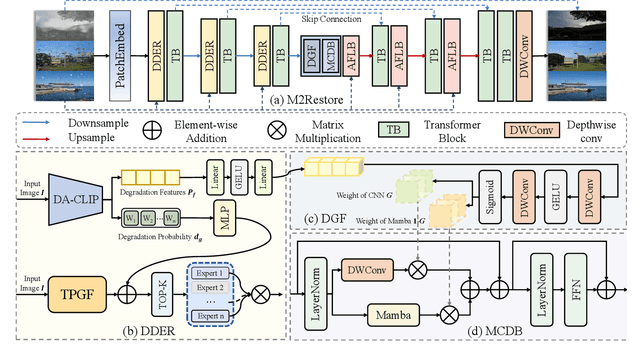

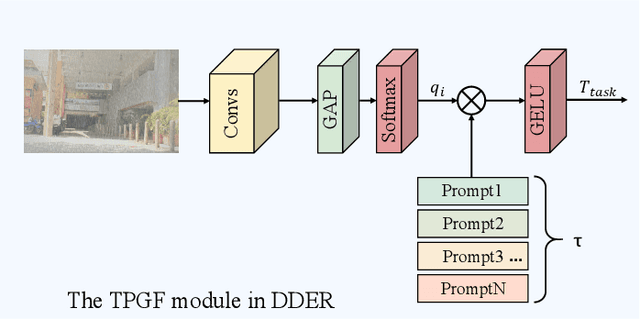

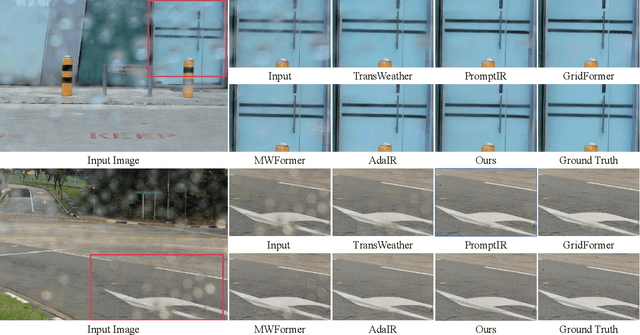

Abstract:Natural images are often degraded by complex, composite degradations such as rain, snow, and haze, which adversely impact downstream vision applications. While existing image restoration efforts have achieved notable success, they are still hindered by two critical challenges: limited generalization across dynamically varying degradation scenarios and a suboptimal balance between preserving local details and modeling global dependencies. To overcome these challenges, we propose M2Restore, a novel Mixture-of-Experts (MoE)-based Mamba-CNN fusion framework for efficient and robust all-in-one image restoration. M2Restore introduces three key contributions: First, to boost the model's generalization across diverse degradation conditions, we exploit a CLIP-guided MoE gating mechanism that fuses task-conditioned prompts with CLIP-derived semantic priors. This mechanism is further refined via cross-modal feature calibration, which enables precise expert selection for various degradation types. Second, to jointly capture global contextual dependencies and fine-grained local details, we design a dual-stream architecture that integrates the localized representational strength of CNNs with the long-range modeling efficiency of Mamba. This integration enables collaborative optimization of global semantic relationships and local structural fidelity, preserving global coherence while enhancing detail restoration. Third, we introduce an edge-aware dynamic gating mechanism that adaptively balances global modeling and local enhancement by reallocating computational attention to degradation-sensitive regions. This targeted focus leads to more efficient and precise restoration. Extensive experiments across multiple image restoration benchmarks validate the superiority of M2Restore in both visual quality and quantitative performance.

Latent label distribution grid representation for modeling uncertainty

May 27, 2025

Abstract:Although Label Distribution Learning (LDL) has promising representation capabilities for characterizing the polysemy of an instance, the complexity and high cost of label distribution annotation lead to inexactness in the construction of the label space. The existence of a large number of inexact labels generates a label space with uncertainty, which misleads the LDL algorithm into incorrect decisions. To alleviate this problem, we model the uncertainty of label distributions by constructing a Latent Label Distribution Grid (LLDG) to form a low-noise representation space. Specifically, we first construct a label correlation matrix based on the differences between labels, and then expand each value of the matrix into a vector that obeys a Gaussian distribution, thus building an LLDG to model the uncertainty of the label space. Finally, the LLDG is reconstructed by the LLDG-Mixer to generate an accurate label distribution. Note that we enforce a customized low-rank scheme on this grid, which assumes that the label relations may be noisy and therefore denoises them with a Tucker reconstruction technique. Furthermore, we attempt to evaluate the effectiveness of the LLDG by considering its generation as an upstream task to achieve the classification of the objects. Extensive experimental results show that our approach performs competitively on several benchmarks.
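The grid construction described above (pairwise label differences, each expanded into a Gaussian-distributed vector) might look roughly like the following. This is a loose sketch: the abstract does not define the correlation measure, the vector length, or the variance, so the plain difference, `depth`, and `sigma` are all assumptions, as is the function name `build_latent_grid`. The LLDG-Mixer and Tucker steps are not shown.

```python
import random

def build_latent_grid(label_dist, depth=8, sigma=0.05, seed=0):
    """Expand pairwise label differences into Gaussian-sampled vectors.

    Returns a nested list of shape (L, L, depth). Hypothetical sketch only.
    """
    rng = random.Random(seed)
    n = len(label_dist)
    # Matrix of pairwise differences between label description degrees.
    corr = [[label_dist[i] - label_dist[j] for j in range(n)] for i in range(n)]
    # Each scalar entry becomes `depth` samples drawn from N(entry, sigma^2).
    return [
        [[rng.gauss(corr[i][j], sigma) for _ in range(depth)] for j in range(n)]
        for i in range(n)
    ]

grid = build_latent_grid([0.5, 0.3, 0.2], depth=4)
```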

UHD Image Dehazing via anDehazeFormer with Atmospheric-aware KV Cache

May 20, 2025

Abstract:In this paper, we propose an efficient visual transformer framework for ultra-high-definition (UHD) image dehazing that addresses the key challenges of slow training speed and high memory consumption in existing methods. Our approach introduces two key innovations: 1) an adaptive normalization mechanism inspired by the nGPT architecture that enables ultra-fast and stable training with a network with a restricted range of parameter expressions; and 2) an atmospheric scattering-aware KV caching mechanism that dynamically optimizes feature preservation based on the physical haze formation model. The proposed architecture improves the training convergence speed by 5× while reducing memory overhead, enabling real-time processing of 50 high-resolution images per second on an RTX4090 GPU. Experimental results show that our approach maintains state-of-the-art dehazing quality while significantly improving computational efficiency for 4K/8K image restoration tasks. Furthermore, we provide a new interpretability method for image dehazing with the help of integrated gradient attribution maps. Our code can be found here: https://anonymous.4open.science/r/anDehazeFormer-632E/README.md.

Distribution-aware Dataset Distillation for Efficient Image Restoration

Apr 21, 2025

Abstract:With the exponential increase in image data, training an image restoration model is laborious. Dataset distillation is a potential solution to this problem, yet current distillation techniques are a blank canvas in the field of image restoration. To fill this gap, we propose the Distribution-aware Dataset Distillation method (TripleD), a new framework that extends the principles of dataset distillation to image restoration. Specifically, TripleD uses a pre-trained vision Transformer to extract features from images for complexity evaluation, and the subset (the number of samples is much smaller than the original training set) is selected based on complexity. The selected subset is then fed through a lightweight CNN that fine-tunes the image distribution to align with the distribution of the original dataset at the feature level. To efficiently condense knowledge, the training is divided into two stages. Early stages focus on simpler, low-complexity samples to build foundational knowledge, while later stages select more complex and uncertain samples as the model matures. Our method achieves promising performance on multiple image restoration tasks, including multi-task image restoration, all-in-one image restoration, and ultra-high-definition image restoration tasks. Note that we can train a state-of-the-art image restoration model on an ultra-high-definition (4K resolution) dataset using only one consumer-grade GPU in less than 8 hours (500 savings in computing resources and immeasurable training time).

AdaQual-Diff: Diffusion-Based Image Restoration via Adaptive Quality Prompting

Apr 17, 2025

Abstract:Restoring images afflicted by complex real-world degradations remains challenging, as conventional methods often fail to adapt to the unique mixture and severity of artifacts present. This stems from a reliance on indirect cues which poorly capture the true perceptual quality deficit. To address this fundamental limitation, we introduce AdaQual-Diff, a diffusion-based framework that integrates perceptual quality assessment directly into the generative restoration process. Our approach establishes a mathematical relationship between regional quality scores from DeQAScore and optimal guidance complexity, implemented through an Adaptive Quality Prompting mechanism. This mechanism systematically modulates prompt structure according to measured degradation severity: regions with lower perceptual quality receive computationally intensive, structurally complex prompts with precise restoration directives, while higher quality regions receive minimal prompts focused on preservation rather than intervention. The technical core of our method lies in the dynamic allocation of computational resources proportional to degradation severity, creating a spatially-varying guidance field that directs the diffusion process with mathematical precision. By combining this quality-guided approach with content-specific conditioning, our framework achieves fine-grained control over regional restoration intensity without requiring additional parameters or inference iterations. Experimental results demonstrate that AdaQual-Diff achieves visually superior restorations across diverse synthetic and real-world datasets.
