Yeying Jin

Erased, But Not Forgotten: Erased Rectified Flow Transformers Still Remain Unsafe Under Concept Attack

Oct 01, 2025

Abstract: Recent advances in text-to-image (T2I) diffusion models have enabled impressive generative capabilities, but they also raise significant safety concerns due to the potential to produce harmful or undesirable content. While concept erasure has been explored as a mitigation strategy, most existing approaches and corresponding attack evaluations are tailored to Stable Diffusion (SD) and exhibit limited effectiveness when transferred to next-generation rectified flow transformers such as Flux. In this work, we present ReFlux, the first concept attack method specifically designed to assess the robustness of concept erasure in the latest rectified flow-based T2I framework. Our approach is motivated by the observation that existing concept erasure techniques, when applied to Flux, fundamentally rely on a phenomenon known as attention localization. Building on this insight, we propose a simple yet effective attack strategy that specifically targets this property. At its core, a reverse-attention optimization strategy is introduced to effectively reactivate suppressed signals while stabilizing attention. This is further reinforced by a velocity-guided dynamic that enhances the robustness of concept reactivation by steering the flow matching process, and a consistency-preserving objective that maintains the global layout and preserves unrelated content. Extensive experiments consistently demonstrate the effectiveness and efficiency of the proposed attack method, establishing a reliable benchmark for evaluating the robustness of concept erasure strategies in rectified flow transformers.

Knowing or Guessing? Robust Medical Visual Question Answering via Joint Consistency and Contrastive Learning

Aug 26, 2025

Abstract: In high-stakes medical applications, consistent answering across diverse question phrasings is essential for reliable diagnosis. However, we reveal that current Medical Vision-Language Models (Med-VLMs) exhibit concerning fragility in Medical Visual Question Answering, as their answers fluctuate significantly when faced with semantically equivalent rephrasings of medical questions. We attribute this to two limitations: (1) insufficient alignment of medical concepts, leading to divergent reasoning patterns, and (2) hidden biases in training data that prioritize syntactic shortcuts over semantic understanding. To address these challenges, we construct RoMed, a dataset built upon original VQA datasets containing 144k questions with variations spanning word-level, sentence-level, and semantic-level perturbations. When evaluating state-of-the-art (SOTA) models like LLaVA-Med on RoMed, we observe alarming performance drops (e.g., a 40% decline in Recall) compared to original VQA benchmarks, exposing critical robustness gaps. To bridge this gap, we propose Consistency and Contrastive Learning (CCL), which integrates two key components: (1) knowledge-anchored consistency learning, aligning Med-VLMs with medical knowledge rather than shallow feature patterns, and (2) bias-aware contrastive learning, mitigating data-specific priors through discriminative representation refinement. CCL achieves SOTA performance on three popular VQA benchmarks and notably improves answer consistency by 50% on the challenging RoMed test set, demonstrating significantly enhanced robustness. Code will be released.
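
To make the notion of answer consistency across rephrasings concrete, here is a minimal sketch of one way such a score could be computed. The abstract does not define the metric used on RoMed, so the function name `consistency_rate`, the exact-match criterion, and the normalization step are all assumptions for illustration only.

```python
def normalize(answer):
    # Assumed normalization: ignore case and surrounding whitespace.
    return answer.strip().lower()


def consistency_rate(answers_per_question):
    """Fraction of questions whose rephrasings all receive the same answer.

    `answers_per_question` is a list of lists; each inner list holds the
    model's answers to semantically equivalent phrasings of one question.
    This is a hypothetical metric, not the one defined by the paper.
    """
    if not answers_per_question:
        return 0.0
    consistent = sum(
        1
        for answers in answers_per_question
        if len({normalize(a) for a in answers}) == 1
    )
    return consistent / len(answers_per_question)
```

For example, a model that answers "yes" and "Yes" to two phrasings of one question but "no" and "yes" to two phrasings of another would be consistent on one of the two questions under this definition.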

HieroAction: Hierarchically Guided VLM for Fine-Grained Action Analysis

Aug 23, 2025

Abstract: Evaluating human actions with clear and detailed feedback is important in areas such as sports, healthcare, and robotics, where decisions rely not only on final outcomes but also on interpretable reasoning. However, most existing methods provide only a final score without explanation or detailed analysis, limiting their practical applicability. To address this, we introduce HieroAction, a vision-language model that delivers accurate and structured assessments of human actions. HieroAction builds on two key ideas: (1) Stepwise Action Reasoning, a tailored chain-of-thought process designed specifically for action assessment, which guides the model to evaluate actions step by step, from overall recognition through sub-action analysis to final scoring, thus enhancing interpretability and structured understanding; and (2) Hierarchical Policy Learning, a reinforcement learning strategy that enables the model to learn fine-grained sub-action dynamics and align them with high-level action quality, thereby improving scoring precision. The reasoning pathway structures the evaluation process, while policy learning refines each stage through reward-based optimization. Their integration ensures accurate and interpretable assessments, as demonstrated by superior performance across multiple benchmark datasets. Code will be released upon acceptance.

Mem4D: Decoupling Static and Dynamic Memory for Dynamic Scene Reconstruction

Aug 12, 2025

Abstract: Reconstructing dense geometry for dynamic scenes from a monocular video is a critical yet challenging task. Recent memory-based methods enable efficient online reconstruction, but they fundamentally suffer from a Memory Demand Dilemma: the memory representation faces an inherent conflict between the long-term stability required for static structures and the rapid, high-fidelity detail retention needed for dynamic motion. This conflict forces existing methods into a compromise, leading to either geometric drift in static structures or blurred, inaccurate reconstructions of dynamic objects. To address this dilemma, we propose Mem4D, a novel framework that decouples the modeling of static geometry and dynamic motion. Guided by this insight, we design a dual-memory architecture: 1) the Transient Dynamics Memory (TDM) focuses on capturing high-frequency motion details from recent frames, enabling accurate and fine-grained modeling of dynamic content; 2) the Persistent Structure Memory (PSM) compresses and preserves long-term spatial information, ensuring global consistency and drift-free reconstruction for static elements. By alternating queries to these specialized memories, Mem4D simultaneously maintains static geometry with global consistency and reconstructs dynamic elements with high fidelity. Experiments on challenging benchmarks demonstrate that our method achieves state-of-the-art or competitive performance while maintaining high efficiency. Code will be publicly available.

Undress to Redress: A Training-Free Framework for Virtual Try-On

Aug 11, 2025

Abstract: Virtual try-on (VTON) is a crucial task for enhancing user experience in online shopping by generating realistic garment previews on personal photos. Although existing methods have achieved impressive results, they struggle with long-sleeve-to-short-sleeve conversions, a common and practical scenario, often producing unrealistic outputs when exposed skin is underrepresented in the original image. We argue that this challenge arises from the "majority" completion rule in current VTON models, which leads to inaccurate skin restoration in such cases. To address this, we propose UR-VTON (Undress-Redress Virtual Try-ON), a novel, training-free framework that can be seamlessly integrated with any existing VTON method. UR-VTON introduces an "undress-to-redress" mechanism: it first reveals the user's torso by virtually "undressing," then applies the target short-sleeve garment, effectively decomposing the conversion into two more manageable steps. Additionally, we incorporate Dynamic Classifier-Free Guidance scheduling to balance diversity and image quality during DDPM sampling, and employ a Structural Refiner to enhance detail fidelity using high-frequency cues. Finally, we present LS-TON, a new benchmark for long-sleeve-to-short-sleeve try-on. Extensive experiments demonstrate that UR-VTON outperforms state-of-the-art methods in both detail preservation and image quality. Code will be released upon acceptance.
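
As a rough illustration of what a time-varying classifier-free guidance scale looks like, the sketch below combines the standard classifier-free guidance rule (unconditional prediction plus a scaled difference toward the conditional prediction) with a per-step scale. The abstract does not describe the schedule UR-VTON actually uses, so the linear schedule, the function names, and the default values `start=7.5` and `end=2.0` are assumptions chosen purely for illustration.

```python
def cfg_combine(eps_uncond, eps_cond, scale):
    """Standard classifier-free guidance on two noise predictions.

    Inputs are plain lists of floats standing in for model outputs.
    scale = 1 returns the conditional prediction unchanged.
    """
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def linear_guidance_scale(step, num_steps, start=7.5, end=2.0):
    """Hypothetical schedule: interpolate the scale linearly over sampling.

    The real schedule in the paper is not given in the abstract; this
    linear ramp is only a placeholder to show the mechanism.
    """
    if num_steps <= 1:
        return start
    frac = step / (num_steps - 1)
    return start + frac * (end - start)


def guided_predictions(uncond_steps, cond_steps):
    """Apply a step-dependent guidance scale to a sequence of predictions."""
    num_steps = len(uncond_steps)
    return [
        cfg_combine(u, c, linear_guidance_scale(i, num_steps))
        for i, (u, c) in enumerate(zip(uncond_steps, cond_steps))
    ]
```

A larger scale pushes samples harder toward the condition at the cost of diversity, which is why varying it across steps is one way to trade the two off.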

Adapting Large VLMs with Iterative and Manual Instructions for Generative Low-light Enhancement

Jul 24, 2025

Abstract: Most existing low-light image enhancement (LLIE) methods rely on pre-trained model priors, low-light inputs, or both, while neglecting the semantic guidance available from normal-light images. This limitation hinders their effectiveness in complex lighting conditions. In this paper, we propose VLM-IMI, a novel framework that leverages large vision-language models (VLMs) with iterative and manual instructions (IMIs) for LLIE. VLM-IMI incorporates textual descriptions of the desired normal-light content as enhancement cues, enabling semantically informed restoration. To effectively integrate cross-modal priors, we introduce an instruction prior fusion module, which dynamically aligns and fuses image and text features, promoting the generation of detailed and semantically coherent outputs. During inference, we adopt an iterative and manual instruction strategy to refine textual instructions, progressively improving visual quality. This refinement enhances structural fidelity, semantic alignment, and the recovery of fine details under extremely low-light conditions. Extensive experiments across diverse scenarios demonstrate that VLM-IMI outperforms state-of-the-art methods in both quantitative metrics and perceptual quality. The source code is available at https://github.com/sunxiaoran01/VLM-IMI.

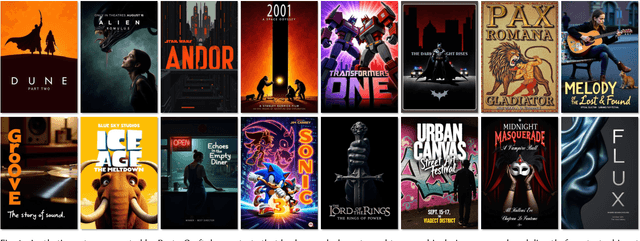

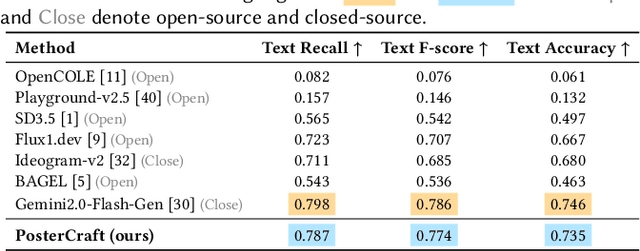

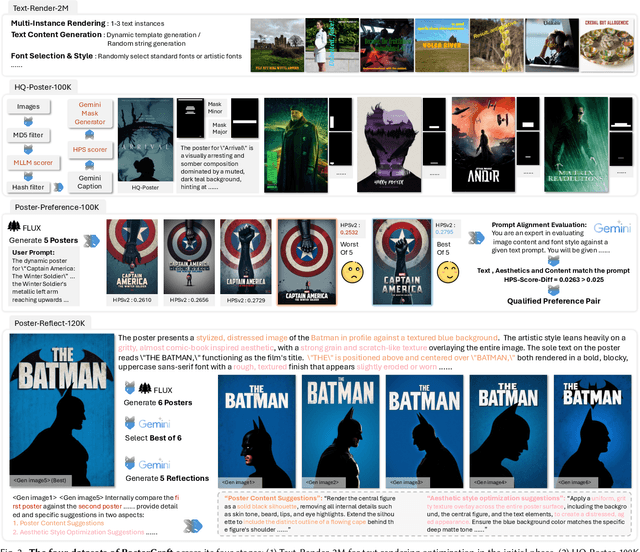

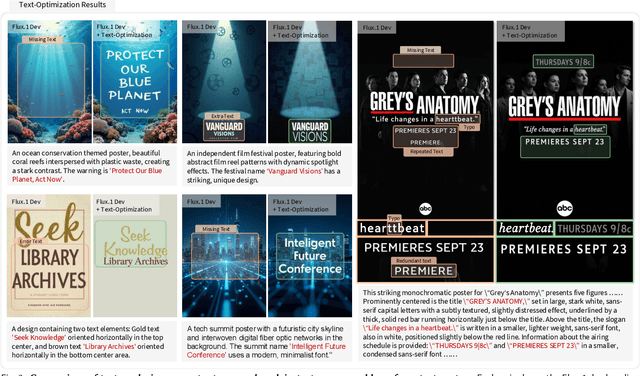

PosterCraft: Rethinking High-Quality Aesthetic Poster Generation in a Unified Framework

Jun 12, 2025

Abstract: Generating aesthetic posters is more challenging than simple design images: it requires not only precise text rendering but also the seamless integration of abstract artistic content, striking layouts, and overall stylistic harmony. To address this, we propose PosterCraft, a unified framework that abandons prior modular pipelines and rigid, predefined layouts, allowing the model to freely explore coherent, visually compelling compositions. PosterCraft employs a carefully designed, cascaded workflow to optimize the generation of high-aesthetic posters: (i) large-scale text-rendering optimization on our newly introduced Text-Render-2M dataset; (ii) region-aware supervised fine-tuning on HQ-Poster100K; (iii) aesthetic-text-reinforcement learning via best-of-n preference optimization; and (iv) joint vision-language feedback refinement. Each stage is supported by a fully automated data-construction pipeline tailored to its specific needs, enabling robust training without complex architectural modifications. Across multiple experiments, PosterCraft significantly outperforms open-source baselines in rendering accuracy, layout coherence, and overall visual appeal, approaching the quality of SOTA commercial systems. Our code, models, and datasets can be found on the project page: https://ephemeral182.github.io/PosterCraft

MMGT: Motion Mask Guided Two-Stage Network for Co-Speech Gesture Video Generation

May 29, 2025

Abstract: Co-Speech Gesture Video Generation aims to generate vivid speech videos from audio-driven still images, which is challenging due to the diversity of different parts of the body in terms of amplitude of motion, audio relevance, and detailed features. Relying solely on audio as the control signal often fails to capture large gesture movements in video, leading to more pronounced artifacts and distortions. Existing approaches typically address this issue by introducing additional a priori information, but this can limit the practical application of the task. To address this, we propose a Motion Mask-Guided Two-Stage Network (MMGT) that uses audio, as well as motion masks and motion features generated from the audio signal, to jointly drive the generation of synchronized speech gesture videos. In the first stage, the Spatial Mask-Guided Audio Pose Generation (SMGA) Network generates high-quality pose videos and motion masks from audio, effectively capturing large movements in key regions such as the face and gestures. In the second stage, we integrate the Motion Masked Hierarchical Audio Attention (MM-HAA) into the Stabilized Diffusion Video Generation model, overcoming limitations in fine-grained motion generation and region-specific detail control found in traditional methods. This guarantees high-quality, detailed upper-body video generation with accurate texture and motion details. Evaluations show improvements in video quality, lip-sync, and gestures. The model and code are available at https://github.com/SIA-IDE/MMGT.

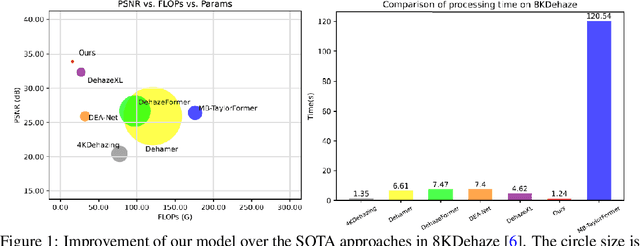

UHD Image Dehazing via anDehazeFormer with Atmospheric-aware KV Cache

May 20, 2025

Abstract: In this paper, we propose an efficient visual transformer framework for ultra-high-definition (UHD) image dehazing that addresses the key challenges of slow training speed and high memory consumption in existing methods. Our approach introduces two key innovations: 1) an adaptive normalization mechanism inspired by the nGPT architecture that enables ultra-fast and stable training of a network with a restricted range of parameter expressions; and 2) an atmospheric scattering-aware KV caching mechanism that dynamically optimizes feature preservation based on the physical haze formation model. The proposed architecture improves the training convergence speed by 5× while reducing memory overhead, enabling real-time processing of 50 high-resolution images per second on an RTX4090 GPU. Experimental results show that our approach maintains state-of-the-art dehazing quality while significantly improving computational efficiency for 4K/8K image restoration tasks. Furthermore, we provide a new interpretability method for image dehazing based on integrated gradient attribution maps. Our code can be found here: https://anonymous.4open.science/r/anDehazeFormer-632E/README.md.
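
For readers unfamiliar with the physical haze formation model mentioned above, the sketch below shows the widely used atmospheric scattering model, I = J·t + A·(1 − t) with transmission t = exp(−β·d), applied to a single pixel intensity. This is the textbook model only; how the paper's KV caching mechanism uses it is not described in the abstract, and the function names and the `t_min` clamp are assumptions made for this example.

```python
import math


def transmission(depth, beta):
    """Transmission t = exp(-beta * depth) for scattering coefficient beta."""
    return math.exp(-beta * depth)


def add_haze(scene, t, airlight):
    """Forward model: hazy intensity I = J * t + A * (1 - t)."""
    return scene * t + airlight * (1.0 - t)


def recover_scene(hazy, t, airlight, t_min=0.1):
    """Invert the model: J = (I - A) / t + A.

    Clamping t from below (an assumed safeguard) avoids dividing by
    near-zero transmission in very dense haze.
    """
    t = max(t, t_min)
    return (hazy - airlight) / t + airlight


# Round trip on one pixel: haze a scene value, then recover it.
t = transmission(depth=2.0, beta=0.5)
hazy = add_haze(scene=0.2, t=t, airlight=1.0)
restored = recover_scene(hazy, t, airlight=1.0)
```

When the transmission and airlight are known exactly and t exceeds the clamp, the inversion recovers the original scene value up to floating-point error; practical dehazing has to estimate both quantities from the hazy image.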

Black-box Adversaries from Latent Space: Unnoticeable Attacks on Human Pose and Shape Estimation

May 17, 2025

Abstract: Expressive human pose and shape (EHPS) estimation is vital for digital human generation, particularly in live-streaming applications. However, most existing EHPS models focus primarily on minimizing estimation errors, with limited attention to potential security vulnerabilities. Current adversarial attacks on EHPS models often require white-box access (e.g., model details or gradients) or generate visually conspicuous perturbations, limiting their practicality and ability to expose real-world security threats. To address these limitations, we propose a novel Unnoticeable Black-Box Attack (UBA) against EHPS models. UBA leverages the latent-space representations of natural images to generate an optimal adversarial noise pattern and iteratively refines its attack potency along an optimized direction in digital space. Crucially, this process relies solely on querying the model's output, requiring no internal knowledge of the EHPS architecture, while guiding the noise optimization toward greater stealth and effectiveness. Extensive experiments and visual analyses demonstrate the superiority of UBA. Notably, UBA increases the pose estimation errors of EHPS models by 17.27%-58.21% on average, revealing critical vulnerabilities. These findings underscore the urgent need to address and mitigate security risks associated with digital human generation systems.
