Weibin Chen

Unveiling Hidden Vulnerabilities in Digital Human Generation via Adversarial Attacks

Apr 24, 2025

Abstract: Expressive human pose and shape estimation (EHPS) is crucial for digital human generation, especially in applications like live streaming. While existing research primarily focuses on reducing estimation errors, it largely neglects robustness and security aspects, leaving these systems vulnerable to adversarial attacks. To address this significant challenge, we propose the Tangible Attack (TBA), a novel framework designed to generate adversarial examples capable of effectively compromising any digital human generation model. Our approach introduces a Dual Heterogeneous Noise Generator (DHNG), which leverages Variational Autoencoders (VAE) and ControlNet to produce diverse, targeted noise tailored to the original image features. Additionally, we design a custom adversarial loss function to optimize the noise, ensuring both high controllability and potent disruption. By iteratively refining the adversarial sample through multi-gradient signals from both the noise and the state-of-the-art EHPS model, TBA substantially improves the effectiveness of adversarial attacks. Extensive experiments demonstrate TBA's superiority, achieving a remarkable 41.0% increase in estimation error, with an average improvement of approximately 17.0%. These findings expose significant security vulnerabilities in current EHPS models and highlight the need for stronger defenses in digital human generation systems.

Deep Random Features for Scalable Interpolation of Spatiotemporal Data

Dec 16, 2024

Abstract: The rapid growth of earth observation systems calls for a scalable approach to interpolating remote-sensing observations. Such methods should, in principle, acquire more information about the observed field as data grows. Gaussian processes (GPs) are candidate model choices for interpolation. However, due to their poor scalability, they usually rely on inducing points for inference, which restricts their expressivity. Moreover, commonly imposed assumptions such as stationarity prevent them from capturing complex patterns in the data. While deep GPs can overcome this issue, training them and performing inference with them are difficult, again requiring crude approximations via inducing points. In this work, we instead approach the problem through Bayesian deep learning, where spatiotemporal fields are represented by deep neural networks whose layers share the inductive bias of stationary GPs on the plane/sphere via random feature expansions. This allows one to (1) capture high-frequency patterns in the data, and (2) use mini-batched gradient descent for large-scale training. We experiment on various remote sensing data at local/global scales, showing that our approach produces competitive or superior results to existing methods, with well-calibrated uncertainties.
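As a rough illustration of the random feature expansions mentioned in the abstract above, the sketch below uses standard random Fourier features to approximate a stationary RBF kernel on the plane. It is not the paper's deep architecture; the function names, the choice of an RBF kernel, the lengthscale, and the feature count are all assumptions made for this example.

```python
import numpy as np

def random_fourier_features(x, num_features, lengthscale, rng):
    """Map inputs x of shape (n, d) to random features of shape (n, num_features).

    Inner products of these features approximate an RBF kernel
    (an assumed kernel choice for this sketch).
    """
    d = x.shape[1]
    # The spectral density of the RBF kernel is Gaussian with std 1 / lengthscale.
    omega = rng.normal(scale=1.0 / lengthscale, size=(d, num_features))
    # Random phases make a single cosine per frequency sufficient.
    phase = rng.uniform(0.0, 2.0 * np.pi, size=num_features)
    return np.sqrt(2.0 / num_features) * np.cos(x @ omega + phase)

def rbf_kernel(x, y, lengthscale):
    """Exact RBF kernel matrix between rows of x and rows of y."""
    sq_dist = np.sum((x[:, None, :] - y[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq_dist / lengthscale**2)

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(5, 2))      # five points on the plane
phi = random_fourier_features(x, 20000, 0.7, rng)
approx = phi @ phi.T                          # feature-space inner products
exact = rbf_kernel(x, x, 0.7)
max_abs_error = np.max(np.abs(approx - exact))
```

Because the model becomes linear in the features `phi`, it can be fitted with mini-batched gradient descent rather than operations on a full kernel matrix, which is the scalability argument the abstract makes.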

Efficient Model Compression Techniques with FishLeg

Dec 03, 2024

Abstract: In many domains, the most successful AI models tend to be the largest, indeed often too large to be handled by AI players with limited computational resources. To mitigate this, a number of compression methods have been developed, including methods that prune the network down to high sparsity whilst retaining performance. The best-performing pruning techniques are often those that use second-order curvature information (such as an estimate of the Fisher information matrix, FIM) to score the importance of each weight and to predict the optimal compensation for weight deletion. However, these methods are difficult to scale to high-dimensional parameter spaces without making heavy approximations. Here, we propose the FishLeg surgeon (FLS), a new second-order pruning method based on the Fisher-Legendre (FishLeg) optimizer. At the heart of FishLeg is a meta-learning approach to amortising the action of the inverse FIM, which brings a number of advantages. Firstly, the parameterisation enables the use of flexible tensor factorisation techniques to improve computational and memory efficiency without sacrificing much accuracy, alleviating challenges associated with the scalability of most second-order pruning methods. Secondly, directly estimating the inverse FIM leads to less sensitivity to the amplification of stochasticity during inversion, thereby resulting in more precise estimates. Thirdly, our approach also allows for progressive assimilation of the curvature into the parameterisation. In the gradual pruning regime, this results in a more efficient estimate refinement as opposed to re-estimation. We find that FishLeg achieves higher or comparable performance compared with two common baselines in the area, most notably in the high sparsity regime when considering a ResNet18 model on CIFAR-10 (84% accuracy at 95% sparsity vs 60% for OBS) and TinyIM (53% accuracy at 80% sparsity vs 48% for OBS).
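To make concrete what "score the importance of each weight and predict the optimal compensation for weight deletion" means, here is a minimal sketch of one step of the classic Optimal Brain Surgeon (OBS) baseline named in the abstract above. It is not the FishLeg surgeon: it inverts a small curvature matrix explicitly, whereas the abstract describes amortising the action of the inverse FIM. The function name and the toy 4-parameter problem are assumptions for illustration.

```python
import numpy as np

def obs_prune_one(weights, inv_curvature):
    """Remove the least important weight and compensate the remaining ones.

    weights:       1-D parameter vector.
    inv_curvature: inverse of the curvature matrix (Hessian or Fisher estimate).
    Returns the updated weights, the pruned index, and the predicted loss increase.
    """
    diag = np.diag(inv_curvature)
    # OBS saliency: predicted increase of the quadratic loss if weight q is removed.
    saliency = weights**2 / (2.0 * diag)
    q = int(np.argmin(saliency))
    # Compensation applied to all weights so the quadratic loss rises as little as possible.
    delta = -(weights[q] / diag[q]) * inv_curvature[:, q]
    pruned = weights + delta
    pruned[q] = 0.0  # exact zero, removing floating-point round-off
    return pruned, q, saliency[q]

rng = np.random.default_rng(0)
a = rng.normal(size=(4, 4))
curvature = a @ a.T + 0.1 * np.eye(4)   # toy positive-definite curvature matrix
weights = rng.normal(size=4)
pruned, q, loss_increase = obs_prune_one(weights, np.linalg.inv(curvature))
```

The explicit `np.linalg.inv` call is exactly what becomes infeasible in high-dimensional parameter spaces, which is the scaling problem the abstract says second-order pruning methods face.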

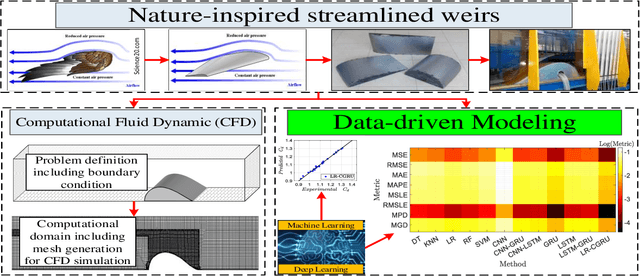

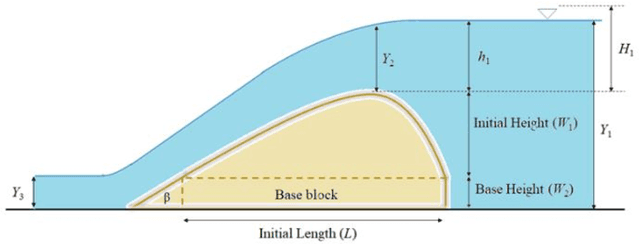

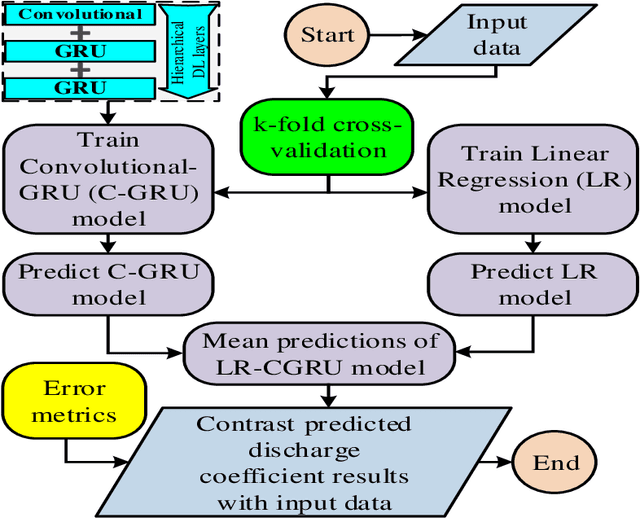

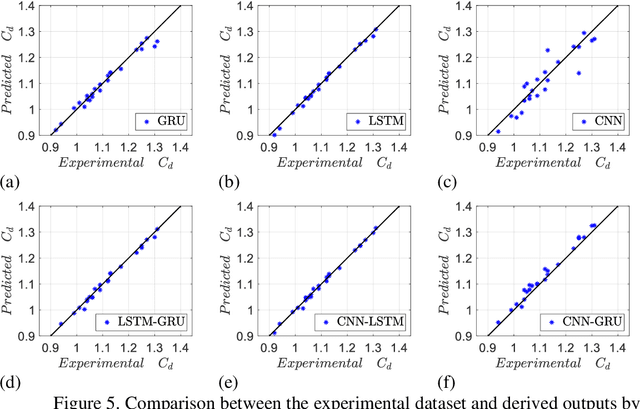

Accurate Discharge Coefficient Prediction of Streamlined Weirs by Coupling Linear Regression and Deep Convolutional Gated Recurrent Unit

Apr 12, 2022

Abstract: Streamlined weirs, which are a nature-inspired type of weir, have gained tremendous attention among hydraulic engineers, mainly owing to their established performance with high discharge coefficients. Computational fluid dynamics (CFD) is considered a robust tool to predict the discharge coefficient. To bypass the computational cost of CFD-based assessment, the present study proposes data-driven modeling techniques, as an alternative to CFD simulation, to predict the discharge coefficient based on an experimental dataset. To this end, after splitting the dataset using a k-fold cross-validation technique, the performance assessment of classical and hybrid machine learning and deep learning (ML-DL) algorithms is undertaken. Among ML techniques, linear regression (LR), random forest (RF), support vector machine (SVM), k-nearest neighbor (KNN), and decision tree (DT) algorithms are studied. In the context of DL, long short-term memory (LSTM), convolutional neural network (CNN), and gated recurrent unit (GRU) techniques, and their hybrid forms such as LSTM-GRU, CNN-LSTM, and CNN-GRU, are compared using different error metrics. It is found that the proposed three-layer hierarchical DL algorithm, consisting of a convolutional layer coupled with two subsequent GRU levels and hybridized with the LR method, leads to lower error metrics. This paper paves the way for data-driven modeling of streamlined weirs.

* 28 pages, 7 figures
