Jie Gui

Multimodal Skeleton-Based Action Representation Learning via Decomposition and Composition

Dec 24, 2025

Abstract:Multimodal human action understanding is a significant problem in computer vision, with the central challenge being the effective utilization of the complementarity among diverse modalities while maintaining model efficiency. However, most existing methods rely on simple late fusion to enhance performance, which results in substantial computational overhead. Although early fusion with a shared backbone for all modalities is efficient, it struggles to achieve excellent performance. To address the dilemma of balancing efficiency and effectiveness, we introduce a self-supervised multimodal skeleton-based action representation learning framework, named Decomposition and Composition. The Decomposition strategy meticulously decomposes the fused multimodal features into distinct unimodal features, subsequently aligning them with their respective ground truth unimodal counterparts. On the other hand, the Composition strategy integrates multiple unimodal features, leveraging them as self-supervised guidance to enhance the learning of multimodal representations. Extensive experiments on the NTU RGB+D 60, NTU RGB+D 120, and PKU-MMD II datasets demonstrate that the proposed method strikes an excellent balance between computational cost and model performance.

Diversifying Counterattacks: Orthogonal Exploration for Robust CLIP Inference

Nov 12, 2025

Abstract:Vision-language pre-training models (VLPs) demonstrate strong multimodal understanding and zero-shot generalization, yet remain vulnerable to adversarial examples, raising concerns about their reliability. Recent work, Test-Time Counterattack (TTC), improves robustness by generating perturbations that maximize the embedding deviation of adversarial inputs using PGD, pushing them away from their adversarial representations. However, due to the fundamental difference in optimization objectives between adversarial attacks and counterattacks, generating counterattacks solely based on gradients with respect to the adversarial input confines the search to a narrow space. As a result, the counterattacks could overfit limited adversarial patterns and lack the diversity to fully neutralize a broad range of perturbations. In this work, we argue that enhancing the diversity and coverage of counterattacks is crucial to improving adversarial robustness in test-time defense. Accordingly, we propose Directional Orthogonal Counterattack (DOC), which augments counterattack optimization by incorporating orthogonal gradient directions and momentum-based updates. This design expands the exploration of the counterattack space and increases the diversity of perturbations, which facilitates the discovery of more generalizable counterattacks and ultimately improves the ability to neutralize adversarial perturbations. Meanwhile, we present a directional sensitivity score based on averaged cosine similarity to boost DOC by improving example discrimination and adaptively modulating the counterattack strength. Extensive experiments on 16 datasets demonstrate that DOC improves adversarial robustness under various attacks while maintaining competitive clean accuracy. Code is available at https://github.com/bookman233/DOC.
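As a geometric illustration of the two building blocks the abstract names (an orthogonal direction relative to a gradient, and cosine similarity), a minimal sketch follows. It is not the DOC algorithm itself, whose details are in the linked repository; it only shows a Gram-Schmidt-style projection on plain Python lists. All function names and the toy vectors are assumptions made for this example.

```python
import math
import random


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def cosine_similarity(u, v):
    """Cosine of the angle between u and v (0.0 if either is the zero vector)."""
    norm_u = math.sqrt(dot(u, u))
    norm_v = math.sqrt(dot(v, v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot(u, v) / (norm_u * norm_v)


def orthogonal_component(direction, grad):
    """Remove from `direction` its projection onto `grad`.

    The result is orthogonal to `grad`, so stepping along it explores
    a direction the gradient alone would not reach.
    """
    grad_sq = dot(grad, grad)
    if grad_sq == 0.0:
        return list(direction)
    scale = dot(direction, grad) / grad_sq
    return [d - scale * g for d, g in zip(direction, grad)]


# Toy stand-ins for a gradient and a random exploration direction (hypothetical data).
rng = random.Random(0)
grad = [rng.gauss(0.0, 1.0) for _ in range(8)]
rand_dir = [rng.gauss(0.0, 1.0) for _ in range(8)]
ortho = orthogonal_component(rand_dir, grad)
```

Here `cosine_similarity(ortho, grad)` is zero up to floating-point error, which is the property that makes the extra direction complementary to the gradient.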

LOTA: Bit-Planes Guided AI-Generated Image Detection

Oct 16, 2025

Abstract:The rapid advancement of GAN and Diffusion models makes it more difficult to distinguish AI-generated images from real ones. Recent studies often use image-based reconstruction errors as an important feature for determining whether an image is AI-generated. However, these approaches typically incur high computational costs and also fail to capture intrinsic noisy features present in the raw images. To solve these problems, we innovatively refine error extraction by using bit-plane-based image processing, as lower bit planes indeed represent noise patterns in images. We introduce an effective bit-planes guided noisy image generation and exploit various image normalization strategies, including scaling and thresholding. Then, to amplify the noise signal for easier AI-generated image detection, we design a maximum gradient patch selection that applies multi-directional gradients to compute the noise score and selects the region with the highest score. Finally, we propose a lightweight and effective classification head and explore two different structures: noise-based classifier and noise-guided classifier. Extensive experiments on the GenImage benchmark demonstrate the outstanding performance of our method, which achieves an average accuracy of 98.9% (11.9% ↑) and shows excellent cross-generator generalization capability. Particularly, our method achieves an accuracy of over 98.2% from GAN to Diffusion and over 99.2% from Diffusion to GAN. Moreover, it performs error extraction at the millisecond level, nearly a hundred times faster than existing methods. The code is at https://github.com/hongsong-wang/LOTA.
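To make the bit-plane idea concrete, here is a minimal sketch of extracting a single bit plane and of building a "noise image" from the lowest planes of an 8-bit grayscale image. It is an illustration under assumptions, not the LOTA pipeline: the function names are hypothetical, and the linear rescaling to 0..255 is only one possible scaling choice, since the abstract does not specify the normalization details.

```python
def bit_plane(image, k):
    """Return bit plane k (0 = least significant) of an 8-bit grayscale image.

    `image` is a list of rows of integers in 0..255; the result holds 0/1 values.
    """
    if not 0 <= k <= 7:
        raise ValueError("k must be in 0..7 for 8-bit pixels")
    return [[(px >> k) & 1 for px in row] for row in image]


def low_bit_image(image, num_planes):
    """Keep only the lowest `num_planes` bits and rescale them to 0..255.

    The rescaling is an assumed normalization chosen for this sketch.
    """
    if not 1 <= num_planes <= 8:
        raise ValueError("num_planes must be in 1..8")
    mask = (1 << num_planes) - 1
    return [[(px & mask) * 255 // mask for px in row] for row in image]


# Tiny hypothetical 2x2 image.
image = [[200, 201], [13, 255]]
lsb = bit_plane(image, 0)         # least significant plane
msb = bit_plane(image, 7)         # most significant plane
noise = low_bit_image(image, 2)   # lowest two planes, rescaled
```

The high planes carry the visible structure of the image, while the low planes look noise-like, which is why only a few bit operations per pixel are needed to isolate them.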

Structure-Aware Automatic Channel Pruning by Searching with Graph Embedding

Jun 13, 2025

Abstract:Channel pruning is a powerful technique to reduce the computational overhead of deep neural networks, enabling efficient deployment on resource-constrained devices. However, existing pruning methods often rely on local heuristics or weight-based criteria that fail to capture global structural dependencies within the network, leading to suboptimal pruning decisions and degraded model performance. To address these limitations, we propose a novel structure-aware automatic channel pruning (SACP) framework that utilizes graph convolutional networks (GCNs) to model the network topology and learn the global importance of each channel. By encoding structural relationships within the network, our approach performs topology-aware pruning that is fully automated, reducing the need for human intervention. We restrict the pruning rate combinations to a specific space, where the number of combinations can be dynamically adjusted, and use a search-based approach to determine the optimal pruning rate combinations. Extensive experiments on benchmark datasets (CIFAR-10, ImageNet) with various models (ResNet, VGG16) demonstrate that SACP outperforms state-of-the-art pruning methods in compression efficiency and is competitive in accuracy retention.

A Survey on Small Sample Imbalance Problem: Metrics, Feature Analysis, and Solutions

Apr 21, 2025

Abstract:The small sample imbalance (S&I) problem is a major challenge in machine learning and data analysis. It is characterized by a small number of samples and an imbalanced class distribution, which leads to poor model performance. In addition, indistinct inter-class feature distributions further complicate classification tasks. Existing methods often rely on algorithmic heuristics without sufficiently analyzing the underlying data characteristics. We argue that a detailed analysis from the data perspective is essential before developing an appropriate solution. Therefore, this paper proposes a systematic analytical framework for the S&I problem. We first summarize imbalance metrics and complexity analysis methods, highlighting the need for interpretable benchmarks to characterize S&I problems. Second, we review recent solutions for conventional, complexity-based, and extreme S&I problems, revealing methodological differences in handling various data distributions. Our summary finds that resampling remains a widely adopted solution. However, we conduct experiments on binary and multiclass datasets, revealing that classifier performance differences significantly exceed the improvements achieved through resampling. Finally, this paper highlights open questions and discusses future trends.
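As a small example of what an imbalance metric looks like, the sketch below computes the imbalance ratio, i.e. the size of the largest class divided by the size of the smallest. This is one commonly used measure, offered here as an assumption; the abstract does not list which metrics the survey covers. The function name and the toy labels are hypothetical.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Largest class count divided by smallest class count.

    A value of 1.0 means perfectly balanced classes; larger values
    mean stronger imbalance. Requires at least two distinct classes.
    """
    counts = Counter(labels)
    if len(counts) < 2:
        raise ValueError("need at least two classes")
    return max(counts.values()) / min(counts.values())


# Hypothetical small, imbalanced binary dataset: 18 negatives, 2 positives.
labels = ["neg"] * 18 + ["pos"] * 2
ratio = imbalance_ratio(labels)
```

With only 20 samples and two of them positive, this toy dataset is both small and imbalanced, which is the combination the abstract describes.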

ColorVein: Colorful Cancelable Vein Biometrics

Apr 19, 2025

Abstract:Vein recognition technologies have become one of the primary solutions for high-security identification systems. However, the issue of biometric information leakage can still pose a serious threat to user privacy and anonymity. Currently, there is no cancelable biometric template generation scheme specifically designed for vein biometrics. Therefore, this paper proposes an innovative cancelable vein biometric generation scheme: ColorVein. Unlike previous cancelable template generation schemes, ColorVein does not destroy the original biometric features and introduces additional color information to grayscale vein images. This method significantly enhances the information density of vein images by transforming static grayscale information into dynamically controllable color representations through interactive colorization. ColorVein allows users/administrators to define a controllable pseudo-random color space for grayscale vein images by editing the position, number, and color of hint points, thereby generating protected cancelable templates. Additionally, we propose a new secure center loss to optimize the training process of the protected feature extraction model, effectively increasing the feature distance between enrolled users and any potential impostors. Finally, we evaluate ColorVein's performance on all types of vein biometrics, including recognition performance, unlinkability, irreversibility, and revocability, and conduct security and privacy analyses. ColorVein achieves competitive performance compared with state-of-the-art methods.

Survey of Adversarial Robustness in Multimodal Large Language Models

Mar 18, 2025

Abstract:Multimodal Large Language Models (MLLMs) have demonstrated exceptional performance in artificial intelligence by facilitating integrated understanding across diverse modalities, including text, images, video, audio, and speech. However, their deployment in real-world applications raises significant concerns about adversarial vulnerabilities that could compromise their safety and reliability. Unlike unimodal models, MLLMs face unique challenges due to the interdependencies among modalities, making them susceptible to modality-specific threats and cross-modal adversarial manipulations. This paper reviews the adversarial robustness of MLLMs, covering different modalities. We begin with an overview of MLLMs and a taxonomy of adversarial attacks tailored to each modality. Next, we review key datasets and evaluation metrics used to assess the robustness of MLLMs. After that, we provide an in-depth review of attacks targeting MLLMs across different modalities. Our survey also identifies critical challenges and suggests promising future research directions.

External Reliable Information-enhanced Multimodal Contrastive Learning for Fake News Detection

Mar 05, 2025

Abstract:With the rapid development of the Internet, the paradigm of information dissemination has changed and its efficiency has improved greatly. However, this has also enabled the rapid spread of fake news, with negative impacts on cyberspace. Meanwhile, information presentation formats have gradually evolved, with news shifting from text to multimodal content. As a result, detecting multimodal fake news has become a research hotspot. However, the field of multimodal fake news detection still faces two main challenges: the inability to fully and effectively utilize multimodal information for detection, and the low credibility or static nature of the introduced external information, which limits dynamic updates. To bridge these gaps, we propose ERIC-FND, an external reliable information-enhanced multimodal contrastive learning framework for fake news detection. ERIC-FND strengthens the representation of news content through an entity-enriched external information enhancement method. It also enriches the multimodal news information through a multimodal semantic interaction method, in which multimodal contrastive learning is employed so that the representations of different modalities learn from each other. Moreover, an adaptive fusion method integrates the news representations from different dimensions for the final classification. Experiments are conducted on two commonly used datasets in different languages, X (Twitter) and Weibo. The results demonstrate that ERIC-FND outperforms existing state-of-the-art fake news detection methods under the same settings.

Training-Free Zero-Shot Temporal Action Detection with Vision-Language Models

Jan 23, 2025

Abstract:Existing zero-shot temporal action detection (ZSTAD) methods predominantly use fully supervised or unsupervised strategies to recognize unseen activities. However, these training-based methods are prone to domain shifts and require high computational costs, which hinder their practical applicability in real-world scenarios. In this paper, unlike previous works, we propose a training-Free Zero-shot temporal Action Detection (FreeZAD) method, leveraging existing vision-language (ViL) models to directly classify and localize unseen activities within untrimmed videos without any additional fine-tuning or adaptation. We mitigate the need for explicit temporal modeling and reliance on pseudo-label quality by designing the LOGarithmic decay weighted Outer-Inner-Contrastive Score (LogOIC) and frequency-based Actionness Calibration. Furthermore, we introduce a test-time adaptation (TTA) strategy using Prototype-Centric Sampling (PCS) to expand FreeZAD, enabling ViL models to adapt more effectively for ZSTAD. Extensive experiments on the THUMOS14 and ActivityNet-1.3 datasets demonstrate that our training-free method outperforms state-of-the-art unsupervised methods while requiring only 1/13 of the runtime. When equipped with TTA, the enhanced method further narrows the gap with fully supervised methods.

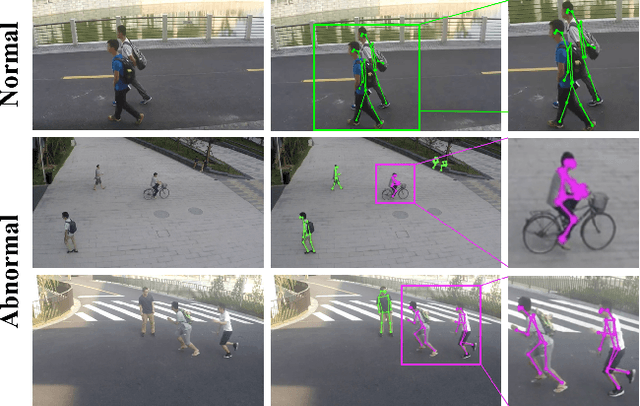

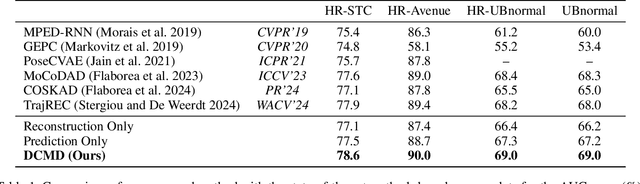

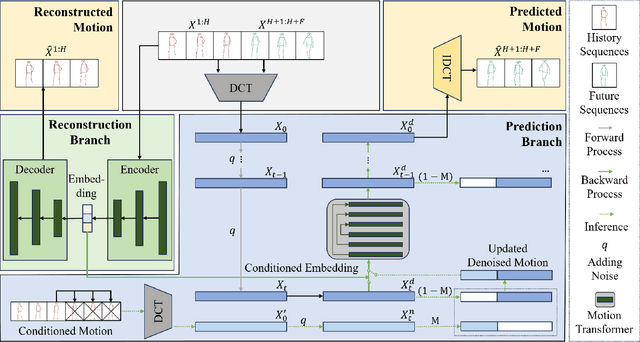

Dual Conditioned Motion Diffusion for Pose-Based Video Anomaly Detection

Dec 23, 2024

Abstract:Video Anomaly Detection (VAD) is essential for computer vision research. Existing VAD methods utilize either reconstruction-based or prediction-based frameworks. The former excels at detecting irregular patterns or structures, whereas the latter is capable of spotting abnormal deviations or trends. We address pose-based video anomaly detection and introduce a novel framework called Dual Conditioned Motion Diffusion (DCMD), which enjoys the advantages of both approaches. The DCMD integrates conditioned motion and conditioned embedding to comprehensively utilize the pose characteristics and latent semantics of observed movements, respectively. In the reverse diffusion process, a motion transformer is proposed to capture potential correlations from multi-layered characteristics within the spectrum space of human motion. To enhance the discriminability between normal and abnormal instances, we design a novel United Association Discrepancy (UAD) regularization that primarily relies on a Gaussian kernel-based time association and a self-attention-based global association. Finally, a mask completion strategy is introduced during the inference stage of the reverse diffusion process to enhance the utilization of conditioned motion for the prediction branch of anomaly detection. Extensive experiments on four datasets demonstrate that our method dramatically outperforms state-of-the-art methods and exhibits superior generalization performance.
