Yanwei Yu

Enhancing Underwater Images via Adaptive Semantic-aware Codebook Learning

Feb 11, 2026

Abstract: Underwater Image Enhancement (UIE) is an ill-posed problem where natural clean references are not available, and the degradation levels vary significantly across semantic regions. Existing UIE methods treat images with a single global model and ignore the inconsistent degradation of different scene components. This oversight leads to significant color distortions and loss of fine details in heterogeneous underwater scenes, especially where degradation varies significantly across different image regions. Therefore, we propose SUCode (Semantic-aware Underwater Codebook Network), which achieves adaptive UIE through a semantic-aware discrete codebook representation. Compared with one-shot codebook-based methods, SUCode exploits a semantic-aware, pixel-level codebook representation tailored to heterogeneous underwater degradation. A three-stage training paradigm is employed to represent raw underwater image features while avoiding pseudo ground-truth contamination. A Gated Channel Attention Module (GCAM) and Frequency-Aware Feature Fusion (FAFF) jointly integrate channel and frequency cues for faithful color restoration and texture recovery. Extensive experiments on multiple benchmarks demonstrate that SUCode achieves state-of-the-art performance, outperforming recent UIE methods on both reference and no-reference metrics. The code will be made publicly available at https://github.com/oucailab/SUCode.

ShapeCond: Fast Shapelet-Guided Dataset Condensation for Time Series Classification

Feb 09, 2026

Abstract: Time series data supports many domains (e.g., finance and climate science), but its rapid growth strains storage and computation. Dataset condensation can alleviate this by synthesizing a compact training set that preserves key information. Yet most condensation methods are image-centric and often fail on time series because they miss time-series-specific temporal structure, especially local discriminative motifs such as shapelets. In this work, we propose ShapeCond, a novel and efficient condensation framework for time series classification that leverages shapelet-based dataset knowledge via a shapelet-guided optimization strategy. Our shapelet-assisted synthesis cost is independent of sequence length: longer series yield larger speedups in synthesis (e.g., 29$\times$ faster than CondTSC, the prior state-of-the-art method for time-series condensation, and up to 10,000$\times$ faster than naively using shapelets on the Sleep dataset with 3,000 timesteps). By explicitly preserving critical local patterns, ShapeCond improves downstream accuracy and consistently outperforms all prior state-of-the-art time series dataset condensation methods across extensive experiments. Code is available at https://github.com/lunaaa95/ShapeCond.
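For readers unfamiliar with shapelets, the sketch below shows the classical shapelet-to-series distance: the smallest Euclidean distance between a short pattern and any same-length window of a series. It is a generic illustration, not ShapeCond's shapelet-guided optimization; the function name `shapelet_distance` and the toy data are assumptions made for this example.

```python
import math


def shapelet_distance(series, shapelet):
    """Minimum Euclidean distance between `shapelet` and any
    same-length sliding window of `series` (classical definition)."""
    m = len(shapelet)
    if m == 0 or m > len(series):
        raise ValueError("shapelet must be non-empty and no longer than the series")
    best = math.inf
    # Slide the shapelet over every window; this naive scan grows with series length.
    for start in range(len(series) - m + 1):
        window = series[start:start + m]
        dist = math.sqrt(sum((w - s) ** 2 for w, s in zip(window, shapelet)))
        best = min(best, dist)
    return best


# Hypothetical toy data: the spike occurs exactly once in the series.
series = [0.0, 0.1, 0.0, 1.0, 2.0, 1.0, 0.0, 0.1]
spike = [1.0, 2.0, 1.0]
print(shapelet_distance(series, spike))  # 0.0, the window starting at index 3 matches exactly
```

A small distance means the local pattern is present somewhere in the series, which is why shapelets are useful as discriminative features for classification.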

Weighted Graph Clustering via Scale Contraction and Graph Structure Learning

Jan 24, 2026

Abstract: Graph clustering aims to partition nodes into distinct clusters based on their similarity, thereby revealing relationships among nodes. Nevertheless, most existing methods do not fully utilize edge weights. Leveraging edge weights in graph clustering tasks faces two critical challenges. (1) The introduction of edge weights may significantly increase storage space and training time, making it essential to reduce the graph scale while preserving nodes that are beneficial for the clustering task. (2) Edge weight information may inherently contain noise that negatively impacts clustering results. However, few studies jointly optimize clustering and edge weights, which is crucial for mitigating the negative impact of noisy edges on the clustering task. To address these challenges, we propose a contractile edge-weight-aware graph clustering network. Specifically, a cluster-oriented graph contraction module is designed to reduce the graph scale while preserving important nodes. An edge-weight-aware attention network is designed to identify and weaken noisy connections. In this way, we can more easily identify and mitigate the impact of noisy edges during the clustering process, thus enhancing clustering effectiveness. We conducted extensive experiments on three real-world weighted graph datasets. In particular, our model outperforms the best baseline, demonstrating its superior performance. Furthermore, experiments also show that the proposed graph contraction module can significantly reduce training time and storage space.

Structure-Aware Automatic Channel Pruning by Searching with Graph Embedding

Jun 13, 2025

Abstract: Channel pruning is a powerful technique to reduce the computational overhead of deep neural networks, enabling efficient deployment on resource-constrained devices. However, existing pruning methods often rely on local heuristics or weight-based criteria that fail to capture global structural dependencies within the network, leading to suboptimal pruning decisions and degraded model performance. To address these limitations, we propose a novel structure-aware automatic channel pruning (SACP) framework that utilizes graph convolutional networks (GCNs) to model the network topology and learn the global importance of each channel. By encoding structural relationships within the network, our approach implements topology-aware pruning, and this pruning is fully automated, reducing the need for human intervention. We restrict the pruning rate combinations to a specific space, where the number of combinations can be dynamically adjusted, and use a search-based approach to determine the optimal pruning rate combinations. Extensive experiments on benchmark datasets (CIFAR-10, ImageNet) with various models (ResNet, VGG16) demonstrate that SACP outperforms state-of-the-art pruning methods in compression efficiency and is competitive in accuracy retention.

Efficient Discovery of Motif Transition Process for Large-Scale Temporal Graphs

Apr 22, 2025

Abstract: Understanding the dynamic transition of motifs in temporal graphs is essential for revealing how graph structures evolve over time, identifying critical patterns, and predicting future behaviors, yet existing methods often focus on predefined motifs, limiting their ability to comprehensively capture transitions and interrelationships. We propose PTMT, a novel parallel algorithm for discovering motif transition processes in large-scale temporal graphs. PTMT integrates a tree-based framework with the temporal zone partitioning (TZP) strategy, which partitions temporal graphs by time and structure while preserving lossless motif transitions and enabling massive parallelism. PTMT comprises three phases: growth zone parallel expansion, overlap-aware result aggregation, and deterministic encoding of motif transitions, ensuring accurate tracking of dynamic transitions and interactions. Results on 10 real-world datasets demonstrate that PTMT achieves speedups ranging from 12.0$\times$ to 50.3$\times$ compared to the SOTA method.

ScaleGNN: Towards Scalable Graph Neural Networks via Adaptive High-order Neighboring Feature Fusion

Apr 22, 2025

Abstract: Graph Neural Networks (GNNs) have demonstrated strong performance across various graph-based tasks by effectively capturing relational information between nodes. These models rely on iterative message passing to propagate node features, enabling nodes to aggregate information from their neighbors. Recent research has significantly improved the message-passing mechanism, enhancing GNN scalability on large-scale graphs. However, GNNs still face two main challenges: over-smoothing, where excessive message passing results in indistinguishable node representations, especially in deep networks incorporating high-order neighbors; and scalability issues, as traditional architectures suffer from high model complexity and increased inference time due to redundant information aggregation. This paper proposes a novel framework for large-scale graphs named ScaleGNN that simultaneously addresses both challenges by adaptively fusing multi-level graph features. We first construct neighbor matrices for each order, learning their relative information via trainable weights in an adaptive high-order feature fusion module. This allows the model to selectively emphasize informative high-order neighbors while reducing unnecessary computational costs. Additionally, we introduce a high-order redundant feature masking mechanism based on a Local Contribution Score (LCS), which enables the model to retain only the most relevant neighbors at each order, preventing redundant information propagation. Furthermore, low-order enhanced feature aggregation adaptively integrates low-order and high-order features based on task relevance, ensuring effective capture of both local and global structural information without excessive complexity. Extensive experiments on real-world datasets demonstrate that our approach consistently outperforms state-of-the-art GNN models in both accuracy and computational efficiency.

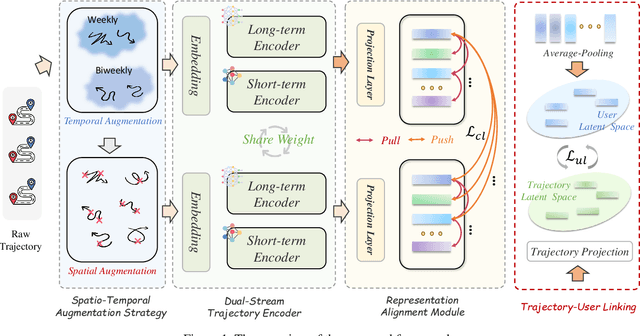

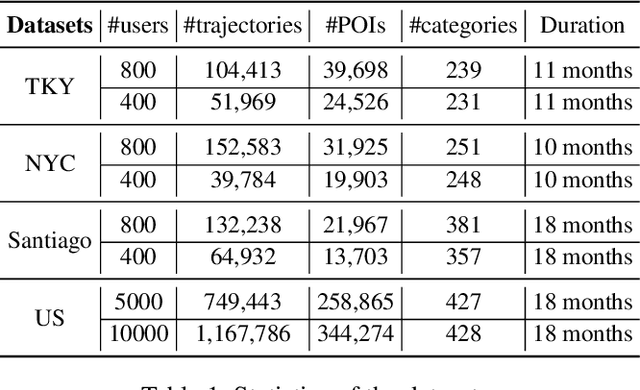

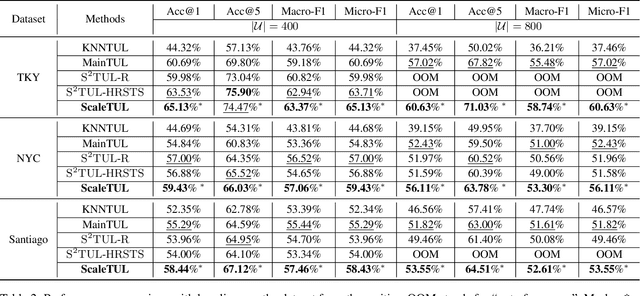

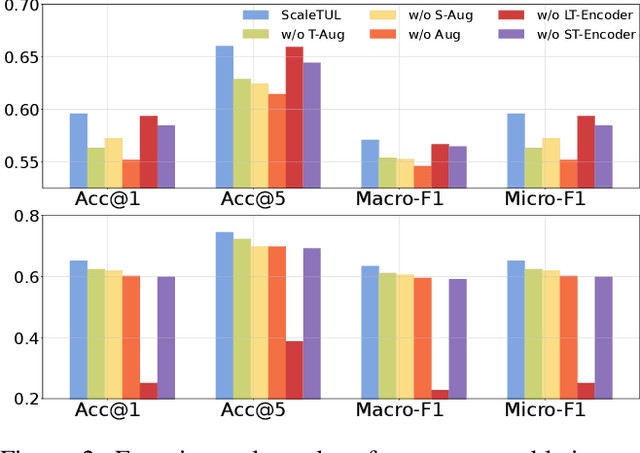

Scalable Trajectory-User Linking with Dual-Stream Representation Networks

Mar 19, 2025

Abstract: Trajectory-user linking (TUL) aims to match anonymous trajectories to the most likely users who generated them, offering benefits for a wide range of real-world spatio-temporal applications. However, existing TUL methods are limited by high model complexity and poor learning of effective trajectory representations, rendering them ineffective in handling large-scale user trajectory data. In this work, we propose a novel $\underline{Scal}$abl$\underline{e}$ Trajectory-User Linking method with dual-stream representation networks for the large-scale $\underline{TUL}$ problem, named ScaleTUL. Specifically, ScaleTUL generates two views using temporal and spatial augmentations and applies a supervised contrastive learning framework to effectively capture the irregularities of trajectories. In each view, a dual-stream trajectory encoder, consisting of a long-term encoder and a short-term encoder, is designed to learn unified trajectory representations that fuse different temporal-spatial dependencies. Then, a TUL layer is used to associate the trajectories with the corresponding users in the representation space using a two-stage training model. Experimental results on check-in mobility datasets from three real-world cities and the nationwide U.S. demonstrate the superiority of ScaleTUL over state-of-the-art baselines for large-scale TUL tasks.

Incorporating Attributes and Multi-Scale Structures for Heterogeneous Graph Contrastive Learning

Mar 18, 2025

Abstract: Heterogeneous graphs (HGs) are composed of multiple types of nodes and edges, making them more effective in capturing the complex relational structures inherent in the real world. However, in real-world scenarios, labeled data is often difficult to obtain, which limits the applicability of semi-supervised approaches. Self-supervised learning aims to enable models to automatically learn useful features from data, effectively addressing the challenge of limited labeled data. In this paper, we propose a novel contrastive learning framework for heterogeneous graphs (ASHGCL), which incorporates three distinct views focusing on node attributes, high-order structural information, and low-order structural information, respectively, for node representation learning. Furthermore, we introduce an attribute-enhanced positive sample selection strategy that combines both structural information and attribute information, effectively addressing the issue of sampling bias. Extensive experiments on four real-world datasets show that ASHGCL outperforms state-of-the-art unsupervised baselines and even surpasses some supervised benchmarks.

Spatiotemporal-aware Trend-Seasonality Decomposition Network for Traffic Flow Forecasting

Feb 17, 2025

Abstract: Traffic prediction is critical for optimizing travel scheduling and enhancing public safety, yet the complex spatial and temporal dynamics within traffic data present significant challenges for accurate forecasting. In this paper, we introduce a novel model, the Spatiotemporal-aware Trend-Seasonality Decomposition Network (STDN). This model begins by constructing a dynamic graph structure to represent traffic flow and incorporates novel spatio-temporal embeddings to jointly capture global traffic dynamics. The learned representations are further refined by a specially designed trend-seasonality decomposition module, which disentangles the trend-cyclical component and seasonal component for each traffic node at different times within the graph. These components are subsequently processed through an encoder-decoder network to generate the final predictions. Extensive experiments conducted on real-world traffic datasets demonstrate that STDN achieves superior performance at a remarkably low computation cost. Furthermore, we have released a new traffic dataset named JiNan, which features unique inner-city dynamics, thereby enriching the scenario comprehensiveness in traffic prediction evaluation.
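To make the idea of separating a trend from a seasonal component concrete, here is a minimal sketch of the classical moving-average decomposition on a single series. This is a stand-in technique for illustration only: the abstract describes a specially designed module inside a network, whose details are not given here. The function names, the window size, and the toy readings are all assumptions.

```python
def moving_average(values, window):
    """Centered moving average; near the edges only the available
    neighbours are averaged, so the output has the same length."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def decompose(values, window):
    """Split a series into a smooth trend and the remaining
    (seasonal plus residual) part, so that trend + seasonal == values."""
    trend = moving_average(values, window)
    seasonal = [v - t for v, t in zip(values, trend)]
    return trend, seasonal


# Hypothetical traffic-flow readings for one sensor.
flow = [10.0, 12.0, 9.0, 13.0, 11.0, 15.0, 12.0, 16.0]
trend, seasonal = decompose(flow, window=3)
print(trend)
print(seasonal)
```

By construction the two parts sum back to the input, so a downstream model can process them separately without losing information.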

TPAoI: Ensuring Fresh Service Status at the Network Edge in Compute-First Networking

Dec 24, 2024

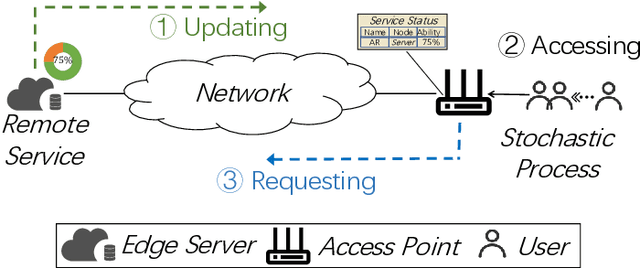

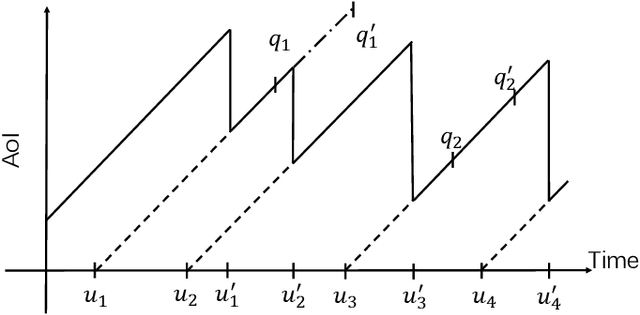

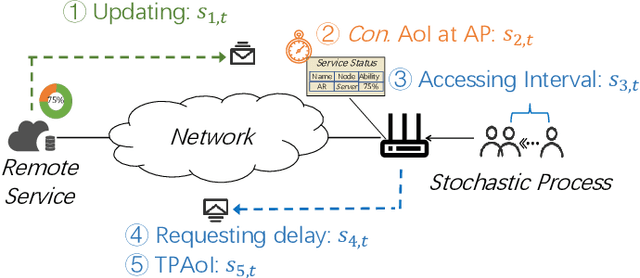

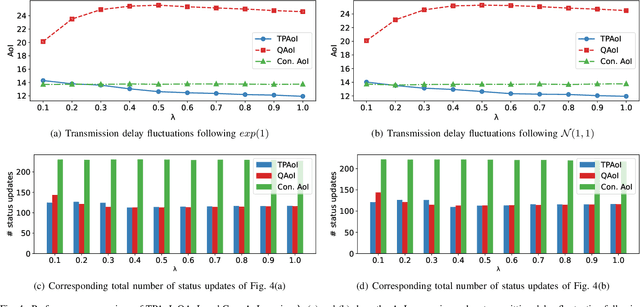

Abstract: In compute-first networking, maintaining fresh and accurate status information at the network edge is crucial for effective access to remote services. This process typically involves three phases: status updating, user accessing, and user requesting. However, current studies on status effectiveness, such as Age of Information at Query (QAoI), do not comprehensively cover all these phases. Therefore, this paper introduces a novel metric, TPAoI, aimed at optimizing update decisions by measuring the freshness of service status. The stochastic nature of edge environments, characterized by unpredictable communication delays in updating, requesting, and user access times, poses a significant challenge when modeling. To address this, we model the problem as a Markov Decision Process (MDP) and employ a Dueling Double Deep Q-Network (D3QN) algorithm for optimization. Extensive experiments demonstrate that the proposed TPAoI metric effectively minimizes AoI, ensuring timely and reliable service updates in dynamic edge environments. Results indicate that TPAoI reduces AoI by an average of 47% compared to QAoI metrics and decreases update frequency by an average of 48% relative to conventional AoI metrics, showing significant improvement.
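As background, the standard Age of Information (AoI) at time t is t minus the generation time of the freshest update received so far. The sketch below computes that quantity and samples it at query instants, which is the intuition behind query-based variants. It does not implement TPAoI, whose definition is not given in the abstract; the function names and the sample timestamps are assumptions.

```python
def age_of_information(t, updates):
    """Standard AoI at time `t`.

    `updates` is a list of (generated_at, received_at) pairs.
    Returns None if no update has been received by time `t`.
    """
    delivered = [generated for generated, received in updates if received <= t]
    if not delivered:
        return None
    # Age is measured from when the freshest delivered update was generated.
    return t - max(delivered)


def age_at_queries(query_times, updates):
    """Sample the AoI only at the instants when a user queries the status."""
    return [age_of_information(q, updates) for q in query_times]


# Hypothetical timeline: two status updates with different delivery delays.
updates = [(0.0, 1.0), (4.0, 5.5)]
print(age_at_queries([0.5, 3.0, 6.0], updates))  # [None, 3.0, 2.0]
```

The age depends on both how often updates are sent and how long they take to arrive, which is why update decisions and delays both matter for freshness.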
