Zhipeng Gui

Wuhan University

AutoGEEval: A Multimodal and Automated Framework for Geospatial Code Generation on GEE with Large Language Models

May 19, 2025

Abstract:Geospatial code generation is emerging as a key direction in the integration of artificial intelligence and geoscientific analysis. However, there remains a lack of standardized tools for automatic evaluation in this domain. To address this gap, we propose AutoGEEval, the first multimodal, unit-level automated evaluation framework for geospatial code generation tasks on the Google Earth Engine (GEE) platform powered by large language models (LLMs). Built upon the GEE Python API, AutoGEEval establishes a benchmark suite (AutoGEEval-Bench) comprising 1325 test cases that span 26 GEE data types. The framework integrates both question generation and answer verification components to enable an end-to-end automated evaluation pipeline, from function invocation to execution validation. AutoGEEval supports multidimensional quantitative analysis of model outputs in terms of accuracy, resource consumption, execution efficiency, and error types. We evaluate 18 state-of-the-art LLMs, including general-purpose, reasoning-augmented, code-centric, and geoscience-specialized models, revealing their performance characteristics and potential optimization pathways in GEE code generation. This work provides a unified protocol and foundational resource for the development and assessment of geospatial code generation models, advancing the frontier of automated natural language to domain-specific code translation.

A Survey on Small Sample Imbalance Problem: Metrics, Feature Analysis, and Solutions

Apr 21, 2025

Abstract:The small sample imbalance (S&I) problem is a major challenge in machine learning and data analysis. It is characterized by a small number of samples and an imbalanced class distribution, which leads to poor model performance. In addition, indistinct inter-class feature distributions further complicate classification tasks. Existing methods often rely on algorithmic heuristics without sufficiently analyzing the underlying data characteristics. We argue that a detailed analysis from the data perspective is essential before developing an appropriate solution. Therefore, this paper proposes a systematic analytical framework for the S&I problem. We first summarize imbalance metrics and complexity analysis methods, highlighting the need for interpretable benchmarks to characterize S&I problems. Second, we review recent solutions for conventional, complexity-based, and extreme S&I problems, revealing methodological differences in handling various data distributions. Our summary finds that resampling remains a widely adopted solution. However, we conduct experiments on binary and multiclass datasets, revealing that classifier performance differences significantly exceed the improvements achieved through resampling. Finally, this paper highlights open questions and discusses future trends.

PEACE: Empowering Geologic Map Holistic Understanding with MLLMs

Jan 10, 2025

Abstract:The geologic map, a fundamental diagram in geological science, provides critical insights into the structure and composition of Earth's subsurface and surface. These maps are indispensable in various fields, including disaster detection, resource exploration, and civil engineering. Despite their significance, current Multimodal Large Language Models (MLLMs) often fall short in geologic map understanding. This gap is primarily due to the challenging nature of cartographic generalization, which involves handling high-resolution maps, managing multiple associated components, and requiring domain-specific knowledge. To quantify this gap, we construct GeoMap-Bench, the first-ever benchmark for evaluating MLLMs in geologic map understanding, which assesses the full-scale abilities in extracting, referring, grounding, reasoning, and analyzing. To bridge this gap, we introduce GeoMap-Agent, the inaugural agent designed for geologic map understanding, which features three modules: Hierarchical Information Extraction (HIE), Domain Knowledge Injection (DKI), and Prompt-enhanced Question Answering (PEQA). Inspired by the interdisciplinary collaboration among human scientists, an AI expert group acts as consultants, utilizing a diverse tool pool to comprehensively analyze questions. Through comprehensive experiments, GeoMap-Agent achieves an overall score of 0.811 on GeoMap-Bench, significantly outperforming GPT-4o's 0.369. Our work, emPowering gEologic mAp holistiC undErstanding (PEACE) with MLLMs, paves the way for advanced AI applications in geology, enhancing the efficiency and accuracy of geological investigations.

GeoCode-GPT: A Large Language Model for Geospatial Code Generation Tasks

Oct 23, 2024

Abstract:The increasing demand for spatiotemporal data and modeling tasks in geosciences has made geospatial code generation technology a critical factor in enhancing productivity. Although large language models (LLMs) have demonstrated potential in code generation tasks, they often encounter issues such as refusal to code or hallucination in geospatial code generation due to a lack of domain-specific knowledge and code corpora. To address these challenges, this paper presents and open-sources the GeoCode-PT and GeoCode-SFT corpora, along with the GeoCode-Eval evaluation dataset. Additionally, by leveraging QLoRA and LoRA for pretraining and fine-tuning, we introduce GeoCode-GPT-7B, the first LLM focused on geospatial code generation, fine-tuned from Code Llama-7B. Furthermore, we establish a comprehensive geospatial code evaluation framework, incorporating option matching, expert validation, and prompt engineering scoring for LLMs, and systematically evaluate GeoCode-GPT-7B using the GeoCode-Eval dataset. Experimental results show that GeoCode-GPT outperforms other models in multiple-choice accuracy by 9.1% to 32.1%, in code summarization ability by 1.7% to 25.4%, and in code generation capability by 1.2% to 25.1%. This paper provides a solution and empirical validation for enhancing LLMs' performance in geospatial code generation, extends the boundaries of domain-specific model applications, and offers valuable insights into unlocking their potential in geospatial code generation.

Research on Foundation Model for Spatial Data Intelligence: China's 2024 White Paper on Strategic Development of Spatial Data Intelligence

May 30, 2024

Abstract:This report focuses on spatial data intelligent large models, delving into the principles, methods, and cutting-edge applications of these models. It provides an in-depth discussion on the definition, development history, current status, and trends of spatial data intelligent large models, as well as the challenges they face. The report systematically elucidates the key technologies of spatial data intelligent large models and their applications in urban environments, aerospace remote sensing, geography, transportation, and other scenarios. Additionally, it summarizes the latest application cases of spatial data intelligent large models in themes such as urban development, multimodal systems, remote sensing, smart transportation, and resource environments. Finally, the report concludes with an overview and outlook on the development prospects of spatial data intelligent large models.

Interpreting the Curse of Dimensionality from Distance Concentration and Manifold Effect

Jan 07, 2024

Abstract:The characteristics of data, such as distribution and heterogeneity, become more complex and counterintuitive as the dimensionality increases. This phenomenon is known as the curse of dimensionality, where common patterns and relationships (e.g., internal and boundary pattern) that hold in low-dimensional space may be invalid in higher-dimensional space. It leads to decreasing performance for regression, classification, or clustering models and algorithms. The curse of dimensionality can be attributed to many causes. In this paper, we first summarize five challenges associated with manipulating high-dimensional data, and explain the potential causes for the failure of regression, classification, or clustering tasks. Subsequently, we delve into two major causes of the curse of dimensionality, distance concentration and manifold effect, by performing theoretical and empirical analyses. The results demonstrate that nearest neighbor search (NNS) using three typical distance measurements, Minkowski distance, Chebyshev distance, and cosine distance, becomes meaningless as the dimensionality increases. Meanwhile, the data incorporates more redundant features, and the variance contribution of principal component analysis (PCA) is skewed towards a few dimensions. By interpreting the causes of the curse of dimensionality, we can better understand the limitations of current models and algorithms, and work to improve the performance of data analysis and machine learning tasks in high-dimensional space.
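
The distance concentration effect named in this abstract can be illustrated with a small experiment. The sketch below is not the paper's analysis: it assumes points drawn uniformly from the unit hypercube, uses Euclidean distance only, and the function name `relative_contrast` and its parameters are hypothetical. It measures how much farther the farthest point is than the nearest one, relative to the nearest distance; when that ratio shrinks toward zero, "nearest" neighbors are barely nearer than any other point.

```python
import math
import random


def relative_contrast(dim, n_points=200, seed=0):
    """Return (farthest - nearest) / nearest distance from a random query.

    Assumption: points are i.i.d. uniform in [0, 1]^dim (illustrative only).
    """
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)


if __name__ == "__main__":
    # The contrast is expected to shrink as the dimensionality grows.
    for dim in (2, 10, 100, 1000):
        print(dim, round(relative_contrast(dim), 3))
```

Under these assumptions the contrast is large in two dimensions and falls well below 1 in several hundred dimensions, which is one way to see why nearest neighbor search loses discriminative power.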

Scalable manifold learning by uniform landmark sampling and constrained locally linear embedding

Jan 05, 2024

Abstract:As a pivotal approach in machine learning and data science, manifold learning aims to uncover the intrinsic low-dimensional structure within complex nonlinear manifolds in high-dimensional space. By exploiting the manifold hypothesis, various techniques for nonlinear dimension reduction have been developed to facilitate visualization, classification, clustering, and gaining key insights. Although existing manifold learning methods have achieved remarkable successes, they still suffer from extensive distortions incurred in the global structure, which hinders the understanding of underlying patterns. Scalability issues also limit their applicability for handling large-scale data. Here, we propose a scalable manifold learning (scML) method that can manipulate large-scale and high-dimensional data in an efficient manner. It starts by seeking a set of landmarks to construct the low-dimensional skeleton of the entire data, and then incorporates the non-landmarks into the learned space based on the constrained locally linear embedding (CLLE). We empirically validated the effectiveness of scML on synthetic datasets and real-world benchmarks of different types, and applied it to analyze the single-cell transcriptomics and detect anomalies in electrocardiogram (ECG) signals. scML scales well with increasing data sizes and embedding dimensions, and exhibits promising performance in preserving the global structure. The experiments demonstrate notable robustness in embedding quality as the sample rate decreases.

A Robust and Efficient Boundary Point Detection Method by Measuring Local Direction Dispersion

Dec 07, 2023

Abstract:Boundary points pose a significant challenge for machine learning tasks, including classification, clustering, and dimensionality reduction. Due to the similarity of features, boundary areas can result in mixed-up classes or clusters, leading to a crowding problem in dimensionality reduction. To address this challenge, numerous boundary point detection methods have been developed, but they are insufficient to accurately and efficiently identify the boundary points in non-convex structures and high-dimensional manifolds. In this work, we propose a robust and efficient method for detecting boundary points using Local Direction Dispersion (LoDD). LoDD considers that internal points are surrounded by neighboring points in all directions, while neighboring points of a boundary point tend to be distributed only in a certain directional range. LoDD adopts a density-independent K-Nearest Neighbors (KNN) method to determine neighboring points, and defines a statistic-based metric using the eigenvalues of the covariance matrix of KNN coordinates to measure the centrality of a query point. We demonstrated the validity of LoDD on five synthetic datasets (2-D and 3-D) and ten real-world benchmarks, and tested its clustering performance by combining it with two typical clustering methods, K-means and Ncut. Our results show that LoDD achieves promising and robust detection accuracy in a time-efficient manner.
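
The intuition behind direction dispersion can be sketched in two dimensions. The code below is an illustrative stand-in, not the LoDD metric itself, whose exact definition the abstract does not give: it assumes plain (not density-independent) KNN, normalizes neighbor offsets to unit direction vectors, and scores a point by the ratio of the smallest to the largest eigenvalue of their covariance matrix. The function name `direction_dispersion` and the grid data are hypothetical.

```python
import math


def direction_dispersion(query, points, k=8):
    """Ratio lambda_min / lambda_max of the covariance of unit directions
    from `query` to its k nearest neighbors (2-D only).

    Close to 1: neighbors lie in all directions (interior-like point).
    Close to 0: neighbors lie in a narrow directional range (boundary-like).
    Assumption: this ratio is an illustrative proxy, not the paper's metric.
    """
    neighbors = sorted(
        (p for p in points if p != query),
        key=lambda p: math.dist(query, p),
    )[:k]
    dirs = []
    for p in neighbors:
        d = math.dist(query, p)
        dirs.append(((p[0] - query[0]) / d, (p[1] - query[1]) / d))
    n = len(dirs)
    mx = sum(x for x, _ in dirs) / n
    my = sum(y for _, y in dirs) / n
    # Entries of the symmetric 2x2 covariance matrix [[a, b], [b, c]].
    a = sum((x - mx) ** 2 for x, _ in dirs) / n
    c = sum((y - my) ** 2 for _, y in dirs) / n
    b = sum((x - mx) * (y - my) for x, y in dirs) / n
    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    mid = (a + c) / 2
    spread = math.hypot((a - c) / 2, b)
    lam_max, lam_min = mid + spread, mid - spread
    return lam_min / lam_max if lam_max > 0 else 0.0


if __name__ == "__main__":
    grid = [(x, y) for x in range(11) for y in range(11)]
    print("interior:", direction_dispersion((5, 5), grid))
    print("edge:    ", direction_dispersion((0, 5), grid))
```

On this regular grid, the interior point scores near 1 and the edge point scores clearly lower, which matches the abstract's description of neighbors surrounding internal points but covering only a limited directional range around boundary points.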

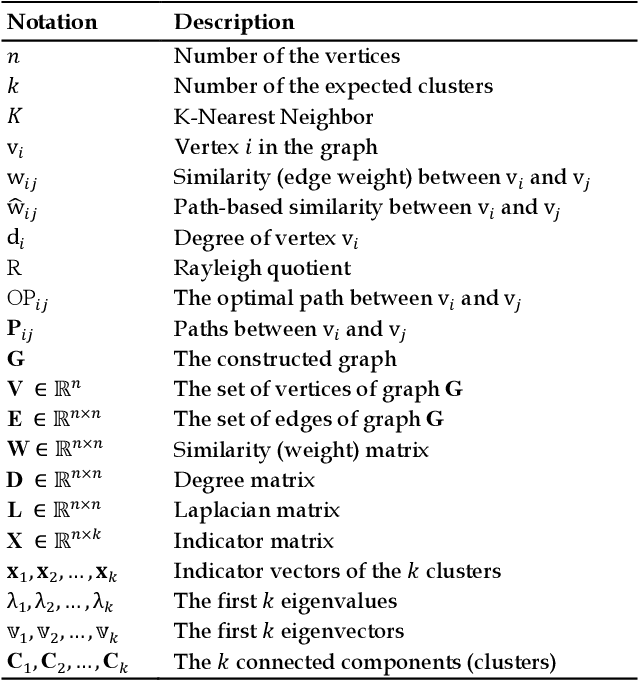

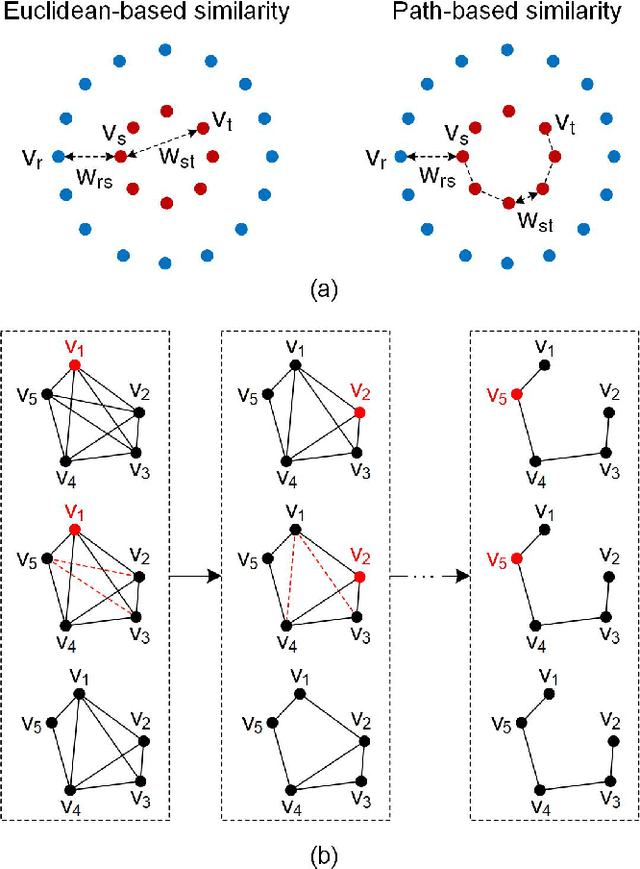

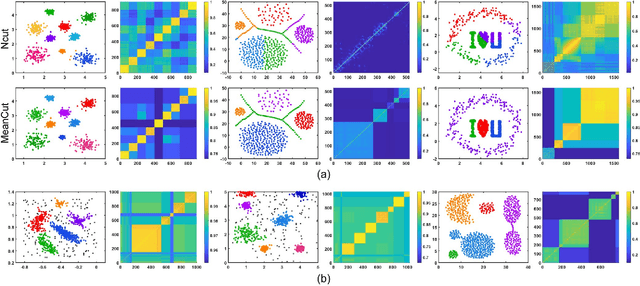

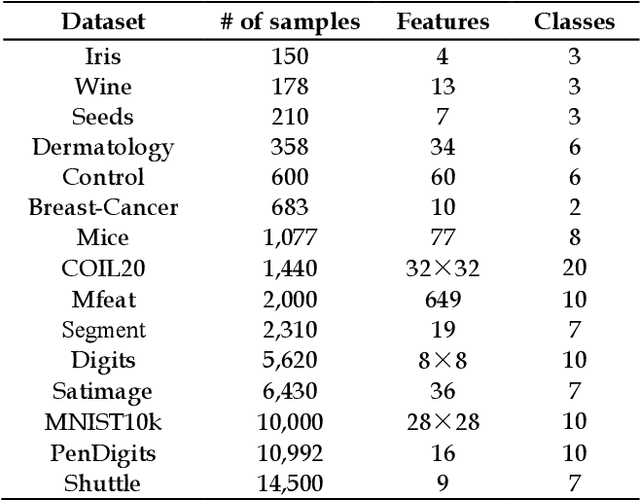

MeanCut: A Greedy-Optimized Graph Clustering via Path-based Similarity and Degree Descent Criterion

Dec 07, 2023

Abstract:As the most typical graph clustering method, spectral clustering is popular and attractive due to its remarkable performance, easy implementation, and strong adaptability. Classical spectral clustering measures the edge weights of the graph using a pairwise Euclidean-based metric, and solves the optimal graph partition by relaxing the constraints of the indicator matrix and performing Laplacian decomposition. However, Euclidean-based similarity might cause skewed graph cuts when handling non-spherical data distributions, and the relaxation strategy introduces information loss. Meanwhile, spectral clustering requires specifying the number of clusters, which is hard to determine without enough prior knowledge. In this work, we leverage the path-based similarity to enhance intra-cluster associations, and propose MeanCut as the objective function and greedily optimize it in degree descending order for a nondestructive graph partition. This algorithm enables the identification of arbitrarily shaped clusters and is robust to noise. To reduce the computational complexity of similarity calculation, we transform optimal path search into generating the maximum spanning tree (MST), and develop a fast MST (FastMST) algorithm to further improve its time-efficiency. Moreover, we define a density gradient factor (DGF) for separating the weakly connected clusters. The validity of our algorithm is demonstrated by testing on real-world benchmarks and a face recognition application. The source code of MeanCut is available at https://github.com/ZPGuiGroupWhu/MeanCut-Clustering.
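
The link between path-based similarity and the maximum spanning tree can be shown on a toy graph. The sketch below assumes the common "bottleneck" definition of path-based similarity (the best path between two nodes is the one whose weakest edge is strongest) and uses textbook Kruskal rather than the FastMST algorithm mentioned in the abstract; the function names and the example graph are hypothetical.

```python
def maximum_spanning_tree(n, weighted_edges):
    """Kruskal's algorithm on edges (u, v, weight), heaviest edge first."""
    parent = list(range(n))

    def find(x):
        # Union-find root lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    tree = []
    for u, v, w in sorted(weighted_edges, key=lambda e: e[2], reverse=True):
        root_u, root_v = find(u), find(v)
        if root_u != root_v:
            parent[root_u] = root_v
            tree.append((u, v, w))
    return tree


def path_similarity_from(source, n, tree):
    """Bottleneck similarity from `source` to every reachable node:
    the minimum edge weight along the unique tree path."""
    adjacency = {i: [] for i in range(n)}
    for u, v, w in tree:
        adjacency[u].append((v, w))
        adjacency[v].append((u, w))
    best = {source: float("inf")}
    stack = [source]
    while stack:
        u = stack.pop()
        for v, w in adjacency[u]:
            if v not in best:
                best[v] = min(best[u], w)
                stack.append(v)
    return best


if __name__ == "__main__":
    # Nodes 0 and 2 are weakly linked directly (0.2) but strongly via node 1.
    edges = [(0, 1, 0.9), (1, 2, 0.8), (0, 2, 0.2), (2, 3, 0.1)]
    tree = maximum_spanning_tree(4, edges)
    print(path_similarity_from(0, 4, tree))
```

In this example the similarity between nodes 0 and 2 is 0.8 rather than the direct edge weight 0.2, because the path through node 1 has a stronger weakest link. Searching over all paths is avoided because the maximum spanning tree already contains such a best path for every pair.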

Uncertainty-Aware Multi-View Visual Semantic Embedding

Sep 15, 2023

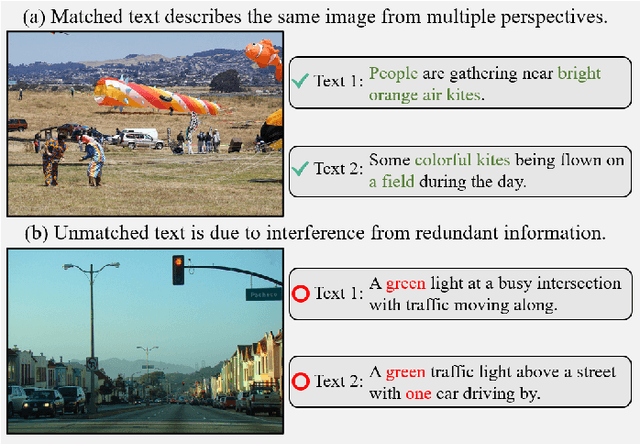

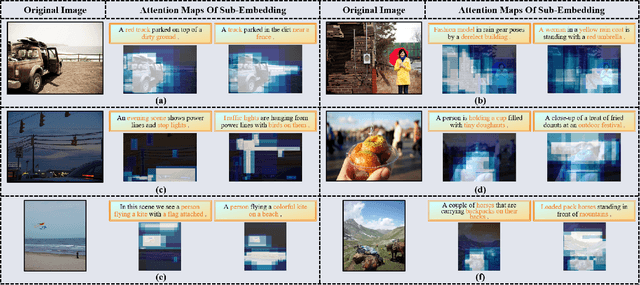

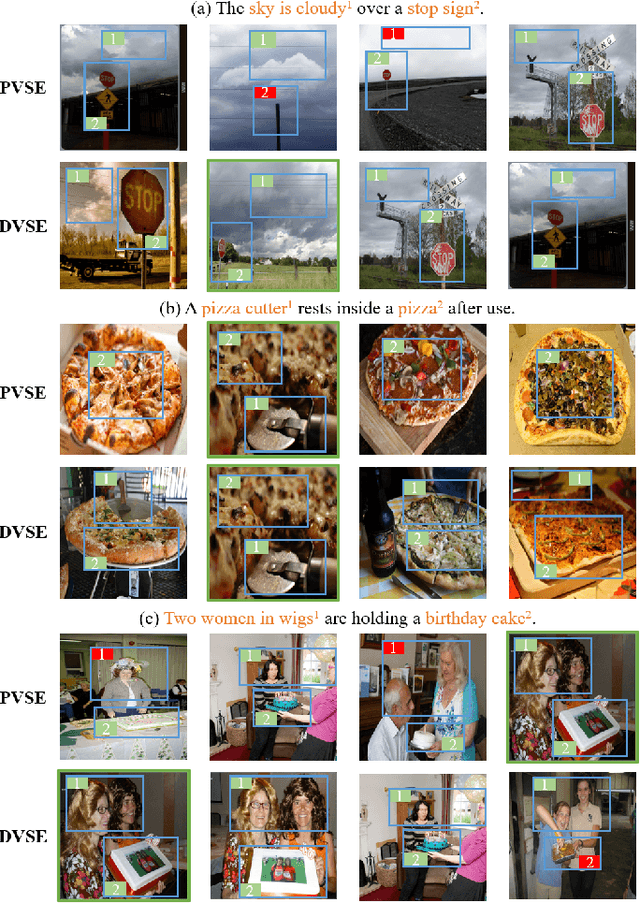

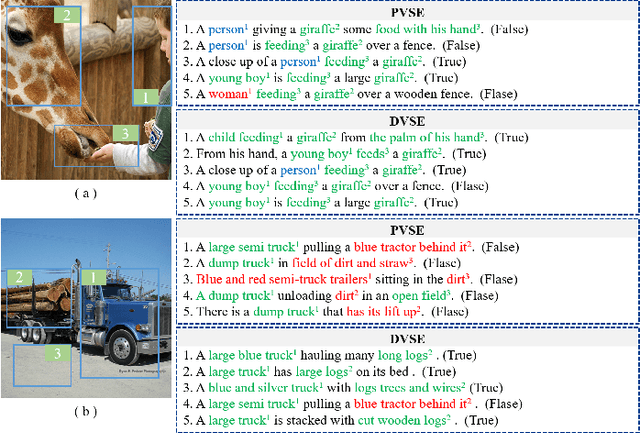

Abstract:The key challenge in image-text retrieval is effectively leveraging semantic information to measure the similarity between vision and language data. However, using instance-level binary labels, where each image is paired with a single text, fails to capture multiple correspondences between different semantic units, leading to uncertainty in multi-modal semantic understanding. Although recent research has captured fine-grained information through more complex model structures or pre-training techniques, few studies have directly modeled uncertainty of correspondence to fully exploit binary labels. To address this issue, we propose an Uncertainty-Aware Multi-View Visual Semantic Embedding (UAMVSE) framework that decomposes the overall image-text matching into multiple view-text matchings. Our framework introduces an uncertainty-aware loss function (UALoss) to compute the weighting of each view-text loss by adaptively modeling the uncertainty in each view-text correspondence. Different weightings guide the model to focus on different semantic information, enhancing the model's ability to comprehend the correspondence of images and texts. We also design an optimized image-text matching strategy by normalizing the similarity matrix to improve model performance. Experimental results on the Flickr30k and MS-COCO datasets demonstrate that UAMVSE outperforms state-of-the-art models.
